Image generated with Ideogram.ai

Who hasn't heard about OpenAI? The AI research laboratory has changed the world thanks to its famous product, ChatGPT.

It truly changed the landscape of AI implementation, and many companies now rush to become the next big thing.

Despite the heavy competition, OpenAI is still the go-to company for generative AI business needs because it offers some of the best models and reliable support. The company provides many state-of-the-art generative AI models covering various tasks: image generation, text-to-speech, and many more.

All the models OpenAI offers are available via API calls. With simple Python code, you can already start using them.

In this article, we will explore how to use the OpenAI API with Python and the various tasks you can do with it. I hope you learn a lot from this article.

To follow this article, there are a few things you need to prepare.

The most important thing you need is an API key from OpenAI, as you cannot access the OpenAI models without it. To get one, register for an OpenAI account and request an API key on the account page. After you receive the key, save it somewhere safe, because it will not be shown again in the OpenAI interface.

The next thing you need to do is buy pre-paid credit to use the OpenAI API. OpenAI recently announced changes to how its billing works: instead of paying at the end of the month, we need to purchase pre-paid credit for API calls. You can visit the OpenAI pricing page to estimate the credit you need. You can also check their models page to understand which model you require.
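To get a feel for how much credit a call consumes, you can multiply token counts by the per-token prices listed on the pricing page. The sketch below is only a rough estimate: it assumes the common rule of thumb that one token is about four characters of English text (OpenAI's tiktoken library gives exact counts), and the helper names and placeholder prices are hypothetical, so substitute the real per-million-token prices for the model you pick.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate using the ~4 characters per token rule of thumb for English."""
    return math.ceil(len(text) / chars_per_token)


def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_1m: float, output_price_per_1m: float) -> float:
    """Estimated cost in dollars, given prices per one million tokens."""
    return (input_tokens * input_price_per_1m
            + output_tokens * output_price_per_1m) / 1_000_000


prompt = ("Generate me 3 jargons that I can use for my social media content "
          "as a data scientist content creator")
in_tokens = estimate_tokens(prompt)

# Placeholder prices (hypothetical): copy the real values from the pricing page
cost = estimate_cost(in_tokens, output_tokens=150,
                     input_price_per_1m=1.0, output_price_per_1m=2.0)
print(in_tokens, round(cost, 6))
```

Treat the result as an order-of-magnitude guide; the actual bill depends on the exact token counts the API reports.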

Finally, you need to install the OpenAI Python package in your environment. You can do that by running `pip install openai`.

Then, you need to set your OpenAI API key as an environment variable using the code below.

```python
import os

# Replace with your own key; OpenAI() reads OPENAI_API_KEY automatically
os.environ['OPENAI_API_KEY'] = 'YOUR API KEY'
```
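Hard-coding the key in a notebook makes it easy to leak by accident. When you create `OpenAI()` without arguments, the Python client reads `OPENAI_API_KEY` from the environment, so an alternative is to export the variable in your shell and simply check that it exists. The helper below (`get_api_key` is a hypothetical name, not part of the OpenAI package) is a minimal sketch of that check, which fails early with a readable message instead of an authentication error later.

```python
import os


def get_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, or raise a clear error if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; export it before creating the OpenAI client."
        )
    return key
```

You could call `get_api_key()` once at the top of a script, before `OpenAI()` is created.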

With everything set, let's start exploring the API of the OpenAI models with Python.

The star of the OpenAI API is its text generation models. This family of Large Language Models produces text output from a text input called a prompt. Prompts are basically instructions describing what we expect from the model, such as text analysis, generating document drafts, and much more.

Let's start by executing a simple text generation API call. We will use OpenAI's GPT-3.5-Turbo as the base model. It's not the most advanced model, but the cheaper models are often enough for text-related tasks.

```python
from openai import OpenAI

client = OpenAI()  # reads the OPENAI_API_KEY environment variable

completion = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Generate me 3 Jargons that I can use for my Social Media content as a Data Scientist content creator"}
    ]
)

print(completion.choices[0].message.content)
```

- “Unleashing the power of predictive analytics to drive data-driven decisions!”

- “Diving deep into the data ocean to uncover valuable insights.”

- “Transforming raw data into actionable intelligence through advanced algorithms.”

The API call for the text generation model uses the chat.completions endpoint to create the text response from our prompt.

There are two required parameters for text generation: model and messages.

For the model, you can check the list of available models on the related models page.

As for the messages, we pass a list of dictionaries, each with two keys: role and content. The role key specifies who sends the message in the conversation. There are three different roles: system, user, and assistant.

Using these roles in the messages, we can set the model's behavior and give examples of how the model should answer our prompt.
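A minimal sketch of how the three roles fit together: one system message, optional example user/assistant pairs that show the model the style we want, and then the real question. The `build_messages` helper is hypothetical; it only assembles the list of dictionaries that you would pass as `messages=` to `client.chat.completions.create`.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_messages(system_prompt: str,
                   examples: List[Tuple[str, str]],
                   question: str) -> List[Message]:
    """System instruction, then example user/assistant pairs, then the real question."""
    messages: List[Message] = [{"role": "system", "content": system_prompt}]
    for user_text, assistant_text in examples:
        # Each pair shows the model the kind of answer we expect
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": question})
    return messages


msgs = build_messages(
    "You are a helpful assistant.",
    [("Generate me 3 jargons for my data science content.",
      "Sure: Data Wrangling is the key, Predictive Analytics is the future, "
      "and Feature Engineering helps your model.")],
    "Great, can you also provide me with 3 content ideas based on these jargons?",
)
print([m["role"] for m in msgs])  # ['system', 'user', 'assistant', 'user']
```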

Let's extend the previous code example with the assistant role to guide our model. Additionally, we will explore some parameters of the text generation model to improve the results.

```python
# Reuses the client created in the previous example
completion = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Generate me 3 jargons that I can use for my Social Media content as a Data Scientist content creator."},
        {"role": "assistant", "content": "Sure, here are three jargons: Data Wrangling is the key, Predictive Analytics is the future, and Feature Engineering helps your model."},
        {"role": "user", "content": "Great, can you also provide me with 3 content ideas based on these jargons?"}
    ],
    max_tokens=150,
    temperature=0.7,
    top_p=1,
    frequency_penalty=0
)

print(completion.choices[0].message.content)
```

Of course! Here are three content ideas based on the jargons provided:

- “Unleashing the Power of Data Wrangling: A Step-by-Step Guide for Data Scientists” – Create a blog post or video tutorial showcasing best practices and tools for data wrangling in a real-world data science project.

- “The Future of Predictive Analytics: Trends and Innovations in Data Science” – Write a thought leadership piece discussing emerging trends and technologies in predictive analytics and how they are shaping the future of data science.

- “Mastering Feature Engineering: Techniques to Boost Model Performance” – Develop an infographic or social media series highlighting different feature engineering techniques and their impact on improving the accuracy and efficiency of machine learning models.

The resulting output follows the example that we provided to the model. Using the assistant role is useful if we want the model to follow a certain style or result.

As for the parameters, here are simple explanations of each one we used:

- max_tokens: This parameter sets the maximum number of tokens (word pieces, not whole words) the model can generate in its reply.

- temperature: This parameter controls the randomness of the model's output. A higher temperature produces more diverse and creative outputs, while a lower one makes the output more focused and deterministic. The accepted range is 0 to 2.

- top_p: Also known as nucleus sampling, this parameter determines the subset of the probability distribution from which the model draws its output. For instance, a top_p value of 0.1 means the model considers only the most likely tokens that together make up the top 10% of the probability mass. Its values range from 0 to 1, with higher values allowing for greater output diversity.

- frequency_penalty: This penalizes tokens in proportion to how often they have already appeared in the output. The value can range from -2 to 2, where positive values discourage the repetition of tokens and negative values do the opposite, encouraging repeated word use. A value of 0 means no penalty is applied.
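To build intuition for these parameters, the toy sketch below applies them to a hand-made set of three token scores (logits). It is a simplified illustration of the general sampling ideas, not OpenAI's actual implementation: temperature divides the logits before the softmax, top_p keeps the smallest set of most likely tokens whose probabilities add up to at least top_p, and the frequency penalty here simply subtracts the penalty times each token's previous count. All function names are hypothetical.

```python
import math
from typing import Dict


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Divide logits by temperature (must be > 0 here), then normalize to probabilities."""
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def nucleus_filter(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Keep the most likely tokens until their cumulative probability reaches top_p, then renormalize."""
    kept: Dict[str, float] = {}
    cumulative = 0.0
    for tok, p in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


def apply_frequency_penalty(logits: Dict[str, float], counts: Dict[str, int],
                            penalty: float) -> Dict[str, float]:
    """Lower each token's logit in proportion to how often it has already appeared."""
    return {tok: v - counts.get(tok, 0) * penalty for tok, v in logits.items()}


logits = {"data": 2.0, "model": 1.0, "pizza": -1.0}
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, {tok: round(p, 3) for tok, p in probs.items()})

# With top_p=0.9 the unlikely "pizza" token is dropped from the candidates
print(nucleus_filter(softmax_with_temperature(logits, 1.0), top_p=0.9))

# "data" was already used 3 times, so its score drops from 2.0 to 0.5
print(apply_frequency_penalty(logits, {"data": 3}, penalty=0.5))
```

Lower temperatures concentrate probability on "data", while higher ones spread it across all three tokens.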

Finally, you can switch the model output to JSON format with the following code.

```python
completion = client.chat.completions.create(
    model="gpt-3.5-turbo",
    # JSON mode; the messages must also mention JSON, as the system message does here
    response_format={"type": "json_object"},
    messages=[
        {"role": "system", "content": "You are a helpful assistant designed to output JSON."},
        {"role": "user", "content": "Generate me 3 Jargons that I can use for my Social Media content as a Data Scientist content creator"}
    ]
)

print(completion.choices[0].message.content)
```

```json
{
  "jargons": [
    "Leveraging predictive analytics to unlock valuable insights",
    "Delving into the intricacies of advanced machine learning algorithms",
    "Harnessing the power of big data to drive data-driven decisions"
  ]
}
```

The result is in JSON format and follows the prompt we gave the model.
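JSON mode ensures the reply is valid JSON, but not that it uses a particular structure; the `jargons` key above was chosen by the model, since the prompt did not name one. It is therefore worth naming the key you expect in the prompt and validating the reply before using it. The sketch below parses the output shown above with Python's `json` module; `parse_jargons` is a hypothetical helper, and in practice you would pass it `completion.choices[0].message.content`.

```python
import json

# Example reply copied from the output above
raw = """{
  "jargons": [
    "Leveraging predictive analytics to unlock valuable insights",
    "Delving into the intricacies of advanced machine learning algorithms",
    "Harnessing the power of big data to drive data-driven decisions"
  ]
}"""


def parse_jargons(content: str, key: str = "jargons") -> list:
    """Parse the model's JSON reply and check that `key` holds a list of strings."""
    data = json.loads(content)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object at the top level")
    items = data.get(key)
    if not isinstance(items, list) or not all(isinstance(x, str) for x in items):
        raise ValueError(f"Expected a list of strings under {key!r}, got: {items!r}")
    return items


for jargon in parse_jargons(raw):
    print("-", jargon)
```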

For full Textual content Technology API documentation, you’ll be able to test them out on their devoted web page.

OpenAI mannequin is beneficial for textual content era use instances and can even name the API for picture era functions.

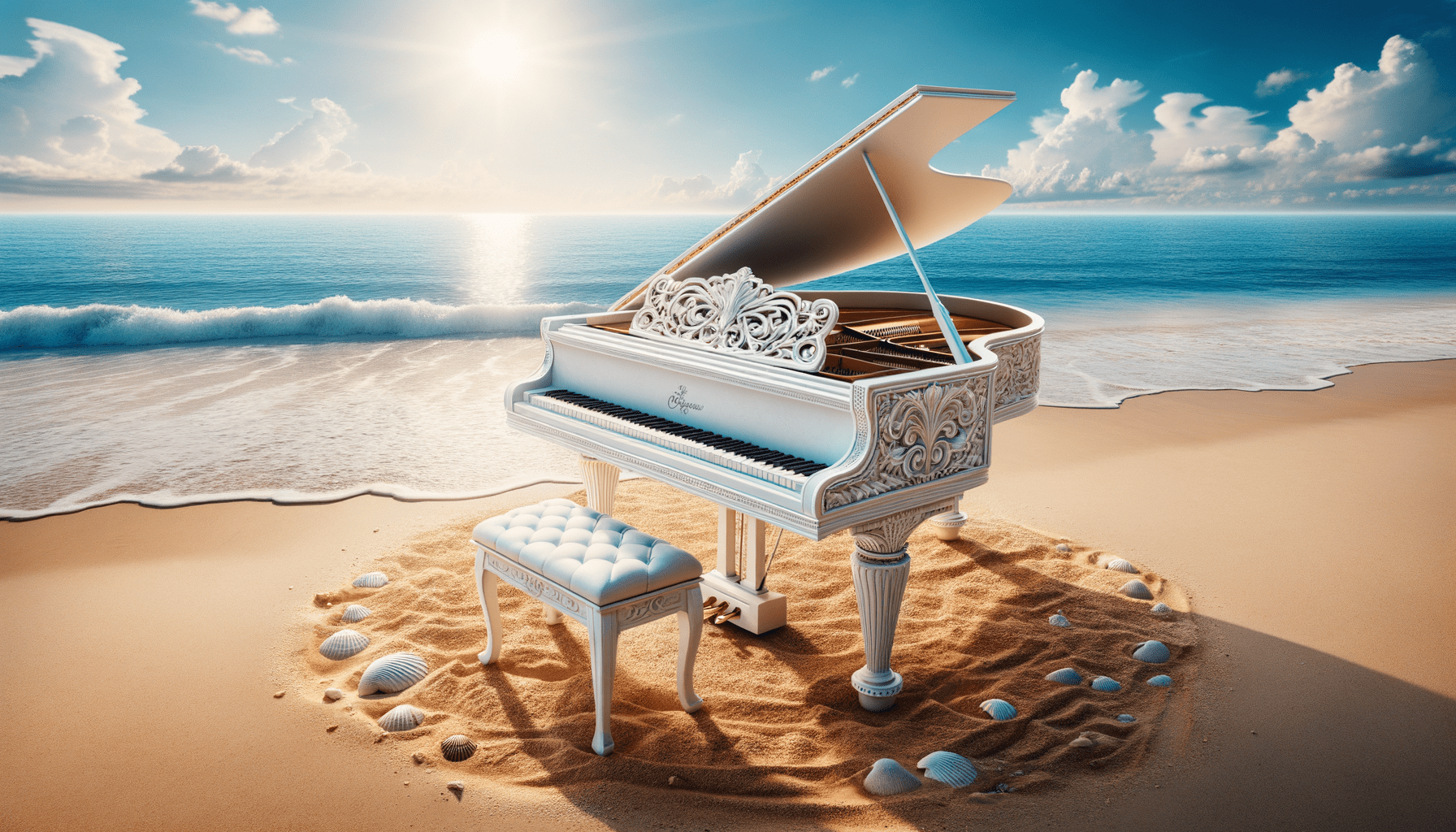

Utilizing the DALL·E mannequin, we are able to generate a picture as requested. The straightforward technique to carry out it’s utilizing the next code.

```python
from openai import OpenAI
from IPython.display import Image

client = OpenAI()

response = client.images.generate(
    model="dall-e-3",
    prompt="White Piano on the Beach",
    size="1792x1024",
    quality="hd",
    n=1,
)

# The API returns a URL for each generated image
image_url = response.data[0].url

# In a Jupyter notebook, the last expression renders the image inline
Image(url=image_url)
```

Image generated with DALL·E 3

Here are the explanations for the parameters:

- model: The image generation model to use. Currently, the API only supports the DALL·E 3 and DALL·E 2 models.

- prompt: The text description from which the model generates an image.

- size: Determines the resolution of the generated image. There are three choices for the DALL·E 3 model (1024x1024, 1024x1792, or 1792x1024).

- quality: This parameter influences the quality of the generated image. If generation speed matters, "standard" is faster than "hd". The "hd" option is only available for DALL·E 3.

- n: Specifies the number of images to generate from the prompt. DALL·E 3 can only generate one image at a time, while DALL·E 2 can generate up to 10 at a time.
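Because DALL·E 3 only accepts a few values for these parameters, it can help to check them locally before spending credit on a request that will be rejected. The sketch below encodes the limits listed above; `validate_dalle3_request` is a hypothetical helper, and the resulting dictionary can be unpacked into `client.images.generate(**params)`.

```python
DALLE3_SIZES = {"1024x1024", "1024x1792", "1792x1024"}
DALLE3_QUALITIES = {"standard", "hd"}


def validate_dalle3_request(prompt: str, size: str = "1024x1024",
                            quality: str = "standard", n: int = 1) -> dict:
    """Check arguments against the DALL·E 3 limits and return them as keyword arguments."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if size not in DALLE3_SIZES:
        raise ValueError(f"size must be one of {sorted(DALLE3_SIZES)}")
    if quality not in DALLE3_QUALITIES:
        raise ValueError("quality must be 'standard' or 'hd'")
    if n != 1:
        raise ValueError("DALL·E 3 generates one image per request; loop for more")
    return {"model": "dall-e-3", "prompt": prompt, "size": size,
            "quality": quality, "n": n}


params = validate_dalle3_request("White Piano on the Beach",
                                 size="1792x1024", quality="hd")
print(params)
```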

It is also possible to generate variations of an existing image, although this is only available with the DALL·E 2 model. The API also only accepts square PNG images smaller than 4 MB.
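A quick local check against those two requirements might look like the sketch below. It uses only the standard library and assumes the file is a regular PNG, whose width and height are stored as big-endian integers in the IHDR chunk right after the 8-byte signature. `check_variation_input` is a hypothetical helper, and treating 4 MB as 4 * 1024 * 1024 bytes is our assumption.

```python
import os
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MAX_BYTES = 4 * 1024 * 1024  # assumed interpretation of the 4 MB limit


def check_variation_input(path: str) -> tuple:
    """Return (width, height) if the file is a square PNG under 4 MB, else raise ValueError."""
    if os.path.getsize(path) >= MAX_BYTES:
        raise ValueError("image must be smaller than 4 MB")
    with open(path, "rb") as f:
        header = f.read(24)
    # Layout: 8-byte signature, 4-byte chunk length, b"IHDR", 4-byte width, 4-byte height
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError("file is not a PNG")
    width, height = struct.unpack(">II", header[16:24])
    if width != height:
        raise ValueError(f"image must be square, got {width}x{height}")
    return width, height
```

For example, `check_variation_input("white_piano_ori.png")` would raise for the 1792x1024 image generated earlier, since it is not square, so you would need to crop or resize it first.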

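```python
from openai import OpenAI
from IPython.display import Image, display

client = OpenAI()

# Variations use DALL·E 2 and need a square PNG smaller than 4 MB
with open("white_piano_ori.png", "rb") as image_file:
    response = client.images.create_variation(
        image=image_file,
        n=2,
        size="1024x1024"
    )

# n=2 returns two images; display each one in the notebook
for item in response.data:
    display(Image(url=item.url))
```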

The images might not be as good as the DALL·E 3 generations, as they come from the older model.

OpenAI is a leading provider of models that can understand image input. This model is called the Vision model, often referred to as GPT-4V. It can answer questions about the images we give it.

Let's try out the Vision model API. In this example, I will use the white piano image we generated with the DALL·E 3 model and stored locally. I will also create a function that takes the image path and returns a text description of the image. Don't forget to change the api_key variable to your API key.

```python
import base64

import requests


def provide_image_description(img_path):
    api_key = 'YOUR-API-KEY'

    # Encode the image file as a base64 string
    def encode_image(image_path):
        with open(image_path, "rb") as image_file:
            return base64.b64encode(image_file.read()).decode('utf-8')

    base64_image = encode_image(img_path)

    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}"
    }

    payload = {
        "model": "gpt-4-vision-preview",
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": "Can you describe this image?"
                    },
                    {
                        "type": "image_url",
                        "image_url": {
                            "url": f"data:image/jpeg;base64,{base64_image}"
                        }
                    }
                ]
            }
        ],
        "max_tokens": 300
    }

    response = requests.post("https://api.openai.com/v1/chat/completions", headers=headers, json=payload)
    response.raise_for_status()  # surface HTTP errors (e.g. an invalid key) clearly
    return response.json()['choices'][0]['message']['content']


# Path to the locally saved DALL·E 3 image
print(provide_image_description("white_piano_ori.png"))
```

This image features a grand piano positioned in a serene beach setting. The piano is white, a finish that is often associated with elegance. The instrument sits right at the edge of the shoreline, where gentle waves lightly caress the sand, creating a foam that just touches the base of the piano and the matching stool. The beach surroundings suggest a sense of tranquility and isolation, with clear blue skies, fluffy clouds in the distance, and a calm sea extending to the horizon. Scattered around the piano on the sand are numerous seashells of various sizes and shapes, highlighting the natural beauty and serene atmosphere of the setting. The juxtaposition of a classical musical instrument in a natural beach environment creates a surreal and visually poetic composition.

You can tweak the text values in the payload dictionary above to match your Vision model requirements.
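If you want to ask a different question or send another image, the parts to change are the `text` entry, the base64 data URL (including its MIME type), and `max_tokens`. The sketch below factors the payload construction out of the function above into a hypothetical `build_vision_payload` helper, so that each piece becomes a parameter; it only builds the dictionary and does not send the request. The `image/png` default assumes a PNG file such as the one saved earlier.

```python
import base64
import json


def build_vision_payload(question: str, image_bytes: bytes,
                         mime_type: str = "image/png",
                         model: str = "gpt-4-vision-preview",
                         max_tokens: int = 300) -> dict:
    """Build the chat/completions JSON body with a custom question and an inline image."""
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url",
                     "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
                ],
            }
        ],
        "max_tokens": max_tokens,
    }


# Placeholder bytes for illustration; in practice read them from your image file
payload = build_vision_payload("How many seashells can you see?", b"\x89PNG fake bytes")
print(json.dumps(payload)[:80])
```

The returned dictionary can be posted with `requests.post(..., json=payload)` exactly as in the function above.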

OpenAI also provides a Text-to-Speech model for generating audio. It's very easy to use, although the choice of voices is limited. The model also supports many languages, which you can see on their language support page.

To generate the audio, you can use the code below.

```python
from openai import OpenAI

client = OpenAI()

speech_file_path = "speech.mp3"

response = client.audio.speech.create(
    model="tts-1",
    voice="alloy",
    input="I love data science and machine learning"
)

# Save the generated audio to disk
response.stream_to_file(speech_file_path)
```

You should see the audio file in your directory. Try playing it to see whether it meets your standards.

Currently, there are only a few parameters you can use for the Text-to-Speech model:

- model: The Text-to-Speech model to use. Only two models are available (tts-1 or tts-1-hd): tts-1 is optimized for speed and tts-1-hd for quality.

- voice: The voice to use; all voices are optimized for English. The options are alloy, echo, fable, onyx, nova, and shimmer.

- response_format: The audio file format. Currently, the supported formats are mp3, opus, aac, flac, wav, and pcm.

- speed: The speed of the generated audio. You can select values from 0.25 to 4.

- input: The text to convert to audio. Currently, the model only supports up to 4096 characters.
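Because the input is capped at 4096 characters, longer scripts need to be split and sent as several requests, producing one audio file per chunk. The sketch below is one simple approach, assuming that breaking at sentence-ending punctuation is acceptable for your text; `chunk_for_tts` is a hypothetical helper, and its regular-expression sentence splitter is deliberately naive.

```python
import re
from typing import List

MAX_TTS_CHARS = 4096  # input limit for the Text-to-Speech model


def chunk_for_tts(text: str, limit: int = MAX_TTS_CHARS) -> List[str]:
    """Split text into pieces of at most `limit` characters, preferring sentence boundaries."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        # A single sentence longer than the limit is hard-split into fixed-size pieces
        while len(sentence) > limit:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:limit])
            sentence = sentence[limit:]
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= limit:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


print(chunk_for_tts("One. Two. Three.", limit=9))  # ['One. Two.', 'Three.']
```

Each chunk could then be passed as `input=` to its own `client.audio.speech.create` call, saving the results as numbered files.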

OpenAI also provides models to transcribe and translate audio data. Using the Whisper model, we can transcribe audio in any of the supported languages into text and translate it into English.

Let's try a simple transcription of the audio file we generated previously.

```python
from openai import OpenAI

client = OpenAI()

# Open the audio file in binary mode and send it to the Whisper model
with open("speech.mp3", "rb") as audio_file:
    transcription = client.audio.transcriptions.create(
        model="whisper-1",
        file=audio_file
    )

print(transcription.text)
```

I love data science and machine learning.
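Note that the transcript adds a final period that the original input did not have. If you want to check transcription quality against a known script, a common metric is the word error rate (WER): the word-level edit distance between the reference and the transcript, divided by the number of reference words. This metric is not part of the OpenAI API; the sketch below is a plain-Python implementation with hypothetical helper names, and it normalizes case and punctuation first so that such formatting differences are not counted as errors.

```python
import re
from typing import List


def normalize(text: str) -> List[str]:
    """Lowercase, drop punctuation (keeping apostrophes), and split into words."""
    return re.sub(r"[^\w\s']", "", text.lower()).split()


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref, hyp = normalize(reference), normalize(hypothesis)
    # dist[i][j] = edits to turn the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # substitution or match
    return dist[len(ref)][len(hyp)] / max(len(ref), 1)


print(word_error_rate("I love data science and machine learning",
                      "I love data science and machine learning."))  # 0.0
```

A WER of 0.0 means the transcript matches the input word for word once punctuation is ignored.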

It's also possible to translate audio files into English. The model cannot yet translate into other languages.

```python
from openai import OpenAI

client = OpenAI()

with open("speech.mp3", "rb") as audio_file:
    translation = client.audio.translations.create(
        model="whisper-1",
        file=audio_file
    )

# The translated text is always in English
print(translation.text)
```

We have explored several model services that OpenAI provides, including text generation, image generation, vision, text-to-speech, and speech-to-text (transcription and translation) models. Each model has its own API parameters and specifications that you need to learn before using it.

Cornellius Yudha Wijaya is a data science assistant manager and data writer. While working full-time at Allianz Indonesia, he loves to share Python and data tips via social media and writing media. Cornellius writes on a variety of AI and machine learning topics.