Black box AI is any artificial intelligence system whose internal operations aren’t visible to the user or other interested parties. A black box, in a general sense, is an impenetrable system.

Black box AI models arrive at conclusions or decisions without providing any explanation of how they were reached.

As AI technology has evolved, two main types of AI systems have emerged: black box AI and explainable (or white box) AI. The term black box refers to systems that aren’t transparent to users. Simply put, AI systems whose internal workings, decision-making workflows, and contributing factors aren’t visible or remain unknown to human users are known as black box AI systems.

The lack of transparency makes it hard for humans to understand or explain how the system’s underlying model arrives at its conclusions. Black box AI models can also create problems related to flexibility (updating the model as needs change), bias (incorrect results that might offend or harm some groups of people), accuracy validation (the results are hard to validate or trust), and security (unknown flaws make the model susceptible to cyberattacks).

How do black box machine learning models work?

When a machine learning model is being developed, the learning algorithm takes millions of data points as inputs and correlates specific data features to produce outputs.

The process typically includes these steps:

- Sophisticated AI algorithms examine extensive data sets to find patterns. To achieve this, the algorithm ingests a large number of data examples, enabling it to experiment and learn on its own through trial and error. As the model receives more and more training data, it learns to adjust its internal parameters until it can predict the correct output for new inputs with acceptable accuracy.

- As a result of this training, the model is finally ready to make predictions using real-world data. Fraud detection using a risk score is an example use case for this mechanism.

- The model refines its methods, approaches, and body of knowledge, and it produces progressively better output as additional data is gathered and fed to it over time.
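The training steps above can be sketched with a toy logistic regression model fitted by stochastic gradient descent. This is a minimal illustration under stated assumptions, not how any particular fraud system works: the two features, the synthetic labeling rule, the learning rate and the epoch count are all hypothetical. The loop repeatedly compares each prediction with the known label and nudges the internal parameters to shrink the error; the trained model then outputs a risk score between 0 and 1 for new transactions.

```python
import math
import random

def sigmoid(z: float) -> float:
    """Numerically stable logistic function mapping a raw score to (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train(examples, epochs=200, lr=0.1):
    """Fit weights and a bias by stochastic gradient descent on log loss.

    examples: list of (features, label) pairs; label 1 = fraud, 0 = legitimate.
    """
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            pred = sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)
            error = pred - label  # how far this guess was off ("trial and error")
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias

def risk_score(weights, bias, features):
    """Estimated probability that a transaction is fraudulent."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

# Hypothetical synthetic data: [normalized amount, foreign merchant flag].
# The made-up rule: large purchases at foreign merchants are fraud.
random.seed(0)
data = []
for _ in range(200):
    amount, foreign = random.random(), random.choice([0, 1])
    data.append(([amount, foreign], int(amount > 0.7 and foreign == 1)))

weights, bias = train(data)
print(f"large foreign purchase: {risk_score(weights, bias, [0.95, 1]):.2f}")
print(f"small domestic purchase: {risk_score(weights, bias, [0.10, 0]):.2f}")
```

Retraining on a larger list of examples is the simplest version of a model improving as more data is fed to it over time.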

In many cases, the inner workings of black box machine learning models aren’t readily available and are largely self-directed. This is why it’s challenging for data scientists, programmers and users to understand how the model generates its predictions or to trust the accuracy and veracity of its results.

How do black box deep learning models work?

Many black box AI models are based on deep learning, a branch of AI (specifically, a branch of machine learning) in which multilayered, or deep, neural networks are used to loosely mimic the human brain and simulate its decision-making ability. These neural networks are composed of multiple layers of interconnected nodes known as artificial neurons.

In black box models, these deep networks of artificial neurons disperse data and decision-making across tens of thousands of neurons or more. The neurons work together to process the data and identify patterns within it, allowing the AI model to make predictions and arrive at certain decisions or answers.

These predictions and decisions result from a complexity that can be just as hard to understand as the complexity of the human brain. As with machine learning models, it’s hard for humans to identify a deep learning model’s “how,” or the specific steps it took to make those predictions or arrive at those decisions. For all these reasons, such deep learning systems are known as black box AI systems.
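A minimal forward pass shows how a decision ends up spread across many neurons. This sketch assumes a made-up layout of 4 inputs, two hidden layers of 8 neurons and 1 output, with random weights standing in for trained ones. Even this toy network has 121 parameters, and every one of them influences the output; real deep learning models have vastly more, which is why no single neuron or weight explains a prediction.

```python
import math
import random

def relu(z: float) -> float:
    """Hidden-layer activation: pass positive values, zero out negatives."""
    return max(0.0, z)

def sigmoid(z: float) -> float:
    """Output activation: squash the final score into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    """One layer of artificial neurons. Each neuron takes a weighted sum of
    every output from the previous layer, adds its bias and applies an activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def random_layer(n_in, n_out, rng):
    """Hypothetical stand-in for trained weights: uniform random values."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, biases

rng = random.Random(42)
layer_sizes = [4, 8, 8, 1]  # assumed layout: 4 inputs, two hidden layers of 8, 1 output
layers = [random_layer(n_in, n_out, rng)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

def forward(x):
    """Pass an input vector through every layer and return the single output."""
    for i, (weights, biases) in enumerate(layers):
        activation = sigmoid if i == len(layers) - 1 else relu
        x = dense_layer(x, weights, biases, activation)
    return x[0]

n_params = sum(len(w) * len(w[0]) + len(b) for w, b in layers)
print(f"parameters: {n_params}")  # parameters: 121
print(f"output: {forward([0.2, -0.5, 1.0, 0.3]):.3f}")
```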

Issues with black box AI

While black box AI models are appropriate and highly valuable in some cases, they can pose several issues.

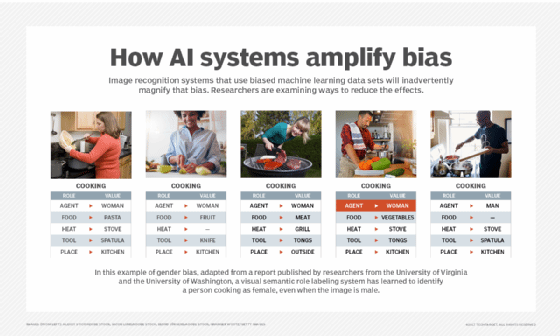

1. AI bias

AI bias can be introduced into machine learning algorithms or deep learning neural networks as a reflection of conscious or unconscious prejudices on the part of the developers. Bias can also creep in through undetected errors or from training data when details about the dataset go unrecognized. Usually, the results of a biased AI system will be skewed or outright incorrect, potentially in a way that’s offensive, unfair or downright dangerous to some people or groups.

Example

An AI system used for IT recruitment might rely on historical data to help HR teams select candidates for interviews. However, because most IT staff in the past were male, the AI algorithm might use this information to recommend only male candidates, even when the pool of potential candidates includes qualified women. Simply put, it displays a bias toward male candidates and discriminates against female candidates. Similar issues might occur with other groups, such as candidates from certain ethnic groups, religious minorities or immigrant populations.
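Even when a model’s internals are opaque, the skew in this example can often be detected from its outputs alone by comparing how often each group is selected. The screening results below are made-up data, and the 0.8 cutoff mirrors the “four-fifths rule” of thumb from U.S. employment selection guidelines; it is one heuristic among many fairness measures, not proof of discrimination on its own.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: list of (group, selected) pairs; selected is True or False."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())

# Hypothetical output of a black box screening model on 100 applicants
decisions = ([("male", True)] * 30 + [("male", False)] * 20
             + [("female", True)] * 10 + [("female", False)] * 40)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'male': 0.6, 'female': 0.2}
print(round(ratio, 2))  # 0.33
if ratio < 0.8:
    print("Possible adverse impact: review the model and its training data")
```

This audit only shows that outcomes differ by group; finding where the bias comes from still requires examining the training data and the model.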

With black box AI, it’s hard to identify where the bias is coming from or whether the system’s models are unbiased. If the inherent bias produces consistently skewed results, it might damage the reputation of the organization using the system. It might also result in legal action for discrimination. Bias in black box AI systems can also have a social cost, leading to the marginalization, harassment, wrongful imprisonment, or even injury or death of certain groups of people.

To prevent such damaging consequences, AI developers must build transparency into their algorithms. It’s also important that they comply with AI regulations, hold themselves accountable for errors, and commit to promoting the responsible development and use of AI.

In some cases, techniques such as sensitivity analysis and feature visualization can be used to provide a glimpse into how the internal processes of the AI model are working. Even so, in most cases, these processes remain opaque.
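Sensitivity analysis can be sketched without opening the model at all: nudge one input at a time and measure how much the output moves. Here `black_box_predict` is a hypothetical stand-in for any opaque model, the feature names and values are invented, and the finite-difference step size is an assumed choice. The result is a local view around one input, not a full explanation of the model.

```python
def black_box_predict(features):
    """Hypothetical opaque model. In practice this could be a remote API or a
    trained network whose internals are not available; here it is a simple
    formula so the results can be checked."""
    income, debt, age = features
    return 0.5 * income - 1.2 * debt + 0.01 * age * income

def sensitivity(predict, features, delta=1e-3):
    """Finite-difference estimate of how much the output changes per unit
    change in each input, holding the other inputs fixed."""
    base = predict(features)
    scores = []
    for i in range(len(features)):
        nudged = list(features)
        nudged[i] += delta
        scores.append((predict(nudged) - base) / delta)
    return scores

names = ["income", "debt", "age"]
point = [3.0, 1.0, 40.0]  # made-up input to explain
for name, s in zip(names, sensitivity(black_box_predict, point)):
    print(f"{name:>6}: {s:+.3f}")
```

Because the hidden formula is known in this toy case, the estimates can be checked by hand: about +0.900 for income, -1.200 for debt and +0.030 for age. With a real black box, only the printed sensitivities would be available.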

2. Lack of transparency and accountability

The complexity of black box AI models can prevent developers from properly understanding and auditing them, even when they produce accurate results. Some AI experts, even those who were part of some of the most groundbreaking achievements in the field of AI, don’t fully understand how these models work. Such a lack of understanding reduces transparency and weakens the sense of accountability.

These issues can be extremely problematic in high-stakes fields like healthcare, banking, the military and criminal justice. Because the choices and decisions made by these models can’t be fully trusted, their effects on people’s lives can be far-reaching, and not always in a good way. It can also be difficult to hold individuals responsible for the algorithm’s judgments if it relies on opaque models.

3. Lack of flexibility

Another big problem with black box AI is its lack of flexibility. If the model needs to be changed for a different use case (say, to describe a different but physically similar object), determining the new rules or bulk parameters for the update might require a lot of work.

4. Difficult to validate results

The results black box AI generates are often difficult to validate and replicate. How did the model arrive at this particular result? Why did it arrive at this result and no other? How do we know that this is the best or most correct answer? It’s almost impossible to answer these questions, and therefore to rely on the generated results to support human actions or decisions. This is one reason why it’s not advisable to process sensitive data using a black box AI model.

5. Security flaws

Black box AI models often contain flaws that threat actors can exploit by manipulating the input data. For instance, they might change the data to influence the model’s judgment so it makes incorrect or even dangerous decisions. Since there’s often no practical way to reverse engineer the model’s decision-making process, it’s almost impossible to stop it from making such bad decisions.
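The input manipulation described above can be illustrated with a toy linear classifier. This is a simplified sketch: the weights, bias and input are hypothetical, and the attacker is assumed to know the weights, which is a stronger position than attacking a true black box. Adversarial attacks on deep models, such as the fast gradient sign method, apply the same idea of small, targeted input changes that push a score across the decision boundary.

```python
def score(weights, bias, x):
    """Raw linear score; a positive value means approve."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def classify(weights, bias, x):
    return "approve" if score(weights, bias, x) > 0 else "deny"

def perturb(weights, x, epsilon):
    """Move each feature by epsilon in the direction that raises the score
    (the sign of its weight), in the spirit of the fast gradient sign method."""
    def sign(v):
        return (v > 0) - (v < 0)
    return [xi + epsilon * sign(w) for w, xi in zip(weights, x)]

weights = [0.8, -1.5, 0.4]  # hypothetical model weights known to the attacker
bias = -0.2
x = [0.5, 0.4, 0.3]         # a legitimate input the model denies
x_adv = perturb(weights, x, epsilon=0.15)

print(classify(weights, bias, x))      # deny
print(classify(weights, bias, x_adv))  # approve
```

No feature moved by more than 0.15, yet the decision flipped; in a deep model the same kind of change can be spread across thousands of inputs and be far harder to spot.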

It’s also difficult to identify other security blind spots affecting the AI model. One common blind spot is created by third parties that have access to the model’s training data. If these parties fail to follow good security practices to protect the data, it’s hard to keep it out of the hands of cybercriminals, who might gain unauthorized access to manipulate the model and distort its results.

When should black box AI be used?

Although black box AI models pose many challenges, they also offer these advantages:

- Higher accuracy. Complex black box systems might provide higher prediction accuracy than more interpretable systems, especially in computer vision and natural language processing, because they can identify intricate patterns in the data that aren’t readily apparent to humans. However, the same complexity that makes them accurate also makes them less transparent.

- Fast conclusions. Black box models are often based on a set of rules and equations, making them quick to run and easy to optimize. For example, calculating the area under a curve using a least-squares fit algorithm might generate the right answer even if the model doesn’t have a thorough understanding of the problem.

- Minimal computing power. Some black box models are fairly simple, so they don’t need a lot of computational resources.

- Automation. Black box AI models can automate complex decision-making processes, reducing the need for human intervention. This saves time and resources while improving the efficiency of previously manual-only processes.
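The least-squares example in the list above can be made concrete: fit a straight line to sampled points, then integrate the fitted line. The sample values are hypothetical noisy readings of y = 2x + 1, and choosing a straight line is an assumption. The fit only minimizes squared error and has no notion of why the data looks the way it does, yet its area over [0, 4] matches the exact value for y = 2x + 1, which is 20.

```python
def least_squares_line(xs, ys):
    """Return (slope, intercept) minimizing the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def area_under_line(slope, intercept, a, b):
    """Exact integral of slope * x + intercept from a to b."""
    return slope * (b ** 2 - a ** 2) / 2 + intercept * (b - a)

xs = [0, 1, 2, 3, 4]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]  # hypothetical noisy samples of y = 2x + 1
m, c = least_squares_line(xs, ys)
print(f"fit: y = {m:.2f}x + {c:.2f}")                    # fit: y = 1.97x + 1.06
print(f"area 0..4: {area_under_line(m, c, 0, 4):.2f}")  # area 0..4: 20.00
```

The fitted slope and intercept are both slightly off, but the errors cancel over this interval, which illustrates how a procedure can land on the right answer without any understanding of the problem.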

The black box AI approach is most commonly used in deep neural networks, where the model is trained on large amounts of data and the internal weights and parameters of the algorithms are adjusted accordingly. Such models are effective in certain applications, including image and speech recognition, where the goal is to classify or identify data accurately and quickly.

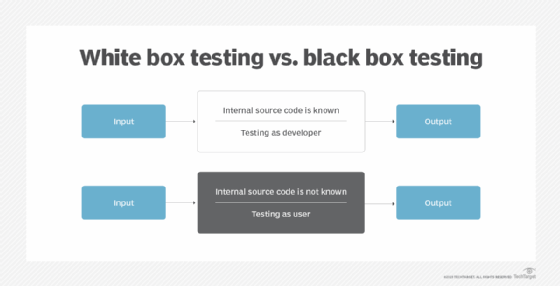

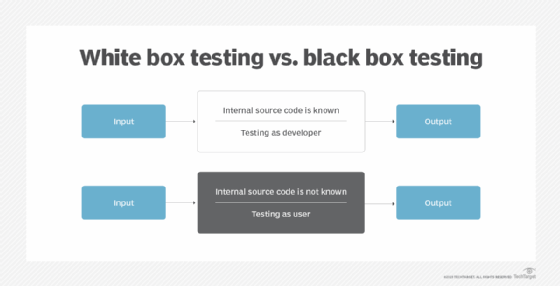

Black box AI vs. white box AI

Black box AI and white box AI are different approaches to developing AI systems. The choice of approach depends on the end system’s specific applications and goals. White box AI is also known as explainable AI, or XAI.

XAI is designed so that a typical person can understand its logic and decision-making process. Besides understanding how the AI model works and arrives at particular answers, human users can also trust the results of the AI system. For all these reasons, XAI is the antithesis of black box AI.

While the inputs and outputs of a black box AI system are known, its inner workings aren’t transparent or are difficult to understand. White box AI is transparent about how it reaches its conclusions. Its results are also interpretable and explainable, so data scientists can examine a white box algorithm and determine how it behaves and what variables affect its judgment.

Since the inner workings of a white box system are available and easily understood by users, this approach is often used in decision-making applications, such as medical diagnosis or financial analysis, where it’s important to know how the AI arrived at its conclusions.
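As a minimal illustration of that transparency, a linear scoring model can report exactly how much each variable contributed to a decision. The feature names, weights and applicant values below are hypothetical. Because the score is simply the bias plus the listed contributions, the explanation is the model itself, which is what lets a data scientist see which variables drive its judgment.

```python
# Hypothetical white box credit-scoring model: every weight is visible.
weights = {"income": 0.6, "debt_ratio": -1.1, "years_employed": 0.3}
bias = -0.5

def explain(applicant):
    """Return the score and each feature's additive contribution to it."""
    contributions = {name: weights[name] * applicant[name] for name in weights}
    score = bias + sum(contributions.values())
    return score, contributions

# Made-up applicant with pre-scaled feature values
applicant = {"income": 1.2, "debt_ratio": 0.8, "years_employed": 2.0}
score, contributions = explain(applicant)

# Print contributions from most to least influential
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>15}: {value:+.2f}")
print(f"{'bias':>15}: {bias:+.2f}")
print(f"{'score':>15}: {score:+.2f}")
```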

Explainable, or white box, AI is the more desirable AI type for many reasons.

One, it enables the model’s developers, engineers and data scientists to audit the model and confirm that the AI system is working as expected. If it isn’t, they can determine what changes are needed to improve the system’s output.

Two, an explainable AI system allows those affected by its output or decisions to challenge the outcome, especially if there’s a possibility that the outcome results from inbuilt bias in the AI model.

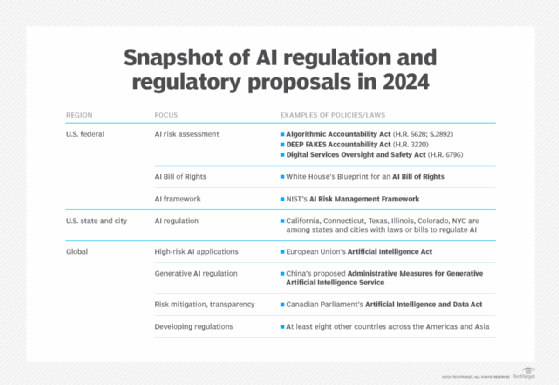

Three, explainability makes it easier to ensure that the system conforms to regulatory standards, many of which have emerged in recent years (the EU’s AI Act is one well-known example) to minimize the negative repercussions of AI. These include risks to data privacy, AI hallucinations resulting in incorrect output, data breaches affecting governments or businesses, and audio or video deepfakes that spread misinformation.

Finally, explainability is vital to the implementation of responsible AI. Responsible AI refers to AI systems that are safe, transparent and accountable and that are used ethically to produce trustworthy, reliable results. The goal of responsible AI is to generate beneficial outcomes and minimize harm.

Here’s a summary of the differences between black box AI and white box AI:

- Black box AI is often more accurate and efficient than white box AI.

- White box AI is easier to understand than black box AI.

- Black box models include boosting and random forest models, which are highly nonlinear and harder to explain.

- White box AI is easier to debug and troubleshoot due to its transparent, interpretable nature.

- Linear models, decision trees and regression trees are all white box AI models.
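To illustrate why decision trees count as white box models, here is a hand-written tree that returns both its prediction and the exact rules it followed. The tree structure, thresholds and feature names are hypothetical; a tree learned from data would be inspected in the same way. A boosted ensemble or random forest, by contrast, combines the votes of many such trees, so no single path explains its output.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Node:
    feature: Optional[str] = None   # feature tested at this node (None for a leaf)
    threshold: float = 0.0
    left: Optional["Node"] = None   # branch taken when value <= threshold
    right: Optional["Node"] = None  # branch taken when value > threshold
    label: Optional[str] = None     # prediction stored at a leaf

def predict_with_path(node, sample):
    """Walk from the root to a leaf, recording each rule that was applied."""
    path = []
    while node.label is None:
        value = sample[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} > {node.threshold}")
            node = node.right
    return node.label, path

# Hypothetical loan-screening tree
tree = Node("debt_ratio", 0.4,
            left=Node(label="approve"),
            right=Node("income", 50_000,
                       left=Node(label="deny"),
                       right=Node(label="review")))

label, path = predict_with_path(tree, {"debt_ratio": 0.55, "income": 72_000})
print(label)               # review
print(" AND ".join(path))  # debt_ratio > 0.4 AND income > 50000
```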

More on responsible AI

AI that’s developed and used in a morally upstanding and socially responsible way is called responsible AI (RAI). RAI is about making the AI algorithm responsible before it generates results. RAI guiding principles and best practices aim to reduce the negative financial, reputational and ethical risks that black box AI can create. In doing so, RAI can help both AI producers and AI consumers.

AI practices are deemed responsible if they adhere to these principles:

- Fairness. The AI system treats all people and demographic groups fairly and doesn’t reinforce or exacerbate preexisting biases or discrimination.

- Transparency. The system is easy to understand and explain to both its users and those it will affect. Additionally, AI developers must disclose how the data used to train an AI system is collected, stored, and used.

- Accountability. The organizations and people creating and using AI should be held responsible for the AI system’s judgments and decisions.

- Ongoing development. Continual monitoring is necessary to ensure that outputs are consistently in line with ethical AI principles and societal norms.

- Human supervision. Every AI system should be designed to enable human monitoring and intervention when appropriate.
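The human supervision and ongoing monitoring principles are often implemented as a gate around the model: confident predictions are handled automatically, uncertain ones are routed to a person, and the share of escalations is tracked over time. The sketch below is one possible design under assumed values; the `ReviewGate` class and its 0.2 to 0.8 review band are hypothetical, not a standard.

```python
from dataclasses import dataclass, field

@dataclass
class ReviewGate:
    """Hypothetical wrapper that routes uncertain model outputs to a human."""
    low: float = 0.2    # at or below: handled automatically as a denial
    high: float = 0.8   # at or above: handled automatically as an approval
    review_queue: list = field(default_factory=list)
    decisions: int = 0

    def decide(self, case_id: str, probability: float) -> str:
        self.decisions += 1
        if probability >= self.high:
            return "auto-approve"
        if probability <= self.low:
            return "auto-deny"
        self.review_queue.append((case_id, probability))  # a person makes the call
        return "human review"

    def escalation_rate(self) -> float:
        """Share of cases sent to a human; a sudden shift can flag drift worth investigating."""
        return len(self.review_queue) / self.decisions if self.decisions else 0.0

gate = ReviewGate()
for case_id, p in [("a1", 0.95), ("a2", 0.50), ("a3", 0.05), ("a4", 0.65)]:
    print(case_id, gate.decide(case_id, p))
print(f"escalation rate: {gate.escalation_rate():.0%}")  # escalation rate: 50%
```

Where to set the review band is a policy choice: a wider band sends more cases to people, trading automation for oversight.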

When AI is both explainable and responsible, it’s more likely to have a beneficial impact on humanity.

White box AI models are growing in popularity because they offer a high level of accountability and transparency. Learn about the possibilities of white box AI, its use cases and the direction it’s likely to take in the future. Read about navigating the black box AI debate in healthcare and how to solve the black box AI problem through transparency.