A neural network is a machine learning (ML) model designed to process data in a way that mimics the function and structure of the human brain. Neural networks are intricate networks of interconnected nodes, or artificial neurons, that collaborate to tackle complicated problems.

Also referred to as artificial neural networks (ANNs), neural nets or deep neural networks, neural networks represent a type of deep learning technology that's classified under the broader field of artificial intelligence (AI).

Neural networks are widely used in a variety of applications, including image recognition, predictive modeling, decision-making and natural language processing (NLP). Examples of significant commercial applications over the past 25 years include handwriting recognition for check processing, speech-to-text transcription, oil exploration data analysis, weather prediction and facial recognition.

How do neural networks work?

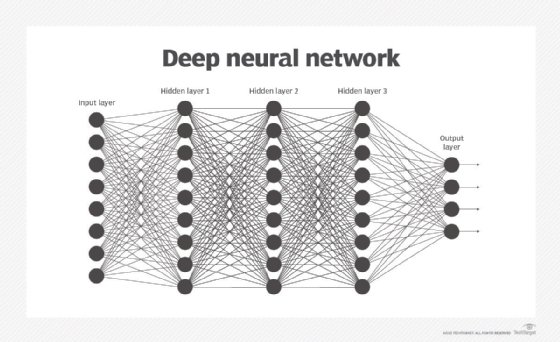

An ANN usually involves many processors operating in parallel and arranged in tiers or layers. There are typically three types of layers in a neural network: an input layer, an output layer and one or more hidden layers between them. The first tier, analogous to optic nerves in human visual processing, receives the raw input information. Each successive tier receives the output from the tier preceding it rather than the raw input, the same way biological neurons farther from the optic nerve receive signals from those closer to it. The last tier produces the system's output.

Each processing node has its own small sphere of knowledge, including what it has seen and any rules it was originally programmed with or developed for itself. The tiers are highly interconnected, which means each node in Tier N will be connected to many nodes in Tier N-1 (its inputs) and to many nodes in Tier N+1, for which it provides input data. There could be one or more nodes in the output layer, from which the answer it produces can be read.

ANNs are noted for being adaptive, which means they modify themselves as they learn from initial training, and subsequent runs provide more information about the world. The most basic learning model is centered on weighting the input streams, which is how each node measures the importance of input data from each of its predecessors. Inputs that contribute to getting the right answers are weighted higher.
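The tiered flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: the sigmoid activation, the function names and the weight values in `example_layers` are all assumptions chosen for the example.

```python
import math


def sigmoid(x):
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Each node weights the outputs of the previous tier, sums them and applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def network_forward(raw_input, layers):
    """Pass the raw input through every tier; each tier sees only the previous tier's output."""
    activations = raw_input
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden nodes -> 1 output node (made-up weights).
example_layers = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.0, -0.1]),  # hidden tier
    ([[1.2, -0.7]], [0.05]),                   # output tier
]
output = network_forward([1.0, 0.0], example_layers)
```

Larger weights make a node more sensitive to the corresponding predecessor, which is the sense in which inputs are "weighted higher."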

Applications of neural networks

Image recognition was one of the first areas in which neural networks were successfully applied. But the uses of the technology have expanded to many more areas, including the following:

- Chatbots.

- Computer vision.

- NLP, translation and language generation.

- Speech recognition.

- Recommendation engines.

- Stock market forecasting.

- Delivery driver route planning and optimization.

- Drug discovery and development.

- Social media.

- Personal assistants.

- Pattern recognition.

- Regression analysis.

- Process and quality control.

- Targeted marketing through social network filtering and behavioral data insights.

- Generative AI.

- Quantum chemistry.

- Data visualization.

Prime uses involve any process that operates according to strict rules or patterns and has large amounts of data. If the data involved is too large for a human to make sense of in a reasonable amount of time, the process is likely a prime candidate for automation through artificial neural networks.

How are neural networks trained?

Typically, an ANN is initially trained, or fed large amounts of data. Training consists of providing input and telling the network what the output should be. For example, to build a network that identifies the faces of actors, the initial training might be a series of pictures, including actors, non-actors, masks, statues and animal faces. Each input is accompanied by matching identification, such as actors' names or “not actor” or “not human” information. Providing the answers enables the model to adjust its internal weightings to do its job better.

For example, if nodes David, Dianne and Dakota tell node Ernie that the current input image is a picture of Brad Pitt, but node Durango says it's George Clooney, and the training program confirms it's Pitt, Ernie decreases the weight it assigns to Durango's input and increases the weight it gives to David, Dianne and Dakota.
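The Ernie example can be sketched as a single weight update. The code below assumes a simple delta-rule update, votes encoded as +1 ("it's Pitt") and -1 ("it's not Pitt"), equal starting weights of 0.25 and a learning rate of 0.1. None of these specifics come from the article; they are only one plausible way to make the idea concrete.

```python
def update_weights(weights, votes, target, learning_rate=0.1):
    """One delta-rule step: nudge each weight in proportion to the error and that node's vote."""
    prediction = sum(weights[name] * votes[name] for name in weights)
    error = target - prediction
    return {
        name: weights[name] + learning_rate * error * votes[name]
        for name in weights
    }


# Assumed encoding: +1 means "Brad Pitt", -1 means "not Brad Pitt".
votes = {"David": 1, "Dianne": 1, "Dakota": 1, "Durango": -1}
weights = {name: 0.25 for name in votes}  # Ernie starts out trusting everyone equally

# The training program confirms the image is Pitt, so the target is +1.
updated = update_weights(weights, votes, target=1)
```

After the update, the weights for David, Dianne and Dakota rise above 0.25 while Durango's falls below it, which mirrors the adjustment described above.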

In defining the rules and making determinations (the decisions of each node on what to send to the next layer based on inputs from the previous tier), neural networks use several principles. These include gradient-based training, fuzzy logic, genetic algorithms and Bayesian methods. They might be given some basic rules about object relationships in the data being modeled.

For example, a facial recognition system might be instructed, “Eyebrows are found above eyes,” or “Mustaches are below a nose. Mustaches are above and/or beside a mouth.” Preloading rules can make training faster and the model more powerful sooner. But it also builds in assumptions about the nature of the problem, which could prove to be either irrelevant and unhelpful, or incorrect and counterproductive, making the decision about what, if any, rules to build in important.

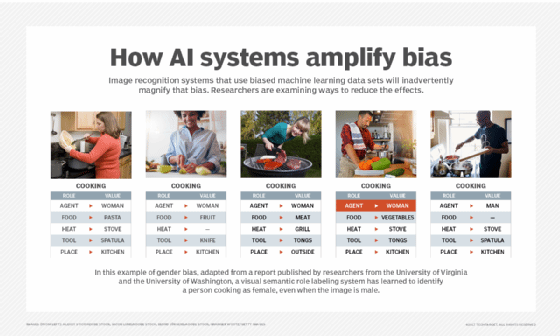

Further, the assumptions people make when training algorithms can cause neural networks to amplify cultural biases. Biased data sets are an ongoing challenge in training systems that find answers on their own through pattern recognition in data. If the data feeding the algorithm isn't neutral, and almost no data is, the machine propagates bias.

Types of neural networks

Neural networks are sometimes described in terms of their depth, including how many layers they have between input and output, or the model's so-called hidden layers. This is why the term neural network is used almost synonymously with deep learning. Neural networks can also be described by the number of hidden nodes the model has, or in terms of how many inputs and outputs each node has. Variations on the classic neural network design enable various forms of forward and backward propagation of information among tiers.

Specific types of ANNs include the following:

Feed-forward neural networks

One of the simplest variants of neural networks, these pass information in one direction, through various input nodes, until it makes it to the output node. The network might or might not have hidden node layers, making their functioning more interpretable. It can process large amounts of noise. This type of ANN computational model is used in technologies such as facial recognition and computer vision.

Recurrent neural networks

More complex in nature, recurrent neural networks (RNNs) save the output of processing nodes and feed the result back into the model. This is how the model learns to predict the outcome of a layer. Each node in the RNN model acts as a memory cell, continuing the computation and execution of operations.

This neural network starts with the same forward propagation as a feed-forward network, but then goes on to remember all processed information to reuse it in the future. If the network's prediction is incorrect, then the system self-learns and continues working toward the correct prediction during backpropagation. This type of ANN is frequently used in text-to-speech conversions.
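The feedback loop that gives an RNN its memory can be illustrated with a single scalar hidden state. This is a deliberately tiny sketch: the tanh activation is a common choice, and the function names and the weights `w_x`, `w_h` and `b` are assumed values for illustration only.

```python
import math


def rnn_step(x_t, h_prev, w_x, w_h, b):
    """Combine the current input with the previous hidden state (the node's memory)."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the cell, carrying the hidden state from step to step."""
    h = 0.0
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states


# The two sequences differ only in their first element.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
```

Even though the last two inputs are identical, the final state of `states_a` is still nonzero because the first input is carried forward through the hidden state, while `states_b` stays at zero.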

Convolutional neural networks

Convolutional neural networks (CNNs) are one of the most popular models used today. This computational model uses a variation of multilayer perceptrons and contains one or more convolutional layers that can be either entirely connected or pooled. These convolutional layers create feature maps that record a region of the image that's ultimately broken into rectangles and sent out for nonlinear processing.

The CNN model is particularly popular in the realm of image recognition. It has been used in many of the most advanced applications of AI, including facial recognition, text digitization and NLP. Other use cases include paraphrase detection, signal processing and image classification.
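To make the idea of feature maps and pooling concrete, here is a small pure-Python sketch. The 5x5 `image`, the `vertical_edge` kernel and the helper names are all made up for illustration. The sliding-window operation shown is technically cross-correlation, which is what deep learning libraries commonly call convolution.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) to build a feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Nonlinear processing step: keep positive responses, zero out the rest."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Summarize each non-overlapping size x size rectangle by its largest value."""
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]


# Hypothetical image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
vertical_edge = [[-1, 1], [-1, 1]]  # responds where brightness increases left to right

feature_map = relu(convolve2d(image, vertical_edge))
pooled = max_pool(feature_map)
```

The feature map lights up only in the column where the dark-to-bright edge sits, and pooling keeps that response while shrinking the map from 4x4 to 2x2.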

Deconvolutional neural networks

Deconvolutional neural networks use a reversed CNN learning process. They try to find lost features or signals that might have originally been considered unimportant to the CNN system's task. This network model can be used in image synthesis and analysis.

Modular neural networks

These contain multiple neural networks working separately from one another. The networks don't communicate or interfere with each other's activities during the computation process. Consequently, complex or large computational processes can be performed more efficiently.

Perceptron neural networks

These represent the most basic form of neural networks and were introduced in 1958 by Frank Rosenblatt, an American psychologist who's also considered to be the father of deep learning. The perceptron is specifically designed for binary classification tasks, enabling it to differentiate between two classes based on input data.
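A minimal sketch of the classic perceptron learning rule follows, trained on the logical AND function as a small linearly separable example. The data set, learning rate and epoch count are assumptions chosen for illustration, and the function names are hypothetical.

```python
def predict(weights, bias, x):
    """Binary decision: output 1 if the weighted sum clears the threshold, otherwise 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Perceptron rule: adjust weights only when a sample is misclassified."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


# Logical AND: class 1 only when both inputs are 1.
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
predictions = [predict(weights, bias, x) for x, _ in and_gate]
```

Because AND is linearly separable, the rule settles on weights that classify all four cases correctly. A function such as XOR, which is not linearly separable, would never converge with a single perceptron.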

Multilayer perceptron networks

Multilayer perceptron (MLP) networks consist of multiple layers of neurons, including an input layer, one or more hidden layers, and an output layer. Each layer is fully connected to the next, meaning that every neuron in one layer is connected to every neuron in the subsequent layer. This architecture enables MLPs to learn complex patterns and relationships in data, making them suitable for various classification and regression tasks.

Radial basis function networks

Radial basis function networks use radial basis functions as activation functions. They're typically used for function approximation, time series prediction and control systems.
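As a rough illustration, the sketch below uses a Gaussian radial basis function, one common choice, as the hidden-layer activation. The centers, output weights and `gamma` width parameter are hypothetical values, not taken from the article.

```python
import math


def gaussian_rbf(x, center, gamma=1.0):
    """Activation depends only on the distance between the input and the node's center."""
    squared_distance = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-gamma * squared_distance)


def rbf_network(x, centers, output_weights, gamma=1.0):
    """Hidden nodes compute RBF activations; the output is their weighted sum."""
    hidden = [gaussian_rbf(x, center, gamma) for center in centers]
    return sum(w * h for w, h in zip(output_weights, hidden))


# Hypothetical network with two hidden nodes centered far apart.
centers = [[0.0, 0.0], [10.0, 10.0]]
output_weights = [2.0, 3.0]

near_first = rbf_network([0.0, 0.0], centers, output_weights)
near_second = rbf_network([10.0, 10.0], centers, output_weights)
```

Each hidden node responds strongly only to inputs near its own center, so the output at a given point is dominated by the weight of the nearest center.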

Transformer neural networks

Transformer neural networks are reshaping NLP and other fields through a range of advancements. Introduced by Google in a 2017 paper, transformers are specifically designed to process sequential data, such as text, by effectively capturing relationships and dependencies between elements in the sequence, regardless of their distance from one another.

Transformer neural networks have gained popularity as an alternative to CNNs and RNNs because their “attention mechanism” enables them to capture and process multiple elements in a sequence simultaneously, which is a distinct advantage over other neural network architectures.
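The core of the attention mechanism is often written as scaled dot-product attention. The following is a bare-bones sketch in pure Python with made-up query, key and value vectors. Real transformers add learned projections, multiple heads and other components that are omitted here.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Each query scores every key at once, then blends the values by those scores."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# Hypothetical example: both keys match the query equally well,
# so the output is the plain average of the two value vectors.
result = attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 1.0], [1.0, 1.0]],
    values=[[0.0, 2.0], [4.0, 6.0]],
)
```

Because every query is compared against all keys in one pass, the distance between two elements in the sequence does not limit how strongly one can influence the other.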

Generative adversarial networks

Generative adversarial networks consist of two neural networks, a generator and a discriminator, that compete against each other. The generator creates fake data, while the discriminator evaluates its authenticity. These types of neural networks are widely used for generating realistic images and for data augmentation.

Advantages of artificial neural networks

Artificial neural networks offer the following benefits:

- Parallel processing. ANNs' parallel processing abilities mean the network can perform more than one job at a time.

- Feature extraction. Neural networks can automatically learn and extract relevant features from raw data, which simplifies the modeling process. Traditional ML methods, by contrast, often require manual feature engineering.

- Information storage. ANNs store information on the entire network, not just in a database. This ensures that even if a small amount of data disappears from one location, the entire network continues to operate.

- Nonlinearity. The ability to learn and model nonlinear, complex relationships helps model the real-world relationships between input and output.

- Fault tolerance. ANNs come with fault tolerance, which means the corruption or fault of one or more cells of the ANN won't stop the generation of output.

- Gradual corruption. This means the network slowly degrades over time instead of degrading instantly when a problem occurs.

- Unrestricted input variables. No restrictions are placed on the input variables, such as how they should be distributed.

- Observation-based decisions. ML means the ANN can learn from events and make decisions based on the observations.

- Unorganized data processing. ANNs are exceptionally good at organizing large amounts of data by processing, sorting and categorizing it.

- Ability to learn hidden relationships. ANNs can learn the hidden relationships in data without commanding any fixed relationship. This means ANNs can better model highly volatile data and nonconstant variance.

- Ability to generalize data. The ability to generalize and infer unseen relationships on unseen data means ANNs can predict the output of unseen data.

Disadvantages of artificial neural networks

Along with their numerous benefits, neural networks also have some drawbacks, including the following:

- Lack of rules. The lack of rules for determining the proper network structure means the appropriate ANN architecture can only be found through trial, error and experience.

- Computationally expensive. Training neural networks can be computationally expensive and time-consuming, requiring significant processing power and memory. This can be a barrier for organizations with limited resources or those needing real-time processing.

- Hardware dependency. The requirement of processors with parallel processing abilities makes neural networks dependent on hardware.

- Numerical translation. The network works with numerical information, meaning all problems must be translated into numerical values before they can be presented to the ANN.

- Lack of trust. The lack of explanation behind probing solutions is one of the biggest disadvantages of ANNs. The inability to explain the why or how behind the solution generates a lack of trust in the network.

- Inaccurate results. If not trained properly, ANNs can often produce incomplete or inaccurate results.

- Black box nature. Because of their black box AI model, it can be challenging to understand how neural networks make their predictions or categorize data.

- Overfitting. Neural networks are susceptible to overfitting, particularly when trained on small data sets. They can end up learning the noise in the training data instead of the underlying patterns, which can result in poor performance on new and unseen data.

History and timeline of neural networks

The history of neural networks spans several decades and has seen considerable advancements. The following examines the important milestones and developments in the history of neural networks:

- 1940s. In 1943, mathematicians Warren McCulloch and Walter Pitts built a circuitry system that ran simple algorithms and was intended to approximate the functioning of the human brain.

- 1950s. In 1958, Rosenblatt created the perceptron, a form of artificial neural network capable of learning and making judgments by modifying its weights. The perceptron featured a single layer of computing units and could handle problems that were linearly separable.

- 1970s. Paul Werbos, an American scientist, developed the backpropagation method, which facilitated the training of multilayer neural networks. It made deep learning possible by enabling weights to be adjusted across the network based on the error calculated at the output layer.

- 1980s. Cognitive psychologist and computer scientist Geoffrey Hinton, computer scientist Yann LeCun and a group of fellow researchers began investigating the concept of connectionism, which emphasizes the idea that cognitive processes emerge through interconnected networks of simple processing units. This period paved the way for modern neural networks and deep learning models.

- 1990s. Jürgen Schmidhuber and Sepp Hochreiter, both computer scientists from Germany, proposed the long short-term memory recurrent neural network framework in 1997.

- 2000s. Hinton and his colleagues at the University of Toronto pioneered restricted Boltzmann machines (RBMs), a form of generative artificial neural network that enables unsupervised learning. RBMs opened the path for deep belief networks and deep learning algorithms.

- 2010s. Research in neural networks picked up great speed around 2010. The big data trend, where companies amass vast troves of data, and parallel computing gave data scientists the training data and computing resources needed to run complex ANNs. In 2012, a neural network named AlexNet won the ImageNet Large Scale Visual Recognition Challenge, an image classification competition.

- 2020s and beyond. Neural networks continue to undergo rapid development, with advancements in architecture, training methods and applications. Researchers are exploring new network structures such as transformers and graph neural networks, which excel in NLP and understanding complex relationships. Additionally, techniques such as transfer learning and self-supervised learning are enabling models to learn from smaller data sets and generalize better. These developments are driving progress in fields such as healthcare, autonomous vehicles and climate modeling.
