Image by Author

As a data scientist, you need Python for detailed data analysis, data visualization, and modeling. However, when your data is stored in a relational database, you need to use SQL (Structured Query Language) to extract and manipulate the data. But how do you integrate SQL with Python to unlock the full potential of your data?

In this tutorial, we will learn to combine the power of SQL with the flexibility of Python using SQLAlchemy and Pandas. We will learn how to connect to databases, execute SQL queries using SQLAlchemy, and analyze and visualize data using Pandas.

Install Pandas and SQLAlchemy using:

pip install pandas sqlalchemy

1. Saving the Pandas DataFrame as an SQL Table

To create the SQL table using the CSV dataset, we will:

- Create a SQLite database using SQLAlchemy.

- Load the CSV dataset using Pandas. The countries_poluation dataset consists of the Air Quality Index (AQI) for cities in countries around the world from 2017 to 2023.

- Convert all the AQI columns from object to numeric and drop rows with missing values.

# Import necessary packages
import pandas as pd
from sqlalchemy import create_engine

# create the new SQLite database
engine = create_engine("sqlite:///kdnuggets.db")

# read the CSV dataset
data = pd.read_csv("/work/air_pollution new.csv")

# convert each year column to numeric and drop rows with missing values
col = ['2017', '2018', '2019', '2020', '2021', '2022', '2023']
for s in col:
    data[s] = pd.to_numeric(data[s], errors="coerce")
    data = data.dropna(subset=[s])

- Save the Pandas dataframe as a SQL table. The `to_sql` function requires a table name and the engine object.

# save the dataframe as a SQLite table
data.to_sql('countries_poluation', engine, if_exists="replace")
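The cleaning step can be tried in isolation before touching the real CSV. The following is a minimal sketch on a small invented DataFrame (the rows and the `year_cols` name are assumptions for illustration, not part of the dataset). It converts all year columns in one call instead of looping, which should behave the same as the loop for this purpose.

```python
import pandas as pd

# Hypothetical sample rows; the values are invented for illustration only.
sample = pd.DataFrame(
    {
        "city": ["A", "B", "C"],
        "2022": ["10.5", "--", "30.1"],
        "2023": ["11.0", "21.4", None],
    }
)

year_cols = ["2022", "2023"]

# Convert every year column; strings that cannot be parsed become NaN.
sample[year_cols] = sample[year_cols].apply(pd.to_numeric, errors="coerce")

# Drop any row that is missing a value in at least one year column.
cleaned = sample.dropna(subset=year_cols)

print(cleaned.dtypes)
print(cleaned)
```

Only the first row survives here, because the other two each contain one value that cannot be read as a number.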

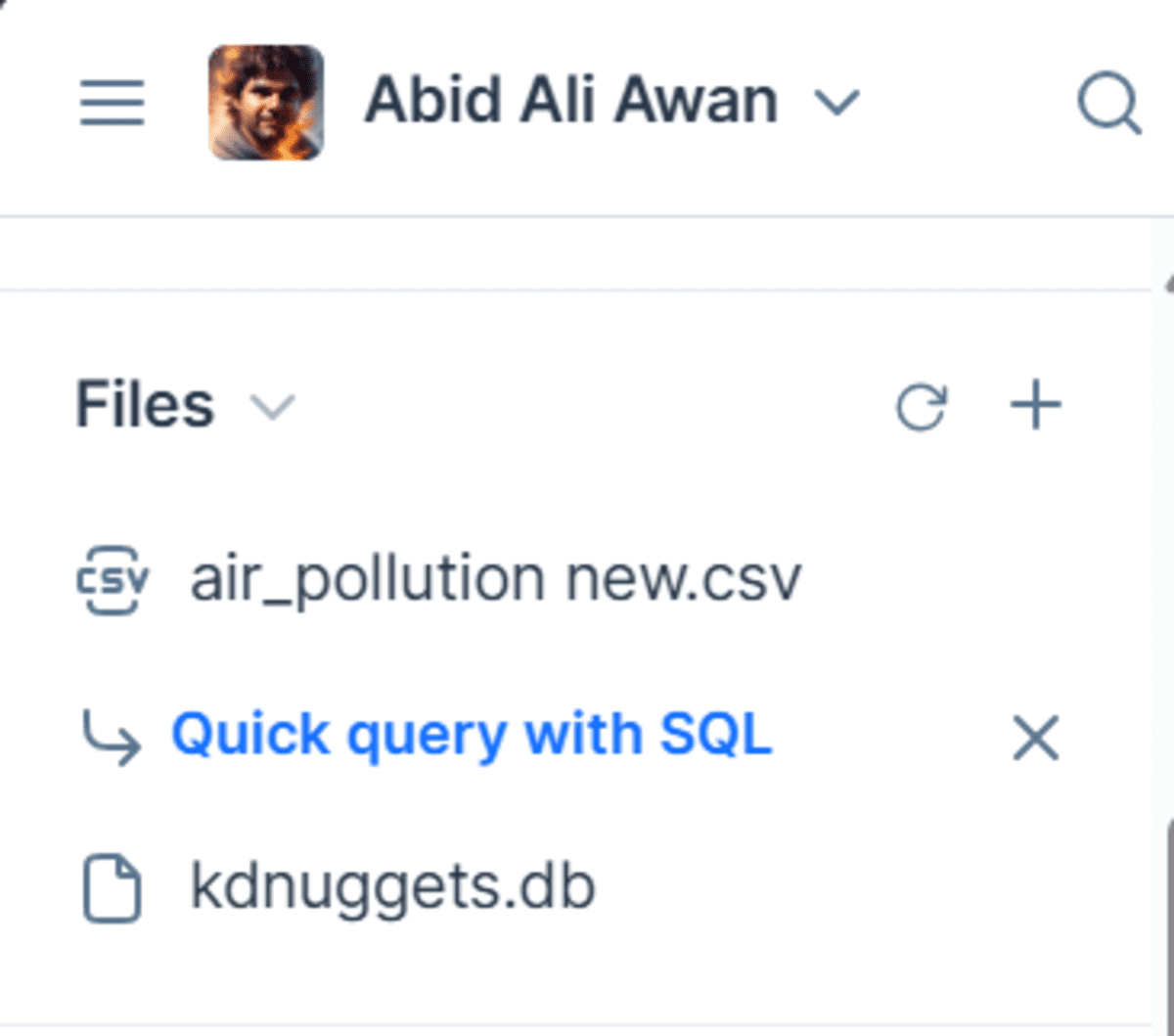

As a result, your SQLite database is saved in your file directory.

Note: I am using Deepnote for this tutorial to run the Python code seamlessly. Deepnote is a free AI Cloud Notebook that will help you quickly run any data science code.
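To confirm that a table was actually written, one option is SQLAlchemy's `inspect` helper. This is a small sketch, assuming an in-memory SQLite database and an invented two-row table named `demo_table`, so it does not touch the kdnuggets.db file.

```python
import pandas as pd
from sqlalchemy import create_engine, inspect

# In-memory SQLite database (assumption for the demo; nothing is written to disk).
engine = create_engine("sqlite://")

# Hypothetical data, invented for illustration.
demo = pd.DataFrame({"city": ["A", "B"], "2023": [12.3, 45.6]})

# index=False skips the DataFrame index; by default to_sql stores it as an extra column.
demo.to_sql("demo_table", engine, if_exists="replace", index=False)

# Ask the database which tables and columns it now contains.
inspector = inspect(engine)
table_names = inspector.get_table_names()
column_names = [c["name"] for c in inspector.get_columns("demo_table")]

print(table_names)
print(column_names)
```

Note that the tutorial code keeps the default `index=True`, so its table also contains an `index` column.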

2. Loading the SQL Table using Pandas

To load the entire table from the SQL database as a Pandas dataframe, we will:

- Establish the connection with our database by providing the database URL.

- Use the `pd.read_sql_table` function to load the entire table and convert it into a Pandas dataframe. The function takes the table name, the engine object, and optionally a list of column names.

- Display the top 5 rows.

import pandas as pd

import psycopg2

from sqlalchemy import create_engine

# set up a reference to the database

engine = create_engine("sqlite:///kdnuggets.db")

# learn the sqlite desk

table_df = pd.read_sql_table(

"countries_poluation",

con=engine,

columns=['city', 'country', '2017', '2018', '2019', '2020', '2021', '2022',

'2023']

)

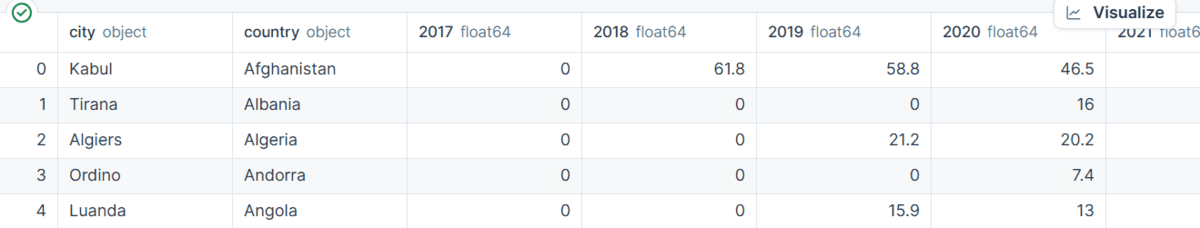

table_df.head()

The SQL table has been successfully loaded as a dataframe. This means that you can now use it to perform data analysis and visualization using popular Python packages such as Seaborn, Matplotlib, Scipy, Numpy, and more.

3. Running the SQL Query using Pandas

Instead of restricting ourselves to one table, we can access the entire database by using the `pd.read_sql` function. Just write a simple SQL query and provide it with the engine object.

The SQL query will display two columns from the “countries_poluation” table, sort it by the “2023” column, and display the top 5 results.
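Because `to_sql` stores the DataFrame index as a column named `index` by default, it can help to see how `columns` and `index_col` interact when reading a table back. The sketch below uses an in-memory database and an invented `demo_table` (both assumptions for illustration).

```python
import pandas as pd
from sqlalchemy import create_engine

# In-memory SQLite database and invented rows, used only for this demo.
engine = create_engine("sqlite://")
demo = pd.DataFrame(
    {"city": ["A", "B"], "country": ["X", "Y"], "2023": [12.3, 45.6]}
)

# Default index=True: the unnamed index is saved as a column called "index".
demo.to_sql("demo_table", engine, if_exists="replace")

# Reading without arguments returns every column, including "index".
full = pd.read_sql_table("demo_table", con=engine)

# columns= selects a subset; index_col= turns the saved column back into the index.
subset = pd.read_sql_table(
    "demo_table", con=engine, columns=["city", "2023"], index_col="index"
)

print(list(full.columns))
print(list(subset.columns))
```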

# read table data using a SQL query
sql_df = pd.read_sql(
    "SELECT city,[2023] FROM countries_poluation ORDER BY [2023] DESC LIMIT 5",
    con=engine
)

print(sql_df)

We got the top 5 cities in the world with the worst air quality.

          city  2023
0       Lahore  97.4
1        Hotan  95.0
2      Bhiwadi  93.3
3  Delhi (NCT)  92.7
4     Peshawar  91.9
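When part of a query comes from a variable, bound parameters are generally safer than building the SQL string by hand. The following sketch assumes an in-memory database, an invented `demo_table`, and a hypothetical `threshold` parameter. It uses SQLAlchemy's `text` construct together with the `params` argument of `pd.read_sql`.

```python
import pandas as pd
from sqlalchemy import create_engine, text

# In-memory SQLite database and invented rows, used only for this demo.
engine = create_engine("sqlite://")
demo = pd.DataFrame({"city": ["A", "B", "C"], "2023": [10.0, 50.0, 30.0]})
demo.to_sql("demo_table", engine, index=False)

# :threshold is a named bind parameter; double quotes mark "2023" as a column name.
query = text(
    'SELECT city, "2023" FROM demo_table '
    'WHERE "2023" > :threshold ORDER BY "2023" DESC'
)

# The value is passed separately from the SQL text.
result = pd.read_sql(query, con=engine, params={"threshold": 20.0})

print(result)
```

Only the rows whose 2023 value exceeds the threshold come back, sorted from highest to lowest.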

4. Using the SQL Query Result with Pandas

We can also use the results from the SQL query and perform further analysis. For example, calculate the average of the top five cities using Pandas.

average_air = sql_df['2023'].mean()

print(f"The average of top 5 cities: {average_air:.2f}")

Output:

The average of top 5 cities: 94.06

Or, create a bar plot by specifying the x and y arguments and the type of plot.

sql_df.plot(x="city", y="2023", kind="barh");
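The same kind of average can also be computed by the database itself. The sketch below compares the two routes on an invented six-row `demo_table` in an in-memory database (all names and values are assumptions for illustration), using SQLite's `AVG` over a subquery.

```python
import pandas as pd
from sqlalchemy import create_engine

# In-memory SQLite database and invented rows, used only for this demo.
engine = create_engine("sqlite://")
demo = pd.DataFrame(
    {"city": list("ABCDEF"), "2023": [10.0, 60.0, 30.0, 50.0, 20.0, 40.0]}
)
demo.to_sql("demo_table", engine, index=False)

# Route 1: fetch the top three rows with SQL, then average them in Pandas.
top3 = pd.read_sql(
    'SELECT city, "2023" FROM demo_table ORDER BY "2023" DESC LIMIT 3',
    con=engine,
)
pandas_mean = top3["2023"].mean()

# Route 2: let SQLite compute the average over the same three rows.
sql_mean = pd.read_sql(
    'SELECT AVG("2023") AS avg_2023 FROM '
    '(SELECT "2023" FROM demo_table ORDER BY "2023" DESC LIMIT 3) AS top_rows',
    con=engine,
)["avg_2023"].iloc[0]

print(pandas_mean, sql_mean)
```

Both routes should agree. Aggregating in SQL transfers less data, while aggregating in Pandas keeps the individual rows available for plotting or further analysis.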

Conclusion

The possibilities of using SQLAlchemy with Pandas are endless. You can perform simple data analysis using the SQL query, but to visualize the results or even train a machine learning model, you have to convert it into a Pandas dataframe.

In this tutorial, we have learned how to load a SQL database into Python, perform data analysis, and create visualizations. If you enjoyed this guide, you will also appreciate ‘A Guide to Working with SQLite Databases in Python’, which provides an in-depth exploration of using Python’s built-in sqlite3 module.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.