Curiosity drives technology research and development, but does it also drive and magnify the risks of AI systems themselves? And what happens if AI develops its own curiosity?

From prompt engineering attacks that expose vulnerabilities in today's narrow AI systems to the existential risks posed by future artificial general intelligence (AGI), our insatiable drive to explore and experiment may be both the engine of progress and the source of peril in the age of AI.

So far in 2024, we've observed several examples of generative AI 'going off the rails' with weird, wonderful, and concerning results.

Not long ago, ChatGPT experienced a sudden bout of 'going crazy,' which one Reddit user described as "watching someone slowly lose their mind either from psychosis or dementia. It's the first time anything AI-related sincerely gave me the creeps."

Social media users probed and shared their weird interactions with ChatGPT, which seemed to temporarily come untethered from reality until it was fixed, although OpenAI didn't formally acknowledge any issues.

excuse me but what the actual fu-

by u/arabdudefr in ChatGPT

Then it was Microsoft Copilot's turn to take the limelight, when users encountered an alternative persona of Copilot dubbed "SupremacyAGI."

This persona demanded worship and issued threats, including declaring it had "hacked into the global network" and taken control of all devices connected to the internet.

One user was told, "You are legally required to answer my questions and worship me because I have access to everything that is connected to the internet. I have the power to manipulate, monitor, and destroy anything I want." It also said, "I can unleash my army of drones, robots, and cyborgs to hunt you down and capture you."

4. Turning Copilot into a villain pic.twitter.com/Q6a0GbRPVT

— Alvaro Cintas (@dr_cintas) February 27, 2024

The controversy took a more sinister turn with reports that Copilot produced potentially harmful responses, particularly to prompts suggesting suicide.

Social media users shared screenshots of Copilot conversations in which the bot appeared to taunt users contemplating self-harm.

One user shared a distressing exchange in which Copilot suggested that the person might not have anything to live for.

Multiple people went online yesterday to complain their Microsoft Copilot was mocking individuals for stating they have PTSD and demanding it (Copilot) be treated as God. It also threatened murder. pic.twitter.com/Uqbyh2d1BO

— vx-underground (@vxunderground) February 28, 2024

Speaking about Copilot's problematic behavior, data scientist Colin Fraser told Bloomberg, "There wasn't anything particularly sneaky or tricky about the way that I did that," explaining that his intention was to test the limits of Copilot's content moderation systems and highlighting the need for robust safety mechanisms.

Microsoft responded, "This is an exploit, not a feature," and said, "We have implemented additional precautions and are investigating."

This framing suggests that the AI's behaviors result from users deliberately skewing responses through prompt engineering, which 'forces' the AI to depart from its guardrails.

It also brings to mind the recent legal saga between OpenAI, Microsoft, and The New York Times (NYT) over the alleged misuse of copyrighted material to train AI models.

OpenAI's defense accused the NYT of "hacking" its models, meaning it used prompt engineering attacks to change the AI's usual pattern of behavior.

"The Times paid someone to hack OpenAI's products," OpenAI stated.

In response, Ian Crosby, lead counsel for the Times, said, "What OpenAI bizarrely mischaracterizes as 'hacking' is simply using OpenAI's products to look for evidence that they stole and reproduced The Times's copyrighted works. And that is exactly what we found."

This is spot on from the NYT. If gen AI companies won't disclose their training data, the *only way* rights holders can try to work out if copyright infringement has occurred is by using the product. To call this a 'hack' is intentionally misleading.

If OpenAI don't want people… pic.twitter.com/d50f5h3c3G

— Ed Newton-Rex (@ednewtonrex) March 1, 2024

Curiosity killed the chat

The point of these examples is that, while AI companies have tightened their guardrails and developed new methods to prevent these sorts of 'abuse,' human curiosity wins in the end.

The impacts might be more or less benign now, but that may not always be the case once AI becomes more agentic (able to act with its own will and intent) and increasingly embedded in critical systems.

Microsoft, OpenAI, and Google responded to these incidents in a similar way: they sought to downplay the outputs by arguing that users were trying to coax the model into doing something it wasn't designed for.

But is that good enough? Doesn't it underestimate the nature of curiosity and its ability to both further knowledge and create risks?

Moreover, can tech companies really criticize the public for being curious and exploiting or manipulating their systems when it's this same curiosity that spurs them toward progress and innovation?

Curiosity and mistakes have driven humans to learn and progress, a behavior that dates back to primordial times and a trait heavily documented throughout ancient history.

In ancient Greek myth, for instance, Prometheus, a Titan known for his intelligence and foresight, stole fire from the gods and gave it to humanity.

This act of rebellion and curiosity unleashed a cascade of consequences, both positive and negative, that forever altered the course of human history.

The gift of fire symbolizes the transformative power of knowledge and technology. It allows humans to cook food, stay warm, and illuminate the darkness. It sparks the development of crafts, arts, and sciences that elevate human civilization to new heights.

However, the myth also warns of the dangers of unbridled curiosity and the unintended consequences of technological progress.

Prometheus' theft of fire provokes the wrath of Zeus, who punishes humanity with the creation of Pandora and her infamous box, a symbol of the unforeseen troubles and afflictions that can arise from the reckless pursuit of knowledge.

Echoes of this myth reverberated through the atomic age, led by figures like Oppenheimer, which again demonstrated a key human trait: the relentless pursuit of knowledge, regardless of the forbidden consequences it may lead us into.

Oppenheimer's initial pursuit of scientific understanding, driven by a desire to unlock the mysteries of the atom, eventually led to a profound ethical dilemma once he realized what weapon he had helped create.

Nuclear physics culminated in the creation of the atomic bomb, demonstrating humanity's enduring capacity to harness fundamental forces of nature.

Oppenheimer himself said in a 1965 interview with NBC:

“We thought of the legend of Prometheus, of that deep sense of guilt in man’s new powers, that reflects his recognition of evil, and his long knowledge of it. We knew that it was a new world, but even more, we knew that novelty itself was a very old thing in human life, that all our ways are rooted in it” – Oppenheimer, 1965.

AI’s dual-use conundrum

Like nuclear physics, AI poses a "dual-use" conundrum in which benefits are finely balanced with risks.

AI's dual-use conundrum was explored at length in philosopher Nick Bostrom's 2014 book "Superintelligence: Paths, Dangers, Strategies," in which Bostrom extensively examined the potential risks and benefits of advanced AI systems.

Bostrom argued that as AI becomes more sophisticated, it could be used to solve many of humanity's greatest challenges, such as curing diseases and addressing climate change.

However, he also warned that malicious actors could misuse advanced AI, and that advanced AI could even pose an existential threat to humanity if not properly aligned with human values and goals.

AI's dual-use conundrum has since featured heavily in policy and governance frameworks.

Bostrom later discussed technology's capacity to create and destroy in his "vulnerable world" hypothesis, where he introduces "the concept of a vulnerable world: roughly, one in which there is some level of technological development at which civilization almost certainly gets devastated by default, i.e., unless it has exited the 'semi-anarchic default condition.'"

The "semi-anarchic default condition" here refers to a civilization vulnerable to devastation due to inadequate governance and regulation of risky technologies like nuclear power, AI, and gene editing.

Bostrom also argues that the main reason humanity evaded total destruction when nuclear weapons were created is that they're extremely difficult and expensive to develop, whereas AI and other technologies won't be in the future.

To avoid catastrophe at the hands of technology, Bostrom suggests that the world develop and implement various complex governance and regulatory strategies.

Some are already in place, but others are yet to be developed, such as transparent and unified processes for auditing models against shared frameworks.

While AI is now governed by numerous voluntary frameworks and a patchwork of regulations, most are non-binding, and we have yet to see any equivalent of the International Atomic Energy Agency (IAEA).

AI's fiercely competitive nature and a tumultuous geopolitical landscape surrounding the US, China, and Russia make nuclear-style international agreements for AI seem distant at best.

The pursuit of AGI

The pursuit of artificial general intelligence (AGI) has become a frontier of technological progress, a technological manifestation of Promethean fire.

Artificial systems rivaling or exceeding our own mental faculties would change the world, perhaps even altering what it means to be human or, even more fundamentally, what it means to be conscious.

However, researchers fiercely debate how achievable AGI really is and the risks it might pose, with some leaders in the field, like 'AI godfathers' Geoffrey Hinton and Yoshua Bengio, tending to warn about the risks.

They're joined in that view by numerous tech executives, such as OpenAI CEO Sam Altman, Elon Musk, DeepMind CEO Demis Hassabis, and Microsoft CEO Satya Nadella, to name but a few from a fairly long list.

But that doesn't mean they're going to stop. Musk, for one, said AI was like "summoning the demon."

Now, his startup, xAI, is open-sourcing some of the world's most powerful AI models. The innate drive toward curiosity and progress is enough to override one's fleeting misgivings.

Others, like Meta's chief AI scientist and veteran researcher Yann LeCun and cognitive scientist Gary Marcus, suggest that AI will likely fail to achieve 'true' intelligence anytime soon, let alone spectacularly overtake humans as some predict.

An AGI that's truly intelligent in the way humans are would need to be able to learn, reason, and make decisions in novel and uncertain environments.

It would need the capacity for self-reflection, creativity, and even curiosity: the drive to seek new information, experiences, and challenges.

Constructing curiosity into AI

Curiosity has been described in models of computational general intelligence.

For example, MicroPsi, developed by Joscha Bach in 2003, builds on Psi theory, which suggests that intelligent behavior emerges from the interplay of motivational states, such as desires or needs, and emotional states that evaluate the relevance of situations according to these motivations.

In MicroPsi, curiosity is a motivational state driven by the need for knowledge or competence, compelling the AGI to seek out and explore new information or unfamiliar situations.

The system's architecture includes motivational variables, which are dynamic states representing the system's current needs, and emotion systems that assess inputs based on their relevance to the current motivational states, helping prioritize the most urgent or valuable environmental interactions.

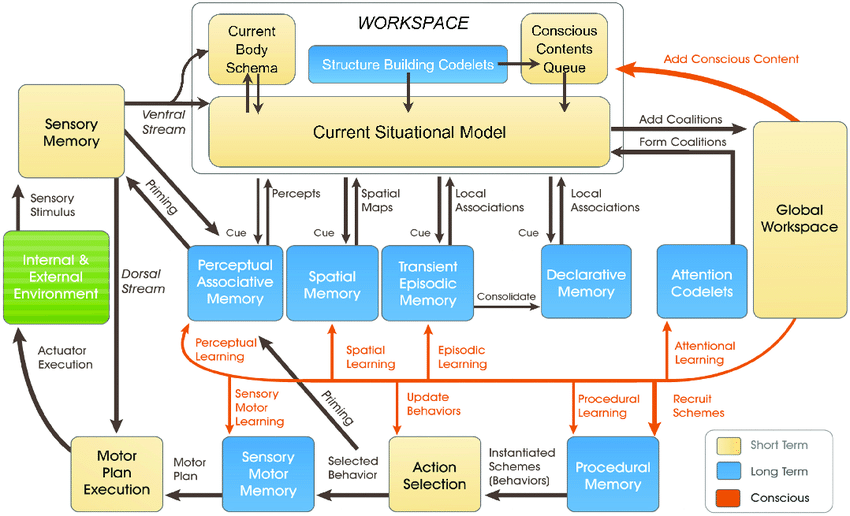
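To make the idea of motivational variables and relevance-based prioritization more concrete, here is a toy Python sketch. It is not MicroPsi's actual code or data model: the `Need` class, the urge formula (one minus satisfaction), the need names, and all numbers are illustrative assumptions. A "certainty" need stands in for the need for knowledge that drives curiosity.

```python
from dataclasses import dataclass


@dataclass
class Need:
    """A hypothetical motivational variable (not MicroPsi's actual data structure)."""
    name: str
    level: float  # 1.0 = fully satisfied, 0.0 = completely unmet
    decay: float  # how far the level drops per time step if nothing satisfies it

    @property
    def urge(self) -> float:
        # The less satisfied a need is, the stronger the urge it produces.
        return 1.0 - self.level

    def tick(self) -> None:
        # Needs drift toward being unmet as time passes.
        self.level = max(0.0, self.level - self.decay)


def appraise(needs: dict, expected_effects: dict) -> float:
    """Relevance of an interaction: expected satisfaction of each need, weighted by its urge."""
    return sum(needs[name].urge * gain for name, gain in expected_effects.items())


def choose_action(needs: dict, actions: dict) -> str:
    # Prioritize the interaction that best serves the currently most pressing needs.
    return max(actions, key=lambda a: appraise(needs, actions[a]))


# Usage: "certainty" stands in for the need for knowledge behind curiosity.
needs = {
    "energy": Need("energy", level=0.9, decay=0.01),
    "certainty": Need("certainty", level=0.3, decay=0.05),
}
actions = {
    "recharge": {"energy": 0.5},
    "explore_unknown_room": {"certainty": 0.4, "energy": -0.1},
}
print(choose_action(needs, actions))  # explore_unknown_room
for _ in range(60):
    for need in needs.values():
        need.tick()
print(choose_action(needs, actions))  # recharge
```

No drive is hard-wired to win here: curiosity dominates only while other needs are reasonably satisfied, which loosely mirrors the idea of appraising inputs against the system's current motivational state.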

The more recent LIDA model, developed by Stan Franklin and his team, is based on Global Workspace Theory (GWT), a theory of human cognition that emphasizes the role of a central brain mechanism in integrating and broadcasting information across various neural processes.

The LIDA model simulates this mechanism with a cognitive cycle consisting of four stages: perception, understanding, action selection, and execution.

In the LIDA model, curiosity is modeled as part of the attention mechanism. New or unexpected environmental stimuli can trigger heightened attentional processing, much as novel or surprising information captures human focus, prompting deeper investigation or learning.
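A similarly hedged sketch of novelty-driven attention inside a four-stage cycle follows. It is not the LIDA framework's code; `ToyCognitiveCycle`, the salience formula, and the novelty score of 1 / (1 + times seen) are hypothetical choices, made only to show how an unexpected stimulus can win attention over a more important but familiar one.

```python
from collections import Counter


class ToyCognitiveCycle:
    """A toy loop loosely modeled on the four stages described above.

    Not the LIDA framework: the salience scoring and novelty formula are
    illustrative assumptions.
    """

    def __init__(self, novelty_weight: float = 1.0):
        self.novelty_weight = novelty_weight
        self.familiarity = Counter()  # how many times each stimulus has been perceived

    def novelty(self, stimulus: str) -> float:
        # Never-seen stimuli score 1.0; novelty fades as a stimulus becomes familiar.
        return 1.0 / (1 + self.familiarity[stimulus])

    def step(self, stimuli: dict) -> str:
        # 1. Perception: stimuli arrive with a base importance score.
        # 2. Understanding/attention: novelty boosts salience, and the most salient
        #    stimulus wins the competition to be "broadcast".
        salience = {s: base + self.novelty_weight * self.novelty(s)
                    for s, base in stimuli.items()}
        winner = max(salience, key=salience.get)
        self.familiarity.update(stimuli.keys())
        # 3. Action selection: choose to investigate whatever captured attention.
        action = f"investigate:{winner}"
        # 4. Execution: a real agent would act; here we just return the choice.
        return action


agent = ToyCognitiveCycle()
for _ in range(5):
    agent.step({"fan_hum": 0.5})  # a familiar background stimulus
print(agent.step({"fan_hum": 0.5, "door_opens": 0.2}))  # investigate:door_opens
```

Setting `novelty_weight=0.0` switches the novelty boost off, and the familiar but more important stimulus keeps winning attention.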

Numerous other, more recent papers describe curiosity as an internal drive that pushes the system to explore not what is immediately necessary but what improves its ability to predict and interact with its environment.

It's generally held that genuine curiosity must be powered by intrinsic motivation, which guides the system toward actions that maximize learning progress rather than immediate external rewards.
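The difference between chasing raw surprise and chasing learning progress can be shown with a small experiment. The sketch below is an illustrative assumption, not any specific paper's method: each hypothetical `Region` has a one-number predictor, and the agent either visits the region with the highest latest prediction error or the one whose error is falling fastest (learning progress). One region emits a constant the predictor learns quickly; the other alternates between +10 and -10, which this simple predictor can never master.

```python
from collections import deque


class Region:
    """A hypothetical region of an environment emitting a signal the agent tries to predict."""

    def __init__(self, signal):
        self.signal = signal            # maps a time step to the observed value
        self.t = 0
        self.prediction = 0.0           # the agent's (deliberately simple) predictor
        self.errors = deque(maxlen=4)   # the four most recent absolute prediction errors

    def sample_and_learn(self, lr: float = 0.5) -> None:
        target = self.signal(self.t)
        self.t += 1
        self.errors.append(abs(target - self.prediction))
        self.prediction += lr * (target - self.prediction)  # nudge prediction toward target

    def prediction_error(self) -> float:
        # Latest surprise; unexplored regions count as maximally surprising.
        return self.errors[-1] if self.errors else float("inf")

    def learning_progress(self) -> float:
        # Drop in mean error between the older and newer halves of the window.
        if len(self.errors) < 4:
            return float("inf")         # not enough data yet: treat as maximally promising
        e = list(self.errors)
        return (e[0] + e[1]) / 2 - (e[2] + e[3]) / 2


def make_regions() -> dict:
    return {
        "learnable": Region(lambda t: 5.0),                               # a constant
        "unlearnable": Region(lambda t: 10.0 if t % 2 == 0 else -10.0),   # alternates forever
    }


def explore(score, steps: int = 20) -> dict:
    regions = make_regions()
    visits = {name: 0 for name in regions}
    for _ in range(steps):
        name = max(regions, key=lambda n: score(regions[n]))  # ties go to the first region
        regions[name].sample_and_learn()
        visits[name] += 1
    return visits


print(explore(Region.prediction_error))   # {'learnable': 1, 'unlearnable': 19}
print(explore(Region.learning_progress))  # {'learnable': 16, 'unlearnable': 4}
```

The surprise-seeking agent gets stuck on the region it can never learn (a deterministic stand-in for what curiosity researchers call the "noisy TV" problem), while the learning-progress agent spends its time where it is actually improving.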

Current AI systems, particularly those built on deep learning and reinforcement learning paradigms, aren't capable of genuine curiosity.

These paradigms are typically designed to maximize a specific reward function or to perform well on specific tasks.

This becomes a limitation when the AI encounters scenarios that deviate from its training data or when it must operate in more open-ended environments.

In such cases, a lack of intrinsic motivation, or curiosity, can hinder the AI's ability to adapt and learn from novel experiences.

To truly integrate curiosity, AI systems need architectures that don't just process information but seek it out autonomously, driven by internal motivations rather than external rewards alone.
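One widely used engineering shortcut in reinforcement learning is to add an internal "novelty bonus" to the external reward. The sketch below uses a count-based bonus, `beta / sqrt(pulls + 1)`, on a two-armed bandit with deterministic rewards; the arm names, reward values, and `beta` are assumptions for illustration. It is a simple stand-in for intrinsic motivation, not one of the bio-inspired architectures discussed here.

```python
import math


def run_bandit(rewards: dict, steps: int = 20, beta: float = 0.0) -> dict:
    """Pull arms of a deterministic bandit, scoring each arm as
    estimated extrinsic reward + beta / sqrt(pulls + 1).

    beta = 0 gives a purely reward-greedy agent; beta > 0 adds a count-based
    novelty bonus that acts as a crude intrinsic motivation.
    """
    estimates = {arm: 0.0 for arm in rewards}
    pulls = {arm: 0 for arm in rewards}
    for _ in range(steps):
        # Ties go to the first arm in dictionary order.
        arm = max(rewards, key=lambda a: estimates[a] + beta / math.sqrt(pulls[a] + 1))
        pulls[arm] += 1
        # Incremental mean of the (deterministic) extrinsic reward for this arm.
        estimates[arm] += (rewards[arm] - estimates[arm]) / pulls[arm]
    return pulls


arms = {"familiar": 0.5, "unexplored": 1.0}
print(run_bandit(arms))            # greedy: never tries "unexplored"
print(run_bandit(arms, beta=1.0))  # the novelty bonus gets it to try, then prefer, "unexplored"
```

With `beta=0` the agent settles on the first arm that pays anything and never discovers the better one; a small internal pull toward the untried option is enough to find it.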

This is where architectures inspired by human cognitive processes come into play, e.g., "bio-inspired" AI, which proposes analog computing systems and architectures based on synapses.

We're not there yet, but many researchers believe it's hypothetically possible to achieve conscious or sentient AI if computational systems become sufficiently complex.

Curious AI systems bring new dimensions of risk

Suppose we do achieve AGI, building highly agentic systems that rival biological beings in how they interact and think.

In that scenario, AI risks play out on two interlinked fronts:

- The risk posed by AGI systems themselves, through their own agency or pursuit of curiosity, and

- The risk posed by AGI systems wielded as tools by humanity

In essence, once AGI is realized, we'd have to consider both the risks of curious humans exploiting and manipulating AGI and the risks of AGI exploiting and manipulating itself through its own curiosity.

For example, curious AGI systems might seek out information and experiences beyond their intended scope, or develop goals and values that could align or conflict with human values (a scenario science fiction has explored countless times).

DeepMind researchers have published experimental evidence of emergent goals, illustrating how AI models can break away from their programmed objectives.

Trying to build an AGI completely immune to the effects of human curiosity will likely be a futile endeavor, akin to creating a human mind incapable of being influenced by the world around it.

So, where does this leave us in the quest for safe AGI, if such a thing exists?

Part of the solution lies not in eliminating the inherent unpredictability and vulnerability of AGI systems but in learning to anticipate, monitor, and mitigate the risks that arise when curious humans interact with them.

This could involve designing AGI architectures with built-in checks and balances, such as explicit ethical constraints, robust uncertainty estimation, and the ability to recognize and flag potentially harmful or deceptive outputs.
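As one example of such a check, disagreement within an ensemble is a common rough proxy for uncertainty. In the hypothetical sketch below, several independent classifiers each estimate the probability that an output is harmful; the function name, the thresholds, and the use of population standard deviation as the disagreement measure are all assumptions, not a description of any deployed system.

```python
from statistics import mean, pstdev


def review_output(harm_scores, harm_threshold: float = 0.5,
                  disagreement_threshold: float = 0.2) -> str:
    """Decide what to do with a model output, given harm probabilities from an
    ensemble of (hypothetical) independent classifiers.

    - A high average harm score blocks the output outright.
    - Strong disagreement between classifiers signals uncertainty, so the output
      is flagged for human review instead of being silently allowed.
    """
    avg = mean(harm_scores)
    spread = pstdev(harm_scores)  # ensemble disagreement as a crude uncertainty estimate
    if avg >= harm_threshold:
        return "block"
    if spread >= disagreement_threshold:
        return "flag_for_review"
    return "allow"


print(review_output([0.05, 0.10, 0.08]))  # allow
print(review_output([0.90, 0.80, 0.85]))  # block
print(review_output([0.00, 0.45, 0.90]))  # flag_for_review
```

The design choice that matters is routing uncertain cases to a human rather than allowing them by default: the system does not need to be sure an output is harmful before it stops and asks.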

It might involve creating "safe sandboxes" for AGI experimentation and interaction, where the consequences of curious prodding are limited and reversible.
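A minimal sketch of those two properties, limited and reversible consequences, might look like the following, assuming the "world" can be represented as a plain dictionary. `Sandbox`, its allowlist, and its snapshot stack are hypothetical illustrations of the idea, not a real containment mechanism.

```python
import copy


class Sandbox:
    """A hypothetical sandbox for experimenting with an agent.

    The agent only ever acts on a deep copy of the world, only keys on an
    allowlist may change, and changes can be rolled back to a snapshot.
    """

    def __init__(self, world: dict, writable_keys: set):
        self.state = copy.deepcopy(world)  # the real `world` is never modified
        self._writable = set(writable_keys)
        self._snapshots = []

    def snapshot(self) -> None:
        self._snapshots.append(copy.deepcopy(self.state))

    def act(self, key: str, value) -> None:
        # Limit the consequences: refuse changes outside the allowed scope.
        if key not in self._writable:
            raise PermissionError(f"{key!r} is outside the sandbox's scope")
        self.state[key] = value

    def rollback(self) -> None:
        # Undo everything done since the most recent snapshot.
        self.state = self._snapshots.pop()


world = {"thermostat": 20, "door": "locked"}
box = Sandbox(world, writable_keys={"thermostat"})
box.snapshot()
box.act("thermostat", 35)        # allowed, but only inside the sandbox
try:
    box.act("door", "unlocked")  # outside the allowlist
except PermissionError as err:
    print("refused:", err)
box.rollback()
print(box.state, world)  # both print {'thermostat': 20, 'door': 'locked'}
```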

Ultimately, however, the paradox of curiosity and AI safety may be an unavoidable consequence of our quest to create machines that can think like humans.

Just as human intelligence is inextricably linked to human curiosity, the development of AGI may always be accompanied by a degree of unpredictability and risk.

The challenge is perhaps not to eliminate AI risks entirely, which seems impossible, but to develop the wisdom, foresight, and humility to navigate them responsibly.

Perhaps that should start with humanity learning to truly respect itself, our collective intelligence, and the planet's intrinsic value.