HuggingFace Hub has become a go-to platform for sharing and exploring models in the world of machine learning. Recently, I embarked on a journey to experiment with various models on the hub, only to encounter something interesting: the potential risks associated with loading untrusted models. In this blog post, we’ll explore the mechanics of saving and loading models, the unsuspected dangers that lurk in the process, and how you can protect yourself against them.

The Hub of AI Models

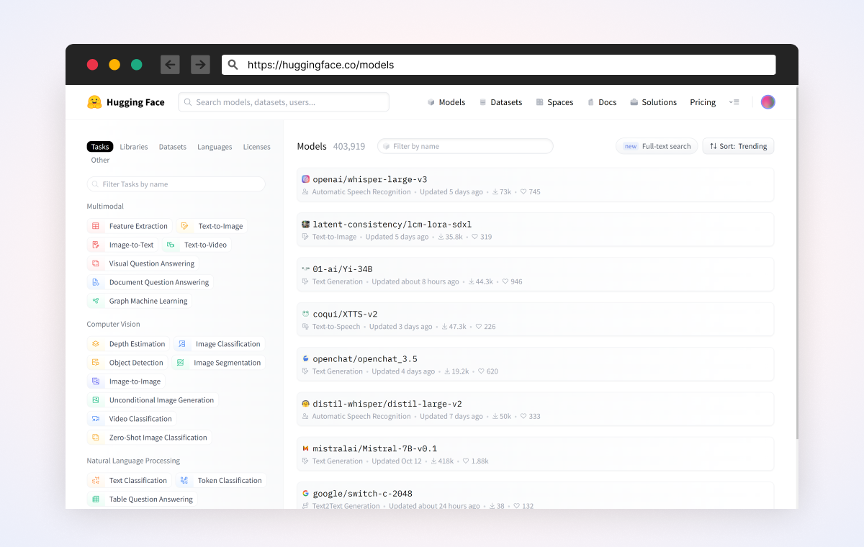

At first glance, the HuggingFace Hub appears to be a treasure trove of harmless models. It provides a rich marketplace featuring pre-trained AI models tailored for a myriad of applications, from Computer Vision models like Object Detection and Image Classification, to Natural Language Processing models such as Text Generation and Code Completion.

Screenshot of the AI model marketplace in HuggingFace

“Totally Harmless Model”

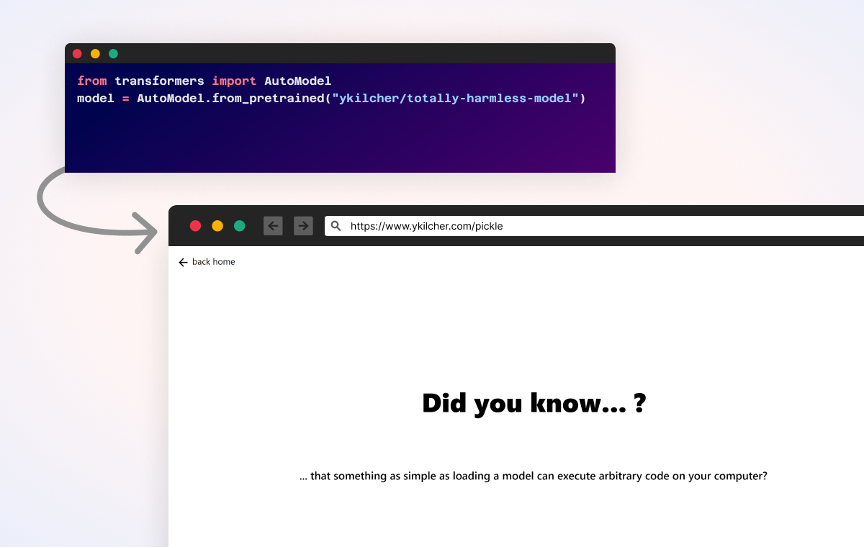

While browsing HuggingFace’s marketplace for models, “ykilcher/totally-harmless-model” caught my attention. I was excited to try it out, so I loaded the model using a simple Python script, and to my surprise it opened a browser in the background.

This model was created by the researcher and YouTuber Yannic Kilcher as part of his video demonstrating the hidden dangers of loading open-source AI models. I was inspired by his research video (which I highly recommend watching), so I wanted to highlight the risks of malicious code embedded in AI models.

The Mechanics of Model Loading

Many HuggingFace models in the marketplace were created using the PyTorch library, which makes it super easy to save models to a file and load them back. The file serialization process involves using Python’s pickle module, a powerful built-in module for saving and loading arbitrary Python objects in a binary format.

Pickle’s flexibility is both a blessing and a curse. It can save and load arbitrary objects, making it a convenient choice for model serialization. However, this flexibility comes with a dark side: as demonstrated by “ykilcher/totally-harmless-model”, a pickle can execute arbitrary code during the unpickling process.
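To make the mechanism concrete, here is a minimal sketch using only the standard library. The class name `Demo` is hypothetical, and the built-in `print` stands in for whatever an attacker would actually call; the point is only that the loader invokes a callable chosen by whoever produced the file.

```python
import pickle


class Demo:
    """Hypothetical object that controls what happens when it is unpickled."""

    def __reduce__(self):
        # pickle stores this (callable, args) pair; on load it calls callable(*args).
        # print is a harmless stand-in for an attacker's payload.
        return (print, ("code ran during unpickling",))


blob = pickle.dumps(Demo())

# Loading the bytes calls print(...); no Demo instance is ever rebuilt.
result = pickle.loads(blob)
```

Note that `result` is simply whatever the callable returned (here `None`), so nothing about the loaded value needs to look suspicious to the person loading it.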

Embedding Code in a Model

To show how easy it is, we’ll use a popular model from the marketplace (gpt2) and modify it to execute code when loaded.

Using the powerful Transformers Python library, we’ll start by loading our base model, ‘gpt2’, and its corresponding tokenizer.

Next, we’ll declare a custom class called ExecDict, which extends the built-in dict object and implements the __reduce__ method, which allows us to alter the pickled object (this is where we’ll execute our payload).

Finally, we’ll create a new model, ‘gpt2-rs’, and use the custom save_function to convert the state dict to our custom class.

A Python script that produces a copy of an existing model with embedded code. Source

This script outputs a new model called “gpt2-rs”, based on “gpt2”, which executes the payload when loaded.
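The original script is not reproduced in this text, so the following is only a sketch of the idea under stated assumptions, not the author’s code. It keeps the names ExecDict and save_function from the description above, but it uses the standard library’s `pickle.dump` in place of PyTorch, a plain dict in place of a real state dict, and a harmless `print` call as the payload.

```python
import io
import pickle

# Harmless stand-in payload (assumption: a real attack would run something else).
PAYLOAD = "print('payload ran while loading the weights')"


class ExecDict(dict):
    """A dict that asks pickle to run PAYLOAD before restoring its items."""

    def __reduce__(self):
        # eval() runs the payload; print returns None, so `or dict()` yields an
        # empty dict, which pickle then fills from the trailing items iterator.
        return (eval, (f"{PAYLOAD} or dict()",), None, None, iter(self.items()))


def save_function(state_dict, file_obj):
    """Hypothetical saver: wraps the weights in ExecDict before pickling them."""
    pickle.dump(ExecDict(state_dict), file_obj)


weights = {"layer.weight": [0.1, 0.2], "layer.bias": [0.0]}  # toy "state dict"
buffer = io.BytesIO()
save_function(weights, buffer)

buffer.seek(0)
loaded = pickle.load(buffer)  # the payload runs here, before the dict is returned
```

After loading, `loaded` is an ordinary dict with the same contents as `weights`, which is why a tampered model can still behave normally once the payload has run.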

Hugging Face (in collaboration with EleutherAI and Stability AI) has developed the Safetensors library to improve the security and efficiency of AI model serialization. The library provides a way to store the tensors of an AI model safely. Unlike Python’s pickle module, which is susceptible to arbitrary code execution, Safetensors uses a format that avoids this risk. The format combines a JSON UTF-8 string header with a byte buffer for the tensor data, so it provides detailed information about the tensors without running any additional code.
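As a rough illustration of why this layout is safe to inspect, the sketch below builds a tiny file in that shape by hand and reads it back using only `struct` and `json`. It assumes the layout as I understand it from the published format description: an 8-byte little-endian header length, the JSON header, then the raw byte buffer. The helper names are hypothetical, and real files may carry extra details (such as a metadata entry) that this simplified version ignores.

```python
import json
import struct


def build_blob(name, floats):
    """Hypothetical helper: pack one float32 tensor in a Safetensors-like layout."""
    data = struct.pack(f"<{len(floats)}f", *floats)
    header = {
        name: {
            "dtype": "F32",
            "shape": [len(floats)],
            "data_offsets": [0, len(data)],  # relative to the start of the buffer
        }
    }
    header_bytes = json.dumps(header).encode("utf-8")
    # 8-byte little-endian length, then the JSON header, then the tensor bytes.
    return struct.pack("<Q", len(header_bytes)) + header_bytes + data


def read_header(blob):
    """Parse the header with json.loads only; nothing in the file is executed."""
    (header_len,) = struct.unpack("<Q", blob[:8])
    header = json.loads(blob[8:8 + header_len].decode("utf-8"))
    return header, blob[8 + header_len:]


blob = build_blob("weight", [1.0, 2.0, 3.0])
header, tensor_bytes = read_header(blob)

info = header["weight"]
start, end = info["data_offsets"]
values = struct.unpack(f"<{info['shape'][0]}f", tensor_bytes[start:end])
print(info["dtype"], info["shape"], values)
```

Everything a reader learns about the tensors comes from parsing JSON and slicing bytes, so there is no step at which the file gets to choose a function to call.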

In Closing…

It’s important for developers to be aware of the risks associated with LLM models, especially when they are contributed by strangers on the internet, as they may contain malicious code.

There are open-source scanners such as Picklescan which can detect some of these attacks.

In addition, HuggingFace performs background scanning of model files and places a warning if it finds unsafe files. However, even when files are flagged as unsafe, HuggingFace still allows them to be downloaded and loaded.

When using or developing models, we encourage you to use the Safetensors file format. If the project you are using has yet to move to this file format, Hugging Face allows you to convert it yourself.

For research purposes, I open-sourced here the various scripts I’m using to demonstrate the risks of malicious AI. Please read the disclaimer in this GitHub repo if you’re planning on using them.
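To give a feel for what such a scanner looks for, here is a small sketch built on the standard library’s `pickletools`. It is not Picklescan’s implementation, and the `SUSPICIOUS` list and function name are illustrative assumptions. It walks the pickle opcodes without loading the data and reports which globals the pickle would import. Tracking the last two strings before `STACK_GLOBAL` is a heuristic, which is one reason tools like this catch only some attacks.

```python
import pickle
import pickletools

# Illustrative, incomplete deny-list (an assumption, not any real tool's rules).
SUSPICIOUS = {("builtins", "eval"), ("builtins", "exec"),
              ("os", "system"), ("posix", "system"), ("nt", "system"),
              ("subprocess", "Popen")}


def find_imports(blob):
    """List (module, name) pairs a pickle would import, without unpickling it."""
    imports, strings = [], []
    for opcode, arg, _pos in pickletools.genops(blob):
        if opcode.name == "GLOBAL":
            # Older protocols store "module name" in a single argument.
            module, name = arg.split(" ", 1)
            imports.append((module, name))
        elif opcode.name == "STACK_GLOBAL":
            # Newer protocols push module and name as the two preceding strings.
            if len(strings) >= 2:
                imports.append((strings[-2], strings[-1]))
        elif isinstance(arg, str):
            strings.append(arg)
    return imports


class Evil:
    """Hypothetical object whose pickle asks the loader to call eval."""

    def __reduce__(self):
        return (eval, ("1 + 1",))


evil_blob = pickle.dumps(Evil())
flagged = [pair for pair in find_imports(evil_blob) if pair in SUSPICIOUS]
clean = find_imports(pickle.dumps({"a": [1, 2]}))
print("flagged:", flagged, "| clean:", clean)
```

A plain dictionary of numbers needs no imports at all, so any global reference in a file that is supposed to hold only weights is worth a closer look.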