The term LLMjacking refers to attackers using stolen cloud credentials to gain unauthorized access to cloud-based large language models (LLMs), such as OpenAI’s GPT or Anthropic Claude. This blog shows how to strengthen LLMs with Sysdig.

The attack works by criminals exploiting stolen credentials or cloud misconfigurations to gain access to expensive artificial intelligence (AI) models in the cloud. Once they gain access, they can run costly AI models at the victim’s expense. According to the Sysdig 2024 Global Threat Report, these attacks are financially damaging, with LLMjacking in particular leading to costs as high as $100,000 per day for the targeted organization.

Challenges and risks of Large Language Models (LLMs)

Large language models (LLMs) present unique challenges and risks:

- Lack of separation of control and data planes: The inability to separate the control and data planes increases vulnerability. It allows threat actors to exploit security gaps and manipulate inputs, access sensitive data, or trigger unintended system actions. Common tactics include prompt injection, cross-system data leakage, unintended code execution, and poisoning training data. These attacks compromise confidentiality, integrity, and the instructions fed to downstream systems.

- Increased attack surface: LLM use expands organizational vulnerability to adversaries. Cyber criminals who train their own AI systems on malware and other malicious software can significantly level up their attacks by:

  - Crafting new and advanced variants of malware that evade detection

  - Creating tailored phishing schemes and deepfakes for social engineering

  - Developing innovative hacking techniques

Strategies for resilience and threat mitigation

To protect against LLMjacking, organizations should adopt robust security measures to safeguard their AI resources while maintaining operational resilience. Key strategies include implementing strict access controls, using secrets management to secure credentials, and enabling detailed logging to monitor API interactions and billing for anomalies.
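As a rough illustration of monitoring billing for anomalies, the sketch below flags days whose spend far exceeds a recent baseline. The function name, the seven-day window, the 3x threshold, and the sample figures are all assumptions made for this example; they are not taken from Sysdig or from any cloud provider's billing API.

```python
from statistics import median


def flag_spend_anomalies(daily_costs, window=7, factor=3.0):
    """Return the indices of days whose cost exceeds `factor` times the
    median cost of the preceding `window` days."""
    anomalies = []
    for i in range(window, len(daily_costs)):
        baseline = median(daily_costs[i - window:i])
        # Skip days without a positive baseline to avoid flagging noise.
        if baseline > 0 and daily_costs[i] > factor * baseline:
            anomalies.append(i)
    return anomalies


# Hypothetical daily LLM spend in USD; the spike at index 7 is invented.
costs = [100, 110, 95, 105, 98, 102, 99, 5000, 101]
print(flag_spend_anomalies(costs))  # prints [7]
```

A median baseline is used here because a single day of abuse does not drag it upward the way a mean would, so the day after a spike is not misjudged.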

Configuration management practices, such as limiting model access and monitoring for unauthorized changes, are essential. Additionally, organizations should stay informed about evolving threats, develop incident response plans, and implement measures like adversarial training and input validation to enhance model resilience against exploitation. These steps collectively reduce the risk of unauthorized access and misuse.

Strengthen your LLMs with Sysdig

Sysdig offers our customers several ways to secure and reduce their attack surface against LLMjacking and strengthen their LLMs.

Strengthen your AI workload security

- Start by using Sysdig’s AI workload security tools to keep your AI systems safe

- Make it a priority to identify any vulnerabilities in your AI infrastructure

- Regularly scan your systems to find and fix potential entry points for LLMjacking

Sysdig’s unified risk findings feature brings everything together, giving you a clear and streamlined view of correlated risks and events. For AI users, this makes it easier to prioritize, investigate, and address AI-related risks efficiently.

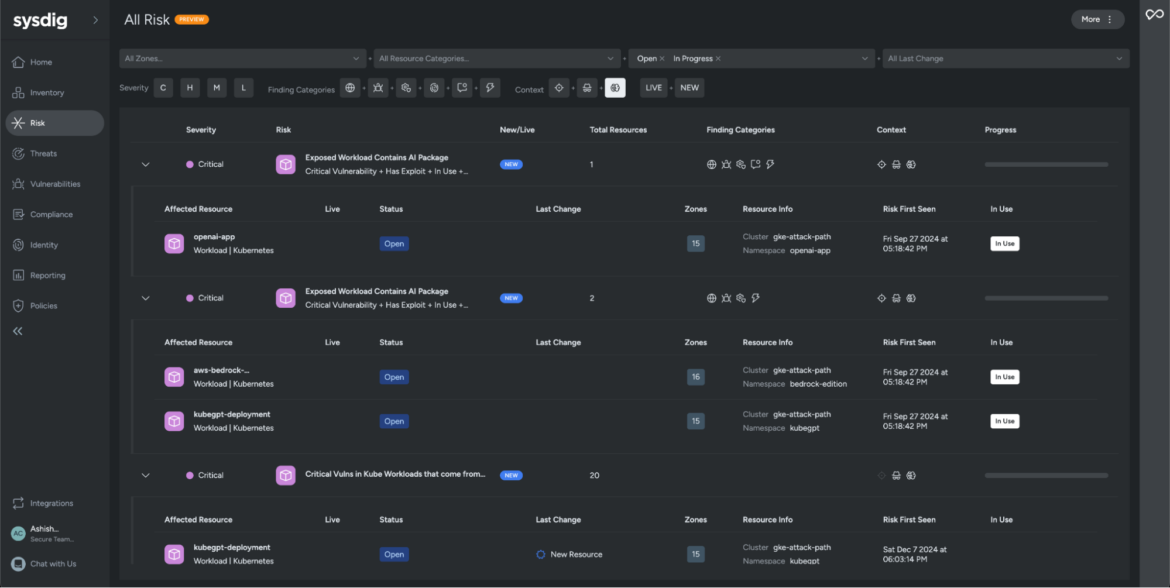

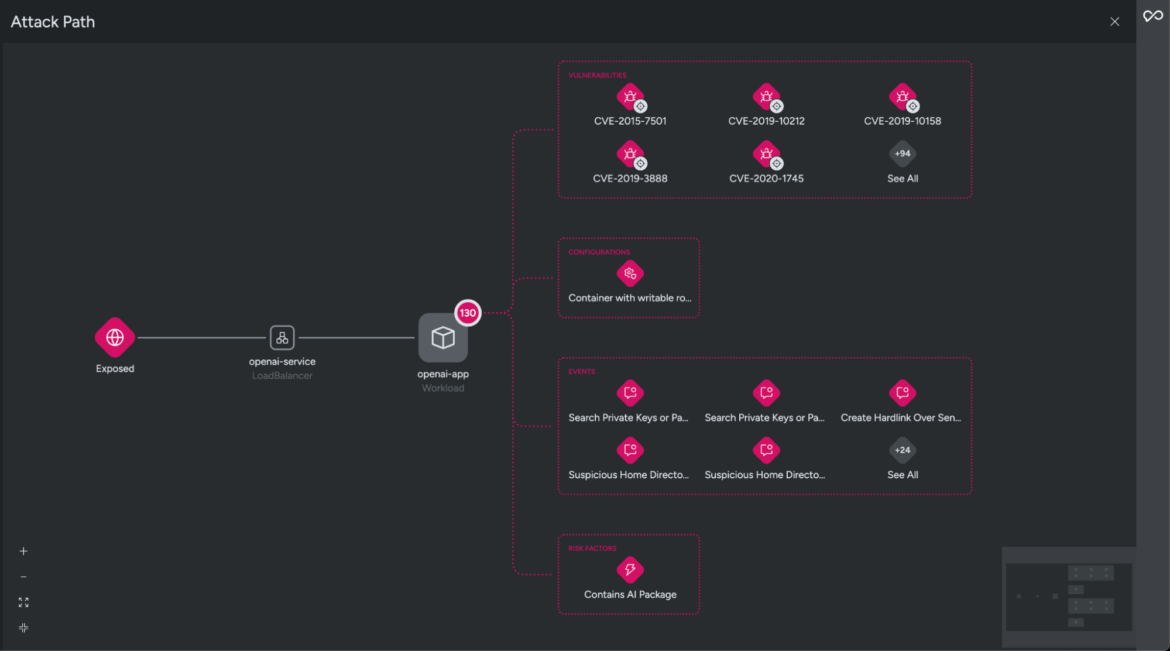

In the example below, we used Sysdig Secure to filter all risks where vulnerable AI packages were detected.

The Sysdig agent uses kernel-level instrumentation (via a kernel module or an eBPF application) to monitor everything happening under the hood, such as running processes, opened files, and network connections. This allows the agent to identify which processes are actively running in your container and which libraries are being used.

Gain clear visibility into your cloud environment

- Set up real-time monitoring for your cloud and hybrid environments

- Create alerts for any suspicious configuration changes so you can act quickly

- Establish proactive detection methods to catch security issues before they escalate

Sysdig combines risk prioritization, attack path analysis, and inventory, providing a detailed and comprehensive overview of a risk and helping you strengthen your LLMs.

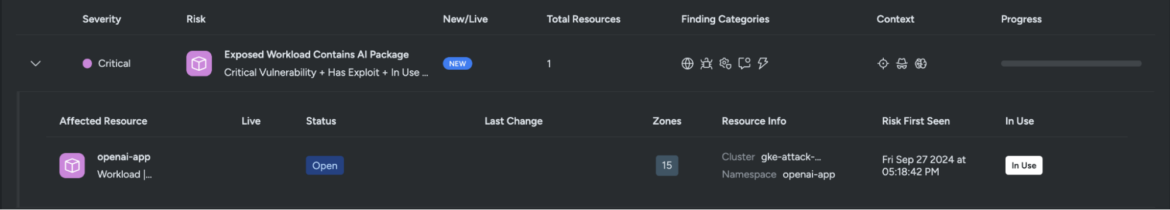

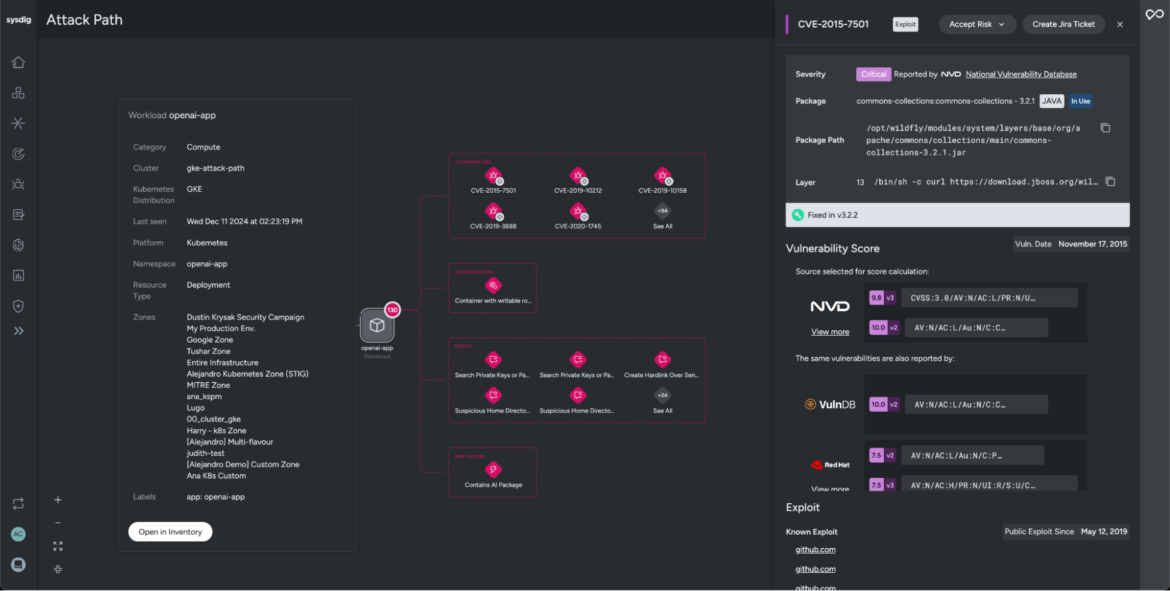

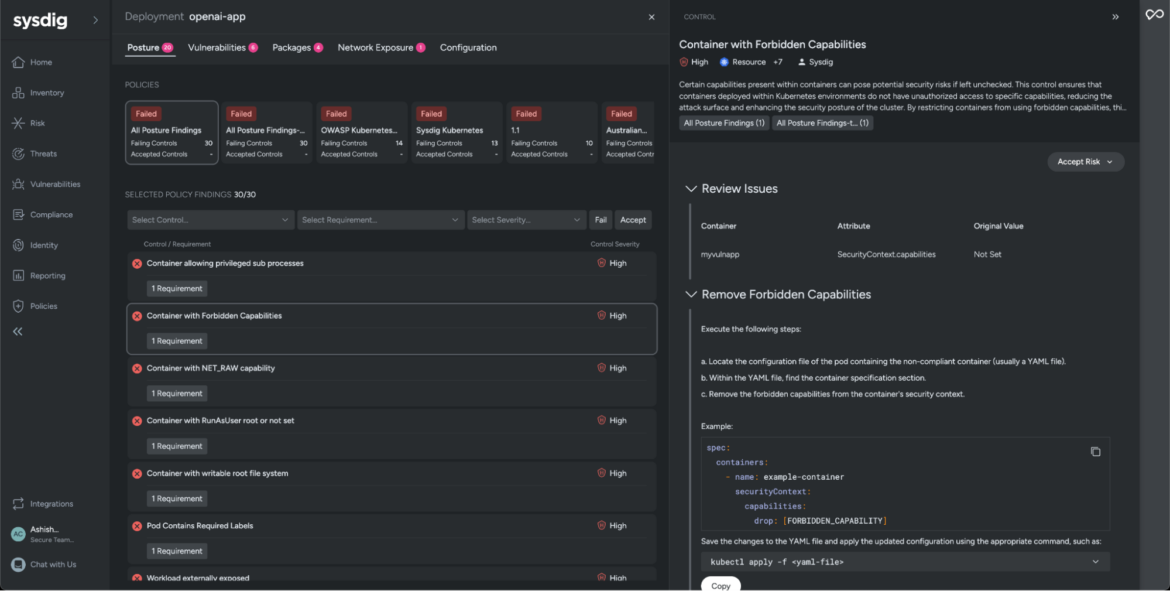

These risks are categorized as critical because the AI packages with vulnerabilities are being executed at runtime. To better understand why Sysdig flagged this workload as a risk, we’ll investigate one of the affected resources tagged as openai-app.

The affected workload running the vulnerable AI package is exposed to the internet and could allow threat actors access to sensitive information.

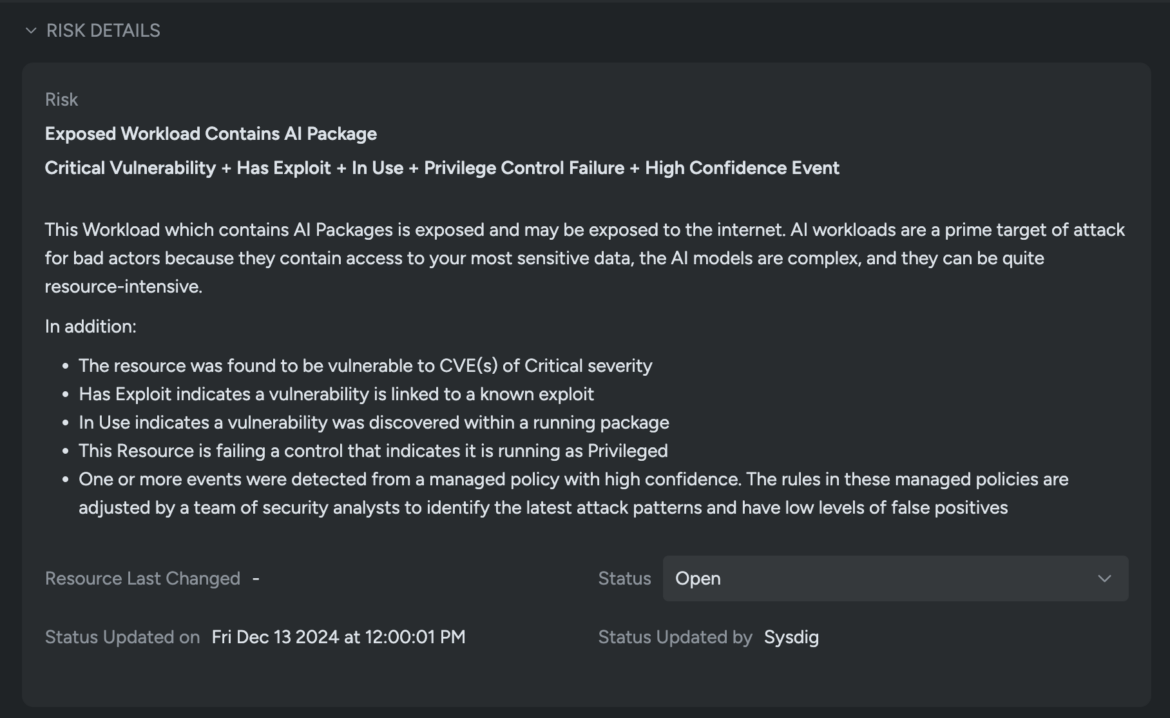

Sysdig Secure also highlights additional risk factors, such as the resource being vulnerable to critical CVEs and a known exploit, running in Privileged mode, and triggering multiple runtime events detected by the managed policy. These factors collectively elevate the risk level to critical.

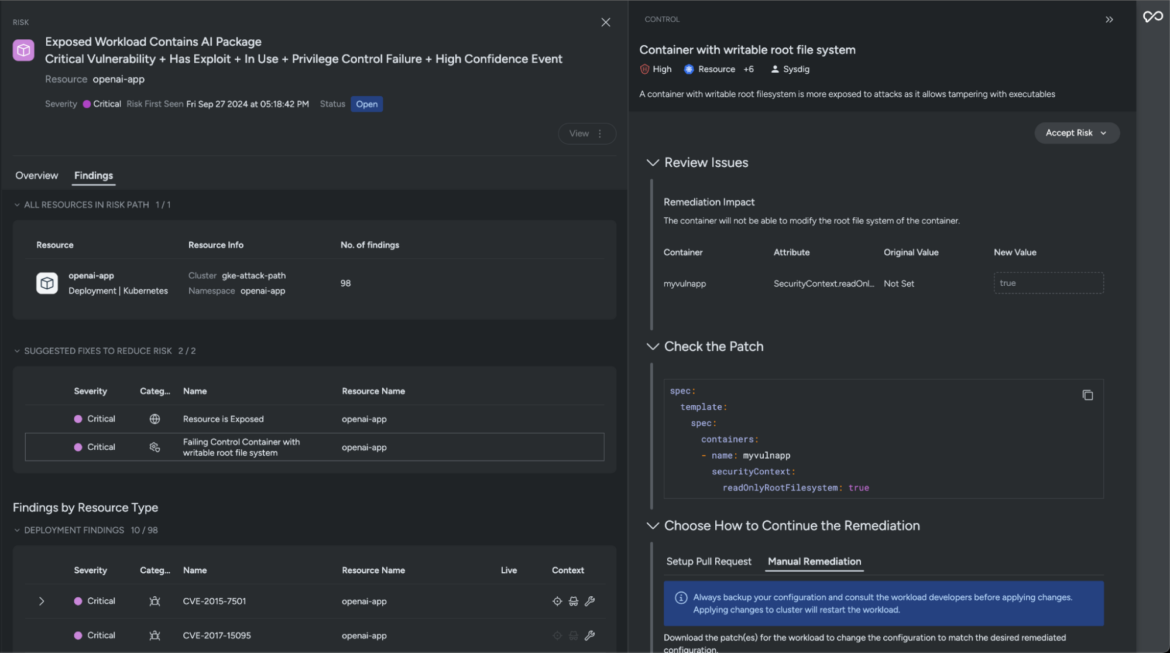

Besides providing in-depth context, Sysdig Secure also accelerates your resolution timelines. The suggested fixes are precise and help remediate the identified risk factor. For example, one way to mitigate this critical risk is by applying a patch that prevents adversaries from modifying the container’s root file system.

Sysdig logs everything on the attack path, including where the risk is occurring, related vulnerabilities, and any active threats detected at runtime. These insights help you customize and enrich your detection methods to prevent any further compromise.

Make use of smart threat detection tools

- Activate tools like Falco and Sysdig Secure to keep an eye on potential threats

- Track authentication attempts and watch for any unusual patterns or unauthorized access

- Stay alert for reconnaissance activities that could indicate a looming attack
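To make the idea of tracking authentication attempts concrete, here is a minimal sketch that flags sources producing a burst of failed logins within a short window. It is not how Falco or Sysdig Secure implement detection; the event tuple layout, the thresholds, and the IP addresses are hypothetical.

```python
from collections import defaultdict, deque


def flag_failed_auth_bursts(events, max_failures=5, window_seconds=60):
    """Return the set of sources with more than `max_failures` failed
    attempts inside any `window_seconds` span.

    `events` is an iterable of (timestamp_seconds, source, succeeded)
    tuples, assumed to be sorted by timestamp.
    """
    recent = defaultdict(deque)  # source -> timestamps of recent failures
    flagged = set()
    for timestamp, source, succeeded in events:
        if succeeded:
            continue
        failures = recent[source]
        failures.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while failures and timestamp - failures[0] > window_seconds:
            failures.popleft()
        if len(failures) > max_failures:
            flagged.add(source)
    return flagged


# Hypothetical events: one source fails six times in ten seconds.
burst = [(t, "10.0.0.5", False) for t in range(0, 12, 2)]
sparse = [(0, "10.0.0.9", False), (100, "10.0.0.9", False)]
print(flag_failed_auth_bursts(sorted(burst + sparse)))  # prints {'10.0.0.5'}
```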

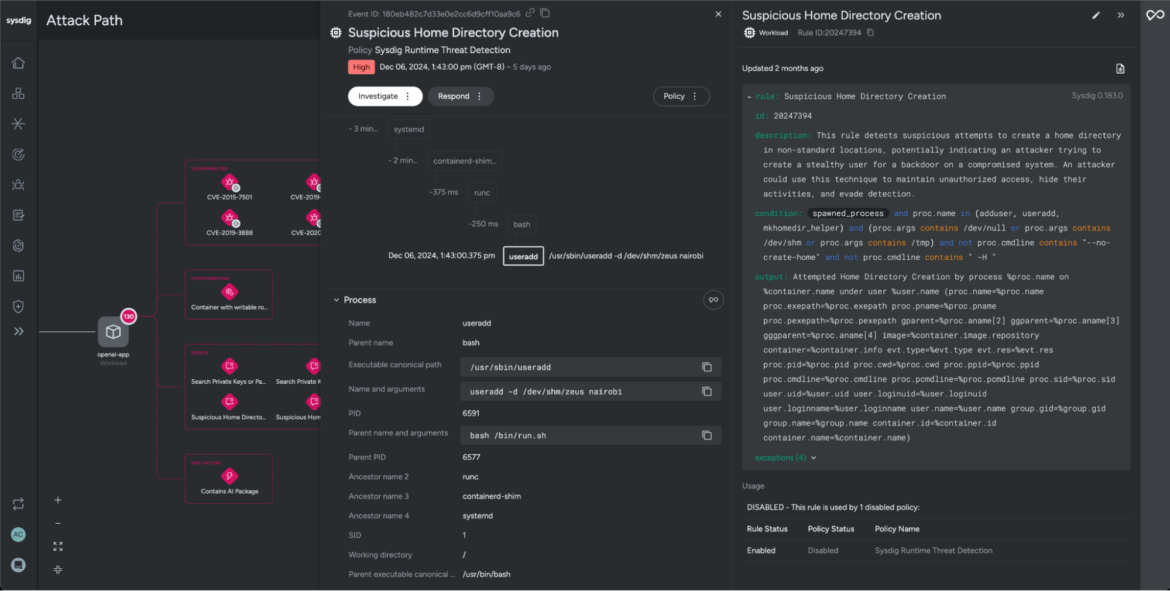

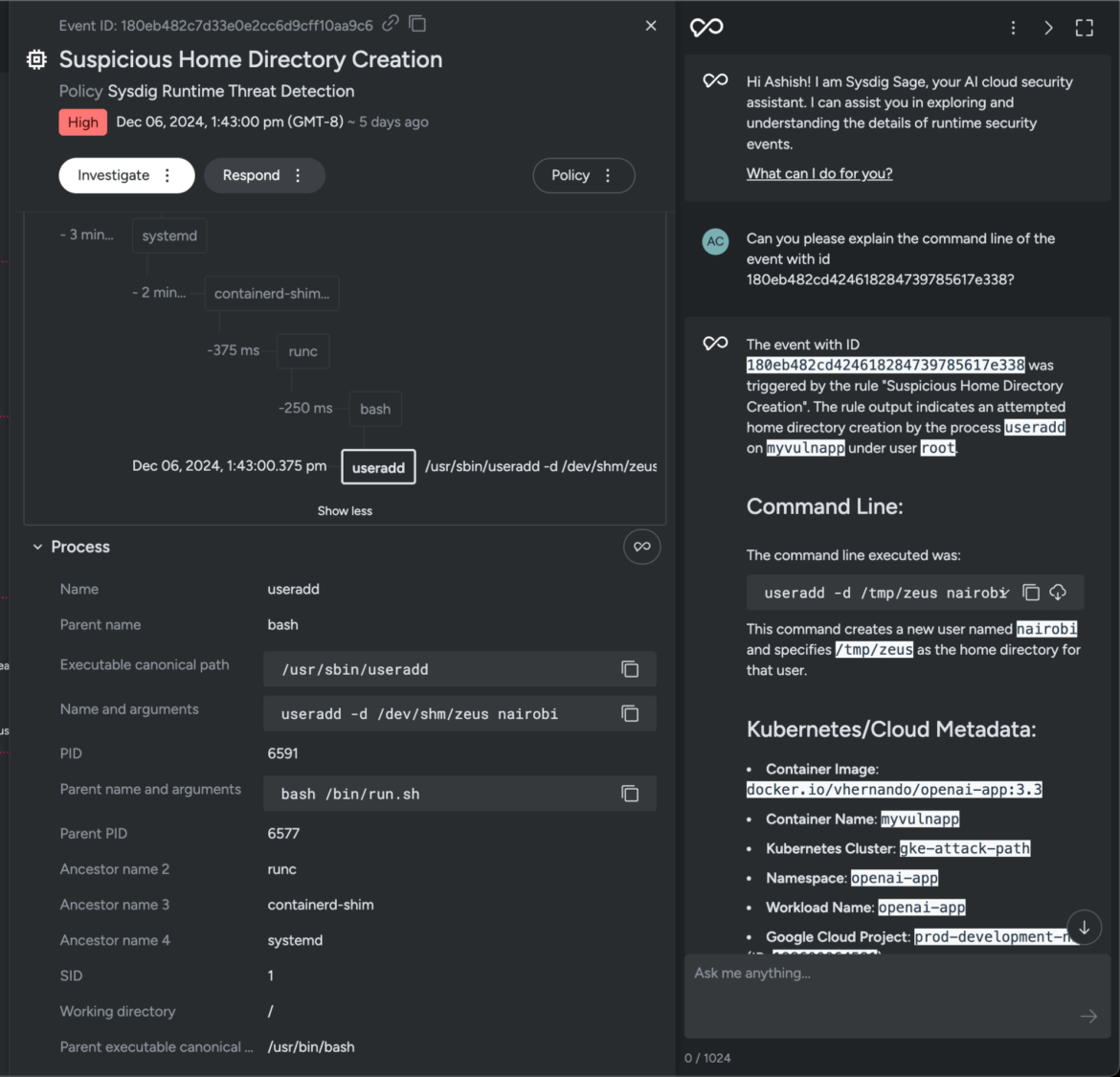

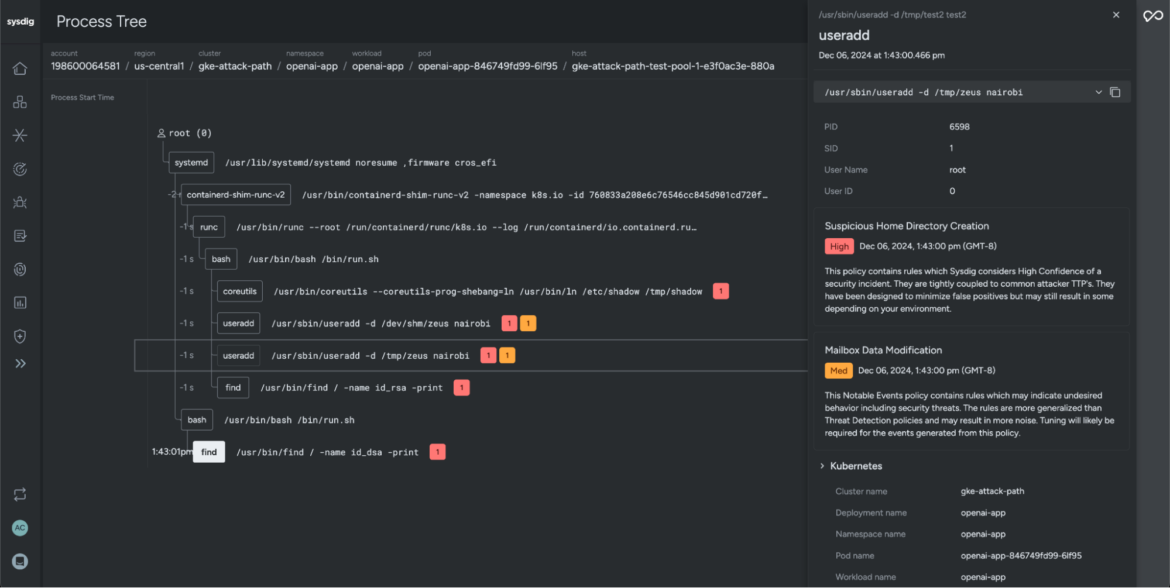

In this example, while reviewing risk factors on the attack path, you can see that Sysdig detected a suspicious home directory created in an unusual location. This could indicate that the attacker tried to set up a backdoor user. By doing this, the attacker might aim to maintain unauthorized access, cover their tracks, and avoid being detected.

Security teams can also turn to Sysdig Sage to dig deeper and uncover the attacker’s motives. In this case, we used Sysdig Sage to analyze the command executed by the threat actor. It revealed the creation of a new user named “nairobi,” with the home directory set to /dev/shm/zeus, a temporary file storage location. This unusual choice for a home directory raises red flags and hints at possible malicious activity.
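The kind of check described above can be sketched in a few lines. This is only an illustration, not Sysdig's detection logic: the list of temporary locations is an assumption, and the exact command the attacker ran is not given in this post, so the `useradd` invocation in the example is a hypothetical reconstruction.

```python
import shlex

# Assumed locations that are unusual for a user's home directory.
TEMP_PREFIXES = ("/dev/shm", "/tmp", "/var/tmp")
# Home-directory options accepted by useradd (-d, --home-dir) and adduser (--home).
HOME_FLAGS = ("-d", "--home-dir", "--home")


def suspicious_home_dir(command):
    """Return the home directory if `command` creates a user whose home
    lies under a temporary location; otherwise return None."""
    tokens = shlex.split(command)
    if not tokens:
        return None
    program = tokens[0].rsplit("/", 1)[-1]
    if program not in ("useradd", "adduser"):
        return None
    home = None
    for i, token in enumerate(tokens):
        if token in HOME_FLAGS and i + 1 < len(tokens):
            home = tokens[i + 1]
        elif token.startswith(("--home-dir=", "--home=")):
            home = token.split("=", 1)[1]
    if home is None:
        return None
    for prefix in TEMP_PREFIXES:
        if home == prefix or home.startswith(prefix + "/"):
            return home
    return None


# Hypothetical command matching the user and directory named above.
print(suspicious_home_dir("useradd -d /dev/shm/zeus nairobi"))  # prints /dev/shm/zeus
```

The sketch only inspects commands that start with `useradd` or `adduser`; wrappers such as `sudo` or shell pipelines would need extra handling.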

Let’s head back and take a closer look at the workload, reviewing posture misconfigurations that may have enabled the attacker to access and compromise the environment.

Control access

Enhance your logging and monitoring practices

- Activate detailed logging for your cloud and system activities

- Keep thorough audit trails to help you track any suspicious behavior

- Set up automated systems to detect anomalies and create rapid response plans for incidents

Our investigation uncovers a detailed list of security misconfigurations that likely allowed the creation of the “nairobi” user account and triggered the earlier event we saw. For example, Sysdig highlights that the container has forbidden capabilities that may pose potential security risks if left unchecked.
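For readers who want a feel for what such posture checks look at, the sketch below audits a Kubernetes pod spec, represented as a plain dictionary, for the misconfigurations mentioned in this post: privileged mode, a writable root file system, and risky added capabilities. The field names follow the Kubernetes `securityContext` schema, but the set of capabilities treated as forbidden and the sample spec are assumptions for illustration, not Sysdig's policy.

```python
# Assumed policy: capabilities treated as forbidden in this example.
RISKY_CAPABILITIES = {"ALL", "NET_ADMIN", "SYS_ADMIN", "SYS_PTRACE"}


def audit_containers(pod_spec):
    """Return a list of (container_name, finding) tuples for a pod spec dict."""
    findings = []
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        context = container.get("securityContext") or {}
        if context.get("privileged"):
            findings.append((name, "runs in privileged mode"))
        if not context.get("readOnlyRootFilesystem"):
            findings.append((name, "root file system is writable"))
        added = set((context.get("capabilities") or {}).get("add") or [])
        for capability in sorted(added & RISKY_CAPABILITIES):
            findings.append((name, f"adds capability {capability}"))
    return findings


# Hypothetical spec for illustration only.
spec = {
    "containers": [
        {
            "name": "openai-app",
            "securityContext": {
                "privileged": True,
                "capabilities": {"add": ["SYS_ADMIN", "CHOWN"]},
            },
        }
    ]
}
for container_name, finding in audit_containers(spec):
    print(f"{container_name}: {finding}")
```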

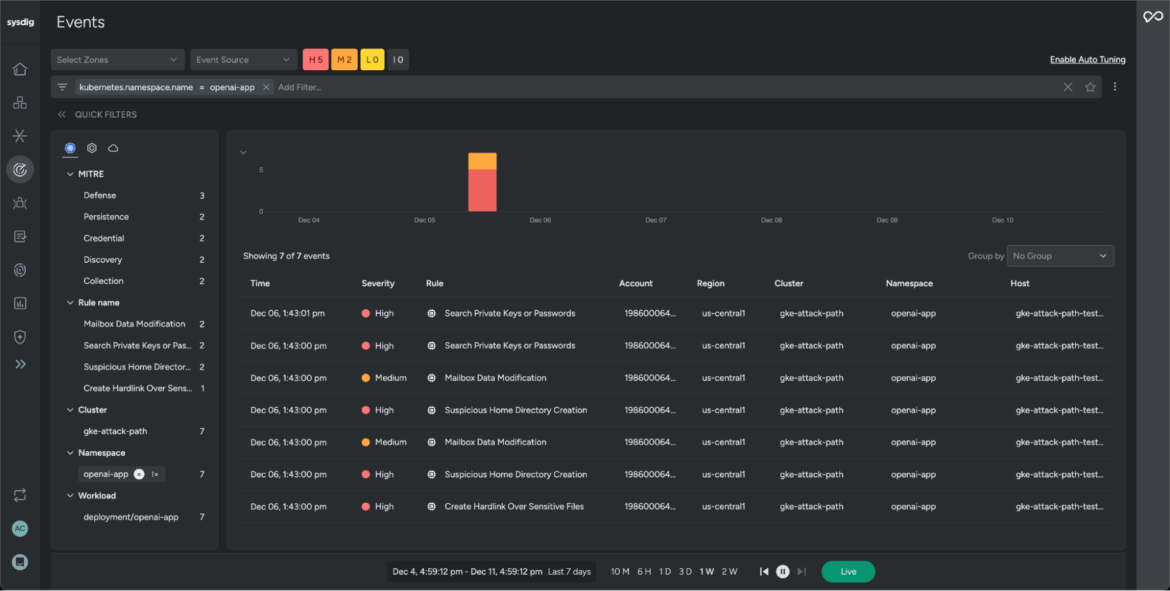

Besides uncovering misconfigurations, Sysdig monitors workload activity and logs a timeline of events leading up to the actual attack. In our scenario, we’ll filter events from the workload openapi-app for the past seven days and investigate the process tree.

With Sysdig Secure, security teams can easily analyze the lineage of events and visualize the behavior of processes, understand their relationships, and uncover suspicious or unexpected activities. This helps to isolate the root cause, group high-impact events, and quickly address blind spots.

Actions:

- Conduct a quick security assessment of your current setup

- Update your AI infrastructure security configurations as needed

- Train your security team on how to spot LLMjacking attempts

- Regularly review and refresh your security protocols

By following these friendly tips, you can help safeguard your organization against LLMjacking, strengthen your LLMs, and ensure that your AI resources remain secure.

For more insights, be sure to explore our Sysdig blogs. And if you’d like to connect with our experts, don’t hesitate to reach out. We’d love to hear from you!