Image by Author

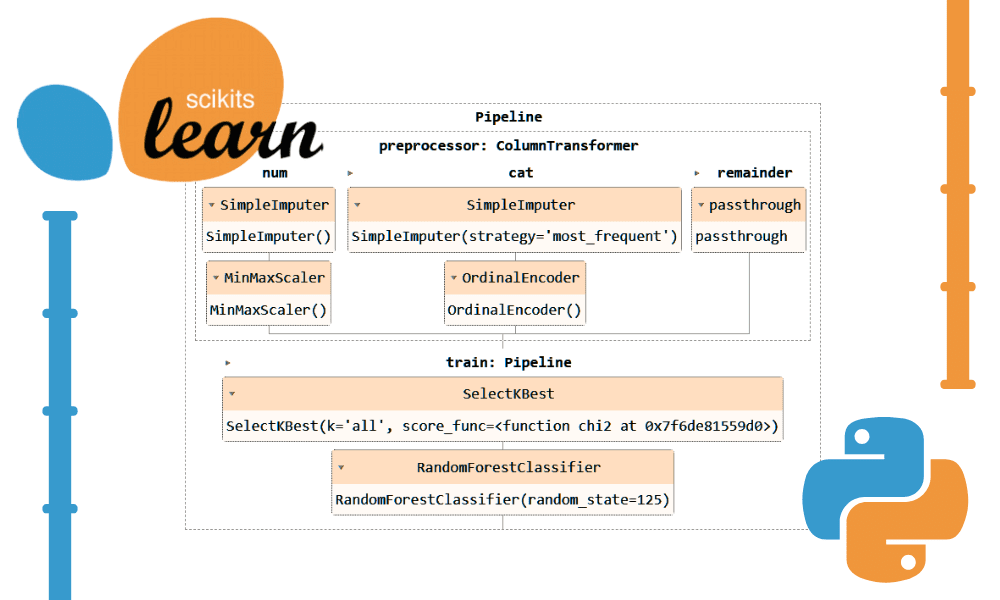

Using Scikit-learn pipelines can simplify your preprocessing and modeling steps, reduce code complexity, ensure consistency in data preprocessing, help with hyperparameter tuning, and make your workflow more organized and easier to maintain. Pipelines integrate multiple transformations and the final model into a single entity. This improves reproducibility and makes everything more efficient.

In this tutorial, we will work with the Bank Churn dataset from Kaggle to train a Random Forest Classifier. We will compare the conventional approach to data preprocessing and model training with a more efficient method that uses Scikit-learn pipelines and ColumnTransformers.

In the data processing pipeline, we will learn how to transform categorical and numerical columns separately. We will start with traditional-style code and then show a better way to perform the same processing.

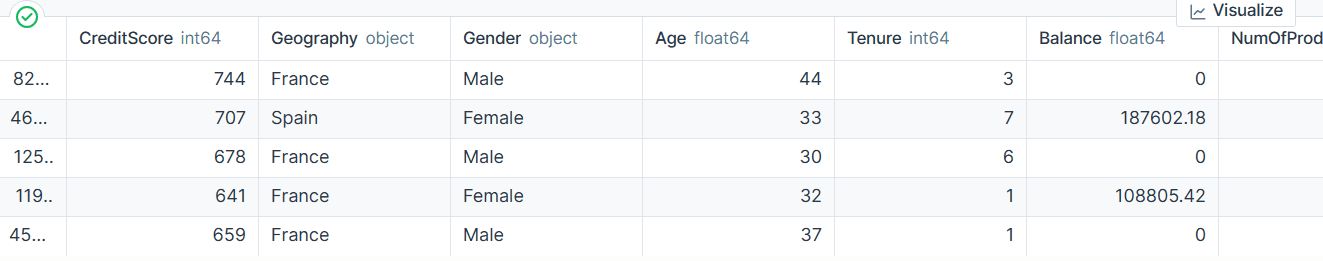

After extracting the data from the zip file, load the `train.csv` file with “id” as the index column. Then drop the unnecessary columns and shuffle the dataset.

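```python
import pandas as pd

# Load the training data, using the "id" column as the index
bank_df = pd.read_csv("train.csv", index_col="id")

# Drop identifier columns that are not useful as features
bank_df = bank_df.drop(['CustomerId', 'Surname'], axis=1)

# Shuffle the rows
bank_df = bank_df.sample(frac=1)

bank_df.head()
```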

We have categorical, integer, and float columns. The dataset looks fairly clean.
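One quick way to check that claim is to count missing values per column and group the columns by dtype. The sketch below uses a small hypothetical DataFrame with a few of the Bank Churn column names, because `train.csv` is not bundled here. With the real data, you would run the same calls on `bank_df`. Deriving the positional index lists from dtypes, instead of typing them by hand, is an illustrative choice and not the author's code.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for bank_df (a few columns only, with one injected gap)
df = pd.DataFrame({
    "CreditScore": [668, 627, 678],
    "Geography": ["France", "France", "Spain"],
    "Gender": ["Male", "Male", np.nan],
    "Age": [33.0, 33.0, 40.0],
    "Exited": [0, 0, 1],
})

# Number of missing values in each column
print(df.isna().sum())

# Positional indices of categorical and numerical feature columns
features = df.drop(columns="Exited")
cat_col = [i for i, dtype in enumerate(features.dtypes)
           if not pd.api.types.is_numeric_dtype(dtype)]
num_col = [i for i, dtype in enumerate(features.dtypes)
           if pd.api.types.is_numeric_dtype(dtype)]
print(cat_col, num_col)  # [1, 2] [0, 3]
```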

Simple Scikit-learn Code

As a data scientist, I have written this code many times. Our goal is to fill in the missing values for both categorical and numerical features. To do this, we will use a `SimpleImputer` with a different strategy for each type of feature.

Once the missing values are filled in, we will convert the categorical features to integers and apply min-max scaling to the numerical features.

```python
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import OrdinalEncoder, MinMaxScaler

# Positional indices: columns 1 and 2 are categorical (Geography, Gender);
# the target (Exited, last column) is left untouched
cat_col = [1, 2]
num_col = [0, 3, 4, 5, 6, 7, 8, 9]

# Assigning by column label replaces whole columns, which avoids the
# dtype-change warnings that iloc assignment triggers in recent pandas
cat_names = bank_df.columns[cat_col]
num_names = bank_df.columns[num_col]

# Fill missing categorical values with the most frequent category
cat_impute = SimpleImputer(strategy="most_frequent")
bank_df[cat_names] = cat_impute.fit_transform(bank_df[cat_names])

# Fill missing numerical values with the median
num_impute = SimpleImputer(strategy="median")
bank_df[num_names] = num_impute.fit_transform(bank_df[num_names])

# Encode categorical features as integers
cat_encode = OrdinalEncoder()
bank_df[cat_names] = cat_encode.fit_transform(bank_df[cat_names])

# Scale numerical features to the [0, 1] range
scaler = MinMaxScaler()
bank_df[num_names] = scaler.fit_transform(bank_df[num_names])

bank_df.head()
```

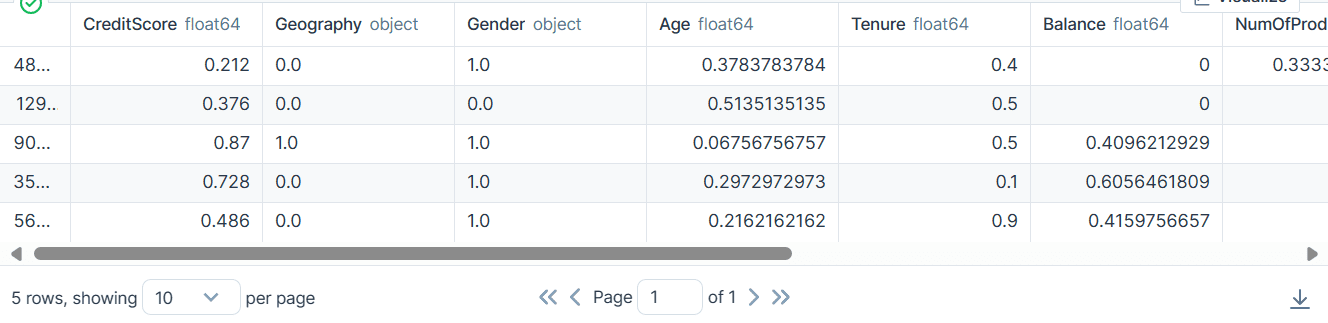

As a result, we have a clean, transformed dataset that contains only integer and float values.
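The following tiny example shows what each step does to actual values. The toy values are hypothetical and are not rows from the dataset. `OrdinalEncoder` assigns integers to categories in sorted order. `MinMaxScaler` maps each value x to (x - min) / (max - min).

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import OrdinalEncoder, MinMaxScaler

# Hypothetical toy columns mimicking two Bank Churn features
toy = pd.DataFrame({
    "Geography": ["France", "Spain", np.nan, "France"],
    "CreditScore": [300.0, np.nan, 500.0, 850.0],
})

# Categorical: fill with the most frequent value ("France"), then map
# categories to integers in sorted order (France -> 0, Spain -> 1)
geo_filled = SimpleImputer(strategy="most_frequent").fit_transform(toy[["Geography"]])
geo_encoded = OrdinalEncoder().fit_transform(geo_filled)

# Numerical: fill with the median (500), then rescale with (x - min) / (max - min)
score_filled = SimpleImputer(strategy="median").fit_transform(toy[["CreditScore"]])
score_scaled = MinMaxScaler().fit_transform(score_filled)

print(geo_encoded.ravel())   # [0. 1. 0. 0.]
print(score_scaled.ravel())  # roughly [0, 0.36, 0.36, 1]
```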

Scikit-learn Pipelines Code

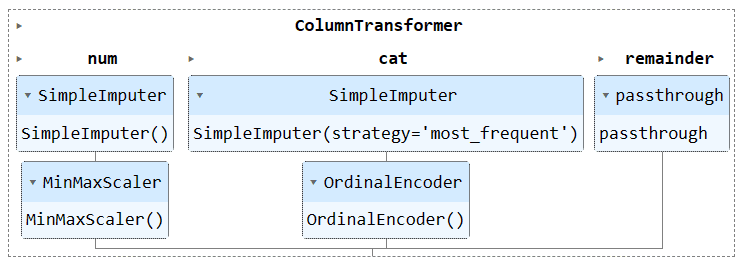

Let’s convert the code above to use `Pipeline` and `ColumnTransformer`. Instead of applying each preprocessing technique one by one, we will create two pipelines: one for the numerical columns and one for the categorical columns.

- In the numerical pipeline, we use a simple imputer with the “mean” strategy and apply a min-max scaler for normalization.

- In the categorical pipeline, we use a simple imputer with the “most_frequent” strategy and an ordinal encoder to convert the categories into numerical values.

We combine the two pipelines using the ColumnTransformer and give each pipeline its column indices. This applies each pipeline only to its own columns. For example, the categorical transformer pipeline is applied only to columns 1 and 2.

Note: `remainder="passthrough"` means that columns that have not been processed are appended at the end. In our case, that is the target column.

```python
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import OrdinalEncoder, MinMaxScaler
from sklearn.compose import ColumnTransformer
from sklearn.pipeline import Pipeline

# Identify numerical and categorical columns (positional indices)
cat_col = [1, 2]
num_col = [0, 3, 4, 5, 6, 7, 8, 9]

# Transformers for numerical data
numerical_transformer = Pipeline(steps=[
    ('imputer', SimpleImputer(strategy='mean')),
    ('scaler', MinMaxScaler())
])

# Transformers for categorical data
categorical_transformer = Pipeline(steps=[
    ('imputer', SimpleImputer(strategy='most_frequent')),
    ('encoder', OrdinalEncoder())
])

# Combine transformers into a ColumnTransformer
preproc_pipe = ColumnTransformer(
    transformers=[
        ('num', numerical_transformer, num_col),
        ('cat', categorical_transformer, cat_col)
    ],
    remainder="passthrough"  # unprocessed columns (the target) are appended last
)

# Apply the preprocessing pipeline; the result is a NumPy array, not a DataFrame
bank_arr = preproc_pipe.fit_transform(bank_df)

bank_arr[0]
```

After the transformation, the resulting array contains the transformed numerical values first and the transformed categorical values next. This follows the order of the transformers in the ColumnTransformer. The passthrough target column comes last.

```
array([0.712     , 0.24324324, 0.6       , 0.        , 0.33333333,
       1.        , 1.        , 0.76443485, 2.        , 0.        ,
       0.        ])
```

You can display the pipeline object in a Jupyter Notebook to visualize its structure. Make sure you have a recent version of Scikit-learn.
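Outside a notebook, you can still save the same diagram. The sketch below rebuilds a pipeline with the same structure as `preproc_pipe` (an unfitted one is enough for display). It writes the diagram to an HTML file with `sklearn.utils.estimator_html_repr`. The file name `preproc_pipe.html` is a hypothetical choice.

```python
from sklearn import set_config
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler, OrdinalEncoder
from sklearn.utils import estimator_html_repr

# Same structure as preproc_pipe in the article (unfitted is fine for display)
preproc_pipe = ColumnTransformer(
    transformers=[
        ("num", Pipeline([("imputer", SimpleImputer(strategy="mean")),
                          ("scaler", MinMaxScaler())]), [0, 3, 4, 5, 6, 7, 8, 9]),
        ("cat", Pipeline([("imputer", SimpleImputer(strategy="most_frequent")),
                          ("encoder", OrdinalEncoder())]), [1, 2]),
    ],
    remainder="passthrough",
)

# In Jupyter, ending a cell with the estimator renders the diagram;
# "diagram" is already the default display mode in recent scikit-learn versions
set_config(display="diagram")

# Outside Jupyter, write the same diagram to a standalone HTML file
html = estimator_html_repr(preproc_pipe)
with open("preproc_pipe.html", "w", encoding="utf-8") as f:  # hypothetical file name
    f.write(html)
```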

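To train and evaluate our model, we need to split the dataset into two subsets: training and testing.

To do this, we first separate the independent variables (features) from the dependent variable (target) and convert them into NumPy arrays. Then we use the `train_test_split` function to split them into the two subsets. This step uses the `bank_df` DataFrame produced by the traditional preprocessing code. The ColumnTransformer output is a plain NumPy array, so it has no "Exited" column name.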

```python
from sklearn.model_selection import train_test_split

# Features (every column except the target) and target, as NumPy arrays
X = bank_df.drop("Exited", axis=1).values
y = bank_df.Exited.values

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=125
)
```
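Churn data is often imbalanced. If you want the training and testing subsets to keep the same class ratio, `train_test_split` accepts a `stratify` argument. This is an optional variation and not part of the original code. The toy labels below are hypothetical.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical imbalanced labels: 80 zeros and 20 ones (20% positive)
X = np.arange(100).reshape(-1, 1)
y = np.array([0] * 80 + [1] * 20)

# stratify=y keeps the 20% positive rate in both subsets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=125, stratify=y
)
print(y_train.mean(), y_test.mean())  # 0.2 0.2
```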

Simple Scikit-learn Code

The traditional way to write training code is to first perform feature selection with `SelectKBest` and then pass the selected features to our Random Forest Classifier model.

We will train the model on the training set and evaluate the results on the testing set.

```python
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.ensemble import RandomForestClassifier

# Score features with the chi-squared test; k="all" keeps every feature
KBest = SelectKBest(chi2, k="all")
X_train = KBest.fit_transform(X_train, y_train)
X_test = KBest.transform(X_test)

model = RandomForestClassifier(n_estimators=100, random_state=125)
model.fit(X_train, y_train)

# Mean accuracy on the test set
model.score(X_test, y_test)
```

We achieved a fairly good accuracy rating.
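With `k="all"`, as in the code above, `SelectKBest` scores every feature but drops none. The step therefore acts as a placeholder that you can tighten later. The hypothetical data below contrasts `k="all"` with a numeric `k`. Note that `chi2` requires non-negative feature values, which the min-max scaling and ordinal encoding provide.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, chi2

# Hypothetical data: 4 non-negative features, only feature 0 drives the label
rng = np.random.default_rng(0)
X = rng.random((200, 4))
y = (X[:, 0] > 0.5).astype(int)

keep_all = SelectKBest(chi2, k="all").fit(X, y)  # keeps all 4 columns
top_two = SelectKBest(chi2, k=2).fit(X, y)       # keeps the 2 best-scoring columns

print(keep_all.transform(X).shape)  # (200, 4)
print(top_two.transform(X).shape)   # (200, 2)
print(keep_all.scores_.round(2))    # chi-squared score per feature
print(top_two.get_support())        # boolean mask of the kept columns
```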

Scikit-learn Pipelines Code

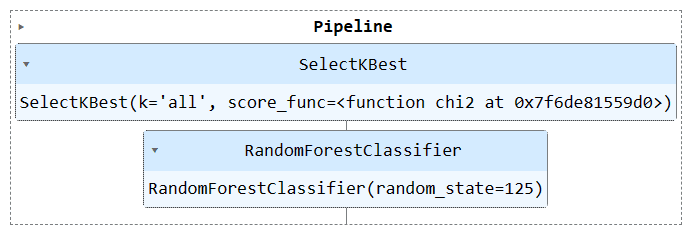

Let’s use the `Pipeline` class to combine both training steps into a single pipeline. We can then fit the model on the training set and evaluate it on the testing set.

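```python
from sklearn.pipeline import Pipeline
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.ensemble import RandomForestClassifier

# The same two steps, chained into a single estimator
KBest = SelectKBest(chi2, k="all")
model = RandomForestClassifier(n_estimators=100, random_state=125)

train_pipe = Pipeline(
    steps=[
        ("KBest", KBest),
        ("RFmodel", model),
    ]
)

train_pipe.fit(X_train, y_train)
train_pipe.score(X_test, y_test)
```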

We achieved similar results, but the code is cleaner and more straightforward. It is also easy to add or remove steps in the training pipeline.
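Every step has a name, so you can address any step's parameters as `<step name>__<parameter>`. This is what makes hyperparameter tuning over a whole pipeline straightforward. The sketch below uses synthetic data from `make_classification`, rescaled to [0, 1] because `chi2` needs non-negative inputs. The grid values are arbitrary. It also shows how to disable a step by name.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler

# Synthetic stand-in data, rescaled so chi2 receives non-negative values
X, y = make_classification(n_samples=300, n_features=8, random_state=125)
X = MinMaxScaler().fit_transform(X)

train_pipe = Pipeline(steps=[
    ("KBest", SelectKBest(chi2, k="all")),
    ("RFmodel", RandomForestClassifier(n_estimators=100, random_state=125)),
])

# "<step name>__<parameter>" reaches into any named step
param_grid = {"KBest__k": [4, "all"], "RFmodel__n_estimators": [50, 100]}
search = GridSearchCV(train_pipe, param_grid, cv=3)
search.fit(X, y)
print(search.best_params_)

# Steps can also be switched off by name, e.g. skip feature selection entirely
train_pipe.set_params(KBest="passthrough").fit(X, y)
print(train_pipe.score(X, y))
```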

Run the pipeline object to visualise the pipeline.

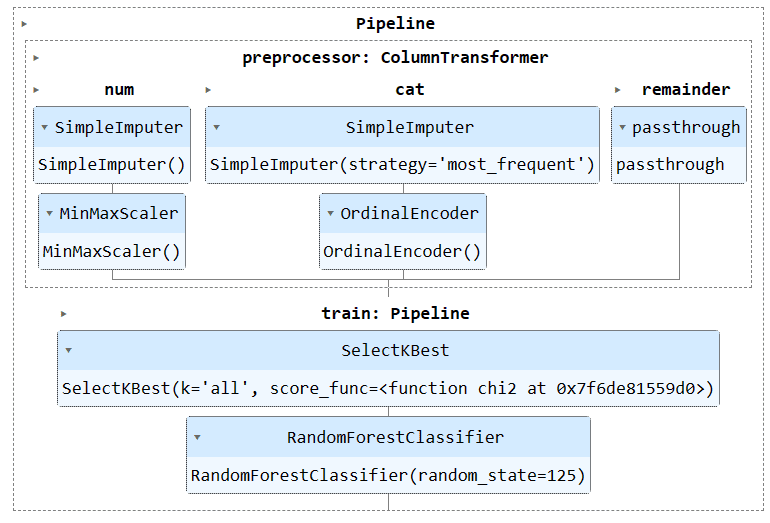

Now we will combine the preprocessing and training pipelines by creating another pipeline that contains both of them.

Right here is the whole code:

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import OrdinalEncoder, MinMaxScaler
from sklearn.compose import ColumnTransformer
from sklearn.pipeline import Pipeline
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.ensemble import RandomForestClassifier

# Loading the data
bank_df = pd.read_csv("train.csv", index_col="id")
bank_df = bank_df.drop(['CustomerId', 'Surname'], axis=1)
bank_df = bank_df.sample(frac=1)

# Splitting raw (unprocessed) data into training and testing sets
X = bank_df.drop(["Exited"], axis=1)
y = bank_df.Exited

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=125
)

# Identify numerical and categorical columns (positions within X)
cat_col = [1, 2]
num_col = [0, 3, 4, 5, 6, 7, 8, 9]

# Transformers for numerical data
numerical_transformer = Pipeline(steps=[
    ('imputer', SimpleImputer(strategy='mean')),
    ('scaler', MinMaxScaler())
])

# Transformers for categorical data
categorical_transformer = Pipeline(steps=[
    ('imputer', SimpleImputer(strategy='most_frequent')),
    ('encoder', OrdinalEncoder())
])

# Combine pipelines using ColumnTransformer
preproc_pipe = ColumnTransformer(
    transformers=[
        ('num', numerical_transformer, num_col),
        ('cat', categorical_transformer, cat_col)
    ],
    remainder="passthrough"
)

# Selecting the best features (k="all" keeps every feature)
KBest = SelectKBest(chi2, k="all")

# Random Forest Classifier
model = RandomForestClassifier(n_estimators=100, random_state=125)

# KBest and model pipeline
train_pipe = Pipeline(
    steps=[
        ("KBest", KBest),
        ("RFmodel", model),
    ]
)

# Combining the preprocessing and training pipelines
complete_pipe = Pipeline(
    steps=[
        ("preprocessor", preproc_pipe),
        ("train", train_pipe),
    ]
)

# Running the complete pipeline; preprocessing is fit on the training set only
complete_pipe.fit(X_train, y_train)

# Model accuracy on the test set
complete_pipe.score(X_test, y_test)
```


Visualizing the whole pipeline.
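Bundling preprocessing with the model also matters for evaluation. When the complete pipeline is passed to cross-validation, the imputers, scaler, and encoder are refit on each training fold only. Statistics from the held-out fold therefore never leak into preprocessing. By contrast, the traditional code above fits them on the full dataset before the split. The sketch below runs `cross_val_score` on a hypothetical raw DataFrame with made-up columns and labels. The setup mirrors the article's structure but is not the author's code.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.impute import SimpleImputer
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler, OrdinalEncoder

rng = np.random.default_rng(125)
n = 300
# Hypothetical raw data: strings, numbers and missing values, no manual preprocessing
X_raw = pd.DataFrame({
    "CreditScore": rng.integers(350, 851, n).astype(float),
    "Geography": rng.choice(["France", "Germany", "Spain"], n),
    "Gender": rng.choice(["Male", "Female"], n),
    "Age": rng.integers(18, 80, n).astype(float),
})
X_raw.loc[::25, "Age"] = np.nan                      # inject some gaps
y = (X_raw["Age"].fillna(40) > 50).astype(int)       # made-up target

preproc = ColumnTransformer([
    ("num", Pipeline([("imputer", SimpleImputer(strategy="mean")),
                      ("scaler", MinMaxScaler())]), [0, 3]),
    ("cat", Pipeline([("imputer", SimpleImputer(strategy="most_frequent")),
                      ("encoder", OrdinalEncoder())]), [1, 2]),
])
complete = Pipeline([
    ("preprocessor", preproc),
    ("train", Pipeline([("KBest", SelectKBest(chi2, k="all")),
                        ("RFmodel", RandomForestClassifier(n_estimators=50, random_state=125))])),
])

# Each fold refits every preprocessing step and the model on its own training part
scores = cross_val_score(complete, X_raw, y, cv=5)
print(scores.round(3), scores.mean())
```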

One of the major advantages of using pipelines is that you can save the pipeline together with the model. During inference, you only need to load the pipeline object, which is ready to process the raw data and return predictions. You don't need to rewrite the processing and transformation functions in the app file, because the pipeline works out of the box. This makes the machine learning workflow more efficient and saves time.
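The sketch below shows "raw data in, prediction out" on a small hypothetical dataset. It uses Python's built-in `pickle` round trip only as a stand-in for saving and reloading. The article itself uses skops, and `pickle` can execute arbitrary code when loading untrusted files. The new row is passed with a missing value and a string category, with no manual preprocessing.

```python
import pickle

import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler, OrdinalEncoder

# Hypothetical raw training data (strings and a missing value left as-is)
X_raw = pd.DataFrame({
    "Age": [25.0, 40.0, np.nan, 62.0, 55.0, 33.0],
    "Geography": ["France", "Spain", "France", "Germany", "Germany", "Spain"],
})
y = [0, 0, 0, 1, 1, 0]

pipe = Pipeline([
    ("preprocessor", ColumnTransformer([
        ("num", Pipeline([("imputer", SimpleImputer(strategy="mean")),
                          ("scaler", MinMaxScaler())]), ["Age"]),
        ("cat", Pipeline([("imputer", SimpleImputer(strategy="most_frequent")),
                          ("encoder", OrdinalEncoder())]), ["Geography"]),
    ])),
    ("model", RandomForestClassifier(n_estimators=50, random_state=125)),
])
pipe.fit(X_raw, y)

# In-memory save/reload as a stand-in for skops.io.dump / skops.io.load
restored = pickle.loads(pickle.dumps(pipe))

# A raw row goes straight in; imputing, scaling and encoding happen inside
new_row = pd.DataFrame({"Age": [np.nan], "Geography": ["Germany"]})
print(restored.predict(new_row))
```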

Let’s first save the pipeline using the skops library (skops-dev/skops).

```python
import skops.io as sio

# Serialize the fitted pipeline (preprocessing + model) to a single file
sio.dump(complete_pipe, "bank_pipeline.skops")
```

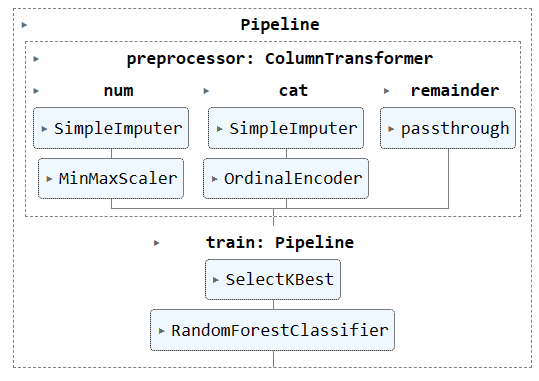

Then, load the saved pipeline and display it.

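```python
# Recent skops releases expect an explicit list of trusted types instead of
# trusted=True; review this list before trusting it
unknown_types = sio.get_untrusted_types(file="bank_pipeline.skops")
print(unknown_types)
new_pipe = sio.load("bank_pipeline.skops", trusted=unknown_types)

new_pipe
```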

As we can see, the pipeline has loaded successfully.

To evaluate the loaded pipeline, we will make predictions on the test set and then calculate the accuracy and F1 scores.

```python
from sklearn.metrics import accuracy_score, f1_score

predictions = new_pipe.predict(X_test)

accuracy = accuracy_score(y_test, predictions)
# Macro F1: unweighted mean of the per-class F1 scores
f1 = f1_score(y_test, predictions, average="macro")

print(f"Accuracy: {accuracy:.0%}", f"F1: {f1:.2f}")
```

It looks like we need to handle the minority class to improve our F1 score.
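Macro-averaged F1 computes F1 for each class separately and takes the unweighted mean. A weak minority class therefore pulls it down even when accuracy looks fine. Here is a small worked example with made-up labels.

```python
from sklearn.metrics import accuracy_score, f1_score

# Hypothetical labels: class 1 is the minority
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 1, 1, 0]

# Per class: F1 = 2 * precision * recall / (precision + recall)
# class 0: precision 3/4, recall 3/4 -> F1 = 0.75
# class 1: precision 1/2, recall 1/2 -> F1 = 0.50
per_class = f1_score(y_true, y_pred, average=None)
macro = f1_score(y_true, y_pred, average="macro")  # (0.75 + 0.50) / 2 = 0.625
accuracy = accuracy_score(y_true, y_pred)          # 4 of 6 correct, about 0.667

print(per_class, macro, accuracy)
```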

The project files and code are available on Deepnote Workspace. The workspace has two notebooks: one with the Scikit-learn pipeline and one without it.

In this tutorial, we learned how Scikit-learn pipelines can streamline machine learning workflows by chaining data transforms and models into a sequence. By combining preprocessing and model training into a single Pipeline object, we can simplify code, ensure consistent data transformations, and make our workflows more organized and reproducible.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in technology management and a bachelor's degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.