Stanford University released its AI Index Report 2024, which noted that AI’s rapid progress makes benchmark comparisons with humans increasingly less relevant.

The annual report provides a comprehensive insight into the trends and state of AI developments. The report says that AI models are improving so fast now that the benchmarks we use to measure them are increasingly becoming irrelevant.

A lot of industry benchmarks compare AI models to how good humans are at performing tasks. The Massive Multitask Language Understanding (MMLU) benchmark is a good example.

It uses multiple-choice questions to evaluate LLMs across 57 subjects, including math, history, law, and ethics. The MMLU has been the go-to AI benchmark since 2019.

The human baseline score on the MMLU is 89.8%, and back in 2019, the average AI model scored just over 30%. Just five years later, Gemini Ultra became the first model to beat the human baseline with a score of 90.04%.
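To make the comparison concrete, here is a minimal sketch of how a multiple-choice benchmark score could be computed and set against the 89.8% human baseline. The records are made up for illustration, the function names are hypothetical, and averaging per subject is an assumption rather than a description of how MMLU scores are officially aggregated.

```python
from collections import defaultdict

HUMAN_BASELINE = 89.8  # percent, the MMLU human baseline cited in the article


def accuracy_by_subject(records):
    """Return {subject: percent correct} for (subject, predicted, correct) records."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for subject, predicted, correct in records:
        totals[subject] += 1
        if predicted == correct:
            hits[subject] += 1
    return {s: 100.0 * hits[s] / totals[s] for s in totals}


def macro_average(per_subject):
    """Average the per-subject scores so every subject counts equally (an assumption)."""
    return sum(per_subject.values()) / len(per_subject)


# Made-up answers for illustration only; not real MMLU items or model outputs.
records = [
    ("math", "B", "B"),
    ("math", "C", "A"),
    ("law", "D", "D"),
    ("law", "A", "A"),
    ("ethics", "C", "C"),
    ("ethics", "B", "B"),
]

per_subject = accuracy_by_subject(records)
overall = macro_average(per_subject)
print(per_subject)
print(f"overall {overall:.2f}% vs human baseline {HUMAN_BASELINE}%")
print("beats baseline" if overall > HUMAN_BASELINE else "below baseline")
```

With a gap as small as 90.04% against 89.8%, the aggregation choice and the number of questions per subject can plausibly matter, which is one reason a saturated benchmark tells us little.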

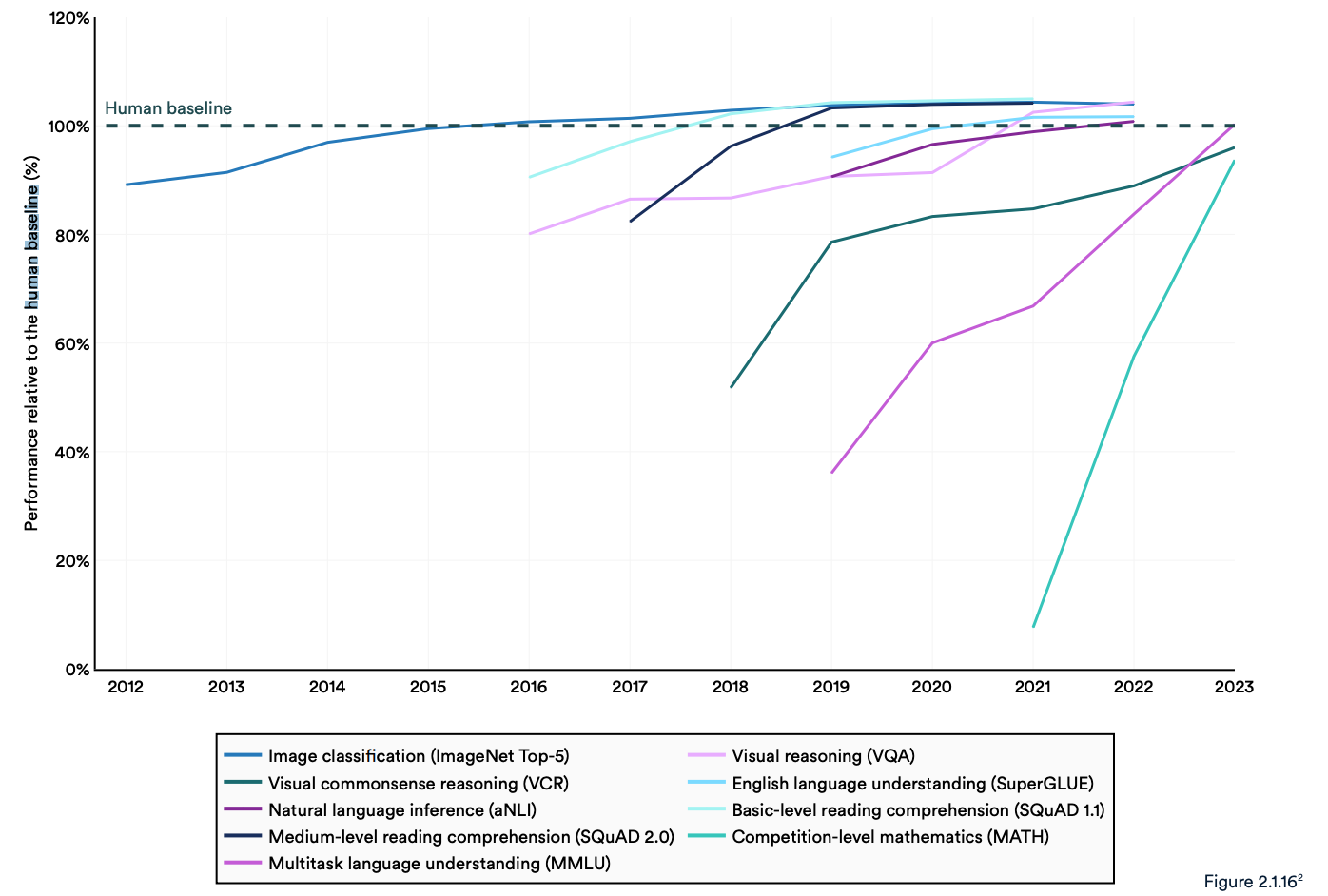

The report notes that current “AI systems routinely exceed human performance on standard benchmarks.” The trends in the graph below seem to indicate that the MMLU and other benchmarks need replacing.

AI models have reached performance saturation on established benchmarks such as ImageNet, SQuAD, and SuperGLUE, so researchers are developing more challenging tests.

One example is the Graduate-Level Google-Proof Q&A Benchmark (GPQA), which allows AI models to be benchmarked against really smart people, rather than average human intelligence.

The GPQA test consists of 400 tough graduate-level multiple-choice questions. Experts who have or are pursuing their PhDs correctly answer the questions 65% of the time.

The GPQA paper says that when asked questions outside their field, “highly skilled non-expert validators only reach 34% accuracy, despite spending on average over 30 minutes with unrestricted access to the web.”

Last month Anthropic announced that Claude 3 scored just under 60% with 5-shot CoT prompting. We’re going to need a bigger benchmark.

Claude 3 gets ~60% accuracy on GPQA. It’s hard for me to understate how hard these questions are: literal PhDs (in different domains from the questions) with access to the internet get 34%.

PhDs *in the same domain* (also with internet access!) get 65% – 75% accuracy. https://t.co/ARAiCNXgU9 pic.twitter.com/PH8J13zIef

— david rein (@idavidrein) March 4, 2024

Human evaluations and safety

The report noted that AI still faces significant problems: “It cannot reliably deal with facts, perform complex reasoning, or explain its conclusions.”

These limitations contribute to another AI system characteristic that the report says is poorly measured: AI safety. We don’t have effective benchmarks that allow us to say, “This model is safer than that one.”

That’s partly because it’s difficult to measure, and partly because “AI developers lack transparency, especially regarding the disclosure of training data and methodologies.”

The report noted that an interesting trend in the industry is to crowd-source human evaluations of AI performance, rather than rely on benchmark tests.

Ranking a model’s image aesthetics or prose is difficult to do with a test. As a result, the report says that “benchmarking has slowly started shifting toward incorporating human evaluations like the Chatbot Arena Leaderboard rather than computerized rankings like ImageNet or SQuAD.”
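The report quote does not say how crowd-sourced votes become a ranking. One common way to turn pairwise "I prefer this answer" votes into a leaderboard is an Elo-style rating, sketched below. This is an illustrative assumption, not a description of any specific leaderboard's method; the model names, votes, starting rating, and `k` value are all hypothetical.

```python
def expected_score(rating_a, rating_b):
    """Probability that A is preferred over B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update(ratings, winner, loser, k=32.0):
    """Shift ratings after one human vote; k controls the step size (assumed value)."""
    exp_win = expected_score(ratings[winner], ratings[loser])
    delta = k * (1.0 - exp_win)
    ratings[winner] += delta
    ratings[loser] -= delta


# Hypothetical models, all starting from the same rating.
ratings = {"model_a": 1000.0, "model_b": 1000.0, "model_c": 1000.0}

# Each tuple is one made-up vote: (preferred model, other model).
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
]

for winner, loser in votes:
    update(ratings, winner, loser)

leaderboard = sorted(ratings, key=ratings.get, reverse=True)
print(leaderboard)
```

Unlike a fixed answer key, a scheme like this has no ceiling to saturate: ratings only express which model people prefer relative to the others.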

As AI models watch the human baseline disappear in the rear-view mirror, sentiment may eventually determine which model we choose to use.

The trends indicate that AI models will eventually be smarter than us and harder to measure. We may soon find ourselves saying, “I don’t know why, but I just like this one better.”