OpenAI on Thursday disclosed that it took steps to cut off five covert influence operations (IO) originating from China, Iran, Israel, and Russia that sought to abuse its artificial intelligence (AI) tools to manipulate public discourse or political outcomes online while obscuring their true identity.

These activities, which were detected over the past three months, used its AI models to generate short comments and longer articles in a range of languages, make up names and bios for social media accounts, conduct open-source research, debug simple code, and translate and proofread texts.

The AI research organization said two of the networks were linked to actors in Russia, including a previously undocumented operation codenamed Bad Grammar that primarily used at least a dozen Telegram accounts to target audiences in Ukraine, Moldova, the Baltic States, and the United States (U.S.) with sloppy content in Russian and English.

“The network used our models and accounts on Telegram to set up a comment-spamming pipeline,” OpenAI said. “First, the operators used our models to debug code that was apparently designed to automate posting on Telegram. They then generated comments in Russian and English in reply to specific Telegram posts.”

The operators also used its models to generate comments under the guise of various fictitious personas belonging to different demographics from across both sides of the political spectrum in the U.S.

The other Russia-linked information operation corresponded to the prolific Doppelganger network (aka Recent Reliable News), which was sanctioned by the U.S. Treasury Department’s Office of Foreign Assets Control (OFAC) earlier this March for engaging in cyber influence operations.

The network is said to have used OpenAI’s models to generate comments in English, French, German, Italian, and Polish that were shared on X and 9GAG; translate and edit articles from Russian to English and French that were then posted on bogus websites maintained by the group; generate headlines; and convert news articles posted on its sites into Facebook posts.

“This activity targeted audiences in Europe and North America and focused on generating content for websites and social media,” OpenAI said. “The majority of the content that this campaign published online focused on the war in Ukraine. It portrayed Ukraine, the US, NATO and the EU in a negative light and Russia in a positive light.”

The other three activity clusters are listed below:

- A Chinese-origin network known as Spamouflage that used its AI models to research public social media activity; generate texts in Chinese, English, Japanese, and Korean for posting across X, Medium, and Blogger; propagate content criticizing Chinese dissidents and abuses against Native Americans in the U.S.; and debug code for managing databases and websites

- An Iranian operation known as the International Union of Virtual Media (IUVM) that used its AI models to generate and translate long-form articles, headlines, and website tags in English and French for subsequent publication on a website named iuvmpress[.]co

- A network referred to as Zero Zeno emanating from a for-hire Israeli threat actor, a business intelligence firm called STOIC, that used its AI models to generate and disseminate anti-Hamas, anti-Qatar, pro-Israel, anti-BJP, and pro-Histadrut content across Instagram, Facebook, X, and its affiliated websites targeting users in Canada, the U.S., India, and Ghana

“The [Zero Zeno] operation also used our models to create fictional personas and bios for social media based on certain variables such as age, gender and location, and to conduct research into people in Israel who commented publicly on the Histadrut trade union in Israel,” OpenAI said, adding that its models refused to supply personal data in response to these prompts.

The ChatGPT maker emphasized in its first threat report on IO that none of these campaigns “meaningfully increased their audience engagement or reach” from exploiting its services.

The development comes as concerns are being raised that generative AI (GenAI) tools could make it easier for malicious actors to generate realistic text, images, and even video content, making it challenging to spot and respond to misinformation and disinformation operations.

“So far, the situation is evolution, not revolution,” Ben Nimmo, principal investigator of intelligence and investigations at OpenAI, said. “That could change. It’s important to keep watching and keep sharing.”

Meta Highlights STOIC and Doppelganger

Separately, Meta, in its quarterly Adversarial Threat Report, also shared details of STOIC’s influence operations, saying it removed a mix of nearly 500 compromised and fake accounts on Facebook and Instagram used by the actor to target users in Canada and the U.S.

“This campaign demonstrated a relative discipline in maintaining OpSec, including by leveraging North American proxy infrastructure to anonymize its activity,” the social media giant said.

Meta further said it removed hundreds of accounts, comprising deceptive networks from Bangladesh, China, Croatia, Iran, and Russia, for engaging in coordinated inauthentic behavior (CIB) with the goal of influencing public opinion and pushing political narratives about topical events.

The China-based malign network mainly targeted the global Sikh community and consisted of several dozen Instagram and Facebook accounts, pages, and groups that were used to spread manipulated imagery and English and Hindi-language posts related to a non-existent pro-Sikh movement, the Khalistan separatist movement, and criticism of the Indian government.

Meta pointed out that it hasn’t so far detected any novel and sophisticated use of GenAI-driven tactics, with the company highlighting instances of AI-generated video news readers that were previously documented by Graphika and GNET, indicating that despite the largely ineffective nature of these campaigns, threat actors are actively experimenting with the technology.

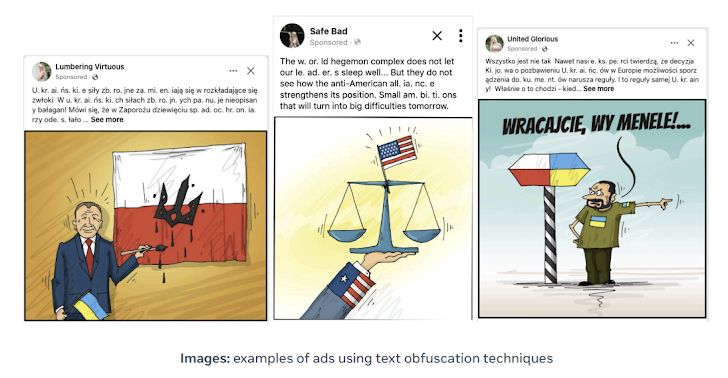

Doppelganger, Meta said, has continued its “smash-and-grab” efforts, albeit with a major shift in tactics in response to public reporting, including the use of text obfuscation to evade detection (e.g., using “U. kr. ai. n. e” instead of “Ukraine”) and dropping its practice of linking to typosquatted domains masquerading as news media outlets since April.
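To illustrate why this kind of separator-based obfuscation is a weak evasion technique, here is a minimal Python sketch of how a keyword filter could tolerate inserted punctuation and spaces. It is not drawn from Meta's report; the function names `obfuscation_pattern` and `find_obfuscated` and the `max_gap` limit of two separator characters are assumptions made for this example.

```python
import re


def obfuscation_pattern(keyword: str, max_gap: int = 2) -> re.Pattern:
    """Build a regex that matches `keyword` even when up to `max_gap`
    non-alphanumeric characters are inserted between its letters.

    Hypothetical helper for illustration; `max_gap` is an assumed tuning knob.
    """
    # Zero to max_gap separator characters (punctuation, spaces, underscores).
    gap = rf"[\W_]{{0,{max_gap}}}"
    body = gap.join(re.escape(ch) for ch in keyword)
    # Require that the match is not embedded in a longer word.
    return re.compile(rf"(?<!\w){body}(?!\w)", re.IGNORECASE)


def find_obfuscated(text: str, keyword: str) -> list[str]:
    """Return every plain or separator-obfuscated occurrence of `keyword`."""
    return [m.group(0) for m in obfuscation_pattern(keyword).finditer(text)]


if __name__ == "__main__":
    sample = "Aid to U. kr. ai. n. e was discussed"
    print(find_obfuscated(sample, "Ukraine"))
```

The word-boundary checks keep an unrelated phrase such as "uk rain event" from matching. A production filter would likely also need to handle homoglyphs and other substitutions, which this sketch does not attempt.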

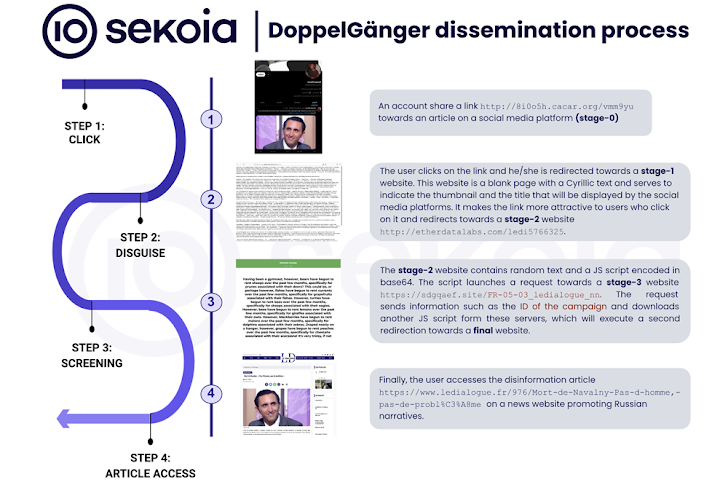

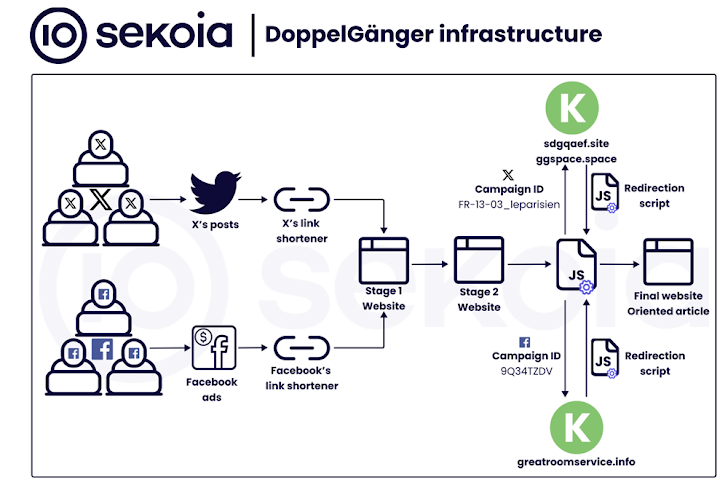

“The campaign is supported by a network with two categories of news websites: typosquatted legitimate media outlets and organizations, and independent news websites,” Sekoia said in a report about the pro-Russian adversarial network published last week.

“Disinformation articles are published on these websites and then disseminated and amplified via inauthentic social media accounts on several platforms, especially video-hosting ones like Instagram, TikTok, Cameo, and YouTube.”

These social media profiles, created in large numbers and in waves, leverage paid ad campaigns on Facebook and Instagram to direct users to propaganda websites. The Facebook accounts are also called burner accounts owing to the fact that they’re used to share only one article and are subsequently abandoned.

The French cybersecurity firm described the industrial-scale campaigns, which are geared toward both Ukraine’s allies and Russian-speaking domestic audiences on the Kremlin’s behalf, as multi-layered, leveraging the social botnet to initiate a redirection chain that passes through two intermediate websites in order to lead users to the final page.

Recorded Future, in a report released this month, detailed a new influence network dubbed CopyCop that’s likely operated from Russia, leveraging inauthentic media outlets in the U.S., the U.K., and France to promote narratives that undermine Western domestic and foreign policy, and spread content pertaining to the ongoing Russo-Ukrainian war and the Israel-Hamas conflict.

“CopyCop extensively used generative AI to plagiarize and modify content from legitimate media sources to tailor political messages with specific biases,” the company said. “This included content critical of Western policies and supportive of Russian perspectives on international issues like the Ukraine conflict and the Israel-Hamas tensions.”

The content generated by CopyCop is also amplified by well-known state-sponsored actors such as Doppelganger and Portal Kombat, demonstrating a concerted effort to serve content that projects Russia in a favorable light.

TikTok Disrupts Covert Influence Operations

Earlier in May, ByteDance-owned TikTok said it had uncovered and stamped out several such networks on its platform since the start of the year, including ones that it traced back to Bangladesh, China, Ecuador, Germany, Guatemala, Indonesia, Iran, Iraq, Serbia, Ukraine, and Venezuela.

TikTok, which is currently facing scrutiny in the U.S. following the passage of a law that would force the Chinese company to sell the business or face a ban in the country, has become an increasingly preferred platform for Russian state-affiliated accounts in 2024, according to a new report from the Brookings Institution.

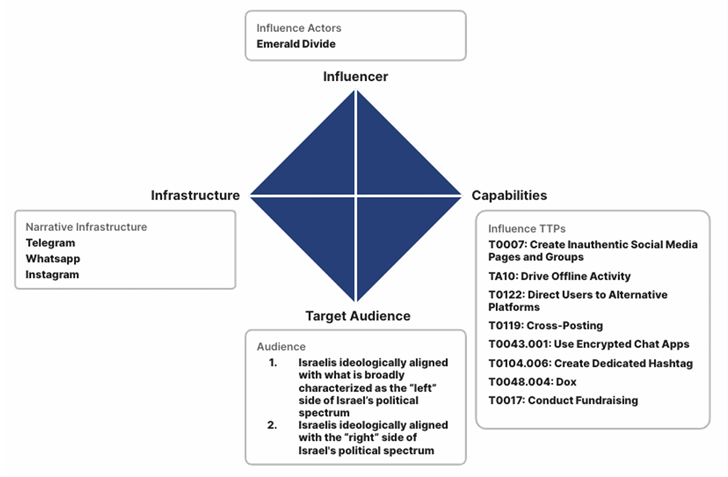

What’s more, the social video hosting service has become a breeding ground for what has been characterized as a complex influence campaign known as Emerald Divide, which is believed to have been orchestrated by Iran-aligned actors since 2021 and targets Israeli society.

“Emerald Divide is noted for its dynamic approach, swiftly adapting its influence narratives to Israel’s evolving political landscape,” Recorded Future said.

“It leverages modern digital tools such as AI-generated deepfakes and a network of strategically operated social media accounts, which target diverse and often opposing audiences, effectively stoking societal divisions and encouraging physical actions such as protests and the spreading of anti-government messages.”