Image by Author

Running large language models (LLMs) locally can be super helpful, whether you’d like to play around with LLMs or build more powerful apps using them. But configuring your working environment and getting LLMs to run on your machine is not trivial.

So how do you run LLMs locally without any of the hassle? Enter Ollama, a platform that makes local development with open-source large language models a breeze. With Ollama, everything you need to run an LLM (model weights and all of the config) is packaged into a single Modelfile. Think Docker for LLMs.

In this tutorial, we’ll take a look at how to get started with Ollama to run large language models locally. So let’s get right into the steps!

Step 1: Download Ollama to Get Started

As a first step, you should download Ollama to your machine. Ollama is supported on all major platforms: macOS, Windows, and Linux.

To download Ollama, you can either visit the official GitHub repo and follow the download links from there, or visit the official website and download the installer if you are on a Mac or a Windows machine.

I’m on Linux (Ubuntu distro). So if you’re a Linux user like me, you can run the following command to run the installer script:

```shell
$ curl -fsSL https://ollama.com/install.sh | sh
```

The installation process typically takes a few minutes. During installation, any NVIDIA/AMD GPUs will be auto-detected, so make sure you have the drivers installed. The CPU-only mode works fine, too, but it may be much slower.
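Once the installer finishes, a quick way to confirm that the local Ollama server is up is to query it over HTTP. The sketch below assumes the server listens on its default address, `http://localhost:11434`, and exposes a `GET /api/version` endpoint; the helper name `get_ollama_version` is hypothetical. It returns `None` instead of raising when nothing answers.

```python
import http.client
import json
import urllib.request
from typing import Optional

# Default address of a local Ollama server (assumption: you have not changed it)
DEFAULT_OLLAMA_URL = "http://localhost:11434"


def get_ollama_version(base_url: str = DEFAULT_OLLAMA_URL,
                       timeout: float = 2.0) -> Optional[str]:
    """Return the server's version string, or None if it can't be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            payload = json.loads(resp.read().decode("utf-8"))
    except (OSError, ValueError, http.client.HTTPException):
        # OSError covers URLError, refused connections, and timeouts;
        # ValueError covers a body that is not valid JSON
        return None
    return payload.get("version") if isinstance(payload, dict) else None


if __name__ == "__main__":
    version = get_ollama_version()
    if version is None:
        print("Ollama server not reachable; try starting it with `ollama serve`.")
    else:
        print(f"Ollama server is running (version {version}).")
```

If the check fails right after installation, the server may simply not be running yet.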

Step 2: Get the Model

Next, you can visit the model library to check the list of all model families currently supported. The default model downloaded is the one with the `latest` tag. On the page for each model, you can get more info, such as the size and quantization used.
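Model references follow a `name:tag` pattern, and when you leave the tag off, the `latest` tag is used. The small helper below, `parse_model_ref` (a hypothetical name, not part of any Ollama API), mirrors that convention. It only aims to handle plain `name` and `name:tag` references like the ones used in this tutorial.

```python
def parse_model_ref(ref: str) -> tuple[str, str]:
    """Split a reference such as 'gemma:2b' into (name, tag).

    A missing tag defaults to 'latest'.
    """
    ref = ref.strip()
    if not ref:
        raise ValueError("empty model reference")
    name, sep, tag = ref.rpartition(":")
    if not sep:
        # No colon at all, e.g. 'gemma' -> ('gemma', 'latest')
        return ref, "latest"
    if not name or not tag:
        raise ValueError(f"malformed model reference: {ref!r}")
    return name, tag


if __name__ == "__main__":
    for ref in ["gemma:2b", "gemma", "llama2"]:
        print(ref, "->", parse_model_ref(ref))
```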

You can search through the list of tags to locate the model that you want to run. For each model family, there are typically foundational models of different sizes and instruction-tuned variants. I’m interested in running the Gemma 2B model from the Gemma family of lightweight models from Google DeepMind.

You can run the model using the `ollama run` command to pull it and start interacting with it directly. However, you can also pull the model onto your machine first and then run it. This is very similar to how you work with Docker images.

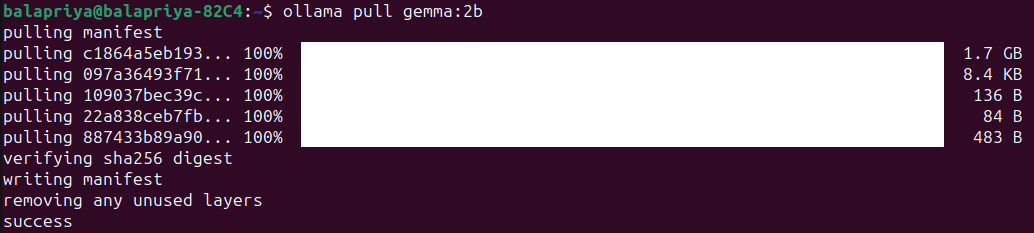

For Gemma 2B, running `ollama pull gemma:2b` downloads the model onto your machine.

The model is about 1.7 GB in size, and the pull should take a minute or two.
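If you would rather script the download than type `ollama pull` by hand, the official Python library (installed with pip in Step 5) provides `ollama.list()` and `ollama.pull()`. The sketch below only pulls models that are not already present locally. It assumes a recent `ollama` Python release in which each entry returned by `ollama.list()` carries a `model` field; `missing_models` and `ensure_models` are hypothetical helper names.

```python
from typing import Iterable


def _with_tag(ref: str) -> str:
    """Append the default ':latest' tag when a reference has none."""
    return ref if ":" in ref else f"{ref}:latest"


def missing_models(wanted: Iterable[str], local: Iterable[str]) -> list[str]:
    """Return the wanted models that are not in the local list, in order."""
    local_refs = {_with_tag(name) for name in local}
    return [name for name in wanted if _with_tag(name) not in local_refs]


def ensure_models(wanted: list[str]) -> None:
    """Pull any wanted model that is not yet available locally."""
    import ollama  # imported here so the pure helpers above work without it

    # Assumption: recent ollama-python releases expose a 'model' field per entry
    local = [entry["model"] for entry in ollama.list()["models"]]
    for name in missing_models(wanted, local):
        print(f"Pulling {name} ...")
        ollama.pull(name)


if __name__ == "__main__":
    ensure_models(["gemma:2b"])
```

Keeping the comparison logic in `missing_models` separates it from the network calls, so you can check it without a server.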

Step 3: Run the Model

Run the model with the `ollama run` command: `ollama run gemma:2b`.

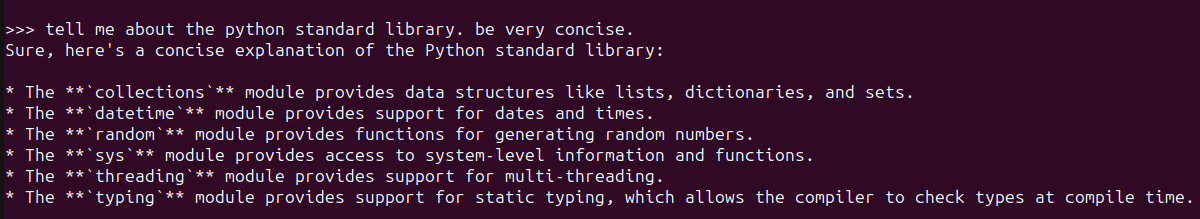

Doing so will start an Ollama REPL where you can interact with the Gemma 2B model.

For a simple question about the Python standard library, the response seems pretty okay and includes the most frequently used modules.
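The REPL keeps earlier turns of the conversation as context for the next reply. The sketch below approximates that loop in Python with the library’s `ollama.chat()` function, which takes the full list of `{"role", "content"}` messages. The model call is passed in as a function so the bookkeeping can be exercised without a running server; `chat_turn` and `ollama_send` are hypothetical names, and this is an approximation of the REPL, not its implementation.

```python
from typing import Callable

Message = dict[str, str]


def chat_turn(history: list[Message], user_text: str,
              send: Callable[[list[Message]], str]) -> str:
    """Add the user's message, get a reply via `send`, and record both turns."""
    history.append({"role": "user", "content": user_text})
    reply = send(history)
    history.append({"role": "assistant", "content": reply})
    return reply


def ollama_send(history: list[Message], model: str = "gemma:2b") -> str:
    """Send the whole conversation to a local model through ollama.chat()."""
    import ollama  # requires `pip install ollama` and a running server

    response = ollama.chat(model=model, messages=history)
    return response["message"]["content"]


if __name__ == "__main__":
    history: list[Message] = []
    while True:
        try:
            text = input(">>> ")
        except EOFError:  # Ctrl-D ends the session
            break
        if text.strip() == "/bye":
            break
        print(chat_turn(history, text, ollama_send))
```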

Step 4: Customize Model Behavior with System Prompts

You can customize LLMs by setting system prompts for a specific desired behavior, like so:

- Set the system prompt for the desired behavior.

- Save the model by giving it a name.

- Exit the REPL and run the model you just created.

Say you want the model to always explain concepts or answer questions in plain English with as little technical jargon as possible. Here’s how you can go about doing it:

```
>>> /set system For all questions asked answer in plain English avoiding technical jargon as much as possible
Set system message.
>>> /save ipe
Created new model 'ipe'
>>> /bye
```

Now run the model you just created with `ollama run ipe`.
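The same customization can also be written down as a Modelfile and built with `ollama create`, which makes it easy to version and share. The sketch below generates a Modelfile with the `FROM` and `SYSTEM` instructions and then calls the CLI. It is meant as a rough equivalent of the `/set system` and `/save` steps, not a byte-for-byte reproduction of what `/save` stores; `build_modelfile` is a hypothetical helper, and the script assumes the `ollama` CLI is on your `PATH`.

```python
import subprocess
from pathlib import Path

SYSTEM_PROMPT = ("For all questions asked answer in plain English "
                 "avoiding technical jargon as much as possible")


def build_modelfile(base_model: str, system_prompt: str) -> str:
    """Return Modelfile text that layers a system prompt on a base model."""
    if '"""' in system_prompt:
        # Triple quotes delimit the SYSTEM value, so they can't appear inside it
        raise ValueError("system prompt must not contain triple quotes")
    return f'FROM {base_model}\nSYSTEM """{system_prompt}"""\n'


if __name__ == "__main__":
    Path("Modelfile").write_text(build_modelfile("gemma:2b", SYSTEM_PROMPT))
    # Same as running: ollama create ipe -f Modelfile
    subprocess.run(["ollama", "create", "ipe", "-f", "Modelfile"], check=True)
```

After this runs, `ollama run ipe` should start a model that carries the plain-English system prompt.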

Step 5: Use Ollama with Python

Running the Ollama command-line client and interacting with LLMs locally at the Ollama REPL is a good start. But often you’ll want to use LLMs in your applications. You can run Ollama as a server on your machine and send it cURL requests.
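For reference, the same HTTP API that cURL would hit can be called with nothing but Python’s standard library. This sketch assumes the default server address and the `POST /api/generate` endpoint with `model`, `prompt`, `stream`, and optional `system` fields; setting `stream` to `false` asks for a single JSON object whose `response` field holds the full answer. The function names are hypothetical.

```python
import json
import urllib.request
from typing import Optional

# Default local server address (assumption: unchanged)
OLLAMA_URL = "http://localhost:11434"


def build_generate_payload(model: str, prompt: str,
                           system: Optional[str] = None) -> dict:
    """Build the JSON body for a non-streaming /api/generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if system is not None:
        payload["system"] = system  # overrides the model's default system prompt
    return payload


def generate(model: str, prompt: str, system: Optional[str] = None,
             base_url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """POST the request and return the generated text."""
    data = json.dumps(build_generate_payload(model, prompt, system)).encode("utf-8")
    req = urllib.request.Request(f"{base_url}/api/generate", data=data,
                                 headers={"Content-Type": "application/json"},
                                 method="POST")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]


if __name__ == "__main__":
    print(generate("gemma:2b", "what is a qubit?"))
```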

But there are simpler ways. If you like using Python, here are a couple of ways you can build LLM apps:

- Using the official Ollama Python library

- Using Ollama with LangChain

Pull the models you need to use before you run the snippets in the following sections.

Using the Ollama Python Library

To use the Ollama Python library, install it using pip: `pip install ollama`.

There’s an official JavaScript library too, which you can use if you prefer developing with JS.

Once you install the Ollama Python library, you can import it in your Python application and work with large language models. Here’s the snippet for a simple language generation task:

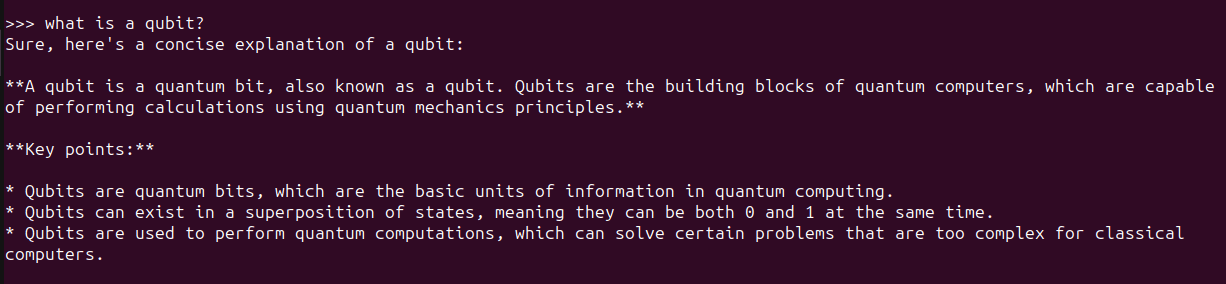

```python
import ollama

# Generate a response from the locally pulled gemma:2b model
response = ollama.generate(model="gemma:2b",
                           prompt="what is a qubit?")
print(response['response'])
```
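For longer answers you may prefer to print text as it arrives. Passing `stream=True` to `ollama.generate()` returns an iterator of partial responses, each with a `response` field holding the next piece of text. The helper `collect_stream` is a hypothetical name; it accepts any iterable of such chunks, which also lets you try it with plain dictionaries.

```python
from typing import Any, Iterable


def collect_stream(chunks: Iterable[Any], echo: bool = True) -> str:
    """Join the 'response' text of streamed chunks, optionally printing it live."""
    parts: list[str] = []
    for chunk in chunks:
        piece = chunk["response"]
        if echo:
            print(piece, end="", flush=True)
        parts.append(piece)
    if echo:
        print()  # end the streamed line
    return "".join(parts)


if __name__ == "__main__":
    import ollama

    stream = ollama.generate(model="gemma:2b", prompt="what is a qubit?", stream=True)
    collect_stream(stream)
```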

Using LangChain

Another way to use Ollama with Python is through LangChain. If you have existing projects that use LangChain, it’s easy to integrate or switch to Ollama.

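Make sure you have LangChain installed. If not, install it using pip: `pip install langchain langchain-community`. The `Ollama` class imported below comes from the `langchain-community` package.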

Here’s an example:

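```python
from langchain_community.llms import Ollama

# Wrap the locally served llama2 model as a LangChain LLM
llm = Ollama(model="llama2")

# invoke() returns the generated text; print it when running as a script
print(llm.invoke("tell me about partial functions in python"))
```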

Using LLMs like this in Python apps makes it easier to switch between different LLMs depending on the application.
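One way to act on that is to choose the model per task in one place and build the LangChain wrapper from it. The task names and model choices in `MODEL_FOR_TASK` below are hypothetical; swap in whichever models you have pulled.

```python
# Hypothetical task-to-model mapping; use models you have already pulled
MODEL_FOR_TASK = {
    "explain": "llama2",
    "quick": "gemma:2b",
}


def model_for(task: str, default: str = "gemma:2b") -> str:
    """Look up the model to use for a task, falling back to a default."""
    return MODEL_FOR_TASK.get(task, default)


def make_llm(task: str):
    """Build a LangChain Ollama LLM for the given task."""
    from langchain_community.llms import Ollama  # same import as the LangChain snippet

    return Ollama(model=model_for(task))


if __name__ == "__main__":
    llm = make_llm("quick")
    print(llm.invoke("tell me about partial functions in python"))
```

Changing which model a task uses then means editing one dictionary entry rather than every call site.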

Wrapping Up

With Ollama, you can run large language models locally and build LLM-powered apps with just a few lines of Python code. Here we explored how to interact with LLMs at the Ollama REPL as well as from within Python applications.

Next, we’ll try building an app using Ollama and Python. Until then, if you’re looking to dive deep into LLMs, check out 7 Steps to Mastering Large Language Models (LLMs).

Bala Priya C is a developer and technical writer from India. She likes working at the intersection of math, programming, data science, and content creation. Her areas of interest and expertise include DevOps, data science, and natural language processing. She enjoys reading, writing, coding, and coffee! Currently, she’s working on learning and sharing her knowledge with the developer community by authoring tutorials, how-to guides, opinion pieces, and more. Bala also creates engaging resource overviews and coding tutorials.