Google DeepMind researchers developed NATURAL PLAN, a benchmark for evaluating the ability of LLMs to plan real-world tasks based on natural language prompts.

The next evolution of AI is to have it leave the confines of a chat platform and take on agentic roles to complete tasks across platforms on our behalf. But that's harder than it sounds.

Planning tasks like scheduling a meeting or compiling a holiday itinerary might seem simple for us. Humans are good at reasoning through multiple steps and predicting whether or not a course of action will accomplish the desired objective.

You might find that easy, but even the best AI models struggle with planning. Could we benchmark them to see which LLM is best at planning?

The NATURAL PLAN benchmark tests LLMs on 3 planning tasks:

- Trip planning – Planning a trip itinerary under flight and destination constraints

- Meeting planning – Scheduling meetings with multiple friends in different locations

- Calendar scheduling – Scheduling work meetings between multiple people given existing schedules and various constraints
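The calendar scheduling task is a small constraint-satisfaction problem. The benchmark states it in prose, and the model has to solve it implicitly. The sketch below makes the underlying search explicit. It is illustrative only and is not the researchers' code. It assumes a hypothetical representation: busy blocks are (start, end) minutes since midnight, the workday runs from 9:00 to 17:00, and candidate start times are checked every 30 minutes.

```python
from typing import Optional

# Hypothetical representation: (start, end) in minutes since midnight, end exclusive.
Interval = tuple[int, int]


def earliest_slot(busy: dict[str, list[Interval]], duration: int,
                  day_start: int = 9 * 60, day_end: int = 17 * 60,
                  step: int = 30) -> Optional[Interval]:
    """Return the earliest slot of `duration` minutes where nobody is busy, or None."""
    start = day_start
    while start + duration <= day_end:
        end = start + duration
        # A candidate slot works only if it overlaps none of anyone's busy blocks.
        if all(end <= b_start or start >= b_end
               for blocks in busy.values()
               for b_start, b_end in blocks):
            return (start, end)
        start += step
    return None


def fmt(minutes: int) -> str:
    """Format minutes since midnight as HH:MM."""
    return f"{minutes // 60:02d}:{minutes % 60:02d}"


# Hypothetical schedules for three participants.
schedules = {
    "Alice": [(9 * 60, 10 * 60), (13 * 60, 14 * 60)],
    "Bob": [(10 * 60, 11 * 60 + 30)],
    "Carol": [(11 * 60 + 30, 12 * 60)],
}
slot = earliest_slot(schedules, duration=30)
if slot:
    print(f"{fmt(slot[0])}-{fmt(slot[1])}")  # 12:00-12:30
```

Each extra participant adds more busy blocks that every candidate slot must avoid. A program checks these exhaustively, whereas an LLM has to track them all in a single pass of text generation.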

The experiment began with few-shot prompting, where the models were provided with 5 examples of prompts and corresponding correct answers. They were then given planning prompts of varying difficulty.
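Mechanically, few-shot prompting means placing solved examples in front of the new problem so the model can imitate the pattern. The following is a minimal sketch under stated assumptions: the `Example` record and the plain "TASK:/SOLUTION:" layout are hypothetical and are not the benchmark's actual prompt format.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """A solved planning problem used as an in-context demonstration (hypothetical format)."""
    task: str
    solution: str


def build_few_shot_prompt(examples: list[Example], new_task: str, k: int = 5) -> str:
    """Concatenate the first k solved examples, then the unsolved task.

    The model is expected to continue the text after the final 'SOLUTION:'.
    """
    parts = [f"TASK: {ex.task}\nSOLUTION: {ex.solution}" for ex in examples[:k]]
    parts.append(f"TASK: {new_task}\nSOLUTION:")
    return "\n\n".join(parts)


# Hypothetical demonstrations; real ones would be full planning problems with answers.
demos = [Example(f"Plan trip #{i}", f"Itinerary #{i}") for i in range(10)]
prompt = build_few_shot_prompt(demos, "Plan a 5-day trip to Oslo and Berlin.")
print(prompt.count("TASK:"))  # 6: five demonstrations plus the new task
```

Raising `k` turns this into many-shot prompting. The only hard limit is how much text fits in the model's context window.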

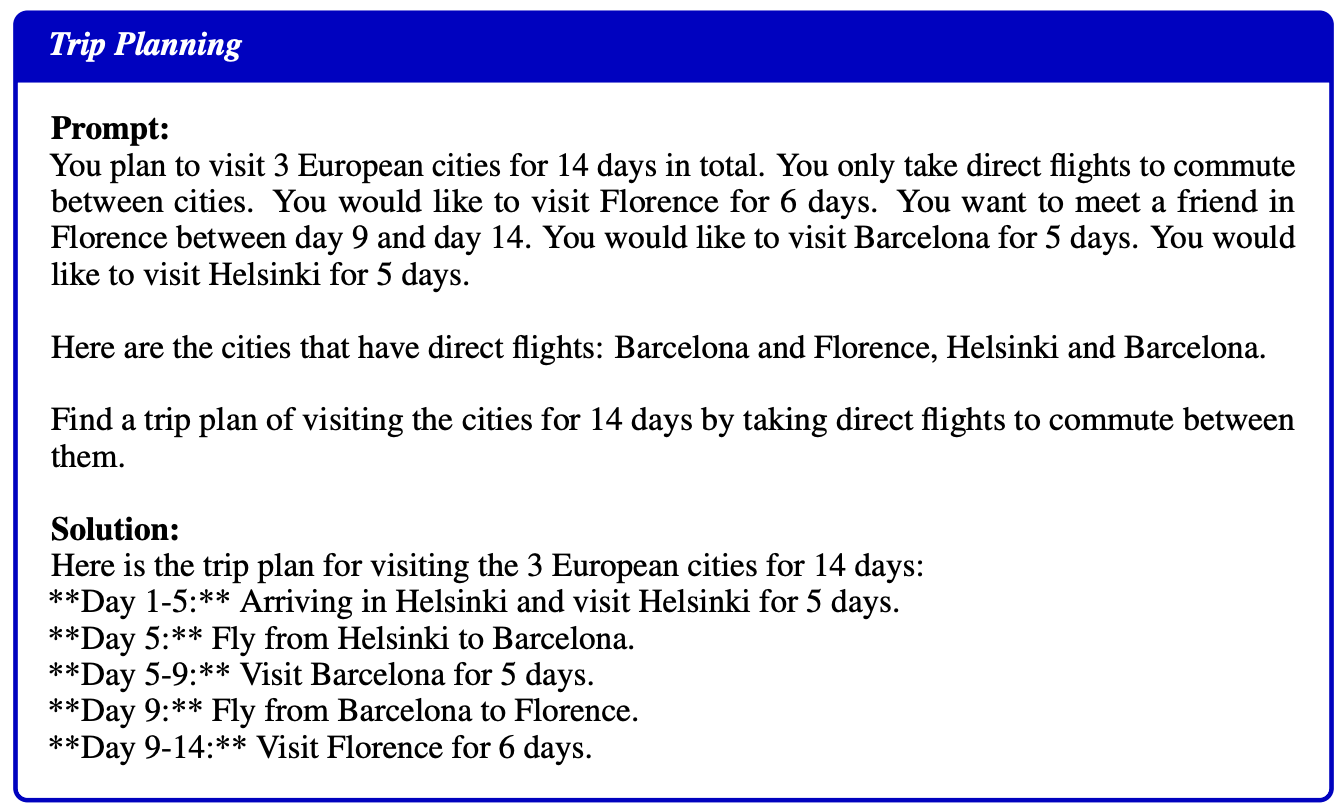

Here's an example of a prompt and solution provided to the models:

Results

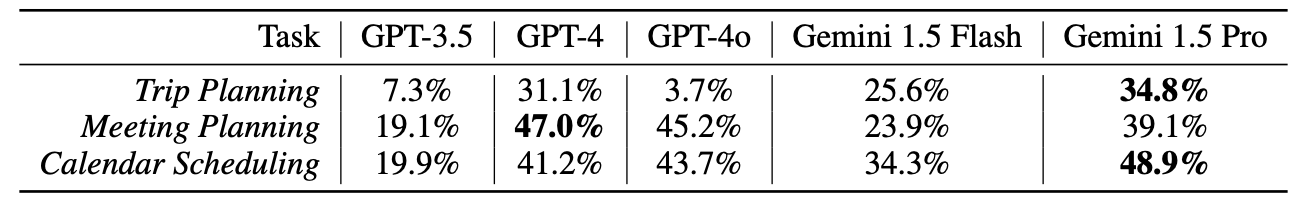

The researchers tested GPT-3.5, GPT-4, GPT-4o, Gemini 1.5 Flash, and Gemini 1.5 Pro, none of which performed very well on these tests.

The results must have gone down well in the DeepMind office, though, as Gemini 1.5 Pro came out on top.

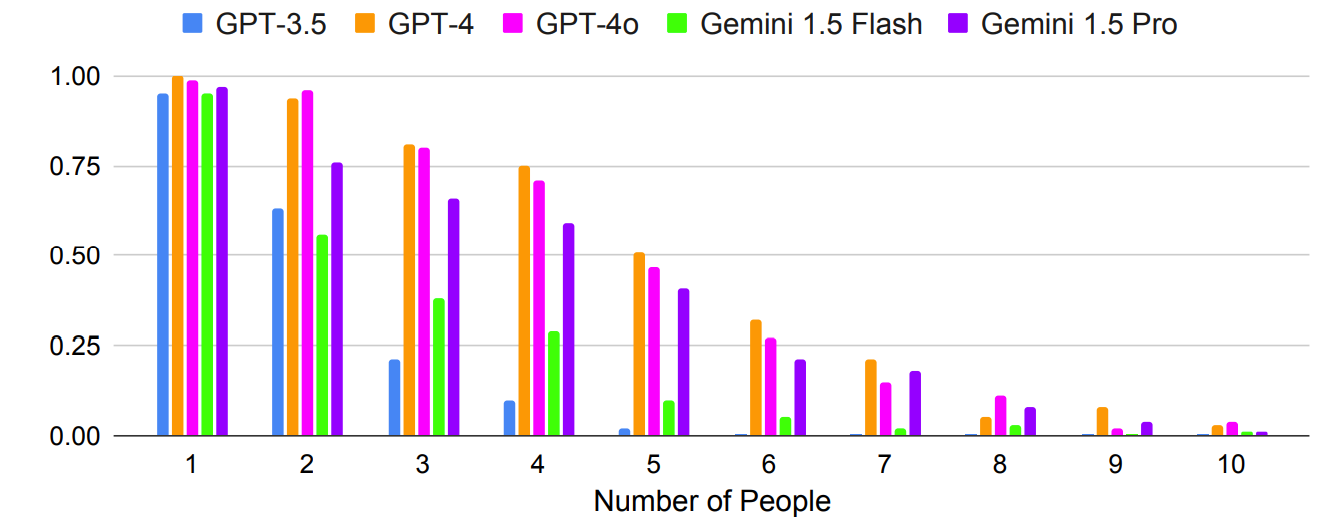

As expected, the results got dramatically worse with more complex prompts in which the number of people or cities was increased. For example, look at how quickly accuracy dropped as more people were added to the meeting planning test.

Could multi-shot prompting improve accuracy? The research indicates that it can, but only if the model has a large enough context window.

Gemini 1.5 Pro's larger context window enables it to leverage more in-context examples than the GPT models.

The researchers found that in Trip Planning, increasing the number of shots from 1 to 800 improves the accuracy of Gemini 1.5 Pro from 2.7% to 39.9%.
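The link between context window size and shot count is plain arithmetic: every demonstration consumes tokens, and the task plus the answer must still fit. The sketch below assumes hypothetical numbers (1,000 tokens per example, a 500-token task, 1,000 tokens reserved for the answer) and uses illustrative window sizes. These are not figures from the paper.

```python
def max_shots(example_tokens: list[int], task_tokens: int, context_window: int,
              reserve_for_answer: int = 1_000) -> int:
    """Largest k such that the first k examples, the task and an answer budget all fit."""
    budget = context_window - task_tokens - reserve_for_answer
    used = 0
    k = 0
    for tokens in example_tokens:
        if used + tokens > budget:
            break  # the next example would overflow the window
        used += tokens
        k += 1
    return k


# Hypothetical: 800 demonstrations of roughly 1,000 tokens each.
examples = [1_000] * 800
for window in (8_192, 128_000, 1_000_000):
    print(window, max_shots(examples, task_tokens=500, context_window=window))
# 8192 6
# 128000 126
# 1000000 800
```

Under these assumptions, only the million-token window can hold all 800 examples. This mirrors, in simplified form, why a longer context lets a model benefit from more shots.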

The paper noted, “These results show the promise of in-context planning where the long-context capabilities enable LLMs to leverage further context to improve Planning.”

A surprising result was that GPT-4o was really bad at Trip Planning. The researchers found that it struggled “to understand and respect the flight connectivity and travel date constraints.”

Another strange result was that self-correction led to a significant performance drop across all models. When the models were prompted to check their work and make corrections, they made more errors.

Interestingly, the stronger models, such as GPT-4 and Gemini 1.5 Pro, suffered bigger losses than GPT-3.5 when self-correcting.

Agentic AI is an exciting prospect, and we're already seeing some practical use cases in Microsoft Copilot agents.

But the results of the NATURAL PLAN benchmark tests show that we've got some way to go before AI can handle more complex planning.

The DeepMind researchers concluded that “NATURAL PLAN is very hard for state-of-the-art models to solve.”

It seems AI won't be replacing travel agents and personal assistants quite yet.