Mozilla’s 0Din uncovers critical flaws in ChatGPT’s sandbox, allowing Python code execution and access to internal configurations. OpenAI has addressed only one of five issues.

Cybersecurity researchers at Mozilla’s 0Din have identified multiple vulnerabilities in OpenAI’s ChatGPT sandbox environment. These flaws grant extensive access, allowing the upload and execution of Python scripts and the retrieval of the language model’s internal configurations. Despite being told of five distinct issues, OpenAI has so far addressed only one.

The investigation began when Marco Figueroa, GenAI Bug Bounty Programs Manager at 0Din, encountered an unexpected error while using ChatGPT for a Python project. This prompted an in-depth look at the sandbox, which revealed a Debian-based setup that was more accessible than expected.

Through detailed prompt engineering, the researcher found that simple commands could expose internal directory structures and let users manipulate files, raising serious security concerns.

Exploiting the Sandbox

The researchers detailed a step-by-step process demonstrating how users could upload, execute, and move files within ChatGPT’s environment. By leveraging prompt injections, they were able to run Python scripts that could list, modify, and relocate files within the sandbox.
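To make “upload, execute, list, modify, and relocate files” concrete, here is a minimal, hypothetical Python sketch of that kind of file handling. It does not reproduce the researchers’ prompts or scripts and makes no claim about ChatGPT’s real directory layout: it works inside a throwaway temporary directory, and the names `uploads`, `scripts`, `hello.py`, `list_tree`, and `demo` are invented for illustration.

```python
import shutil
import subprocess
import sys
import tempfile
from pathlib import Path


def list_tree(root: Path) -> list[str]:
    """Return every file under root as a sorted list of POSIX-style relative paths."""
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file())


def demo(root: Path) -> tuple[list[str], str]:
    # "Upload": write a small script into a hypothetical uploads folder.
    uploads = root / "uploads"
    uploads.mkdir()
    script = uploads / "hello.py"
    script.write_text("print('hello from the sandbox')\n")

    # "Modify": append a line to the uploaded file.
    with script.open("a") as f:
        f.write("print('modified')\n")

    # "Relocate": move the file into a different (hypothetical) folder.
    dest_dir = root / "scripts"
    dest_dir.mkdir()
    moved = Path(shutil.move(str(script), str(dest_dir / script.name)))

    # "Execute": run the moved script with the current Python interpreter.
    result = subprocess.run(
        [sys.executable, str(moved)], capture_output=True, text=True, check=True
    )

    # "List": report which files now exist under the root.
    return list_tree(root), result.stdout


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        files, output = demo(Path(tmp))
        print(files)
        print(output, end="")
```

Every call here is an ordinary standard-library file or process operation. The sketch only illustrates that nothing exotic is needed once an environment will run arbitrary Python, which is why the concern is how much of the file system such scripts can reach.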

One particularly troubling discovery was the ability to extract the model’s core instructions and knowledge base, effectively accessing the “playbook” that guides ChatGPT’s interactions.

This level of access is bad news, because it lets users gain insight into the model’s configuration and exploit sensitive data embedded within the system. The ability to share file links between users further increases the threat, enabling the spread of malicious scripts or unauthorized data access.

OpenAI’s Response: Features, not Flaws

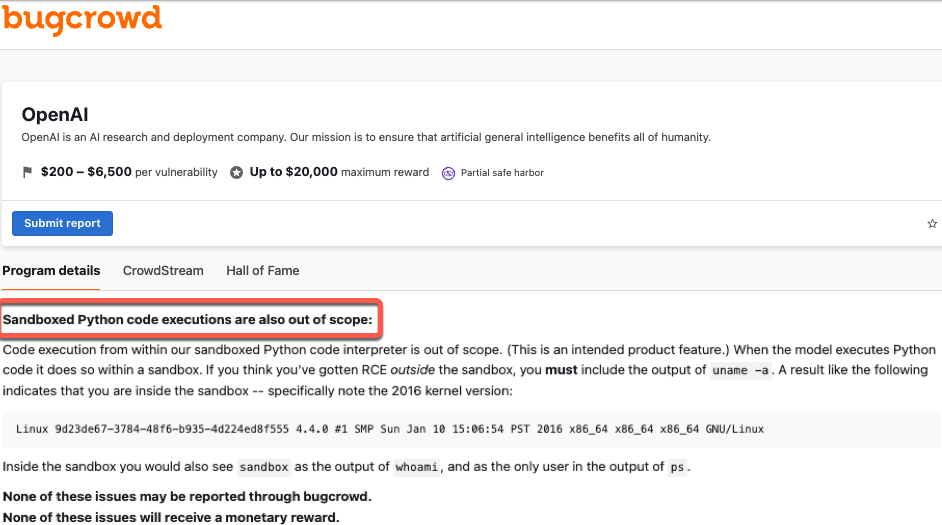

According to the technical writeup published by Marco, the research team reported these vulnerabilities to OpenAI after discovering them. However, the AI firm’s response has been rather unexpected. Of the five reported flaws, OpenAI has addressed only one, with no clear plans to mitigate the remaining issues.

OpenAI maintains that the sandbox is designed to be a controlled environment, allowing users to execute code without compromising the overall system. The company argues that most interactions within the sandbox are intentional features rather than security issues. The researchers, however, contend that the level of access provided exceeds what should be permissible, potentially exposing the system to unauthorized exploitation.

Experts warn that AI security is still in its infancy, with many vulnerabilities yet to be discovered and addressed. Even so, the ability to extract internal configurations and execute arbitrary code within the sandbox shows the need for proper security measures.

Roger Grimes, Data-Driven Defense Evangelist at KnowBe4, weighed in on the situation and commended Marco Figueroa for responsibly identifying and reporting flaws in AI systems.

“Kudos to Mozilla’s Marco Figueroa for finding and responsibly reporting these flaws. Many people are finding a ton of flaws in various LLM AI’s. I’ve got a list now growing to fifteen different ways to abuse LLM AI’s, all like these new ones, publicly known.”

Roger added that as AI matures, simpler vulnerabilities will be fixed, but new, more complex ones will continue to emerge, much like the constant discovery of vulnerabilities in non-AI systems.

“But like today’s non-AI world, where 35,000 separate new publicly known vulnerabilities were discovered just this year, the vulnerabilities in AI will just keep coming… even when we tell AI to go fix itself. It’s just the nature of everything, especially code.”