Image by Author

Mistral AI, one of the world's leading AI research companies, has recently released the base model for Mistral 7B v0.2.

This open-source language model was unveiled during the company's hackathon event on March 23, 2024.

The Mistral 7B models have 7.3 billion parameters, which is compact by current standards, yet according to Mistral AI they outperform Llama 2 13B and Llama 1 34B on almost all benchmarks. The latest v0.2 model introduces a 32k context window, among other improvements, which lets it process and generate much longer passages of text.

Additionally, the newly released version is the base model of the instruction-tuned variant, "Mistral-7B-Instruct-V0.2," which was released last year.

In this tutorial, I'll show you how to access and fine-tune this language model on Hugging Face.

We will be fine-tuning the Mistral 7B v0.2 base model using Hugging Face's AutoTrain functionality.

Hugging Face is renowned for democratizing access to machine learning models, allowing everyday users to develop advanced AI solutions.

AutoTrain, a Hugging Face feature, automates the process of model training, making it accessible and efficient.

It helps users select the best parameters and training techniques when fine-tuning models, a task that can otherwise be daunting and time-consuming.

Here are the seven steps to fine-tuning your Mistral-7B model:

1. Setting up the environment

You must first create an account with Hugging Face, and then create a model repository.

To do this, simply follow the steps provided in this link and come back to this tutorial.

We will be training the model in Python. When it comes to selecting a notebook environment for training, you can use Kaggle Notebooks or Google Colab, both of which provide free access to GPUs.

If the training process takes too long, you might want to switch to a cloud platform like AWS SageMaker or Azure ML.

Finally, run the following pip installs before you start coding along with this tutorial:

```
!pip install -U autotrain-advanced
!pip install datasets transformers
```
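To confirm the installs worked before going further, you can query the installed versions. This is a minimal sketch using only the standard library's `importlib.metadata`; the distribution names are the ones from the pip commands above, and the helper name `installed_version` is hypothetical.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a distribution, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Distribution names as used in the pip commands above
    for package in ("autotrain-advanced", "datasets", "transformers"):
        version = installed_version(package)
        print(f"{package}: {version or 'not installed'}")
```

If any package reports "not installed," rerun the pip commands (and restart the notebook kernel if your environment requires it).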

2. Preparing your dataset

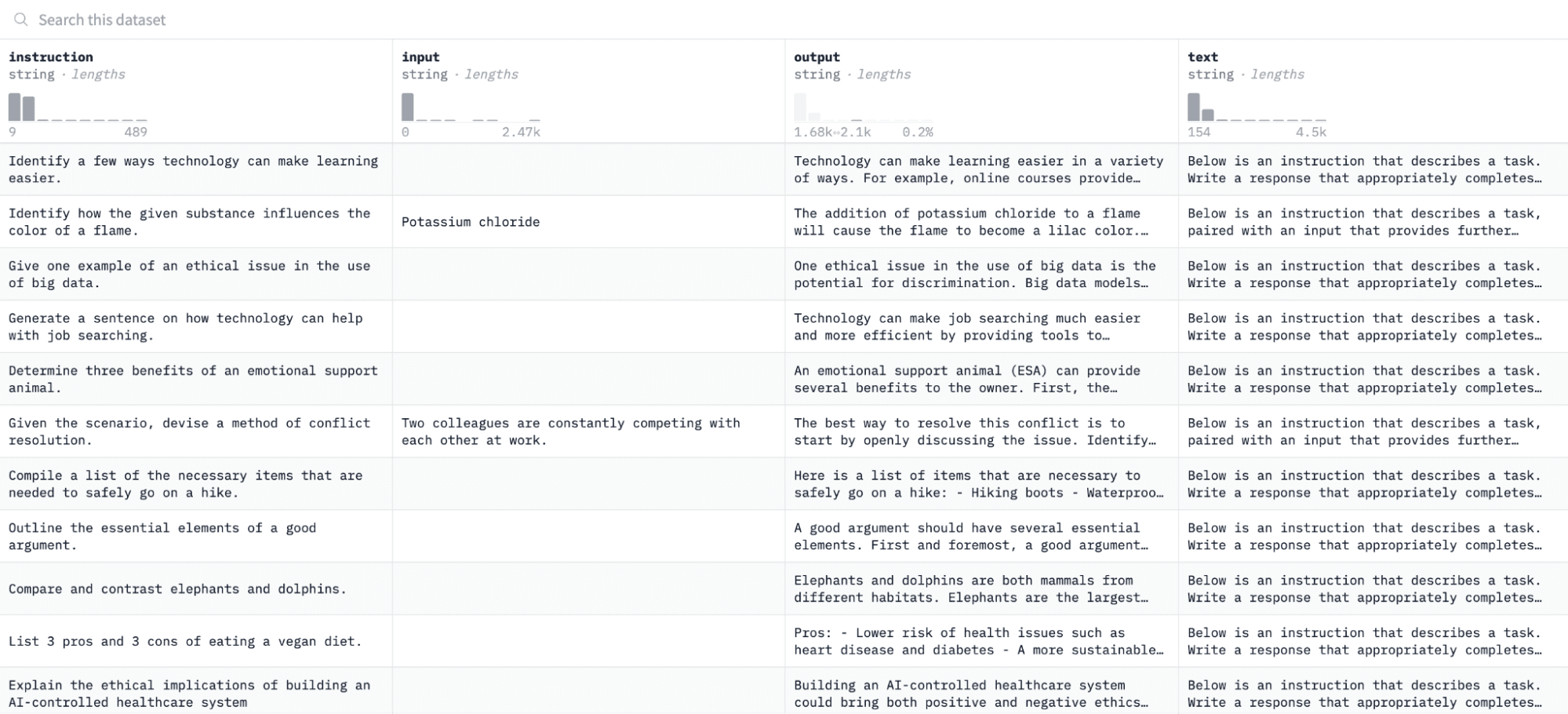

On this tutorial, we shall be utilizing the Alpaca dataset on Hugging Face, which appears to be like like this:

We are going to fine-tune the mannequin on pairs of directions and outputs and assess its capacity to reply to the given instruction within the analysis course of.

To entry and put together this dataset, run the next traces of code:

```python
import os

import pandas as pd
from datasets import load_dataset


# Load the dataset and keep only examples that need no extra context
def preprocess_dataset(dataset_name, split_ratio="train[:10%]", input_col="input"):
    dataset = load_dataset(dataset_name, split=split_ratio)
    df = pd.DataFrame(dataset)
    # Alpaca rows with an empty "input" field are self-contained instruction/output pairs
    chat_df = df[df[input_col] == ""].reset_index(drop=True)
    return chat_df


# Format each row as a single text string for AutoTrain
def format_interaction(row):
    formatted_text = f"[Begin] {row['instruction']} [End] {row['output']} [Close]"
    return formatted_text


# Process and save the dataset
if __name__ == "__main__":
    dataset_name = "tatsu-lab/alpaca"
    processed_data = preprocess_dataset(dataset_name)
    processed_data["formatted_text"] = processed_data.apply(format_interaction, axis=1)

    save_path = "formatted_data/training_dataset"
    os.makedirs(save_path, exist_ok=True)
    file_path = os.path.join(save_path, "formatted_train.csv")
    processed_data[["formatted_text"]].to_csv(file_path, index=False)

    print("Dataset formatted and saved.")
```

The first function loads the first 10% of the Alpaca training split using the `datasets` library and keeps only the examples whose `input` field is empty, so every remaining example is a self-contained instruction paired with its output. The second function turns each row into a single text string that AutoTrain can train on.

After running the above code, the dataset will be loaded, formatted, and saved to the specified path. When you open your formatted dataset, you should see a single column labeled "formatted_text."
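To see exactly what one training row looks like, the sketch below applies the same template as `format_interaction` to a single record (a plain dict standing in for a pandas row, using one of the Alpaca samples shown later in this tutorial) and round-trips it through a CSV with a single `formatted_text` column. It uses only the standard library, so it runs without downloading Alpaca; the helpers `write_training_csv` and `read_training_csv` are hypothetical names for illustration.

```python
import csv
import os
import tempfile


def format_interaction(row):
    """Same template as the tutorial: wrap an instruction/output pair in marker strings."""
    return f"[Begin] {row['instruction']} [End] {row['output']} [Close]"


def write_training_csv(rows, path):
    """Write one formatted example per line under a single 'formatted_text' header."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["formatted_text"])
        for row in rows:
            writer.writerow([format_interaction(row)])


def read_training_csv(path):
    """Read the CSV back as a list of dicts keyed by column name."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


if __name__ == "__main__":
    sample = {
        "instruction": "What are the three primary colors?",
        "input": "",
        "output": "The three primary colors are red, blue, and yellow.",
    }
    # Write to a throwaway directory so nothing is left behind
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "formatted_train.csv")
        write_training_csv([sample], path)
        for record in read_training_csv(path):
            print(record["formatted_text"])
```

The `csv` module quotes any text that contains commas, just as pandas' `to_csv` does, so answers with commas stay in one column.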

3. Setting up your training environment

Now that you've successfully prepared the dataset, let's move on to setting up your model training environment.

To do this, define the following parameters:

```python
project_name = "mistralai"
model_name = "alpindale/Mistral-7B-v0.2-hf"
push_to_hub = True
hf_token = "your_token_here"
repo_id = "your_repo_here"
```

Here's a breakdown of these settings:

- You can specify any project_name. A folder with this name will be created to hold all your project and training files.

- The model_name parameter is the model you'd like to fine-tune. In this case, I've specified a path to the Mistral-7B v0.2 base model on Hugging Face.

- The hf_token variable must be set to your Hugging Face token, which can be obtained by navigating to this link.

- Your repo_id must be set to the Hugging Face model repository that you created in the first step of this tutorial. For example, my repository ID is NatasshaS/Model2.
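Hard-coding `hf_token` in a notebook makes it easy to leak when you share the file. A common alternative, sketched below, is to read it from the `HF_TOKEN` environment variable (which the `huggingface_hub` library also recognizes) and to sanity-check that `repo_id` has the `owner/name` form, such as `NatasshaS/Model2`. Both helper names are hypothetical, and the check is deliberately loose: it does not contact the Hub or verify that the repository exists.

```python
import os


def resolve_hf_token(explicit_token=None):
    """Prefer a real explicitly passed token, otherwise fall back to the HF_TOKEN environment variable."""
    if explicit_token and explicit_token != "your_token_here":
        return explicit_token
    return os.environ.get("HF_TOKEN")


def looks_like_repo_id(repo_id):
    """Loose offline check for the 'owner/name' form used by Hugging Face repositories."""
    parts = repo_id.split("/")
    return len(parts) == 2 and all(parts) and not any(ch.isspace() for ch in repo_id)


if __name__ == "__main__":
    hf_token = resolve_hf_token()
    repo_id = "NatasshaS/Model2"
    print("token found:", hf_token is not None)
    print("repo_id looks valid:", looks_like_repo_id(repo_id))
```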

4. Configuring model parameters

Before fine-tuning our model, we must define the training parameters. These control key aspects of training, such as how long the model trains, how it learns from the data, and how it avoids overfitting.

You can set the following parameters for your model:

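```python
use_fp16 = True       # train with fp16 mixed precision
use_peft = True       # train LoRA adapters instead of all model weights
use_int4 = True       # load the base model in 4-bit precision to save GPU memory
learning_rate = 1e-4  # step size for the optimizer
num_epochs = 3        # number of full passes over the training data
batch_size = 4        # training examples per batch
block_size = 512      # maximum number of tokens per training sequence
warmup_ratio = 0.05   # fraction of training steps used to ramp up the learning rate
weight_decay = 0.005  # regularization that shrinks large weights
lora_r = 8            # rank of the LoRA update matrices
lora_alpha = 16       # LoRA scaling factor (the update is scaled by lora_alpha / lora_r)
lora_dropout = 0.01   # dropout applied inside the LoRA layers
```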
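To get a feel for what these numbers mean in practice, the sketch below estimates the optimizer schedule and the size of a LoRA update. It assumes a single GPU, no gradient accumulation, one example per sample (no packing), and a warmup length of `ceil(total_steps * warmup_ratio)` as in Hugging Face's `Trainer`; AutoTrain may batch or pack text differently, so treat the results as estimates. The 1,000-example dataset size is illustrative, and the 4096×4096 projection matches Mistral 7B's hidden size.

```python
import math


def training_schedule(num_examples, batch_size, num_epochs, warmup_ratio):
    """Estimate optimizer steps, assuming one example per sample and no gradient accumulation."""
    steps_per_epoch = math.ceil(num_examples / batch_size)  # a last partial batch still counts
    total_steps = steps_per_epoch * num_epochs
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    return steps_per_epoch, total_steps, warmup_steps


def lora_summary(d_in, d_out, r, alpha):
    """LoRA trains B (d_out x r) and A (r x d_in); the update B @ A is scaled by alpha / r."""
    trainable = r * (d_in + d_out)
    frozen = d_in * d_out
    return trainable, frozen, alpha / r


if __name__ == "__main__":
    steps = training_schedule(num_examples=1000, batch_size=4, num_epochs=3, warmup_ratio=0.05)
    print("steps per epoch, total steps, warmup steps:", steps)
    trainable, frozen, scale = lora_summary(d_in=4096, d_out=4096, r=8, alpha=16)
    print(f"LoRA params: {trainable:,} trainable vs {frozen:,} frozen "
          f"({trainable / frozen:.2%}), update scale {scale}")
```

With `lora_r = 8`, each adapted 4096×4096 matrix gains only 65,536 trainable parameters, which is why LoRA combined with 4-bit loading can fit on a free notebook GPU.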

5. Setting environment variables

Let's now prepare our training environment by setting some environment variables.

The `autotrain` command in the next step reads these values through shell variable expansion (for example, `${MODEL_NAME}`), so this is how settings such as our project name and training preferences reach AutoTrain:

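```python
import os

# Environment variables must be strings, so every value goes through str()
os.environ["PROJECT_NAME"] = project_name
os.environ["MODEL_NAME"] = model_name
os.environ["PUSH_TO_HUB"] = str(push_to_hub)
os.environ["HF_TOKEN"] = hf_token
os.environ["REPO_ID"] = repo_id
os.environ["LEARNING_RATE"] = str(learning_rate)
os.environ["NUM_EPOCHS"] = str(num_epochs)
os.environ["BATCH_SIZE"] = str(batch_size)
os.environ["BLOCK_SIZE"] = str(block_size)
os.environ["WARMUP_RATIO"] = str(warmup_ratio)
os.environ["WEIGHT_DECAY"] = str(weight_decay)
os.environ["USE_FP16"] = str(use_fp16)
os.environ["USE_PEFT"] = str(use_peft)
os.environ["USE_INT4"] = str(use_int4)
os.environ["LORA_R"] = str(lora_r)
os.environ["LORA_ALPHA"] = str(lora_alpha)
os.environ["LORA_DROPOUT"] = str(lora_dropout)
```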
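Because environment variables are always strings, the boolean settings arrive in the shell as the text `"True"` or `"False"`, and that exact text is what the conditions in the training command compare against. The hypothetical helper below applies the same `str()` conversion to a whole config dict, using the upper-case naming convention from above, and returns what the shell will see.

```python
import os


def export_config(config):
    """Export each entry as an upper-case environment variable, converting values with str()."""
    exported = {}
    for key, value in config.items():
        name = key.upper()
        os.environ[name] = str(value)
        exported[name] = os.environ[name]
    return exported


if __name__ == "__main__":
    config = {"learning_rate": 1e-4, "num_epochs": 3, "use_fp16": True, "lora_dropout": 0.01}
    for name, value in export_config(config).items():
        print(f"{name}={value!r}")
```

Note that `1e-4` is exported as `"0.0001"` and `True` as `"True"`; a lowercase `"true"` would not match the shell comparison.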

6. Initiate model training

Finally, let's start training the model using the `autotrain` command. This step involves specifying your model, dataset, and training configuration, as shown below:

```
!autotrain llm \
    --train \
    --model "${MODEL_NAME}" \
    --project-name "${PROJECT_NAME}" \
    --data-path "formatted_data/training_dataset/" \
    --text-column "formatted_text" \
    --lr "${LEARNING_RATE}" \
    --batch-size "${BATCH_SIZE}" \
    --epochs "${NUM_EPOCHS}" \
    --block-size "${BLOCK_SIZE}" \
    --warmup-ratio "${WARMUP_RATIO}" \
    --lora-r "${LORA_R}" \
    --lora-alpha "${LORA_ALPHA}" \
    --lora-dropout "${LORA_DROPOUT}" \
    --weight-decay "${WEIGHT_DECAY}" \
    $( [ "$USE_FP16" = "True" ] && echo "--mixed-precision fp16" ) \
    $( [ "$USE_PEFT" = "True" ] && echo "--use-peft" ) \
    $( [ "$USE_INT4" = "True" ] && echo "--quantization int4" ) \
    $( [ "$PUSH_TO_HUB" = "True" ] && echo "--push-to-hub --token ${HF_TOKEN} --repo-id ${REPO_ID}" )
```

Make sure to change the data-path to wherever your training dataset is located.
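Shell conditionals inside a notebook `!` command can be fragile, because their syntax depends on which shell the notebook uses. As a hypothetical alternative (not part of AutoTrain itself), you can build the same argument list in Python and launch it with `subprocess`. The sketch reuses exactly the flags from the command above; if your installed AutoTrain version names them differently, check `autotrain llm --help` and adjust.

```python
import shlex
import subprocess


def build_autotrain_args(cfg):
    """Assemble the `autotrain llm` command as an argument list, mirroring the shell command."""
    args = [
        "autotrain", "llm", "--train",
        "--model", cfg["model_name"],
        "--project-name", cfg["project_name"],
        "--data-path", cfg["data_path"],
        "--text-column", "formatted_text",
        "--lr", str(cfg["learning_rate"]),
        "--batch-size", str(cfg["batch_size"]),
        "--epochs", str(cfg["num_epochs"]),
        "--block-size", str(cfg["block_size"]),
        "--warmup-ratio", str(cfg["warmup_ratio"]),
        "--lora-r", str(cfg["lora_r"]),
        "--lora-alpha", str(cfg["lora_alpha"]),
        "--lora-dropout", str(cfg["lora_dropout"]),
        "--weight-decay", str(cfg["weight_decay"]),
    ]
    # Optional flags are appended only when the matching setting is truthy,
    # replacing the $( ... && echo ... ) substitutions of the shell version
    if cfg.get("use_fp16"):
        args += ["--mixed-precision", "fp16"]
    if cfg.get("use_peft"):
        args.append("--use-peft")
    if cfg.get("use_int4"):
        args += ["--quantization", "int4"]
    if cfg.get("push_to_hub"):
        args += ["--push-to-hub", "--token", cfg["hf_token"], "--repo-id", cfg["repo_id"]]
    return args


def run_autotrain(args):
    """Launch training; check=True raises CalledProcessError if autotrain exits with an error."""
    subprocess.run(args, check=True)


if __name__ == "__main__":
    cfg = {
        "model_name": "alpindale/Mistral-7B-v0.2-hf",
        "project_name": "mistralai",
        "data_path": "formatted_data/training_dataset/",
        "learning_rate": 1e-4, "batch_size": 4, "num_epochs": 3, "block_size": 512,
        "warmup_ratio": 0.05, "weight_decay": 0.005,
        "lora_r": 8, "lora_alpha": 16, "lora_dropout": 0.01,
        "use_fp16": True, "use_peft": True, "use_int4": True,
        "push_to_hub": False,  # set True and add "hf_token" and "repo_id" to upload
    }
    # Print the command for inspection before launching anything
    print(shlex.join(build_autotrain_args(cfg)))
    # run_autotrain(build_autotrain_args(cfg))
```

Passing a list to `subprocess.run` avoids shell quoting entirely, and printing the command first lets you check the flags before a long training run starts.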

7. Evaluating the mannequin

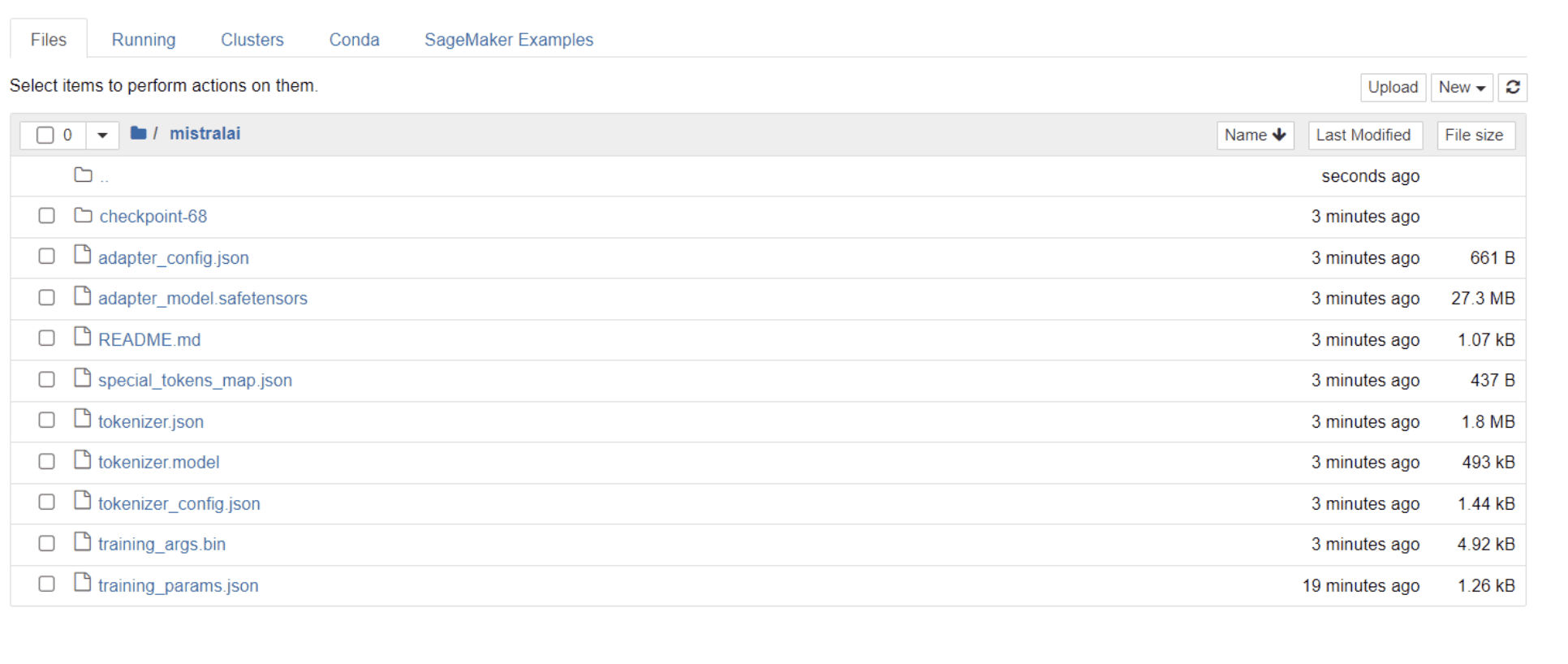

As soon as your mannequin has completed coaching, it’s best to see a folder seem in your listing with the identical title as your mission identify.

In my case, this folder is titled “mistralai,” as seen within the picture beneath:

Inside this folder, yow will discover recordsdata that embody your mannequin weights, hyperparameters, and structure particulars.
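To see exactly what AutoTrain wrote, you can list the folder's contents. This is a minimal standard-library sketch, assuming the folder is named after `project_name` (here `mistralai`); the exact file names depend on your AutoTrain version and settings, so the code does not assume any particular ones. The helper name `list_project_files` is hypothetical.

```python
from pathlib import Path


def list_project_files(project_dir):
    """Return (relative path, size in bytes) for every file under project_dir, sorted by path."""
    root = Path(project_dir)
    if not root.is_dir():
        return []
    return sorted(
        (path.relative_to(root).as_posix(), path.stat().st_size)
        for path in root.rglob("*")
        if path.is_file()
    )


if __name__ == "__main__":
    files = list_project_files("mistralai")
    if not files:
        print("No files found; check that training finished and the folder name matches project_name.")
    for name, size in files:
        print(f"{size / 1e6:10.1f} MB  {name}")
```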

Let's now check whether this fine-tuned model can respond accurately to a question from our dataset. To do this, we first run the following lines of code to print 5 sample inputs and outputs from our dataset:

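```python
from datasets import load_dataset

# Load the Alpaca training split and print 5 sample instructions and outputs
dataset = load_dataset("tatsu-lab/alpaca", split="train")

for i, example in enumerate(dataset):
    if i >= 5:
        break
    print(f"Instruction: {example['instruction']}")
    print(f"Output: {example['output']}\n---")
```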

You should see output like this, showing 5 sample data points:

```
Instruction: Give three tips for staying healthy.
Output: 1.Eat a balanced diet and make sure to include plenty of fruits and vegetables.
2. Exercise regularly to keep your body active and strong.
3. Get enough sleep and maintain a consistent sleep schedule.
---
Instruction: What are the three primary colors?
Output: The three primary colors are red, blue, and yellow.
---
Instruction: Describe the structure of an atom.
Output: An atom is made up of a nucleus, which contains protons and neutrons, surrounded by electrons that travel in orbits around the nucleus. The protons and neutrons have a positive charge, while the electrons have a negative charge, resulting in an overall neutral atom. The number of each particle determines the atomic number and the type of atom.
---
Instruction: How can we reduce air pollution?
Output: There are a number of ways to reduce air pollution, such as shifting to renewable energy sources, encouraging the use of public transportation, prohibiting the burning of fossil fuels, implementing policies to reduce emissions from industrial sources, and implementing vehicle emissions standards. Additionally, individuals can do their part to reduce air pollution by reducing car use, avoiding burning materials such as wood, and changing to energy efficient appliances.
---
Instruction: Describe a time when you had to make a difficult decision.
Output: I had to make a difficult decision when I was working as a project manager at a construction company. I was in charge of a project that needed to be completed by a certain date in order to meet the client's expectations. However, due to unexpected delays, we were not able to meet the deadline and so I had to make a difficult decision. I decided to extend the deadline, but I had to stretch the team's resources even further and increase the budget. Although it was a risky decision, I ultimately decided to go ahead with it to ensure that the project was completed on time and that the client's expectations were met. The project was eventually successfully completed and this was seen as a testament to my leadership and decision-making abilities.
```

We're going to give one of the above instructions to the model and check whether it generates an accurate output. Here is a function that passes an instruction to the model and returns its response:

```python
# Function to send an instruction to the model and return its response
def ask(model, tokenizer, question, max_length=128):
    # Tokenize the question and move it to the same device as the model
    inputs = tokenizer.encode(question, return_tensors="pt").to(model.device)
    # Generate one completion; max_length counts prompt and generated tokens together
    outputs = model.generate(inputs, max_length=max_length, num_return_sequences=1)
    answer = tokenizer.decode(outputs[0], skip_special_tokens=True)
    return answer
```
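The `ask` function sends the raw question, while the model was fine-tuned on text wrapped in the `[Begin] ... [End] ... [Close]` template from `format_interaction`. Prompting in the same shape the model saw during training may give cleaner answers, and it also makes it easy to cut the echoed prompt and any trailing marker out of the decoded text. The helpers below are a hypothetical sketch of that idea, written as pure string handling so they can be checked without a GPU; whether they improve your outputs is something to verify on your own runs.

```python
def build_prompt(instruction):
    """Reproduce the training template up to the point where the answer should start."""
    return f"[Begin] {instruction} [End]"


def extract_answer(decoded_text, prompt):
    """Drop the echoed prompt (if decoding reproduced it exactly) and anything after [Close]."""
    answer = decoded_text[len(prompt):] if decoded_text.startswith(prompt) else decoded_text
    return answer.split("[Close]", 1)[0].strip()


if __name__ == "__main__":
    prompt = build_prompt("What are the three primary colors?")
    # Stand-in for decoded model output; with a real model you would use ask(model, tokenizer, prompt)
    decoded = prompt + " The three primary colors are red, blue, and yellow. [Close] [Begin] ..."
    print(extract_answer(decoded, prompt))
```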

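Finally, pass a question into this function as shown below. Here, `model` and `tokenizer` refer to your fine-tuned model and its tokenizer, loaded from your project folder (for example, with `AutoModelForCausalLM.from_pretrained` and `AutoTokenizer.from_pretrained`):

```python
question = "Describe a time when you had to make a difficult decision."
answer = ask(model, tokenizer, question)
print(answer)
```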

Your model should generate a relevant response to the instruction. Don't expect it to reproduce the training output word for word; in my case, the response looked like this:

```
Describe a time when you had to make a difficult decision.
What did you do? How did it turn out?
[/INST] I remember a time when I had to make a difficult decision about my career. I had been working in the same job for several years and had grown tired of it. I knew that I needed to make a change, but I was unsure of what to do. I weighed my options carefully and eventually decided to take a leap of faith and start my own business. It was a risky move, but it paid off in the end. I am now the owner of a successful business and
```

Please note that the response may appear incomplete or cut off because of the token limit we specified. Feel free to increase the `max_length` value to allow for a longer response.
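For decoder-only models like Mistral, `max_length` in `generate` counts the prompt tokens as well as the newly generated ones, so a longer question leaves less room for the answer. The arithmetic is sketched below with an illustrative 20-token prompt; the helper names are hypothetical. `generate` also accepts `max_new_tokens`, which caps only the generated part and is often the easier setting to reason about.

```python
def new_token_budget(prompt_tokens, max_length):
    """Tokens left for the answer when generate() is capped by max_length (prompt + new tokens)."""
    return max(max_length - prompt_tokens, 0)


def max_length_for(prompt_tokens, desired_new_tokens):
    """The max_length needed so that desired_new_tokens can follow the prompt."""
    return prompt_tokens + desired_new_tokens


if __name__ == "__main__":
    prompt_tokens = 20  # illustrative; in practice use inputs.shape[-1] after tokenizing
    print("room for the answer at max_length=128:", new_token_budget(prompt_tokens, 128))
    print("max_length for a 300-token answer:", max_length_for(prompt_tokens, 300))
```

Generation can still stop earlier than the budget if the model emits its end-of-sequence token.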

If you've come this far, congratulations!

You have successfully fine-tuned a state-of-the-art language model, leveraging the power of Mistral 7B v0.2 alongside Hugging Face's tooling.

But the journey doesn't end here.

As a next step, I recommend experimenting with different datasets or tweaking the training parameters to optimize model performance. Fine-tuning on larger and more varied data can make models more useful, so try experimenting with bigger datasets or different formats, such as PDFs and text files.

These skills become invaluable when working with real-world data in organizations, which is often messy and unstructured.

Natassha Selvaraj is a self-taught data scientist with a passion for writing. Natassha writes on everything data science-related, a true master of all data topics. You can connect with her on LinkedIn or check out her YouTube channel.