Microsoft launched Phi-3 Mini, a tiny language model that’s part of the company’s strategy to develop lightweight, function-specific AI models.

The progression of language models has seen ever larger parameter counts, training datasets, and context windows. Scaling up these models delivered more powerful capabilities, but at a cost.

The traditional approach to training an LLM is to have it consume massive amounts of data, which requires huge computing resources. Training an LLM like GPT-4, for example, is estimated to have taken around 3 months and to have cost over $21m.

GPT-4 is a great solution for tasks that require complex reasoning but overkill for simpler tasks like content creation or a sales chatbot. It’s like using a Swiss Army knife when all you need is a simple letter opener.

At only 3.8B parameters, Phi-3 Mini is tiny. Even so, Microsoft says it is an ideal lightweight, low-cost solution for tasks like summarizing a document, extracting insights from reports, and writing product descriptions or social media posts.

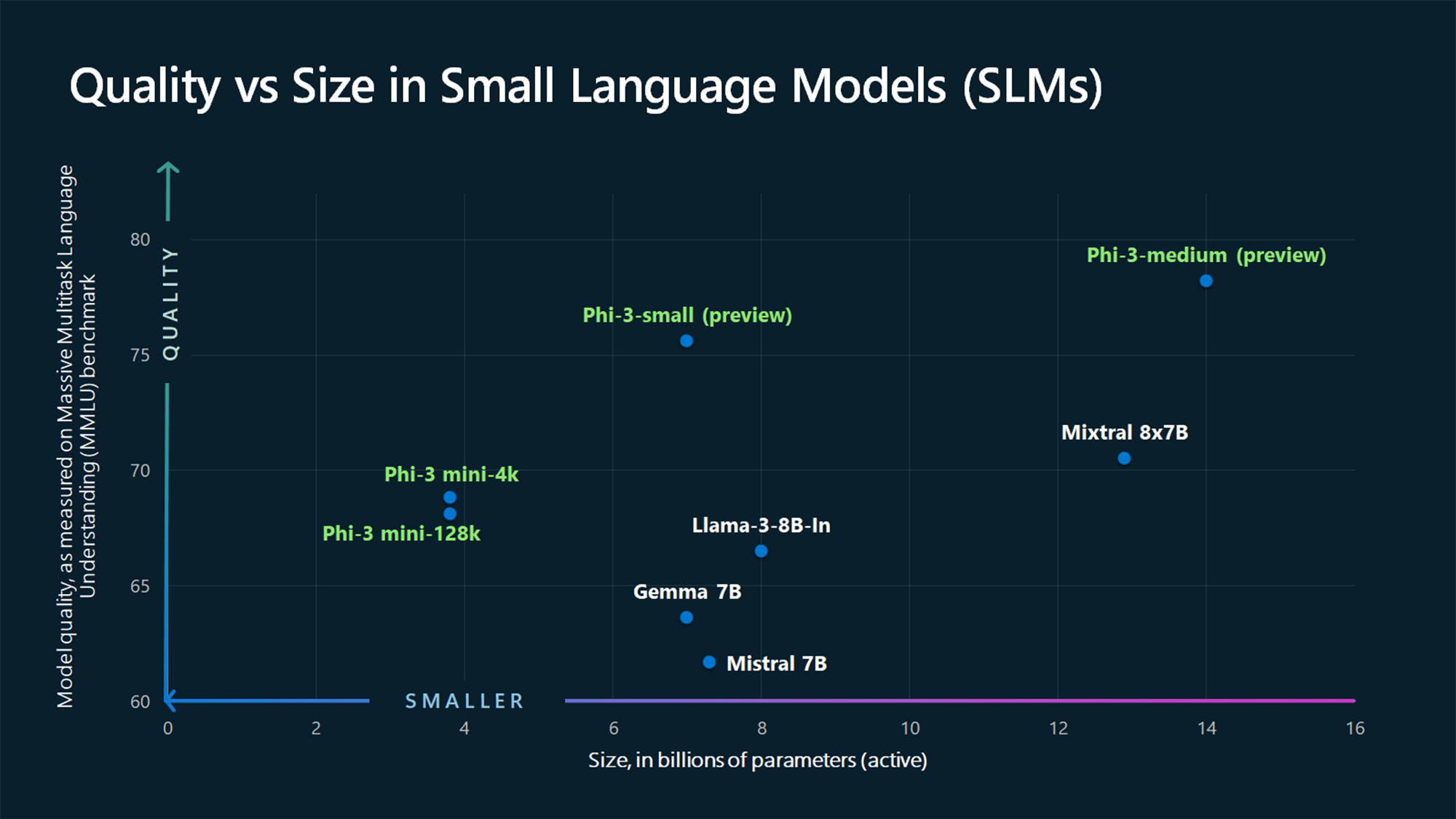

The MMLU benchmark figures show Phi-3 Mini and the yet-to-be-released larger Phi models outperforming models like Mistral 7B and Gemma 7B.

Microsoft says Phi-3-small (7B parameters) and Phi-3-medium (14B parameters) will be available in the Azure AI Model Catalog “shortly”.

Larger models like GPT-4 are still the gold standard, and we can probably expect that GPT-5 will be even bigger.

Small language models (SLMs) like Phi-3 Mini offer some important benefits that larger models don’t. SLMs are cheaper to fine-tune, require less compute, and can run on-device even in situations where no internet access is available.

Deploying an SLM at the edge results in lower latency and maximum privacy because there’s no need to send data back and forth to the cloud.
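To give a rough sense of why a 3.8B-parameter model is a candidate for on-device use, the sketch below estimates the memory needed just to store its weights at several numeric precisions. It is a back-of-the-envelope calculation, not a measurement: the function name is made up for illustration, and the estimate assumes weights only, ignoring activations, the KV cache, and any quantization overhead.

```python
# Back-of-the-envelope estimate of the memory needed to hold model weights.
# Assumption: weights only; activations, KV cache, and quantization
# overhead are ignored, so real usage will be higher.

def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Return weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

PHI3_MINI_PARAMS = 3.8e9  # parameter count quoted in the article

for label, bits in [("fp32", 32), ("fp16", 16), ("int8", 8), ("int4", 4)]:
    print(f"{label}: {weight_memory_gb(PHI3_MINI_PARAMS, bits):.1f} GB")
```

Under these assumptions the weights need about 7.6 GB at 16 bits per parameter and about 1.9 GB at 4 bits, which is small enough to plausibly fit in the memory of a recent laptop or phone.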

Here’s Sebastien Bubeck, VP of GenAI research at Microsoft AI, with a demo of Phi-3 Mini. It’s super fast and impressive for such a small model.

phi-3 is right here, and it’s … good :-).

I made a quick short demo to give you a feel of what phi-3-mini (3.8B) can do. Stay tuned for the open weights release and more announcements tomorrow morning!

(And ofc this wouldn’t be complete without the usual table of benchmarks!) pic.twitter.com/AWA7Km59rp

— Sebastien Bubeck (@SebastienBubeck) April 23, 2024

Curated synthetic data

Phi-3 Mini is the result of discarding the idea that huge amounts of data are the only way to train a model.

Sebastien Bubeck, Microsoft vice president of generative AI research, asked, “Instead of training on just raw web data, why don’t you look for data which is of extremely high quality?”

Microsoft Research machine learning expert Ronen Eldan was reading bedtime stories to his daughter when he wondered if a language model could learn using only words a 4-year-old could understand.

This led to an experiment where they created a dataset starting with 3,000 words. Using only this limited vocabulary, they prompted an LLM to create millions of short children’s stories, which were compiled into a dataset called TinyStories.
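The idea of generating stories from a restricted vocabulary can be sketched in a few lines. The example below is only an illustration, not the researchers’ actual pipeline: the tiny word list, the prompt wording, and the helper names `build_prompt` and `out_of_vocab` are all hypothetical, and no real LLM is called. It shows two pieces such a pipeline would likely need: a prompt that seeds each story with a few randomly chosen words, and a check that a generated story stays inside the allowed vocabulary.

```python
import random
import re

# Hypothetical stand-in for the restricted word list; the article
# describes a vocabulary of about 3,000 words.
VOCAB = {"a", "the", "cat", "dog", "ball", "happy", "sad", "big",
         "ran", "saw", "played", "and", "was", "with", "it"}

def build_prompt(vocab, rng, n_required=3):
    """Ask for a short story that must include a few randomly chosen words."""
    required = rng.sample(sorted(vocab), n_required)
    return ("Write a short story for a young child using only very simple "
            f"words. The story must include the words: {', '.join(required)}.")

def out_of_vocab(story, vocab):
    """Return the set of words in the story that are not in the vocabulary."""
    words = re.findall(r"[a-z']+", story.lower())
    return {w for w in words if w not in vocab}

rng = random.Random(0)  # seeded so the sampled words are reproducible
print(build_prompt(VOCAB, rng))

# A hand-written story standing in for model output.
story = "The happy dog saw a ball and ran with it."
print(out_of_vocab(story, VOCAB))
```

Varying the required words per prompt is one plausible way to get diverse stories from a small vocabulary, and the out-of-vocabulary check gives a simple filter for discarding generations that drift beyond it.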

The researchers then used TinyStories to train an extremely small 10M parameter model, which was subsequently able to generate “fluent narratives with perfect grammar.”

They continued to iterate and scale this synthetic data generation approach to create more advanced but carefully curated and filtered synthetic datasets that were eventually used to train Phi-3 Mini.

The result is a tiny model that will be more affordable to run while offering performance comparable to GPT-3.5.

Smaller but more capable models will see companies move away from simply defaulting to large LLMs like GPT-4. We could also soon see solutions where an LLM handles the heavy lifting but delegates simpler tasks to lightweight models.
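One way to picture that kind of delegation is a small router that sends simple, short requests to a lightweight model and everything else to a large one. The sketch below is purely illustrative: the task categories, the length threshold, and the model labels are hypothetical, and a fixed rule stands in for whatever decision logic a real system would use.

```python
from dataclasses import dataclass

# Hypothetical task categories, loosely based on the simple tasks
# the article lists (summaries, extraction, product copy, social posts).
SIMPLE_TASKS = {"summarize", "extract", "product_description", "social_post"}

@dataclass
class Request:
    task: str
    text: str

def pick_model(req: Request, max_simple_chars: int = 8000) -> str:
    """Send short, simple tasks to the small model; everything else to the large one."""
    if req.task in SIMPLE_TASKS and len(req.text) <= max_simple_chars:
        return "small-model"
    return "large-model"

print(pick_model(Request("summarize", "Quarterly report text ...")))
print(pick_model(Request("multi_step_reasoning", "Plan a migration ...")))
```

Here the summarization request goes to the small model and the reasoning request to the large one. In practice the routing decision could itself be made by a model rather than a lookup table.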