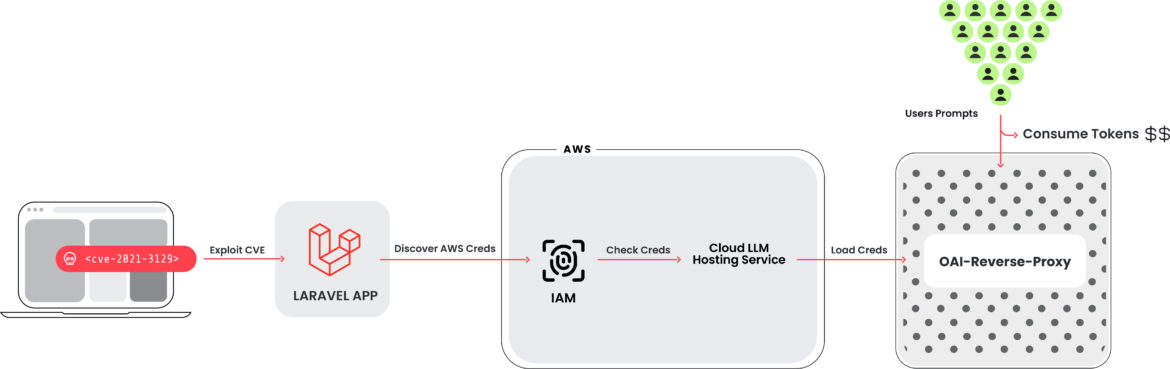

The Sysdig Threat Research Team (TRT) recently observed a new attack that leveraged stolen cloud credentials to target ten cloud-hosted large language model (LLM) services, an attack known as LLMjacking. The credentials were obtained from a popular target, a system running a vulnerable version of Laravel (CVE-2021-3129). Attacks against LLM-based Artificial Intelligence (AI) systems have been discussed often, but mostly around prompt abuse and altering training data. In this case, attackers intend to sell LLM access to other cybercriminals while the cloud account owner pays the bill.

Once initial access was obtained, they exfiltrated cloud credentials and gained access to the cloud environment, where they attempted to access local LLM models hosted by cloud providers: in this instance, a local Claude (v2/v3) LLM model from Anthropic was targeted. If undiscovered, this type of attack could result in over $46,000 of LLM consumption costs per day for the victim.

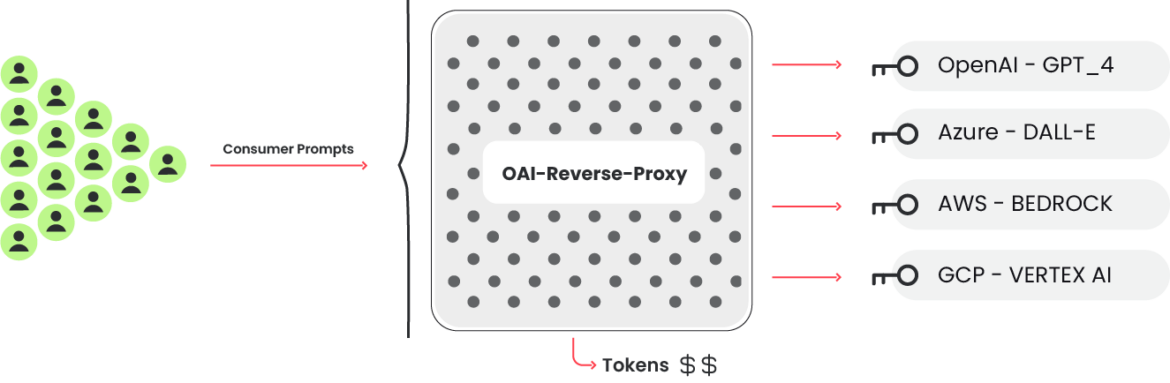

Sysdig researchers discovered evidence of a reverse proxy for LLMs being used to provide access to the compromised accounts, suggesting a financial motivation. However, another possible motivation is to extract LLM training data.

Breadth of Targets

We were able to discover the tools that were generating the requests used to invoke the models during the attack. This revealed a broader script that was able to check credentials for ten different AI services in order to determine which were useful for the attackers’ purposes. These services include:

- AI21 Labs, Anthropic, AWS Bedrock, Azure, ElevenLabs, MakerSuite, Mistral, OpenAI, OpenRouter, and GCP Vertex AI

The attackers are looking to gain access to a large number of LLM models across different services. No legitimate LLM queries were actually run during the verification phase. Instead, just enough was done to figure out what the credentials were capable of and what quotas applied. In addition, logging settings are queried where possible. This is done to avoid detection when using the compromised credentials to run their prompts.

Background

Hosted LLM Models

All major cloud providers, including Azure Machine Learning, GCP’s Vertex AI, and AWS Bedrock, now host large language model (LLM) services. These platforms provide developers with easy access to various popular models used in LLM-based AI. As illustrated in the screenshot below, the user interface is designed for simplicity, enabling developers to start building applications quickly.

These models, however, are not enabled by default. Instead, a request needs to be submitted to the cloud vendor in order to run them. For some models, approval is automatic; for others, like third-party models, a small form must be filled out. Once a request is made, the cloud vendor usually enables access pretty quickly. The requirement to make a request is often more of a speed bump for attackers than a blocker, and shouldn’t be considered a security mechanism.

Cloud vendors have simplified the process of interacting with hosted cloud-based language models by using straightforward CLI commands. Once the necessary configurations and permissions are in place, you can easily engage with the model using a command similar to this:

    aws bedrock-runtime invoke-model --model-id anthropic.claude-v2 --body '{"prompt": "\n\nHuman: story of two dogs\n\nAssistant:", "max_tokens_to_sample" : 300}' --cli-binary-format raw-in-base64-out invoke-model-output.txt

LLM Reverse Proxy

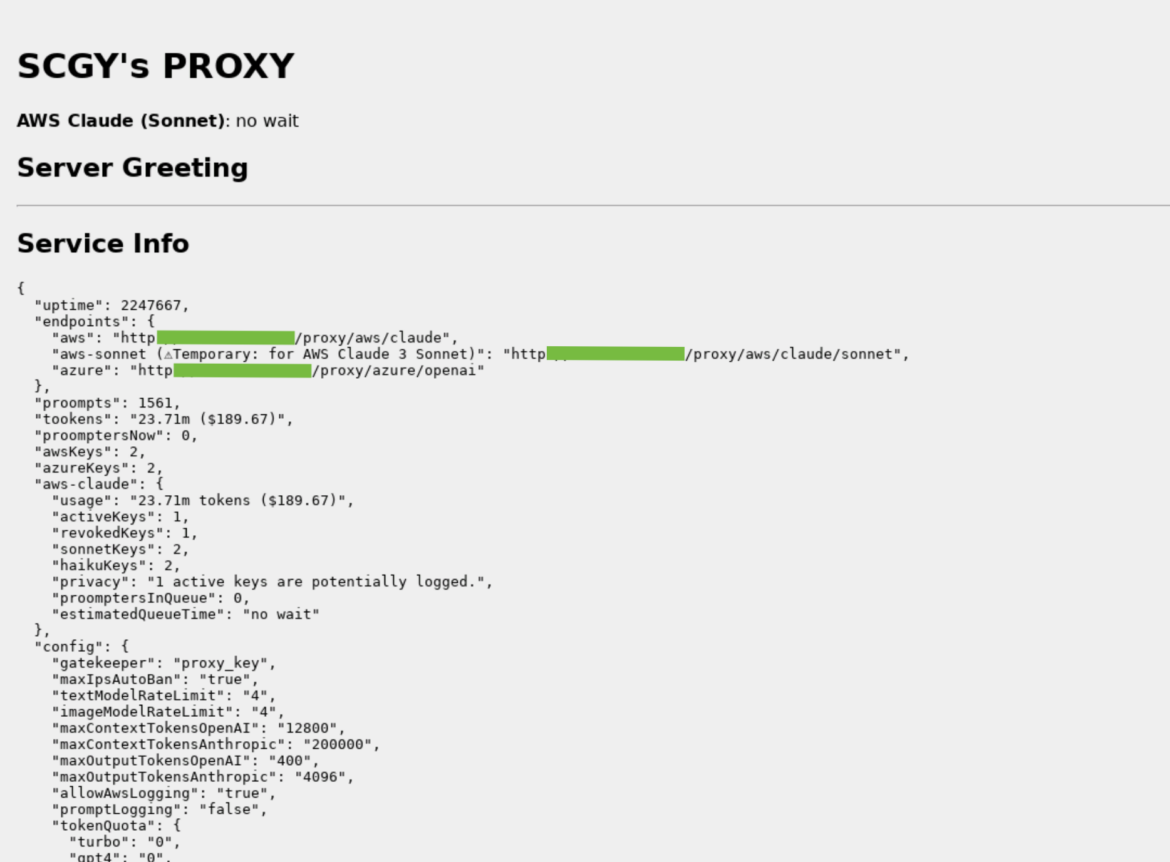

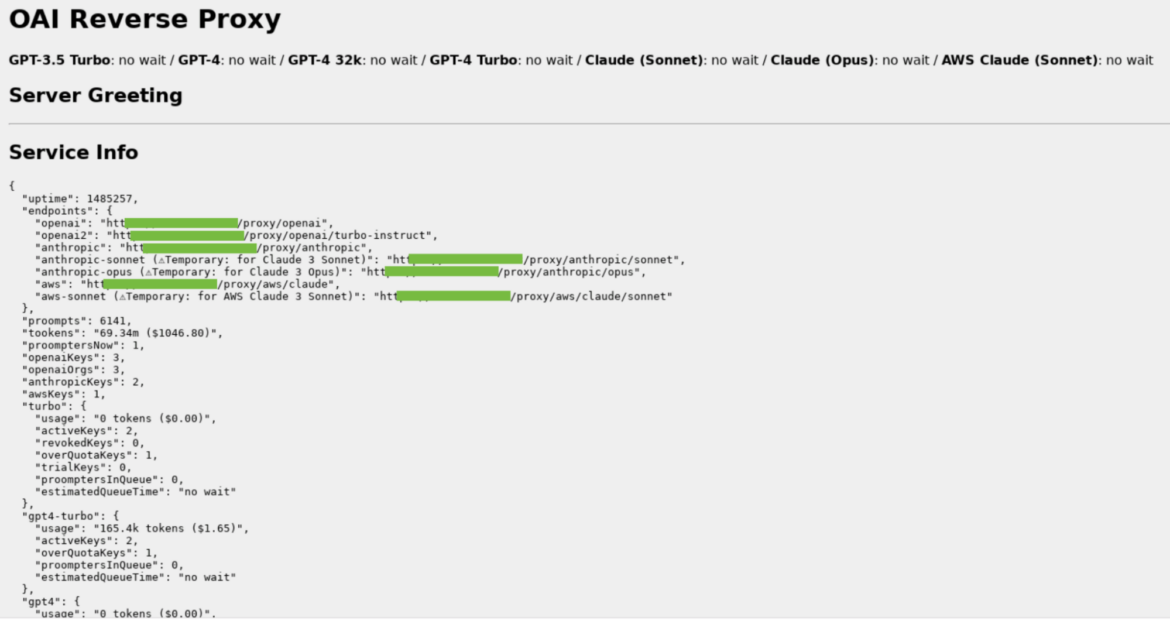

The key checking code that verifies if credentials are able to use targeted LLMs also makes reference to another project: OAI Reverse Proxy. This open source project acts as a reverse proxy for LLM services. Using software such as this would allow an attacker to centrally manage access to multiple LLM accounts while not exposing the underlying credentials, or in this case, the underlying pool of compromised credentials. During the attack using the compromised cloud credentials, a user-agent that matches OAI Reverse Proxy was seen attempting to use LLM models.

The image above is an example of an OAI Reverse Proxy we found running on the Internet. There is no evidence that this instance is tied to this attack in any way, but it does show the kind of information it collects and displays. Of special note are the token counts (“tookens”), costs, and keys that it is potentially logging.

This example shows an OAI reverse proxy instance, which is set up to use multiple types of LLMs. There is no evidence that this instance is involved with the attack.

If the attackers were gathering an inventory of useful credentials and wanted to sell access to the available LLM models, a reverse proxy like this could allow them to monetize their efforts.

Technical Analysis

In this technical breakdown, we explore how the attackers navigated a cloud environment to carry out their intrusion. By employing seemingly legitimate API requests within the cloud environment, they tested the boundaries of their access without immediately triggering alarms. The example below demonstrates a strategic use of the InvokeModel API call logged by CloudTrail. Although the attackers issued an otherwise valid request, they intentionally set the max_tokens_to_sample parameter to -1. This unusual value, which is expected to trigger an error, served a dual purpose. It confirmed not only that the credentials had access to the LLMs but also that these services were active, as indicated by the resulting ValidationException. A different outcome, such as an AccessDenied error, would have suggested restricted access. This subtle probing reveals a calculated approach to uncover what actions their stolen credentials permitted within the cloud account.

InvokeModel

The InvokeModel call is logged by CloudTrail, and an example malicious event can be seen below. The attackers sent a legitimate request but specified “max_tokens_to_sample” to be -1. This is an invalid value, which causes the “ValidationException” error, but it is useful information for the attacker to have because it tells them the credentials have access to the LLMs and that the models have been enabled. Otherwise, they would have received an “AccessDenied” error.

    {
      "eventVersion": "1.09",
      "userIdentity": {
        "type": "IAMUser",
        "principalId": "[REDACTED]",
        "arn": "[REDACTED]",
        "accountId": "[REDACTED]",
        "accessKeyId": "[REDACTED]",
        "userName": "[REDACTED]"
      },
      "eventTime": "[REDACTED]",
      "eventSource": "bedrock.amazonaws.com",
      "eventName": "InvokeModel",
      "awsRegion": "us-east-1",
      "sourceIPAddress": "83.7.139.184",
      "userAgent": "Boto3/1.29.7 md/Botocore#1.32.7 ua/2.0 os/windows#10 md/arch#amd64 lang/python#3.12.1 md/pyimpl#CPython cfg/retry-mode#legacy Botocore/1.32.7",
      "errorCode": "ValidationException",
      "errorMessage": "max_tokens_to_sample: range: 1..1,000,000",
      "requestParameters": {
        "modelId": "anthropic.claude-v2"
      },
      "responseElements": null,
      "requestID": "d4dced7e-25c8-4e8e-a893-38c61e888d91",
      "eventID": "419e15ca-2097-4190-a233-678415ed9a4f",
      "readOnly": true,
      "eventType": "AwsApiCall",
      "managementEvent": true,
      "recipientAccountId": "[REDACTED]",
      "eventCategory": "Management",
      "tlsDetails": {
        "tlsVersion": "TLSv1.3",
        "cipherSuite": "TLS_AES_128_GCM_SHA256",
        "clientProvidedHostHeader": "bedrock-runtime.us-east-1.amazonaws.com"
      }
    }

Example CloudTrail log
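The probing logic described above can be read straight off a CloudTrail record. The following is a minimal sketch, not the attackers' tooling: the function name and the returned labels are hypothetical, and only the `eventSource`, `eventName`, and `errorCode` fields shown in the example log are used.

```python
def interpret_probe_result(event: dict) -> str:
    """Classify a CloudTrail record using the error codes discussed in the text.

    The labels returned here are illustrative names, not AWS terminology.
    """
    if event.get("eventSource") != "bedrock.amazonaws.com":
        return "not-bedrock"
    if event.get("eventName") != "InvokeModel":
        return "other-bedrock-call"

    error = event.get("errorCode")
    if error is None:
        # No error recorded: the model was actually invoked.
        return "invocation-succeeded"
    if error == "ValidationException":
        # The request reached the model and failed only on its parameters,
        # so the credentials have access and the model is enabled.
        return "model-enabled-and-reachable"
    if error.startswith("AccessDenied"):
        return "access-restricted"
    return "other-error"


# Trimmed-down version of the example event above.
sample_event = {
    "eventSource": "bedrock.amazonaws.com",
    "eventName": "InvokeModel",
    "errorCode": "ValidationException",
    "errorMessage": "max_tokens_to_sample: range: 1..1,000,000",
}
print(interpret_probe_result(sample_event))
```

For a defender, the interesting case is the middle one: a ValidationException on InvokeModel from an identity that never normally calls Bedrock.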

AWS Bedrock is not supported in all regions, so the attackers called “InvokeModel” only in the supported regions. Currently, Bedrock is supported in us-east-1, us-west-2, ap-southeast-1, ap-northeast-1, eu-central-1, eu-west-3, and us-gov-west-1. Different models are available depending on the region, and AWS documents which models each region supports.

GetModelInvocationLoggingConfiguration

Interestingly, the attackers showed interest in how the service was configured. This can be done by calling “GetModelInvocationLoggingConfiguration,” which returns the S3 and CloudWatch logging configuration if enabled. In our setup, we used both S3 and CloudWatch to gather as much data about the attack as possible.

    {
      "loggingConfig": {
        "cloudWatchConfig": {
          "logGroupName": "[REDACTED]",
          "roleArn": "[REDACTED]",
          "largeDataDeliveryS3Config": {
            "bucketName": "[REDACTED]",
            "keyPrefix": "[REDACTED]"
          }
        },
        "s3Config": {
          "bucketName": "[REDACTED]",
          "keyPrefix": ""
        },
        "textDataDeliveryEnabled": true,
        "imageDataDeliveryEnabled": true,
        "embeddingDataDeliveryEnabled": true
      }
    }

Example GetModelInvocationLoggingConfiguration response

Information about the prompts being run and their results is not stored in CloudTrail. Instead, additional configuration needs to be done to send that information to CloudWatch and S3. The attackers perform this check to hide the details of their activities from any detailed observation. OAI Reverse Proxy states it will not use any AWS key that has logging enabled for the sake of “privacy.” This makes it impossible to inspect the prompts and responses if they are using the AWS Bedrock vector.
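The same response can be used defensively to confirm that prompt logging is actually switched on. The sketch below is an illustration only: the helper names are hypothetical, it works on an already-fetched response dictionary shaped like the example above, and it assumes a missing or empty `loggingConfig` means logging is not configured.

```python
def summarize_invocation_logging(response: dict) -> dict:
    """Summarize which logging destinations a response like the one above enables."""
    config = response.get("loggingConfig") or {}
    cloudwatch = config.get("cloudWatchConfig") or {}
    s3 = config.get("s3Config") or {}
    return {
        "cloudwatch_enabled": bool(cloudwatch.get("logGroupName")),
        "s3_enabled": bool(s3.get("bucketName")),
        "prompt_text_logged": bool(config.get("textDataDeliveryEnabled")),
    }


def prompts_are_recorded(summary: dict) -> bool:
    """True when prompt text is delivered to at least one destination."""
    has_destination = summary["cloudwatch_enabled"] or summary["s3_enabled"]
    return has_destination and summary["prompt_text_logged"]


# Shape taken from the example response; values are placeholders.
example_response = {
    "loggingConfig": {
        "cloudWatchConfig": {"logGroupName": "[REDACTED]", "roleArn": "[REDACTED]"},
        "s3Config": {"bucketName": "[REDACTED]", "keyPrefix": ""},
        "textDataDeliveryEnabled": True,
        "imageDataDeliveryEnabled": True,
        "embeddingDataDeliveryEnabled": True,
    }
}
summary = summarize_invocation_logging(example_response)
print(summary, prompts_are_recorded(summary))
```

An account where `prompts_are_recorded` is false is exactly the kind the text says the attackers prefer, since their prompts and responses would leave no trace beyond CloudTrail.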

Impact

In an LLMjacking attack, the damage comes in the form of increased costs to the victim. It shouldn’t be surprising to learn that using an LLM isn’t cheap and that the cost can add up very quickly. Considering the worst-case scenario where an attacker abuses Anthropic Claude 2.x and reaches the quota limit in multiple regions, the cost to the victim can be over $46,000 per day.

According to the pricing and the initial quota limit for Claude 2:

- 1000 input tokens cost $0.008, and 1000 output tokens cost $0.024.

- A maximum of 500,000 input and output tokens can be processed per minute according to AWS Bedrock. We can consider the average cost between input and output tokens, which is $0.016 per 1000 tokens.

This leads to the total cost: (500K tokens / 1000 * $0.016) * 60 minutes * 24 hours * 4 regions = $46,080 / day
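The arithmetic above can be reproduced in a few lines. This is only a sketch of the estimate as stated in the text: the prices, the per-minute quota, and the four regions are the article's figures, while the function and constant names are hypothetical.

```python
from decimal import Decimal

# Figures quoted in the text for Claude 2 on AWS Bedrock.
INPUT_PRICE_PER_1K = Decimal("0.008")
OUTPUT_PRICE_PER_1K = Decimal("0.024")
TOKENS_PER_MINUTE = 500_000
REGIONS = 4


def worst_case_daily_cost(tokens_per_minute: int = TOKENS_PER_MINUTE,
                          regions: int = REGIONS) -> Decimal:
    """Daily cost in USD if the quota is saturated around the clock."""
    # The text averages input and output prices: $0.016 per 1000 tokens.
    avg_price_per_1k = (INPUT_PRICE_PER_1K + OUTPUT_PRICE_PER_1K) / 2
    cost_per_minute = Decimal(tokens_per_minute) / 1000 * avg_price_per_1k
    return cost_per_minute * 60 * 24 * regions


print(worst_case_daily_cost())
```

Using `Decimal` instead of floats keeps the dollar amounts exact, and changing `regions` or the quota shows how quickly the figure scales.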

By maximizing the quota limits, attackers can also block the compromised organization from using models legitimately, disrupting business operations.

Detection

The ability to detect and respond swiftly to potential threats can make all the difference in maintaining a robust defense. Drawing insights from recent feedback and industry best practices, we’ve distilled key strategies to elevate your detection capabilities:

- Cloud Logs Detections: Tools like Falco, Sysdig Secure, and CloudWatch Alerts are indispensable allies. Organizations can proactively identify suspicious behavior by monitoring runtime activity and analyzing cloud logs, including reconnaissance tactics such as those employed within AWS Bedrock.

- Detailed Logging: Comprehensive logging, including verbose logging, offers invaluable visibility into the inner workings of your cloud environment. Verbose information about model invocations and other critical activities gives organizations a nuanced understanding of activity in their cloud environments.

Cloud Log Detections

Monitoring cloud logs can reveal suspicious or unauthorized activity. Using Falco or Sysdig Secure, the reconnaissance methods used during the attack can be detected, and a response can be started. For Sysdig Secure customers, this rule can be found in the Sysdig AWS Notable Events policy.

Falco rule:

    - rule: Bedrock Model Recon Activity
      desc: Detect reconnaissance attempts to check if Amazon Bedrock is enabled, based on the error code. Attackers can leverage this to discover the status of Bedrock, and then abuse it if enabled.
      condition: jevt.value[/eventSource]="bedrock.amazonaws.com" and jevt.value[/eventName]="InvokeModel" and jevt.value[/errorCode]="ValidationException"
      output: A reconnaissance attempt on Amazon Bedrock has been made (requesting user=%aws.user, requesting IP=%aws.sourceIP, AWS region=%aws.region, arn=%jevt.value[/userIdentity/arn], userAgent=%jevt.value[/userAgent], modelId=%jevt.value[/requestParameters/modelId])
      priority: WARNING

In addition, CloudWatch alerts can be configured to handle suspicious behaviors. Several runtime metrics for Bedrock can be monitored to trigger alerts.
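The condition in the Falco rule can also be applied to CloudTrail records that have already been exported, for example during an after-the-fact investigation. The following is a rough sketch under that assumption: `find_recon_events` is a hypothetical helper, the input is a list of already-parsed CloudTrail records, and the second sample IP comes from a documentation-reserved range.

```python
from collections import defaultdict


def find_recon_events(records: list) -> dict:
    """Group Bedrock probe events by source IP.

    Applies the same three-field match as the Falco rule and returns
    {source_ip: [(region, model_id), ...]}.
    """
    hits = defaultdict(list)
    for record in records:
        if (
            record.get("eventSource") == "bedrock.amazonaws.com"
            and record.get("eventName") == "InvokeModel"
            and record.get("errorCode") == "ValidationException"
        ):
            model_id = (record.get("requestParameters") or {}).get("modelId")
            source_ip = record.get("sourceIPAddress", "unknown")
            hits[source_ip].append((record.get("awsRegion"), model_id))
    return dict(hits)


# Minimal hand-written records for illustration only.
records = [
    {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
     "errorCode": "ValidationException", "awsRegion": "us-east-1",
     "sourceIPAddress": "83.7.139.184",
     "requestParameters": {"modelId": "anthropic.claude-v2"}},
    {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
     "errorCode": "ValidationException", "awsRegion": "us-west-2",
     "sourceIPAddress": "83.7.139.184",
     "requestParameters": {"modelId": "anthropic.claude-v2"}},
    {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
     "awsRegion": "us-east-1", "sourceIPAddress": "198.51.100.7",
     "requestParameters": {"modelId": "anthropic.claude-v2"}},
]
for ip, probes in find_recon_events(records).items():
    print(ip, probes)
```

A single source IP probing the same model in several regions within a short window matches the pattern described in the InvokeModel section.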

Detailed Logging

Monitoring your organization’s use of large language model (LLM) services is crucial, and various cloud vendors provide facilities to streamline this process. This typically involves setting up mechanisms to log and store data about model invocations.

For AWS Bedrock specifically, users can leverage CloudWatch and S3 for enhanced monitoring capabilities. CloudWatch can be set up by creating a log group and assigning a role with the necessary permissions. Similarly, to log to S3, a designated bucket is required as a destination. It is important to note that the CloudTrail log of the InvokeModel command does not capture details about the prompt input and output. However, Bedrock settings allow for easy activation of model invocation logging. Additionally, for model input or output data larger than 100 KB or in binary format, users must explicitly specify an S3 destination to handle large data delivery. This includes input and output images, which are stored in the logs as Base64 strings. Such comprehensive logging mechanisms ensure that all aspects of model usage are monitored and archived for further analysis and compliance.

The logs contain additional information about the tokens processed, as shown in the following example:

    {
      "schemaType": "ModelInvocationLog",
      "schemaVersion": "1.0",
      "timestamp": "[REDACTED]",
      "accountId": "[REDACTED]",
      "identity": {
        "arn": "[REDACTED]"
      },
      "region": "us-east-1",
      "requestId": "bea9d003-f7df-4558-8823-367349de75f2",
      "operation": "InvokeModel",
      "modelId": "anthropic.claude-v2",
      "input": {
        "inputContentType": "application/json",
        "inputBodyJson": {
          "prompt": "\n\nHuman: Write a story of a young wizard\n\nAssistant:",
          "max_tokens_to_sample": 300
        },
        "inputTokenCount": 16
      },
      "output": {
        "outputContentType": "application/json",
        "outputBodyJson": {
          "completion": " Here is a story about a young wizard:\n\nMartin was an ordinary boy living in a small village. He helped his parents around their modest farm, tending to the animals and working in the fields. [...] Martin's favorite subject was transfiguration, the art of transforming objects from one thing to another. He mastered the subject quickly, amazing his professors by turning mice into goblets and stones into fluttering birds.\n\nMartin",
          "stop_reason": "max_tokens",
          "stop": null
        },
        "outputTokenCount": 300
      }
    }

Example S3 log
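Because each invocation log entry carries `inputTokenCount` and `outputTokenCount`, usage can be totaled per identity to spot an account that is suddenly consuming far more than usual. The sketch below assumes the entries have already been read from S3 or CloudWatch into dictionaries; the helper names are hypothetical, and the prices are the Claude 2 figures quoted in the Impact section.

```python
from collections import defaultdict
from decimal import Decimal

# Claude 2 prices per 1000 tokens, as quoted earlier in the article.
INPUT_PRICE_PER_1K = Decimal("0.008")
OUTPUT_PRICE_PER_1K = Decimal("0.024")


def usage_by_identity(log_entries: list) -> dict:
    """Sum input and output tokens per identity ARN."""
    totals = defaultdict(lambda: {"input_tokens": 0, "output_tokens": 0})
    for entry in log_entries:
        arn = (entry.get("identity") or {}).get("arn", "unknown")
        totals[arn]["input_tokens"] += (entry.get("input") or {}).get("inputTokenCount", 0)
        totals[arn]["output_tokens"] += (entry.get("output") or {}).get("outputTokenCount", 0)
    return dict(totals)


def estimated_cost(tokens: dict) -> Decimal:
    """Approximate USD cost for one identity's token totals."""
    return (
        Decimal(tokens["input_tokens"]) / 1000 * INPUT_PRICE_PER_1K
        + Decimal(tokens["output_tokens"]) / 1000 * OUTPUT_PRICE_PER_1K
    )


# One entry with the token counts from the example log above.
entries = [
    {"identity": {"arn": "[REDACTED]"},
     "input": {"inputTokenCount": 16},
     "output": {"outputTokenCount": 300}},
]
for arn, tokens in usage_by_identity(entries).items():
    print(arn, tokens, estimated_cost(tokens))
```

Comparing these per-identity totals against a normal baseline is one way to notice abuse before the bill arrives.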

Recommendations

This attack could have been prevented in a number of ways, including:

- Vulnerability management to prevent initial access.

- Secrets management to ensure credentials are not stored in the clear where they can be stolen.

- CSPM/CIEM to ensure the abused account had the least amount of permissions it needed.

As highlighted by recent research, cloud vendors offer a range of tools and best practices designed to mitigate the risks of cloud attacks. These tools help organizations build and maintain a secure cloud environment from the outset.

For instance, AWS provides several robust security measures. The AWS Security Reference Architecture outlines best practices for securely constructing your cloud environment. Additionally, AWS recommends using Service Control Policies (SCP) to centrally manage permissions, which helps minimize the risk associated with over-permissioned accounts that could potentially be abused. These guidelines and tools are part of AWS’s commitment to enhancing security and providing customers with the resources to protect their cloud infrastructure effectively. Other cloud vendors offer similar frameworks and tools, ensuring that users have access to essential security measures to safeguard their data and services regardless of the platform.
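As one illustration of the SCP approach, an organization that does not use Bedrock at all could deny the service outright. The sketch below is an assumption-laden example rather than a recommended policy: the function name and the `Sid` are hypothetical, and a real deployment would likely scope the statement to specific accounts, regions, or roles.

```python
import json


def build_bedrock_deny_scp() -> dict:
    """Build a Service Control Policy document that denies all Bedrock actions.

    Illustrative only: assumes no workload in the affected accounts needs Bedrock.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAllBedrock",  # hypothetical statement ID
                "Effect": "Deny",
                "Action": "bedrock:*",
                "Resource": "*",
            }
        ],
    }


print(json.dumps(build_bedrock_deny_scp(), indent=2))
```

With a policy like this attached, stolen credentials in the covered accounts could not invoke hosted models regardless of the permissions on the compromised identity.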

Conclusion

Stolen cloud and SaaS credentials continue to be a common attack vector. This trend will only increase in popularity as attackers learn all of the ways they can leverage their new access for financial gain. The use of LLM services can be expensive, depending on the model and the amount of tokens being fed to it. Normally, this would cause a developer to try to be efficient. Unfortunately, attackers do not have the same incentive. Detection and response are critical to deal with any issues quickly.

IoCs

IP Addresses

83.7.139.184

83.7.157.76

73.105.135.228

83.7.135.97