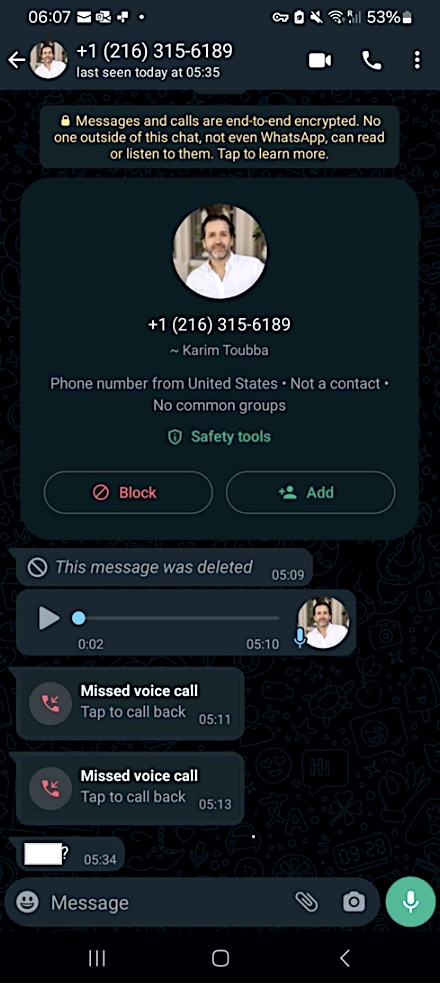

LastPass revealed this week that threat actors targeted one of its employees in a voice phishing attack, using deepfake audio to impersonate Karim Toubba, the company's Chief Executive Officer.

However, while 25% of people have been on the receiving end of an AI voice impersonation scam or know someone who has, according to a recent global study, the LastPass employee didn't fall for it because the attacker used WhatsApp, which is a very uncommon business channel.

“In our case, an employee received a series of calls, texts, and at least one voicemail featuring an audio deepfake from a threat actor impersonating our CEO via WhatsApp,” LastPass intelligence analyst Mike Kosak said.

“As the attempted communication was outside of normal business communication channels and due to the employee’s suspicion regarding the presence of many of the hallmarks of a social engineering attempt (such as forced urgency), our employee rightly ignored the messages and reported the incident to our internal security team so that we could take steps to both mitigate the threat and raise awareness of the tactic both internally and externally.”

Kosak added that the attack failed and had no impact on LastPass. However, the company still chose to share details of the incident to warn other companies that AI-generated deepfakes are already being used in executive impersonation fraud campaigns.

The deepfake audio used in this attack was likely generated with voice-cloning models trained on publicly available recordings of LastPass' CEO, possibly one published on YouTube.

Deepfake attacks on the rise

LastPass' warning follows a U.S. Department of Health and Human Services (HHS) alert issued last week about cybercriminals targeting IT help desks with social engineering tactics and AI voice cloning tools to deceive their targets.

Audio deepfakes also make it much harder to verify a caller's identity remotely, so attacks in which threat actors impersonate executives and company employees become very hard to detect.

While the HHS advice was aimed specifically at attacks targeting the IT help desks of healthcare organizations, the following recommendations apply just as well to CEO impersonation fraud attempts:

- Require callbacks to verify employees requesting password resets and new MFA devices.

- Monitor for suspicious ACH changes.

- Revalidate all users with access to payer websites.

- Consider requiring in-person requests for sensitive matters.

- Require supervisors to verify requests.

- Train help desk staff to identify and report social engineering techniques and to verify callers' identities.

In March 2021, the FBI also issued a Private Industry Notification (PIN) warning that deepfakes (including AI-generated or manipulated audio, text, images, or video) were becoming increasingly sophisticated and would likely be widely employed by hackers in “cyber and foreign influence operations.”

Additionally, Europol warned in April 2022 that deepfakes may soon become a tool that cybercriminal groups routinely use for CEO fraud, evidence tampering, and the creation of non-consensual pornography.