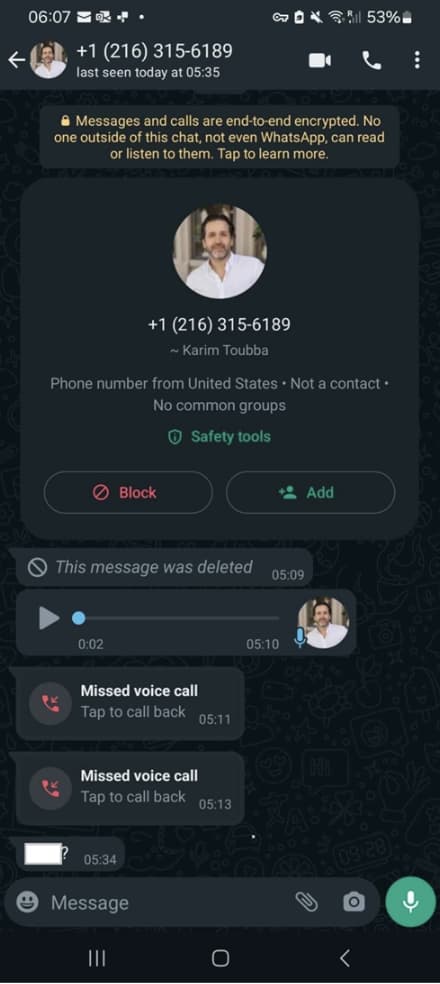

Password management giant LastPass narrowly averted a potential security breach after a company employee was targeted by a deepfake scam. The incident, detailed in a blog post by LastPass, involved an audio deepfake impersonating CEO Karim Toubba in an attempt to contact the employee via WhatsApp.

Deepfake technology, which can manipulate audio and video to create realistic forgeries, is increasingly being used by cybercriminals in elaborate social engineering schemes. In this instance, the scammer used a voice-altering program to impersonate Toubba’s voice, likely aiming to create a sense of urgency or trust with the employee.

However, not everyone is as fortunate as LastPass. In February 2024, an employee at the Hong Kong branch of a multinational company was tricked into paying out HK$200 million (approximately US$25.6 million) after scammers used deepfake technology to create an AI-generated likeness of the company’s CFO.

In another incident, reported in August 2022, scammers used an AI-generated deepfake hologram of Binance’s chief communications officer, Patrick Hillmann, to deceive users into participating in online meetings and to target the crypto projects of Binance clients.

As for LastPass, the company commended the employee’s vigilance in recognizing the red flags of the situation. The unusual use of WhatsApp, a platform not commonly used for official communication within the company, coupled with the impersonation attempt, prompted the employee to report the incident to LastPass security. The company confirmed that the attack did not impact its overall security posture.

Toby Lewis, Global Head of Threat Analysis at Darktrace, commented on the issue, highlighting the risks of generative AI: “The prevalence of AI today represents new and additional risks. But arguably, the more considerable risk is the use of generative AI to produce deepfake audio, imagery, and video, which can be released at scale to manipulate and influence the electorate’s thinking.”

“While the use of AI for deepfake generation is now very real, the risk of image and media manipulation is not new, with ‘photoshop’ existing as a verb since the 1990s,” Lewis explained. “The challenge now is that AI can be used to lower the skill barrier to entry and speed up production to a higher quality. Defence against AI deepfakes is largely about maintaining a cynical view of material you see, especially online, or spread via social media,” he advised.

Nonetheless, this attempted scam shows how sophisticated cybercriminals have become in their attacks. LastPass, for its part, emphasised the importance of employee awareness training in mitigating such attacks. Social engineering tactics often rely on creating a sense of urgency or panic, pressuring victims into making rushed decisions.

The incident also highlights the potential dangers deepfakes pose in the corporate space. As the technology continues to develop, creating ever more convincing fakes, companies will need to invest in robust security protocols and employee training to stay ahead of these sophisticated scams.

While deepfake scams targeting businesses are still relatively uncommon, the LastPass incident underscores the growing threat. The company’s decision to publicize the attempt serves as a valuable cautionary tale for other organizations, urging them to heighten awareness and implement preventative measures.
