Foundationally, the OWASP Top 10 for Large Language Model (LLM) Applications was designed to educate software developers, security architects, and other hands-on practitioners about how to harden LLM security and implement more secure AI workloads.

The framework specifies the potential security risks associated with deploying and managing LLM applications by explicitly naming the most critical vulnerabilities seen in LLMs so far and how to mitigate them.

Plenty of resources on the web document the need for, and the benefits of, an open source risk management project like the OWASP Top 10 for LLMs.

However, many practitioners struggle to discern how cross-functional teams can align to better manage the rollout of Generative AI (GenAI) technologies within their organizations. There’s also the requirement for comprehensive security controls to aid in the secure rollout of GenAI workloads.

And finally, there’s an educational need around how these projects can help security leadership, like the CISO, better understand the unique differences between the OWASP Top 10 for LLMs and the various industry threat mapping frameworks, such as MITRE ATT&CK and MITRE ATLAS.

Understanding the differences between AI, ML, & LLMs

Artificial Intelligence (AI) has undergone monumental growth over the past few decades. If we think as far back as 1951, a year after Isaac Asimov published his science fiction concept, “Three Laws of Robotics,” the first AI program was written by Christopher Strachey to play checkers (or draughts, as it’s known in the UK).

Where AI is a broad term that encompasses all fields of computer science that allow machines to accomplish tasks similar to human behavior, Machine Learning (ML) and GenAI are two clearly defined subcategories of AI.

ML was not replaced by GenAI, but rather is defined by its own specific use cases. ML algorithms tend to be trained on a set of data, learn from that data, and often end up being used for making predictions. These statistical models can be used to predict the weather or detect anomalous behavior. They are still a key part of our financial and banking systems and are used regularly in cybersecurity to detect unwanted behavior.

GenAI, on the other hand, is a type of ML that creates new data. GenAI often uses LLMs to synthesize existing data and use it to make something new. Examples include services like ChatGPT and Sysdig Sage™. As the AI ecosystem rapidly evolves, organizations are increasingly deploying GenAI solutions (such as Llama 2, Midjourney, and ElevenLabs) into their cloud-native and Kubernetes environments to leverage the benefits of high scalability and seamless orchestration in the cloud.

This shift is accelerating the need for robust cloud-native security frameworks capable of safeguarding AI workloads. In this context, the distinctions between AI, machine learning (ML), and LLMs are critical to understanding the security implications and the governance models required to manage them effectively.

OWASP Top 10 and Kubernetes

As businesses integrate tools like Llama into cloud-native environments, they often rely on platforms like Kubernetes to manage these AI workloads efficiently. This transition to cloud-native infrastructure introduces a new layer of complexity, as highlighted in the OWASP Top 10 for Kubernetes and the broader OWASP Top 10 for Cloud-Native systems guidance.

The flexibility and scalability offered by Kubernetes make it easier to deploy and scale GenAI models, but these models also introduce a whole new attack surface to your organization, and that’s where security leadership needs to heed the warning. A containerized AI model running on a cloud platform is subject to a very different set of security concerns than a traditional on-premises deployment, or even other cloud-native containerized environments. This underscores the need for comprehensive security tooling that provides proper visibility into the risks associated with this rapid AI adoption.

Who is responsible for trustworthy AI?

Newer GenAI benefits will continue to be presented in the years ahead, and for each of these proposed benefits there will be new security challenges to address. A trustworthy AI will need to be reliable, resilient, and responsible for securing internal data as well as sensitive customer data.

Right now, many organizations are waiting on government regulations, such as the EU AI Act, to be enforced before they start taking serious responsibility for trust in LLM systems. From a regulatory perspective, the EU AI Act is effectively the first comprehensive AI law, but it will only come into force in 2025, unless there are some unforeseen delays with its implementation. Since the EU’s General Data Protection Regulation (GDPR) was never devised with LLM usage in mind, its broad coverage only applies to AI systems in the form of generalized principles of data collection, data security, fairness and transparency, accuracy and reliability, and accountability.

While these GDPR principles help keep organizations somewhat accountable for proper GenAI usage, there is a clear and evolving race toward official AI governance, and we are all watching and waiting for answers. Ultimately, responsibility for trustworthy AI is shared among developers, security engineering teams, and leadership, who must proactively ensure that their AI systems are reliable, secure, and ethical, rather than waiting for government regulations like the EU AI Act to enforce compliance.

Incorporate LLM security & governance

Unlike the plans in the EU, AI regulations in the US are included within the broader, existing consumer privacy laws. So, while we’re waiting on formally defined governance standards for AI, what can we do in the meantime? The advice is simple: we should implement existing, established practices and controls. While GenAI adds a new dimension to cybersecurity, resilience, privacy, and meeting legal and regulatory requirements, the best practices that have been around for a long time are still the best way to identify issues, find vulnerabilities, fix them, and mitigate potential security issues.

AI asset inventory

It’s important to understand that an AI asset inventory should apply to both internally developed and external or third-party AI solutions. As such, there is a clear need to catalog existing AI services, tools, and owners by designating a tag in asset management for specific AI inventory. Sysdig’s approach also helps organizations seamlessly include AI components in the Software Bill of Materials (SBOM), allowing security teams to generate a comprehensive list of all the software components, dependencies, and metadata associated with their GenAI workloads. By cataloging AI data sources into arbitrary Sysdig Zones based on the sensitivity of the data (protected, confidential, public), security teams can better prioritize those AI workloads based on their risk severity level.
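The cataloging and prioritization idea above can be sketched in a few lines of Python. This is a minimal illustration and not Sysdig’s API: the `AIAsset` record, the `ai-inventory` tag, the example asset names, and the assumption that “protected” outranks “confidential” are all hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Sensitivity levels named in the text. The ordering is an assumption."""
    PUBLIC = 1
    CONFIDENTIAL = 2
    PROTECTED = 3


@dataclass(frozen=True)
class AIAsset:
    """Hypothetical inventory record for one AI service or tool."""
    name: str
    owner: str
    third_party: bool          # True for external or third-party solutions
    sensitivity: Sensitivity   # sensitivity of the data the asset touches
    tags: tuple = ("ai-inventory",)  # hypothetical asset-management tag


def prioritize(assets):
    """Return assets ordered from highest to lowest data sensitivity."""
    return sorted(assets, key=lambda a: (-a.sensitivity, a.name))


# Example data, invented for illustration only.
inventory = [
    AIAsset("support-chatbot", "cx-team", True, Sensitivity.CONFIDENTIAL),
    AIAsset("docs-summarizer", "platform", False, Sensitivity.PUBLIC),
    AIAsset("fraud-llm", "risk-eng", False, Sensitivity.PROTECTED),
]

for asset in prioritize(inventory):
    origin = "third-party" if asset.third_party else "internal"
    print(f"{asset.sensitivity.name:<12} {asset.name:<16} {origin:<12} owner={asset.owner}")
```

Keeping internal and third-party assets in the same list, with the same tag, is the point of the sketch: both kinds end up in one prioritized view.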

Posture management

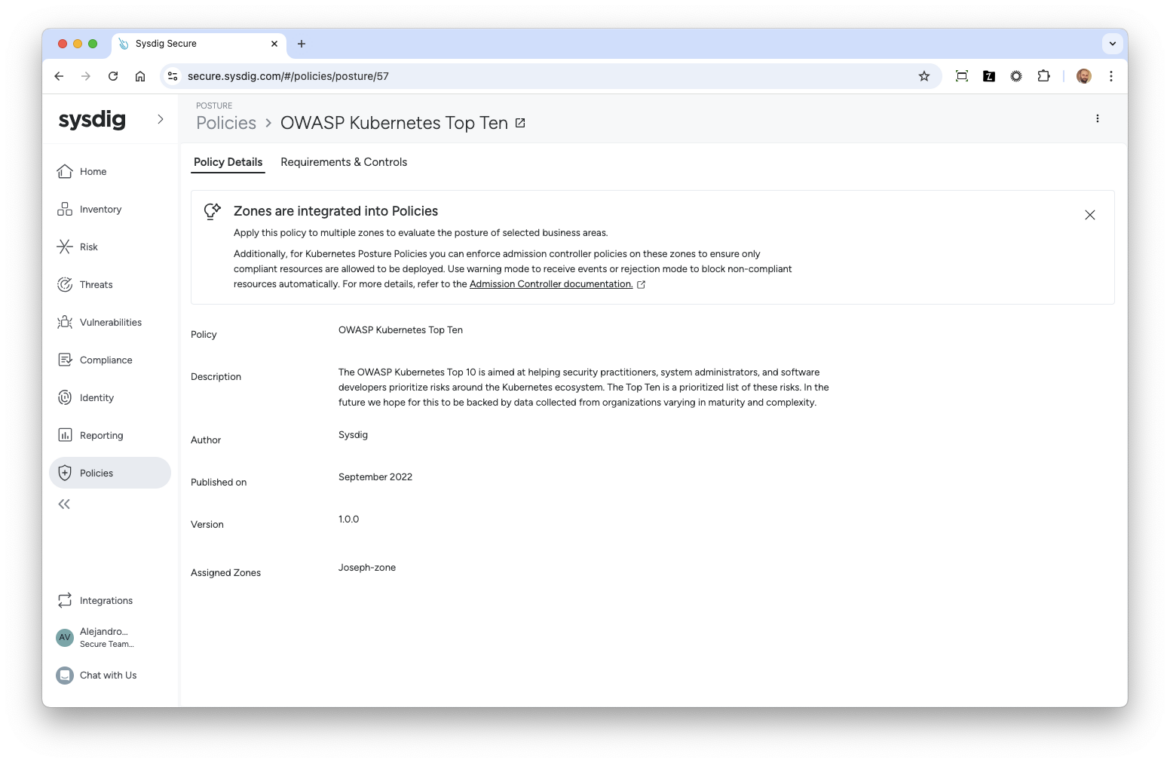

From a posture perspective, you should have a tool that appropriately reports on the findings of the OWASP Top 10. With Sysdig, these reports come pre-packaged so that there is no need for custom configuration by end users, speeding up reporting and ensuring more accurate context. Since we are referring to LLM-based workloads running in Kubernetes, it’s still as vital as ever to ensure you are adhering to the various security posture controls highlighted in the OWASP Top 10 for Kubernetes.

Additionally, coordinating and mapping a business’s LLM security strategy to MITRE ATLAS will allow that same organization to better determine where its LLM security is covered by current processes, such as API security standards, and where additional security holes may exist. MITRE ATLAS, which stands for “Adversarial Threat Landscape for Artificial-Intelligence Systems,” is a knowledge base powered by real-life examples of attacks on ML systems by known bad actors. Whereas the OWASP Top 10 for LLMs can provide guidance on where to harden your proactive LLM security strategy, MITRE ATLAS findings can be aligned with your threat detection rules in Falco or Sysdig to better understand the Tactics, Techniques, & Procedures (TTPs) based on the well-known MITRE ATT&CK architecture.
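As a rough sketch of the mapping exercise described above, the snippet below pairs a few OWASP Top 10 for LLM entries with MITRE ATLAS technique names and reports which ones have no matching detection coverage. The pairings are illustrative assumptions, not an official crosswalk, and the `COVERED_TECHNIQUES` set is hypothetical; entry and technique names should be verified against the current OWASP and ATLAS releases, since both are revised over time.

```python
# Illustrative mapping only: verify each pairing against the current
# OWASP Top 10 for LLM Applications and MITRE ATLAS releases.
OWASP_TO_ATLAS = {
    "LLM01: Prompt Injection": ["LLM Prompt Injection"],
    "LLM03: Training Data Poisoning": ["Poison Training Data"],
    "LLM05: Supply Chain Vulnerabilities": ["ML Supply Chain Compromise"],
    "LLM10: Model Theft": ["Exfiltration via ML Inference API"],
}

# Hypothetical: ATLAS techniques that existing detection rules already reference.
COVERED_TECHNIQUES = {"LLM Prompt Injection", "ML Supply Chain Compromise"}


def coverage_gaps(mapping, covered):
    """Return the OWASP entries whose mapped techniques lack detection coverage."""
    gaps = {}
    for risk, techniques in mapping.items():
        missing = [t for t in techniques if t not in covered]
        if missing:
            gaps[risk] = missing
    return gaps


for risk, missing in coverage_gaps(OWASP_TO_ATLAS, COVERED_TECHNIQUES).items():
    print(f"{risk}: no detection coverage for {', '.join(missing)}")
```

In practice the covered set would be derived from the technique tags on your own detection rules rather than hard-coded.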

Conclusion

Introducing LLM-based workloads into your cloud-native environment expands the existing attack surface for your business. Naturally, as highlighted in the official release of the OWASP Top 10 for LLM Applications, this presents new challenges that require specific tactics and defenses from frameworks such as MITRE ATLAS.

AI workloads running in Kubernetes also pose problems that are similar to known issues, for which there are already established cybersecurity reporting, procedures, and mitigation strategies that can be applied, such as the OWASP Top 10 for Kubernetes. Integrating the OWASP Top 10 for LLMs into your existing cloud security controls, processes, and procedures should allow your business to considerably reduce its exposure to evolving threats.

If you found this information helpful and want to learn more about GenAI security, check out our CTO, Loris Degioanni, speaking to The CyberWire’s Dave Bittner about all things Good vs. Evil in the world of Generative AI.