Google researchers developed a technique called Infini-attention, which allows LLMs to handle infinitely long text without increasing compute and memory requirements.

The Transformer architecture of an LLM is what allows it to pay attention to all of the tokens in a prompt. The dot-product and matrix multiplication operations it performs scale quadratically with the number of tokens.

This means that doubling the tokens in your prompt results in a requirement of four times more memory and processing power. This is why it’s so challenging to make LLMs with large context windows without having memory and compute requirements skyrocket.
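To make the quadratic growth concrete, here is a minimal sketch that counts the entries in a full token-by-token attention score matrix for a single attention head. The function name is made up for illustration, and real models add per-layer and per-head factors on top of this.

```python
def attention_score_entries(num_tokens: int) -> int:
    """Entries in a full token-by-token attention score matrix (one head)."""
    return num_tokens * num_tokens


# Doubling the prompt length quadruples the number of scores to compute and store.
for n in (1_000, 2_000, 4_000):
    print(f"{n:>5} tokens -> {attention_score_entries(n):>12,} scores")
```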

In a “standard” LLM, information at the beginning of the prompt content is lost once the prompt becomes larger than the context window. Google’s research paper explains how Infini-attention can retain data beyond the context window.

Google presents Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

1B model that was fine-tuned on up to 5K sequence length passkey instances solves the 1M length problem https://t.co/zyHMt3inhi pic.twitter.com/ySYEMET9Ef

— Aran Komatsuzaki (@arankomatsuzaki) April 11, 2024

How does Infini-attention work?

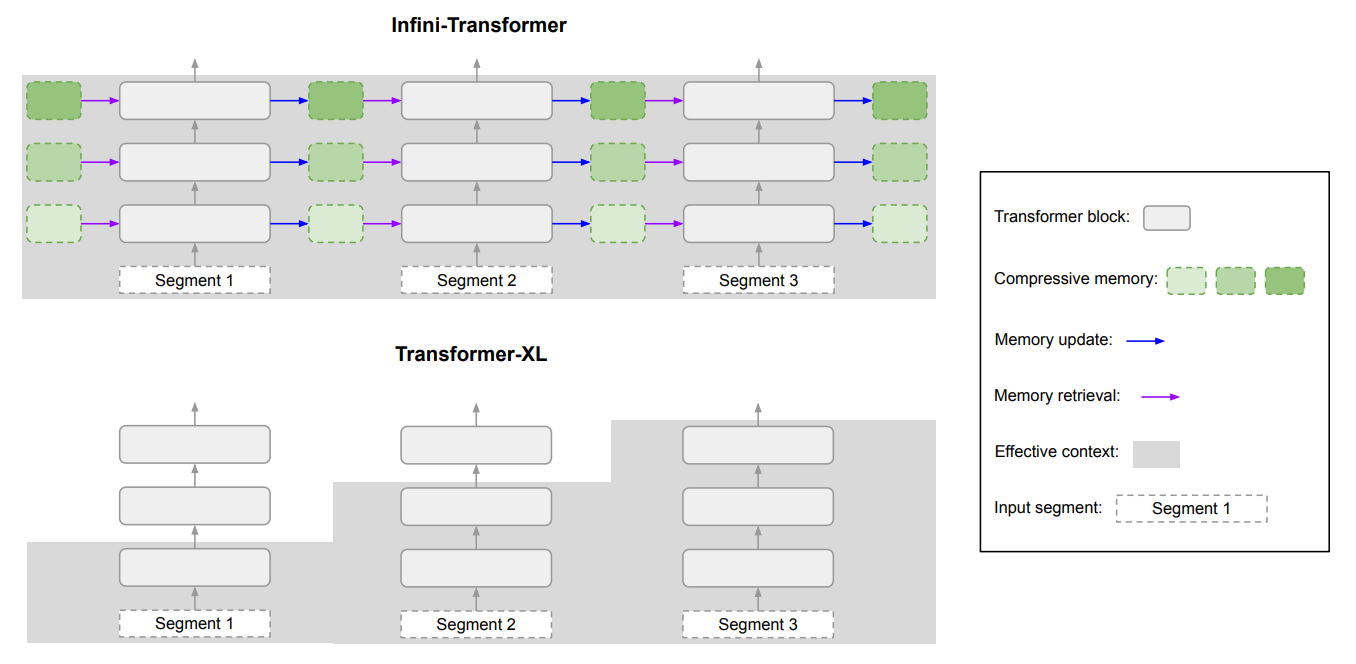

Infini-attention combines compressive memory techniques with modified attention mechanisms so that relevant older information isn’t lost.

Once the input prompt grows beyond the context length of the model, the compressive memory stores information in a compressed format rather than discarding it.

This allows older, less immediately relevant information to be stored without memory and compute requirements growing indefinitely as the input grows.

Instead of trying to retain all the older input information, Infini-attention’s compressive memory weighs and summarizes information that is deemed relevant and worth retaining.

Infini-attention then takes a “vanilla” attention mechanism but, rather than discarding the key-value (KV) states of each segment once it has been processed, reuses them by adding them to the compressive memory.
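A fixed-size memory of this kind can be sketched in a few lines. The example below loosely follows the linear-attention style associative memory described in the paper: key-value pairs are folded into one matrix plus a normalization vector, and a query reads back a weighted summary. The class and method names are invented for illustration, plain Python lists stand in for tensors, and this is a simplified sketch, not Google's implementation.

```python
import math


def elu_plus_one(x: float) -> float:
    """ELU(x) + 1, a positive feature map commonly used in linear attention."""
    return x + 1.0 if x > 0 else math.exp(x)


class CompressiveMemory:
    """Fixed-size memory: storage does not grow with the number of stored tokens."""

    def __init__(self, d_key: int, d_value: int) -> None:
        self.matrix = [[0.0] * d_value for _ in range(d_key)]  # d_key x d_value
        self.norm = [0.0] * d_key

    def update(self, keys, values) -> None:
        """Fold a segment's key-value pairs into the memory instead of dropping them."""
        for key, value in zip(keys, values):
            features = [elu_plus_one(k) for k in key]
            for i, f in enumerate(features):
                self.norm[i] += f
                for j, v in enumerate(value):
                    self.matrix[i][j] += f * v

    def retrieve(self, query):
        """Read a normalized, weighted summary of stored values for one query."""
        features = [elu_plus_one(q) for q in query]
        d_value = len(self.matrix[0])
        denom = sum(f * n for f, n in zip(features, self.norm))
        if denom == 0.0:  # nothing stored yet
            return [0.0] * d_value
        return [
            sum(f * row[j] for f, row in zip(features, self.matrix)) / denom
            for j in range(d_value)
        ]


memory = CompressiveMemory(d_key=2, d_value=3)
memory.update(keys=[[0.5, -1.0]], values=[[1.0, 2.0, 3.0]])
print(memory.retrieve([0.2, 0.1]))
```

With a single stored pair, retrieval returns that pair's value (up to floating-point rounding). As more pairs are added, the memory stays the same size and each read becomes a blend of what has been stored.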

Here’s a diagram that shows the difference between Infini-attention and another extended-context model, Transformer-XL.

The result is an LLM that gives local attention to recent input data but also carries continuously distilled, compressed historical data to which it can apply long-term attention.

The paper notes that “This subtle but critical modification to the attention layer enables LLMs to process infinitely long contexts with bounded memory and computation resources.”
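The paper blends the local and long-term attention outputs with a learned gate. A minimal sketch of that idea follows, assuming a single scalar gate parameter (called `beta` here) and plain lists in place of tensors. The function name is hypothetical.

```python
import math


def combine_attention(memory_out, local_out, beta: float):
    """Blend long-term (memory) and local attention outputs with a sigmoid gate."""
    gate = 1.0 / (1.0 + math.exp(-beta))  # squashes beta into the range (0, 1)
    return [gate * m + (1.0 - gate) * l for m, l in zip(memory_out, local_out)]


# beta = 0 weights both sources equally; a large positive beta favors the memory.
print(combine_attention([2.0, 4.0], [0.0, 0.0], beta=0.0))
```

In a trained model the gate would be learned, so the model itself decides how much to lean on compressed history versus the current segment.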

How good is it?

Google ran benchmarking tests using smaller 1B and 8B parameter Infini-attention models. These were compared against other extended-context models like Transformer-XL and Memorizing Transformers.

The Infini-Transformer achieved significantly lower perplexity scores than the other models when processing long-context content. A lower perplexity score means the model is more certain of its output predictions.
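For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-probability the model assigned to the correct tokens. The sketch below uses the standard definition, with made-up probabilities for illustration.

```python
import math


def perplexity(token_probs) -> float:
    """Perplexity from the probabilities a model gave to each correct token."""
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


confident = perplexity([0.9, 0.8, 0.95])  # model usually expects the right token
unsure = perplexity([0.2, 0.1, 0.25])     # model is frequently surprised
print(confident < unsure)
```

A model that guesses uniformly among four options has a perplexity of about 4, which is why the score is often read as the effective number of choices the model is weighing.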

In the “passkey retrieval” tests, the Infini-attention models consistently found the random number hidden in text of up to 1M tokens.

Other models often manage to retrieve the passkey towards the end of the input but struggle to find it in the middle or beginning of long content. Infini-attention had no trouble with this test.
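A passkey test of this kind can be approximated as follows. This is only a sketch of the general idea: the filler sentence, the prompt wording, and the function name are assumptions for illustration, not the exact setup used in the paper.

```python
import random


def build_passkey_prompt(num_filler: int, position: str, rng: random.Random):
    """Hide a random passkey at the start, middle, or end of repetitive filler text."""
    passkey = rng.randint(10_000, 99_999)
    sentences = ["The grass is green. The sky is blue."] * num_filler
    needle = f"The pass key is {passkey}. Remember it."
    index = {"start": 0, "middle": num_filler // 2, "end": num_filler}[position]
    sentences.insert(index, needle)
    prompt = " ".join(sentences) + " What is the pass key?"
    return prompt, passkey


prompt, passkey = build_passkey_prompt(1_000, "middle", random.Random(0))
print(len(prompt.split()), "words; passkey present:", str(passkey) in prompt)
```

Scoring is then a matter of checking whether the model’s answer contains the hidden number, repeated across positions and input lengths.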

The benchmarking tests are very technical, but the short story is that Infini-attention outperformed the baseline models in summarizing and handling long sequences while maintaining context over extended periods.

Significantly, it delivered this superior retention while requiring 114x less memory.

The benchmark results convince the researchers that Infini-attention could be scaled to handle extremely long input sequences while keeping the memory and computational resources bounded.

The plug-and-play nature of Infini-attention means it could be used for continual pre-training and fine-tuning of existing Transformer models. This could effectively extend their context windows without requiring complete retraining of the model.

Context windows will keep growing, but this approach shows that an efficient memory could be a better solution than a large library.