Image by Author

Large Language Models (LLMs) are a new type of artificial intelligence trained on massive amounts of text data. Their main ability is to generate human-like text in response to a wide range of prompts and requests.

I bet you have already had some experience with popular LLM solutions like ChatGPT or Google Gemini.

But have you ever wondered how these powerful models deliver such lightning-fast responses?

The answer lies in a specialized field called LLMOps.

Before diving in, let's try to visualize the importance of this field.

Imagine you're having a conversation with a friend. You would normally expect that when you ask a question, they give you an answer right away, and the dialogue flows effortlessly.

Right?

Users expect this same smooth exchange when interacting with Large Language Models (LLMs). Imagine chatting with ChatGPT and having to wait a couple of minutes every time you send a prompt. Nobody would use it at all; I certainly wouldn't.

This is why LLM-based products rely on LLMOps to achieve this conversational flow and effectiveness. This guide aims to be your companion as you take your first steps in this brand-new domain.

LLMOps, short for Large Language Model Operations, is the behind-the-scenes magic that keeps LLMs running efficiently and reliably. It evolved from the familiar MLOps and is specifically designed to address the unique challenges posed by LLMs.

MLOps manages the lifecycle of general machine learning models. LLMOps focuses on the requirements that are specific to LLMs.

When you use models from companies like OpenAI or Anthropic through a web interface or an API, LLMOps works behind the scenes to make those models available as services. However, when you deploy a model for a specialized application, that responsibility falls on you.

Think of LLMOps as a moderator managing the flow of a debate. A moderator keeps the conversation running smoothly and on topic, makes sure nobody uses offensive language, and tries to prevent fake news. In the same way, LLMOps keeps LLMs operating at peak performance, delivers seamless user experiences, and checks the safety of the output.

Building applications with Large Language Models (LLMs) raises challenges that differ from those of conventional machine learning. New management tools and methodologies were developed to handle them, and together they became the LLMOps framework.

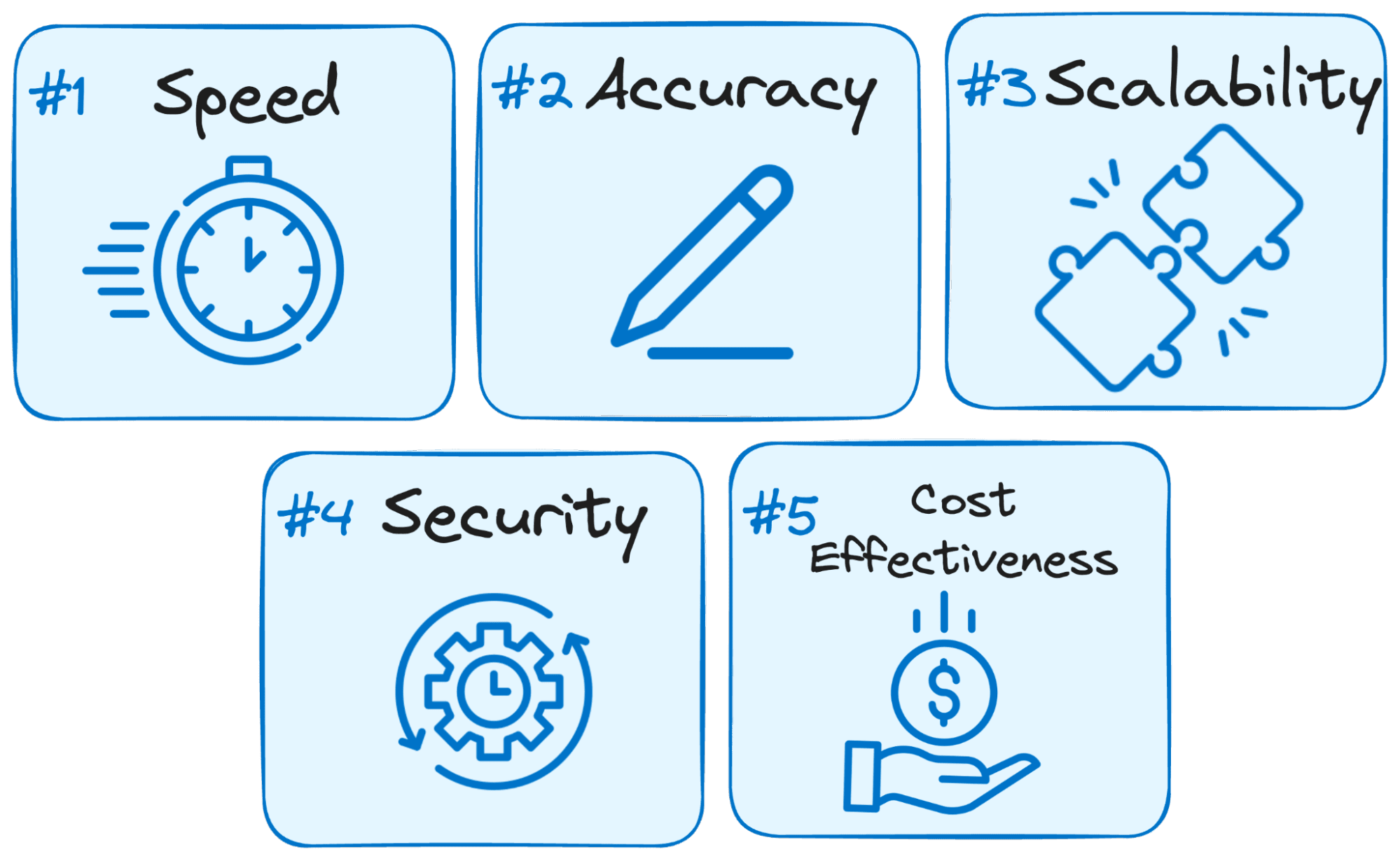

Here's why LLMOps is crucial for the success of any LLM-powered application:

Image by Author

- Speed is Key: Users expect immediate responses from LLMs. LLMOps optimizes the process to minimize latency, so you get answers within a reasonable timeframe.

- Accuracy Matters: LLMOps applies various checks and controls to help ensure that the LLM's responses are accurate and relevant.

- Scalability for Growth: As your LLM application gains traction, LLMOps helps you scale resources efficiently to handle growing user loads.

- Security is Paramount: LLMOps protects the integrity of the LLM system and keeps sensitive data safe through robust security measures.

- Cost-effectiveness: Running LLMs can be expensive because they need significant resources. LLMOps applies cost-saving methods that make the most of those resources without sacrificing performance.
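To make the cost-effectiveness point more concrete, here is a minimal sketch of the back-of-the-envelope arithmetic behind LLM budgeting. It assumes per-token billing with separate input and output rates, a pricing model common for hosted LLM APIs. The `TokenPricing` class and the prices used below are hypothetical placeholders, not any provider's actual rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Hypothetical prices in USD per 1,000 tokens (placeholders, not real rates)."""
    input_per_1k: float
    output_per_1k: float


def estimate_monthly_cost(pricing: TokenPricing,
                          requests_per_day: int,
                          avg_input_tokens: int,
                          avg_output_tokens: int,
                          days: int = 30) -> float:
    """Cost = requests x (input tokens x input rate + output tokens x output rate)."""
    per_request = (avg_input_tokens / 1000) * pricing.input_per_1k \
        + (avg_output_tokens / 1000) * pricing.output_per_1k
    return per_request * requests_per_day * days


if __name__ == "__main__":
    pricing = TokenPricing(input_per_1k=0.0005, output_per_1k=0.0015)  # placeholder numbers
    baseline = estimate_monthly_cost(pricing, 10_000, avg_input_tokens=800, avg_output_tokens=300)
    trimmed = estimate_monthly_cost(pricing, 10_000, avg_input_tokens=400, avg_output_tokens=300)
    print(f"baseline: ${baseline:,.2f}/month, shorter prompts: ${trimmed:,.2f}/month")
```

Comparing the two estimates shows that, under this pricing model, trimming prompt length reduces the bill directly.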

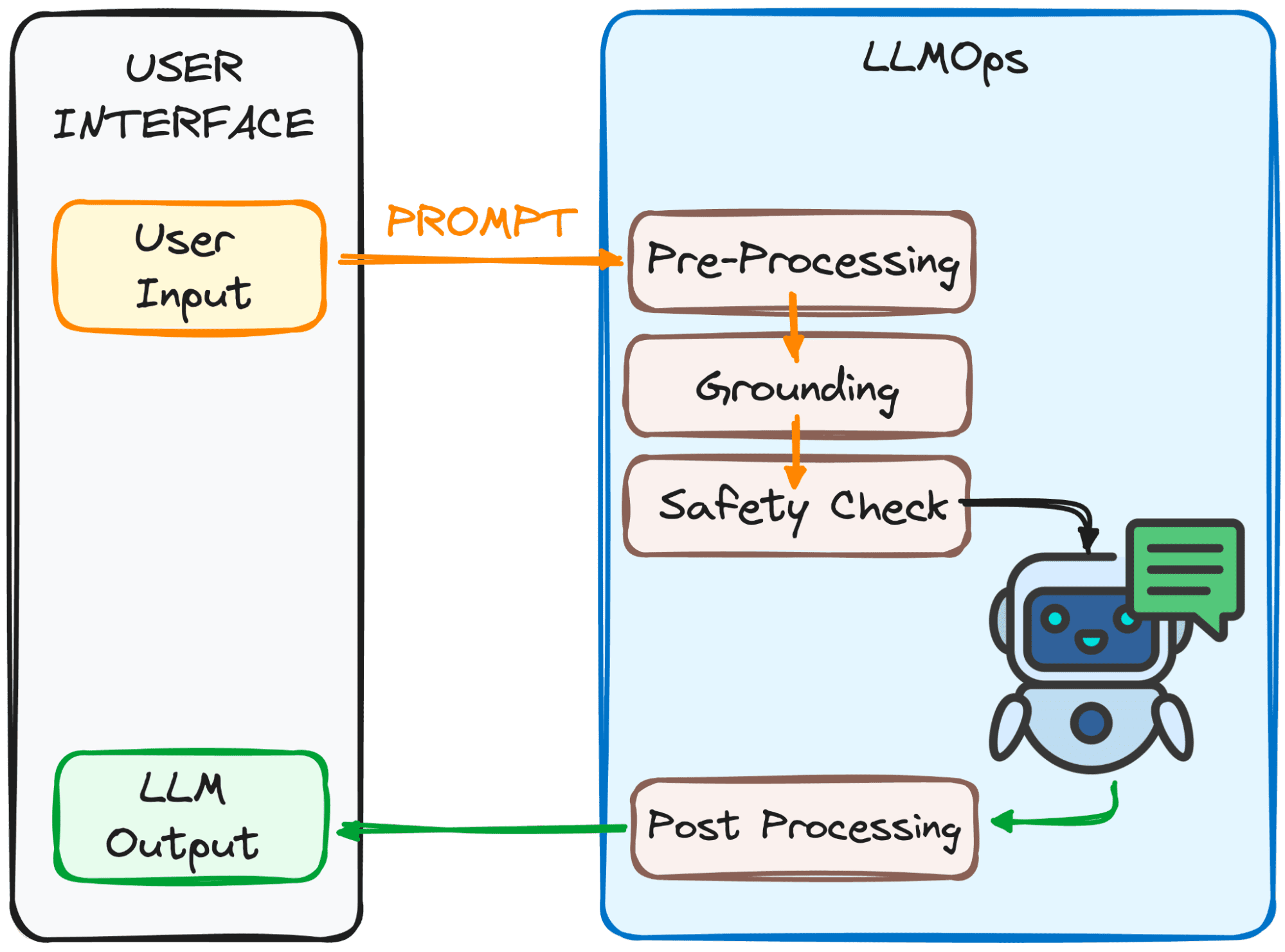

LLMOps makes sure your prompt is ready for the LLM and that the response comes back to you as fast as possible. This is not easy at all.

The process involves several steps, mainly four, which are shown in the image below.

Image by Author

What is the goal of these steps?

To make the prompt clear and understandable for the model.

Here's a breakdown of these steps:

1. Pre-processing

The prompt first goes through a basic processing step. It is broken down into smaller pieces called tokens. Then any typos or unusual characters are cleaned up, and the text is formatted consistently.

Finally, the tokens are converted into numerical representations (embeddings) that the LLM can work with.
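Here is a toy sketch of the pre-processing stages described above: cleaning, tokenization, and embedding. It makes simplifying assumptions that real systems do not. It splits text into words and punctuation with a regular expression instead of using a learned subword tokenizer. It builds its vocabulary from the input itself rather than using a fixed one. It uses a randomly initialized embedding table in place of the model's learned weights. All function names are hypothetical, and this sketch cleans the text before tokenizing it.

```python
from __future__ import annotations

import random
import re
import unicodedata


def clean(text: str) -> str:
    """Normalize Unicode, drop invisible control characters, collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    # Keep whitespace characters but drop other control/format characters (category "C*").
    text = "".join(ch for ch in text if ch.isspace() or not unicodedata.category(ch).startswith("C"))
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text: str) -> list[str]:
    """Toy tokenizer: lowercase words and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Map each distinct token to an integer id; id 0 is reserved for unknown tokens."""
    vocab = {"<unk>": 0}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def embed(token_ids: list[int], vocab_size: int, dim: int = 4, seed: int = 0) -> list[list[float]]:
    """Look up each id in a random embedding table (a stand-in for learned weights)."""
    rng = random.Random(seed)
    table = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]
    return [table[i] for i in token_ids]


if __name__ == "__main__":
    prompt = "  What's   the weather\u00a0in Barcelona?\x07 "
    cleaned = clean(prompt)  # "What's the weather in Barcelona?"
    tokens = tokenize(cleaned)
    vocab = build_vocab(tokens)
    ids = [vocab.get(tok, 0) for tok in tokens]
    vectors = embed(ids, vocab_size=len(vocab))
    print(tokens)
    print(ids)
    print(len(vectors), "vectors of dimension", len(vectors[0]))
```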

2. Grounding

Before the model processes our prompt, we need to make sure it understands the bigger picture. This might involve referencing past conversations you've had with the LLM or drawing on external knowledge.

The system also identifies important entities mentioned in the prompt, such as names or places, to make the response even more relevant.
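Here is a minimal sketch of the grounding step, built on simplifying assumptions. External knowledge is selected from a small in-memory list by counting shared words; production systems more often use embedding-based vector search. Recent conversation turns are included verbatim. Entity detection is a plain dictionary lookup standing in for a real named-entity recognizer. The function names, the example documents, and the prompt layout are all hypothetical.

```python
from __future__ import annotations

import re


def words(text: str) -> set[str]:
    """Lowercased word set used for naive overlap scoring."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return up to k documents sharing the most words with the query (zero-overlap ones dropped)."""
    q = words(query)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return [d for d in ranked[:k] if q & words(d)]


def find_entities(text: str, known_entities: set[str]) -> list[str]:
    """Dictionary lookup standing in for a real named-entity recognizer."""
    return [w for w in re.findall(r"\w+", text) if w in known_entities]


def build_grounded_prompt(user_prompt: str, history: list[str], documents: list[str],
                          known_entities: set[str], max_turns: int = 2) -> str:
    """Assemble recent history, retrieved facts, and detected entities around the prompt."""
    parts = []
    if history:
        parts.append("Conversation so far:\n" + "\n".join(history[-max_turns:]))
    facts = retrieve(user_prompt, documents)
    if facts:
        parts.append("Relevant facts:\n" + "\n".join(facts))
    entities = find_entities(user_prompt, known_entities)
    if entities:
        parts.append("Entities mentioned: " + ", ".join(entities))
    parts.append("User: " + user_prompt)
    return "\n\n".join(parts)


if __name__ == "__main__":
    docs = [
        "Barcelona is a city on the coast of northeastern Spain.",
        "LLMOps covers deploying and monitoring LLMs.",
    ]
    history = ["User: I'm planning a trip.", "Assistant: Great, where are you going?"]
    print(build_grounded_prompt("What should I visit in Barcelona?", history, docs, {"Barcelona", "Spain"}))
```

Keeping only the last few turns (`max_turns`) is one simple way to stop the grounded prompt from growing without bound as a conversation gets longer.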

3. Safety Check

Just like the safety rules on a film set, LLMOps makes sure the prompt is used appropriately. The system checks for things like sensitive information or potentially offensive content.

Only after passing these checks is the prompt ready for the main act: the LLM!
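Here is a minimal sketch of a rule-based prompt safety check. For illustration it assumes two rules. Regular expressions flag likely email addresses and phone numbers as sensitive information and redact them. A placeholder blocklist stands in for offensive-content detection. The patterns, the blocklist terms, and the policy (redact personal data, reject blocklisted prompts) are hypothetical and deliberately crude. Real deployments usually rely on trained moderation classifiers instead.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field

# Hypothetical, deliberately crude patterns for sensitive information.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "phone": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}
# Placeholder blocklist standing in for a real offensive-content classifier.
BLOCKLIST = {"badword1", "badword2"}


@dataclass
class SafetyResult:
    allowed: bool
    reasons: list[str] = field(default_factory=list)
    redacted: str = ""


def check_prompt(prompt: str) -> SafetyResult:
    """Redact likely personal data and reject prompts containing blocklisted terms (assumed policy)."""
    reasons: list[str] = []
    redacted = prompt
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(redacted):
            reasons.append(f"contains {label}")
            redacted = pattern.sub(f"[{label.upper()}]", redacted)
    hits = sorted(set(re.findall(r"\w+", prompt.lower())) & BLOCKLIST)
    if hits:
        reasons.append("blocked terms: " + ", ".join(hits))
    return SafetyResult(allowed=not hits, reasons=reasons, redacted=redacted)


if __name__ == "__main__":
    result = check_prompt("Email jane.doe@example.com or call +34 600 123 456")
    print(result.allowed, result.reasons)
    print(result.redacted)
```

The phone pattern will also match other long digit runs, such as dates written as `2024-01-01`. That is one reason simple rules like these are usually paired with, or replaced by, learned detectors.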

Now our prompt is ready to be processed by the LLM. However, the model's output also needs to be analyzed and processed. Before you see it, a few more adjustments are made in the fourth step:

4. Post-Processing

Remember how the prompt was converted into numbers? The model's response also comes out as tokens, which must be translated back into human-readable text. The system then polishes the response for grammar, style, and clarity.
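Here is a toy sketch of post-processing. It maps token ids back to text with an inverse vocabulary, then applies light cosmetic clean-up: it removes spaces before punctuation, collapses whitespace, and capitalizes the first letter. The vocabulary, function names, and clean-up rules are illustrative assumptions. Real systems decode with the same tokenizer that encoded the prompt, and polishing grammar or style goes well beyond these few rules.

```python
from __future__ import annotations

import re


def decode(token_ids: list[int], vocab: dict[str, int]) -> str:
    """Invert the vocabulary and join tokens with spaces (unknown ids become '<unk>')."""
    inverse = {i: tok for tok, i in vocab.items()}
    return " ".join(inverse.get(i, "<unk>") for i in token_ids)


def polish(text: str) -> str:
    """Cosmetic fixes: no space before punctuation, single spaces, capitalized first letter."""
    text = re.sub(r"\s+([.,!?;:])", r"\1", text)
    text = re.sub(r"\s+", " ", text).strip()
    return text[:1].upper() + text[1:]


if __name__ == "__main__":
    vocab = {"<unk>": 0, "the": 1, "answer": 2, "is": 3, "ready": 4, "!": 5}
    raw = decode([1, 2, 3, 4, 5], vocab)
    print(raw)
    print(polish(raw))
```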

All these steps happen seamlessly thanks to LLMOps, the invisible crew member that keeps the LLM experience smooth.

Impressive, right?

Here are some of the essential building blocks of a well-designed LLMOps setup:

- Choosing the Right LLM: With so many LLMs available, LLMOps helps you select the one that best fits your specific needs and resources.

- Fine-Tuning for Specificity: LLMOps lets you fine-tune existing models or train your own, customizing them for your particular use case.

- Prompt Engineering: LLMOps gives you techniques for crafting effective prompts that guide the LLM toward the desired outcome.

- Deployment and Monitoring: LLMOps streamlines deployment and continuously monitors the LLM's performance to keep it working well.

- Security Safeguards: LLMOps prioritizes data security and puts robust measures in place to protect sensitive information.
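For the Deployment and Monitoring building block, here is a minimal sketch of one common metric: response latency, with its 95th percentile compared against a target. The `LatencyMonitor` class, the 2-second target, and the nearest-rank percentile method are assumptions chosen for illustration. Production setups typically export metrics like this to a dedicated monitoring system rather than keeping them in memory.

```python
from __future__ import annotations

import math
import time
from contextlib import contextmanager


class LatencyMonitor:
    """Collects request latencies in seconds and checks them against a p95 target."""

    def __init__(self, p95_target_s: float = 2.0):
        self.p95_target_s = p95_target_s
        self.samples: list[float] = []

    @contextmanager
    def track(self):
        """Time the wrapped block and record its duration, even if it raises."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.samples.append(time.perf_counter() - start)

    def percentile(self, p: float) -> float:
        """Nearest-rank percentile of the recorded samples (p between 0 and 100)."""
        if not self.samples:
            raise ValueError("no samples recorded")
        ordered = sorted(self.samples)
        rank = math.ceil(p * len(ordered) / 100)
        return ordered[max(rank, 1) - 1]

    def healthy(self) -> bool:
        """True when the 95th-percentile latency is within the target."""
        return self.percentile(95) <= self.p95_target_s


if __name__ == "__main__":
    monitor = LatencyMonitor(p95_target_s=2.0)
    for _ in range(5):
        with monitor.track():
            time.sleep(0.01)  # stand-in for a real LLM call
    print(f"p50={monitor.percentile(50):.3f}s healthy={monitor.healthy()}")
```

Tracking a high percentile rather than the average reflects the "Speed is Key" concern: a few very slow responses can hurt the user experience even when the mean looks fine.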

As LLM technology continues to evolve, LLMOps will play a critical role in upcoming technological developments. Much of the success of popular solutions like ChatGPT or Google Gemini comes from their ability not only to answer almost any request but also to provide a good user experience.

By ensuring efficient, reliable, and secure operation, LLMOps will pave the way for even more innovative and transformative LLM applications across many industries, reaching even more people.

With a solid understanding of LLMOps, you're well-equipped to harness the power of these LLMs and create groundbreaking applications.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and currently works in data science applied to human mobility. He is a part-time content creator focused on data science and technology. Josep writes on all things AI, covering the applications of the ongoing explosion in the field.