Image by Author

Anthropic has recently launched a new series of AI models that have outperformed both GPT-4 and Gemini in benchmark tests. With the AI industry growing and evolving rapidly, the Claude 3 models are making significant strides as the next big thing in Large Language Models (LLMs).

In this blog post, we will explore the performance benchmarks of the Claude 3 models. We will also learn about the new Python API that supports simple, asynchronous, and streaming response generation, along with its enhanced vision capabilities.

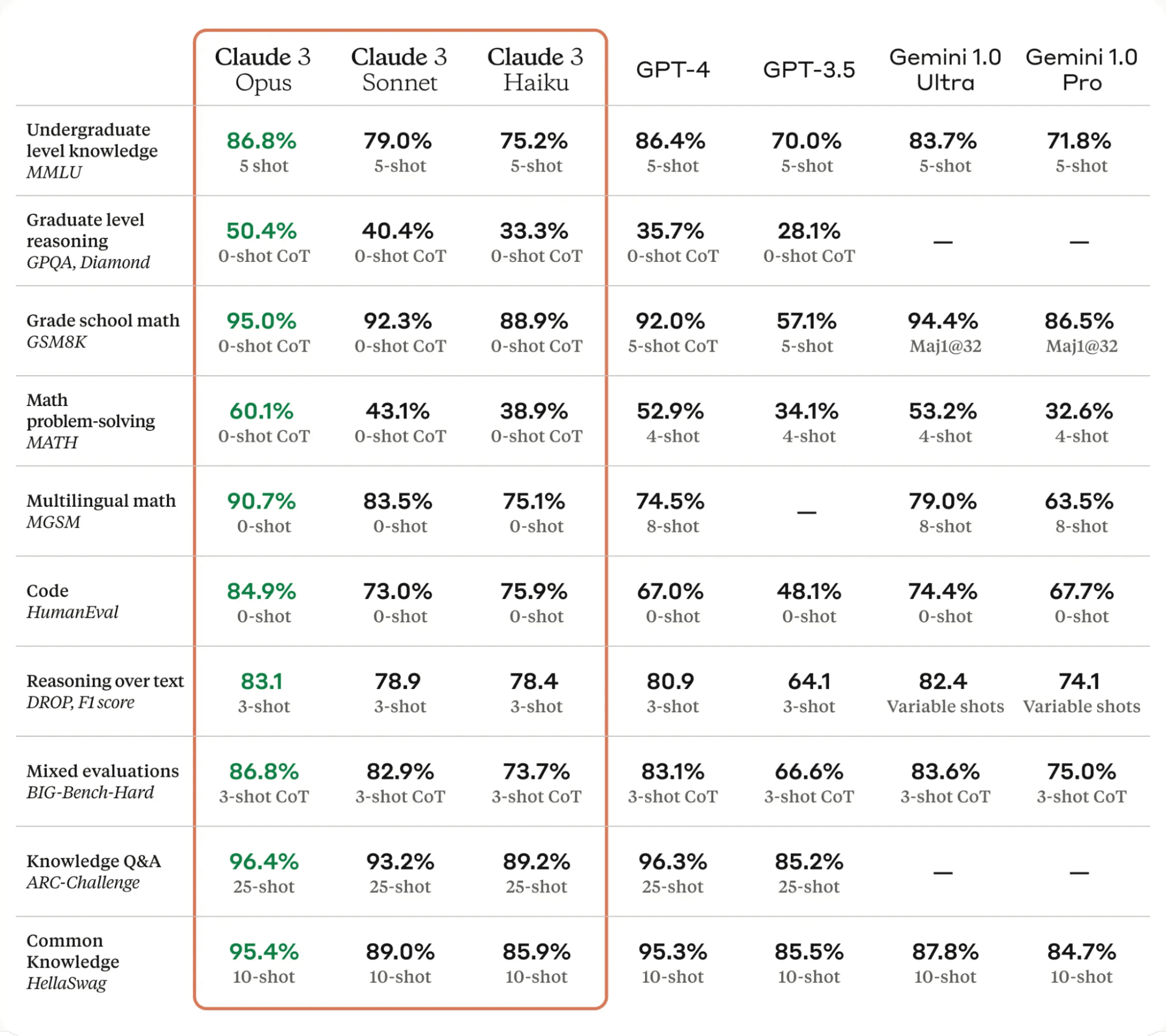

Claude 3 is a significant leap forward in the field of AI technology. It outperforms state-of-the-art language models on various evaluation benchmarks, including MMLU, GPQA, and GSM8K, demonstrating near-human levels of comprehension and fluency in complex tasks.

The Claude 3 models come in three variants: Haiku, Sonnet, and Opus, each with its unique capabilities and strengths.

- Haiku is the fastest and most cost-effective model, capable of reading and processing information-dense research papers in less than three seconds.

- Sonnet is 2x faster than Claude 2 and 2.1, excelling at tasks demanding rapid responses, like knowledge retrieval or sales automation.

- Opus delivers similar speeds to Claude 2 and 2.1 but with much higher levels of intelligence.

According to the table below, Claude 3 Opus outperformed GPT-4 and Gemini Ultra on all LLM benchmarks, making it the new leader in the AI world.

Table from Claude 3

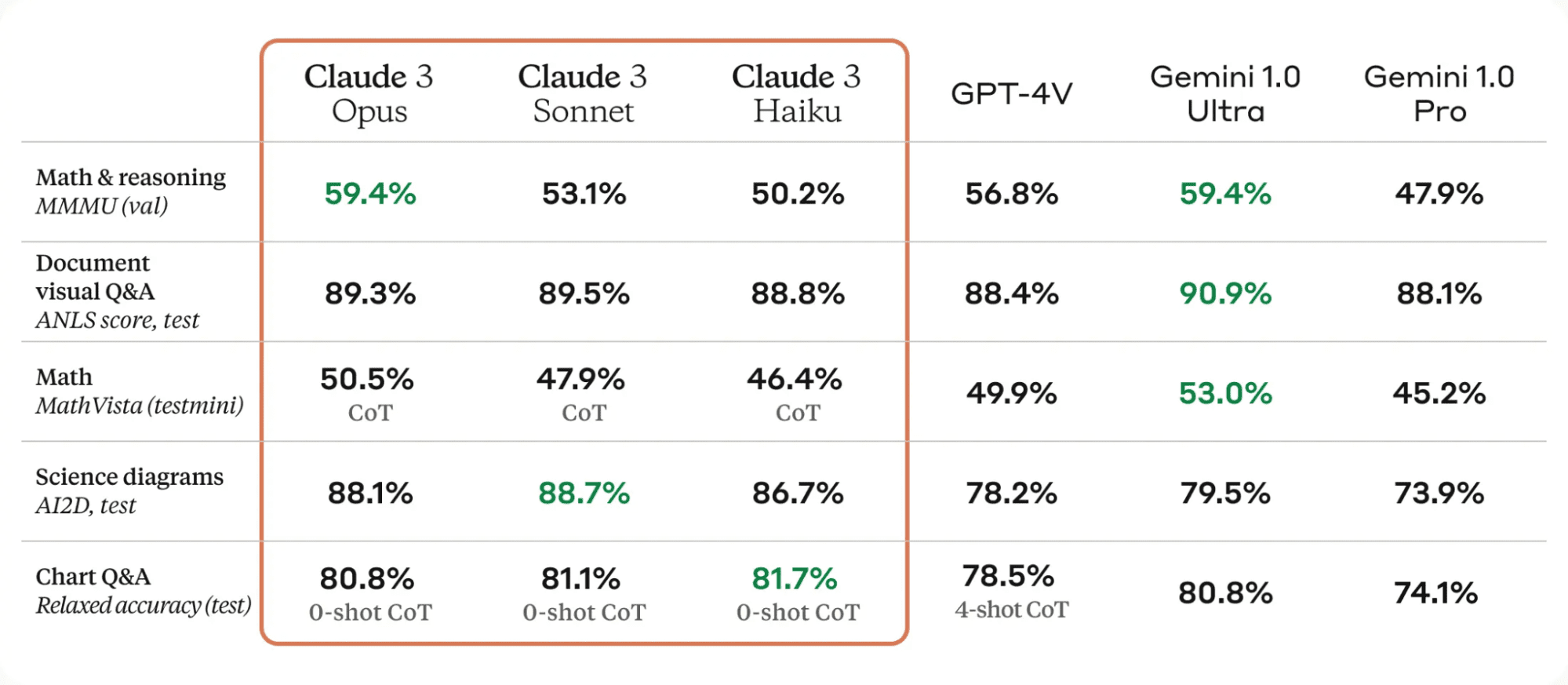

One of the significant improvements in the Claude 3 models is their strong vision capabilities. They can process various visual formats, including photos, charts, graphs, and technical diagrams.

Table from Claude 3

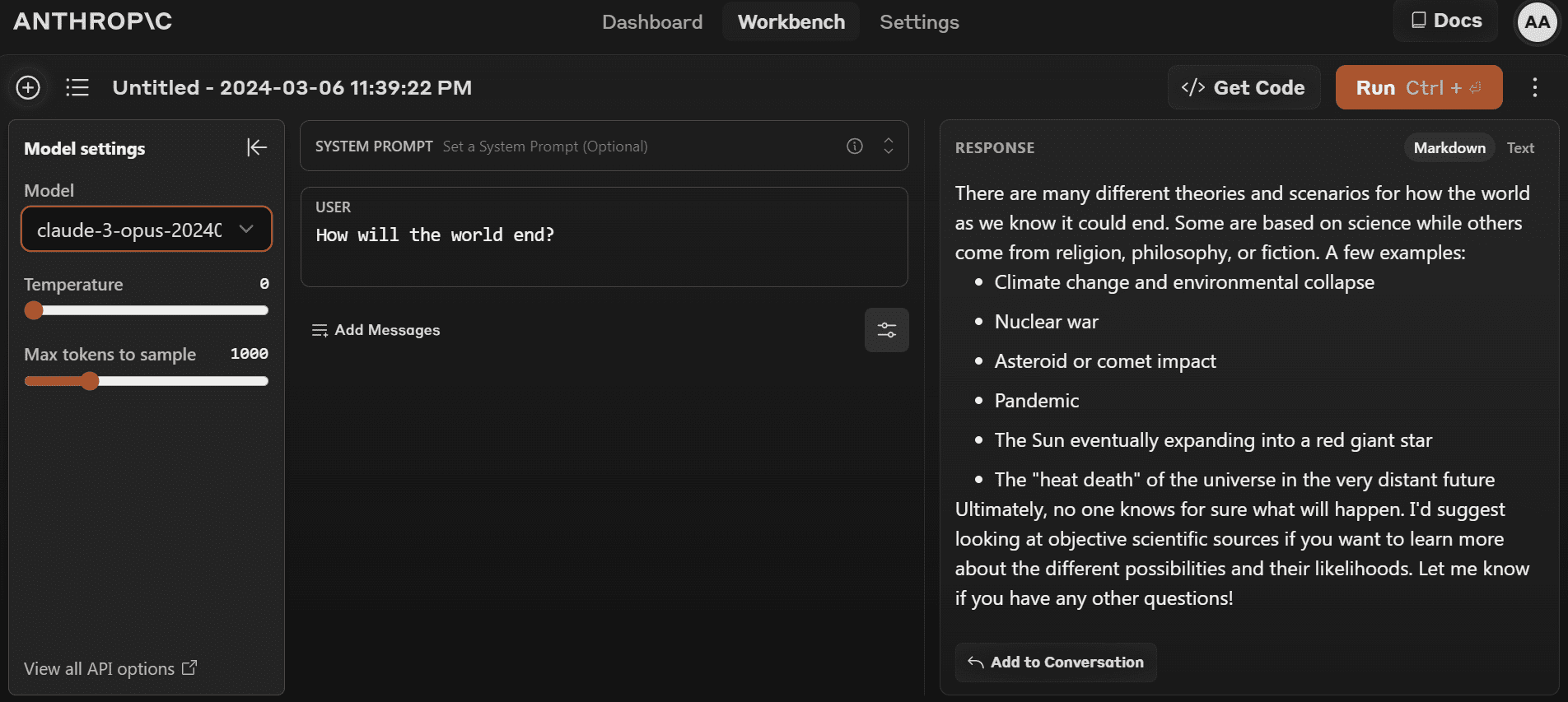

You can start using the latest model by going to https://www.anthropic.com/claude and creating a new account. It is quite simple compared to the OpenAI playground.

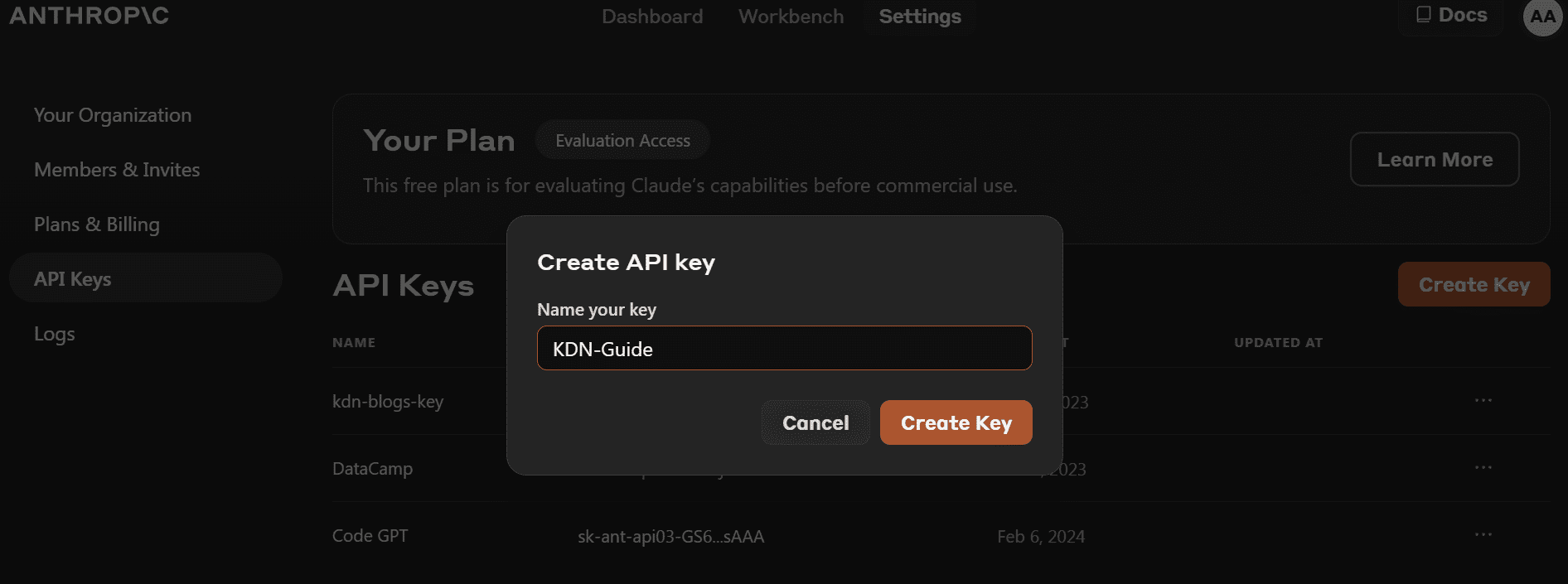

- Before we install the Python package, we need to go to https://console.anthropic.com/dashboard and get the API key.

- Instead of providing the API key directly when creating the client object, you can set the `ANTHROPIC_API_KEY` environment variable and provide it as the key.

- Install the `anthropic` Python package using pip.

- Create the client object using the API key. We will use the client for text generation, accessing vision capabilities, and streaming.

    import os
    import anthropic
    from IPython.display import Markdown, display

    # Read the key from the environment instead of hardcoding it
    client = anthropic.Anthropic(
        api_key=os.environ["ANTHROPIC_API_KEY"],
    )
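Looking up `os.environ["ANTHROPIC_API_KEY"]` directly raises a bare `KeyError` when the variable is missing. As a minimal sketch, a small wrapper can fail early with a more readable message. Note that `get_api_key` is a hypothetical helper written for this post, not part of the `anthropic` package.

```python
import os


def get_api_key(env_var: str = "ANTHROPIC_API_KEY") -> str:
    """Return the API key from the environment, or raise a readable error.

    Hypothetical helper for illustration; not provided by the anthropic package.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set. Export it in your shell or notebook "
            "environment before creating the client."
        )
    return key
```

The result can then be passed to the client in the usual way, for example `anthropic.Anthropic(api_key=get_api_key())`.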

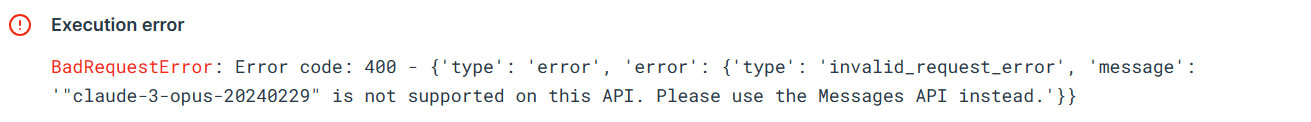

Let’s try the old Python API to test whether it still works. We will provide the completion API with the model name, max token length, and prompt.

    from anthropic import HUMAN_PROMPT, AI_PROMPT

    # Legacy Text Completions call, kept here to check whether it still works
    completion = client.completions.create(
        model="claude-3-opus-20240229",
        max_tokens_to_sample=300,
        prompt=f"{HUMAN_PROMPT} How do I cook a original pasta?{AI_PROMPT}",
    )
    Markdown(completion.completion)

The error shows that we cannot use the old API for the `claude-3-opus-20240229` model. We need to use the Messages API instead.

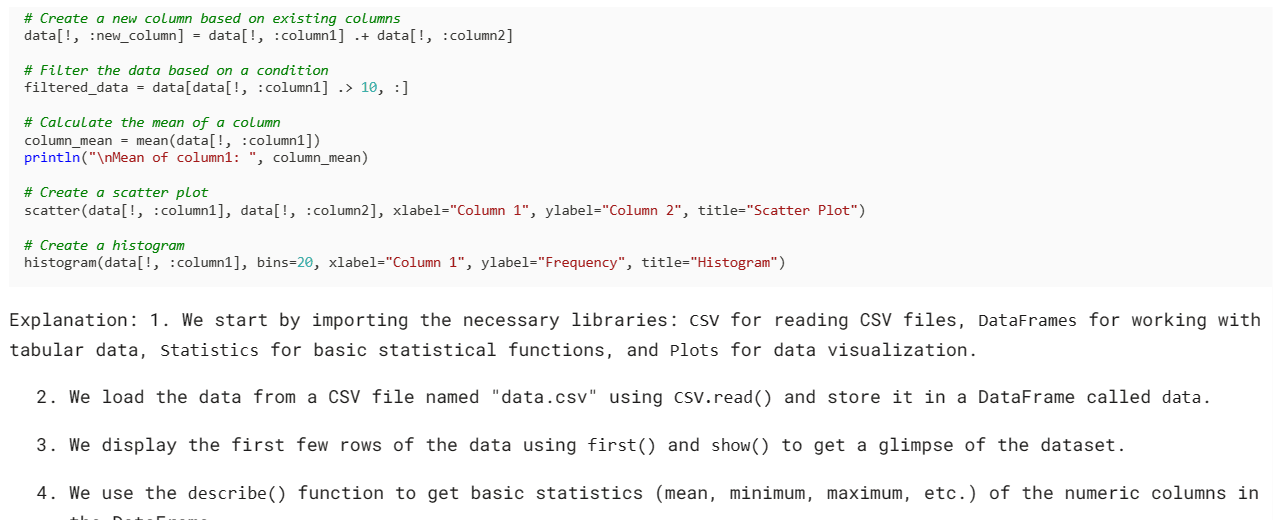

Let’s use the Messages API to generate the response. Instead of `prompt`, we have to provide the `messages` argument with a list of dictionaries containing the role and content.

    Prompt = "Write the Julia code for the simple data analysis."

    message = client.messages.create(
        model="claude-3-opus-20240229",
        max_tokens=1024,
        messages=[
            {"role": "user", "content": Prompt}
        ]
    )
    # The reply text is in the first content block
    Markdown(message.content[0].text)

Using IPython Markdown will display the response in Markdown format. This means it will show bullet points, code blocks, headings, and links in a clean way.

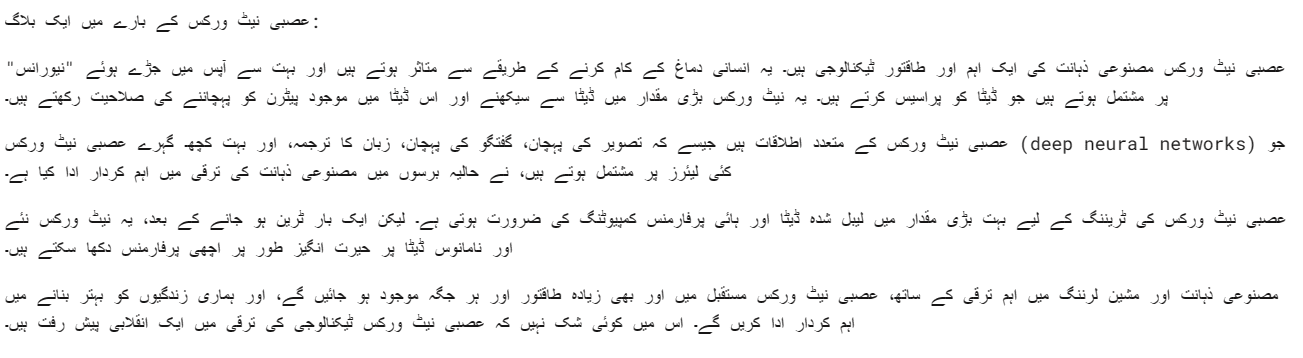
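Because the `messages` argument is just a list of role/content dictionaries, a longer conversation is simply a longer list. The sketch below builds such a list from `(role, text)` pairs and rejects obviously malformed entries. `build_messages` and `VALID_ROLES` are hypothetical names introduced here for illustration, and the assistant turn is a placeholder rather than real model output.

```python
from typing import Dict, List, Tuple

# Roles accepted inside the messages list. The system prompt is not a role
# here; it is passed through the separate `system` argument.
VALID_ROLES = {"user", "assistant"}


def build_messages(turns: List[Tuple[str, str]]) -> List[Dict[str, str]]:
    """Convert (role, text) pairs into role/content dictionaries."""
    messages = []
    for role, text in turns:
        if role not in VALID_ROLES:
            raise ValueError(f"Unsupported role: {role!r}")
        if not text.strip():
            raise ValueError("Message content must not be empty")
        messages.append({"role": role, "content": text})
    return messages


messages = build_messages([
    ("user", "Write the Julia code for the simple data analysis."),
    ("assistant", "(the model's previous reply goes here)"),
    ("user", "Now add a plot of the results."),
])
```

The resulting list can be passed as `messages=messages` to `client.messages.create`.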

We can also provide a system prompt to customize the response. In our case, we are asking Claude 3 Opus to respond in Urdu.

    client = anthropic.Anthropic(
        api_key=os.environ["ANTHROPIC_API_KEY"],
    )

    Prompt = "Write a blog about neural networks."

    message = client.messages.create(
        model="claude-3-opus-20240229",
        max_tokens=1024,
        # The system prompt is passed separately from the messages list
        system="Respond only in Urdu.",
        messages=[
            {"role": "user", "content": Prompt}
        ]
    )
    Markdown(message.content[0].text)

The Opus model is quite good. I mean, I can understand the response quite clearly.

Synchronous APIs execute API requests sequentially, blocking until a response is received before invoking the next call. Asynchronous APIs, on the other hand, allow multiple concurrent requests without blocking, making them more efficient and scalable.

- We have to create an Async Anthropic client.

- Create the main function with async.

- Generate the response using the await syntax.

- Run the main function using the await syntax.

    import asyncio
    from anthropic import AsyncAnthropic

    client = AsyncAnthropic(
        api_key=os.environ["ANTHROPIC_API_KEY"],
    )

    async def main() -> None:
        Prompt = "What is LLMOps and how do I start learning it?"

        # await yields control until the response arrives
        message = await client.messages.create(
            max_tokens=1024,
            messages=[
                {
                    "role": "user",
                    "content": Prompt,
                }
            ],
            model="claude-3-opus-20240229",
        )
        display(Markdown(message.content[0].text))

    await main()

Note: If you are using async in a Jupyter Notebook, use `await main()` instead of `asyncio.run(main())`.
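To make the benefit of non-blocking calls more concrete, here is a minimal sketch that runs several requests concurrently with `asyncio.gather`. It deliberately does not call the Anthropic API: `fake_request` is a hypothetical stand-in that only sleeps to simulate network latency, and `run_all` is likewise a name invented for this example.

```python
import asyncio
from typing import List


async def fake_request(prompt: str, delay: float = 0.1) -> str:
    """Stand-in for an API call: waits, then returns a canned reply."""
    await asyncio.sleep(delay)
    return f"response to: {prompt}"


async def run_all(prompts: List[str]) -> List[str]:
    """Start every request at once and wait for all of them to finish."""
    # gather() returns results in the same order as the inputs
    return await asyncio.gather(*(fake_request(p) for p in prompts))


if __name__ == "__main__":
    # In a script use asyncio.run(); in a notebook use `await run_all(...)`.
    replies = asyncio.run(run_all(["first", "second", "third"]))
    print(replies)
```

Since the three simulated requests wait at the same time, the total runtime is close to a single delay rather than the sum of all three. Under the same assumption, replacing `fake_request` with an awaited `client.messages.create` call should follow the same pattern.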

Streaming is an approach that enables processing the output of a language model as soon as it becomes available, without waiting for the complete response. This method minimizes the perceived latency by returning the output token by token, instead of all at once.

Instead of `messages.create`, we will use `messages.stream` for response streaming and use a loop to display multiple words from the response as soon as they are available.

    from anthropic import Anthropic

    client = Anthropic(
        api_key=os.environ["ANTHROPIC_API_KEY"],
    )

    Prompt = "Write a mermaid code for typical MLOps workflow."

    completion = client.messages.stream(
        max_tokens=1024,
        messages=[
            {
                "role": "user",
                "content": Prompt,
            }
        ],
        model="claude-3-opus-20240229",
    )

    # Print each chunk of text as soon as it arrives
    with completion as stream:
        for text in stream.text_stream:
            print(text, end="", flush=True)

As we can see, the response is generated quite fast.
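The printing loop is the same regardless of where the chunks come from. As a rough offline illustration, the sketch below replaces the API stream with a hypothetical generator, `fake_text_stream`, that yields a fixed string word by word. Both function names are assumptions made for this example.

```python
import time
from typing import Iterator


def fake_text_stream(text: str, delay: float = 0.0) -> Iterator[str]:
    """Yield a reply word by word, imitating chunks arriving over time."""
    words = text.split(" ")
    for i, word in enumerate(words):
        time.sleep(delay)
        # Re-attach the space that split() removed, except after the last word
        yield word if i == len(words) - 1 else word + " "


def consume(stream: Iterator[str]) -> str:
    """Print each chunk as it arrives and return the assembled reply."""
    parts = []
    for chunk in stream:
        print(chunk, end="", flush=True)
        parts.append(chunk)
    print()
    return "".join(parts)


full = consume(fake_text_stream("graph TD; A --> B; B --> C", delay=0.05))
```

Collecting the chunks while printing them is a small design choice: the user sees output immediately, and the complete text is still available afterwards.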

We can use an async function with streaming as well. You just need to be creative and combine them.

    import asyncio
    from anthropic import AsyncAnthropic

    # With no api_key argument, the client reads ANTHROPIC_API_KEY itself
    client = AsyncAnthropic()

    async def main() -> None:
        completion = client.messages.stream(
            max_tokens=1024,
            messages=[
                {
                    "role": "user",
                    "content": Prompt,
                }
            ],
            model="claude-3-opus-20240229",
        )
        async with completion as stream:
            async for text in stream.text_stream:
                print(text, end="", flush=True)

    await main()

Claude 3 Vision has gotten better over time, and to get the response, you just have to provide a base64-encoded image to the Messages API.

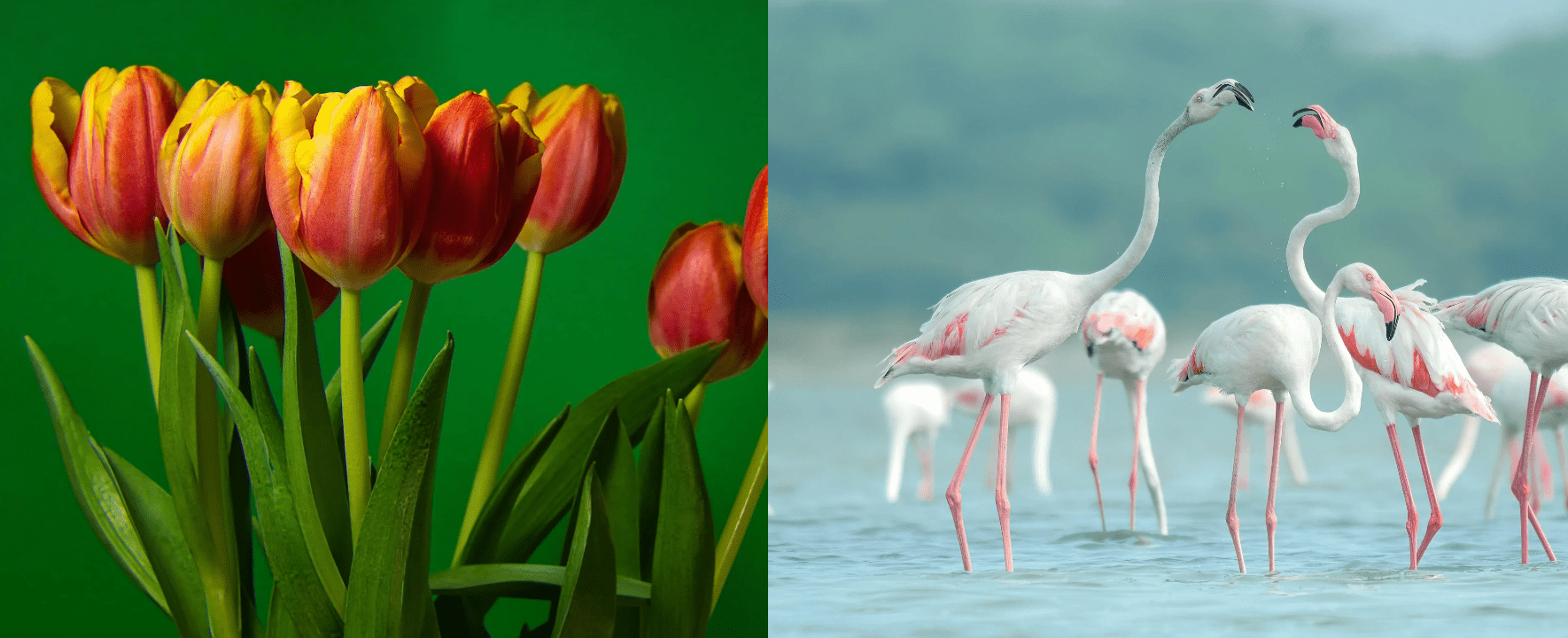

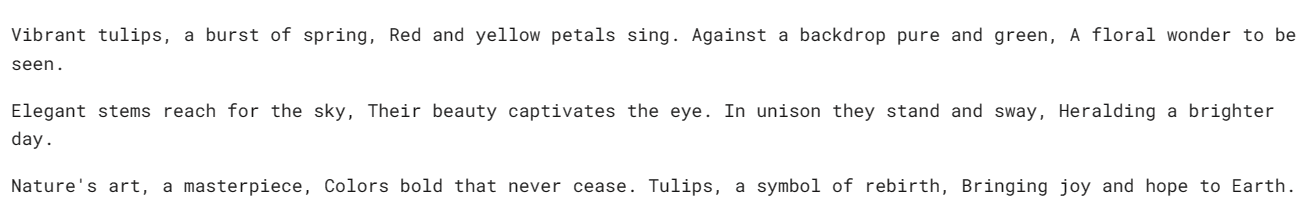

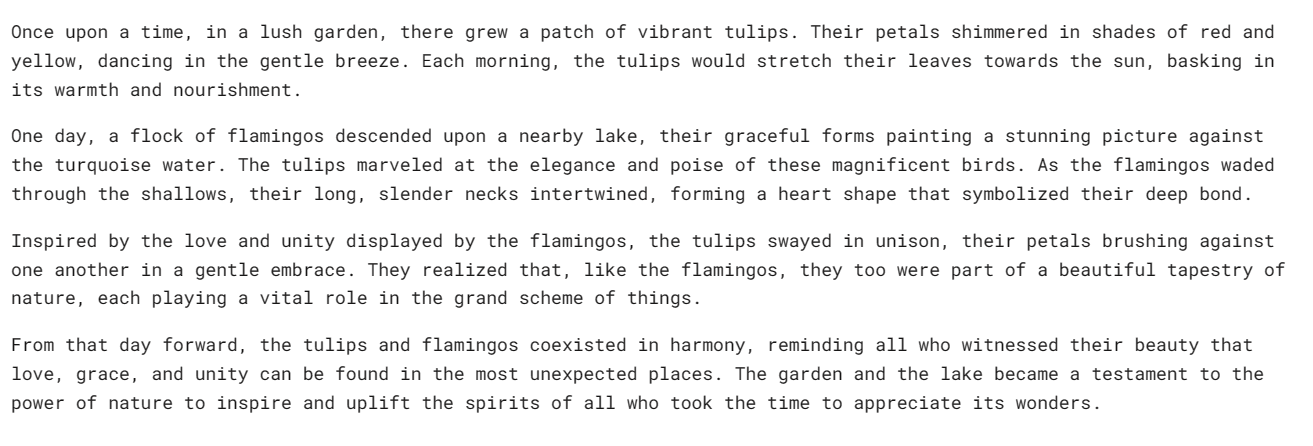

In this example, we will be using Tulips (Image 1) and Flamingos (Image 2) photos from Pexels.com to generate the response by asking questions about the images.

We will use the `httpx` library to fetch both images from pexels.com and convert them to base64 encoding.

    import anthropic
    import base64
    import httpx

    client = anthropic.Anthropic()

    media_type = "image/jpeg"

    # Download each image and encode its bytes as a base64 string
    img_url_1 = "https://images.pexels.com/photos/20230232/pexels-photo-20230232/free-photo-of-tulips-in-a-vase-against-a-green-background.jpeg"
    image_data_1 = base64.b64encode(httpx.get(img_url_1).content).decode("utf-8")

    img_url_2 = "https://images.pexels.com/photos/20255306/pexels-photo-20255306/free-photo-of-flamingos-in-the-water.jpeg"
    image_data_2 = base64.b64encode(httpx.get(img_url_2).content).decode("utf-8")
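The snippet above hardcodes `media_type` because both files are JPEGs. If the images came in mixed formats, one possible approach is to guess the media type from the file extension in the URL. This is only a sketch: `guess_media_type` is a hypothetical helper, the example URLs are made up, and an extension can be wrong, so checking the response's `Content-Type` header would likely be more reliable.

```python
import mimetypes
from urllib.parse import urlparse


def guess_media_type(url: str, default: str = "image/jpeg") -> str:
    """Guess an image media type from the file extension in a URL."""
    # Use only the path so query strings do not confuse the lookup
    path = urlparse(url).path
    media_type, _ = mimetypes.guess_type(path)
    if media_type is None or not media_type.startswith("image/"):
        return default
    return media_type


print(guess_media_type("https://example.com/photos/tulips.jpeg"))
print(guess_media_type("https://example.com/photos/chart.png?size=large"))
```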

We provide base64-encoded images to the Messages API in image content blocks. Please follow the coding pattern shown below to successfully generate the response.

    message = client.messages.create(
        model="claude-3-opus-20240229",
        max_tokens=1024,
        messages=[
            {
                "role": "user",
                "content": [
                    # Image block first, then the text instruction
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,
                            "data": image_data_1,
                        },
                    },
                    {
                        "type": "text",
                        "text": "Write a poem using this image."
                    }
                ],
            }
        ],
    )
    Markdown(message.content[0].text)

We got a wonderful poem about the Tulips.

Let’s try loading multiple images to the same Claude 3 Messages API.

    message = client.messages.create(
        model="claude-3-opus-20240229",
        max_tokens=1024,
        messages=[
            {
                "role": "user",
                "content": [
                    # A text label before each image tells the model which is which
                    {
                        "type": "text",
                        "text": "Image 1:"
                    },
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,
                            "data": image_data_1,
                        },
                    },
                    {
                        "type": "text",
                        "text": "Image 2:"
                    },
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,
                            "data": image_data_2,
                        },
                    },
                    {
                        "type": "text",
                        "text": "Write a short story using these images."
                    }
                ],
            }
        ],
    )
    Markdown(message.content[0].text)

We have a short story about a Garden of Tulips and Flamingos.
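The multi-image request repeats the same block structure for every picture. As a minimal sketch, the repetition can be folded into two small helpers that produce the same "Image N:" label, image block, and closing question layout. `image_block` and `labeled_content` are hypothetical names, and the byte strings are placeholders standing in for downloaded image data.

```python
import base64
from typing import Any, Dict, List


def image_block(raw: bytes, media_type: str = "image/jpeg") -> Dict[str, Any]:
    """Wrap raw image bytes in a base64 image content block."""
    return {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": media_type,
            "data": base64.b64encode(raw).decode("utf-8"),
        },
    }


def labeled_content(images: List[bytes], question: str) -> List[Dict[str, Any]]:
    """Build 'Image N:' labels, image blocks, and a closing text question."""
    content: List[Dict[str, Any]] = []
    for i, raw in enumerate(images, start=1):
        content.append({"type": "text", "text": f"Image {i}:"})
        content.append(image_block(raw))
    content.append({"type": "text", "text": question})
    return content


# Placeholder bytes stand in for real downloaded image data
content = labeled_content(
    [b"tulips", b"flamingos"],
    "Write a short story using these images.",
)
```

The returned list can be used as the `"content"` value of the user message.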

If you’re having trouble running the code, here is a Deepnote workspace where you can review and run the code yourself.

I think Claude 3 Opus is a promising model, though it may not be as fast as GPT-4 and Gemini. I believe paid users may have better speeds.

In this tutorial, we learned about the new model series from Anthropic called Claude 3, reviewed its benchmarks, and tested its vision capabilities. We also learned to generate simple, async, and streaming responses. It is too early to say if it is the best LLM out there, but if we look at the official test benchmarks, we have a new king on the throne of AI.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.