Enterprises should be paying attention to emerging technologies, but they also need to strategize on how to exploit these technologies in line with their ability to handle unproven technologies, Gartner said.

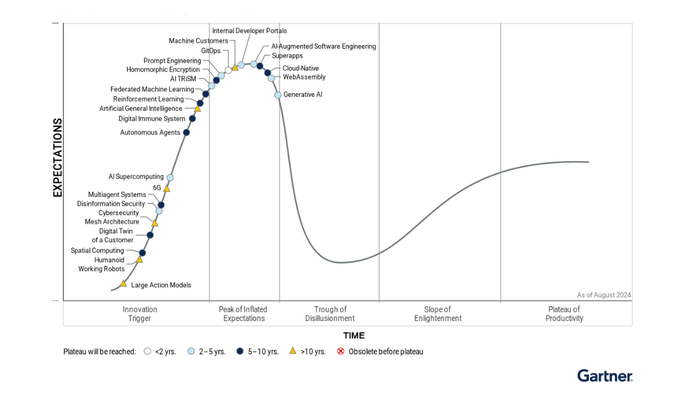

Gartner’s “Hype Cycle for Emerging Technologies, 2024,” released this week, covers autonomous artificial intelligence (AI), developer productivity, total experience, and human-centric security and privacy. Cybersecurity leaders can benefit most by knowing their organizations, including their strengths and weaknesses, before deciding how to incorporate these technologies into the business.

Gartner placed generative AI technology past the “Peak of Inflated Expectations,” highlighting the need for enterprises to consider what return on investment these systems provide. Just last year, organizations were jumping on anything that included generative AI. Now organizations are slowing down to evaluate these technologies against their specific environments and requirements.

“First and foremost, you have to gauge your maturity before you deploy technology,” says Arun Chandrasekaran, distinguished VP analyst at Gartner. “A technology may work very well in one organization but may not work well in another organization.”

In the cybersecurity arena, Gartner calls out human-centric security and privacy, urging organizations to build resilience by creating a culture of mutual trust and shared risk. Security controls often rely on the premise that humans behave securely, when the reality is that employees will bypass overly stringent security controls in order to complete their business tasks.

Getting humans involved early in the technology deployment life cycle and giving teams adequate training can help them work more in step with security technology, Chandrasekaran says.

Emerging technologies supporting human-centric security and privacy include AI TRiSM, cybersecurity mesh architecture, digital immune system, disinformation security, federated machine learning, and homomorphic encryption, according to Gartner.

AI Hype Is Sky High

When it comes to autonomous AI technologies that can operate with minimal human oversight, such as multiagent systems, large action models, machine customers, humanoid working robots, autonomous agents, and reinforcement learning, technology leaders should temper their expectations.

“While the technologies are advancing very rapidly, the expectations and hype around these technologies is also sky-high, which means that there’s going to be some level of unhappiness. There’s going to be some level of disillusionment. That’s inevitable, not because the technology is bad but because of our expectations around it,” Chandrasekaran says. “In the near term, we’re going to see some recalibration in terms of expectations, and some failures in that domain are inevitable.”

Gartner’s Hype Cycle also focuses on tools that can help boost developer productivity, including AI-augmented software engineering, cloud-native, GitOps, internal developer portals, prompt engineering, and WebAssembly.

“We cannot deploy technology for technology’s sake,” Chandrasekaran says. “We have to really deploy it in a manner where the technologies are functioning in a more harmonious way with human beings, and the human beings are trained on the adequate and the appropriate usage of those technologies.”

The “Hype Cycle for Emerging Technologies” is distilled from an analysis of more than 2,000 technologies that Gartner says have the potential to deliver “transformational benefits” over the next two to 10 years.