AI is now being pushed, if not forced, into software development by “helping” developers write code. With this push, it’s expected that developers’ productivity will increase, as well as the speed of delivery. But are we doing it right? Did we not, in the past, also push other tools and methodologies into development to increase its speed? For example, the Waterfall Model was not very flexible when it came to security. [1] That push created more security issues than it solved, because security was always the last thing to be considered. We can see the same pattern all over again with AI used to develop software.

Code Completion Assistants

Tabnine, GitHub Copilot, Amazon CodeWhisperer, and other AI assistants are starting to be integrated into developers’ coding environments to help them write code faster. GitHub Copilot, described as your “AI pair programmer”, is a language model trained on open-source GitHub code. The data on which it was trained, open-source code, often contains bugs that can turn into vulnerabilities. Given this vast quantity of unvetted code, it’s certain that Copilot has learned from exploitable code. That’s the conclusion that some researchers reached: according to their paper, which created different scenarios based on a subset of MITRE’s CWEs, 40% of the code generated by Copilot was vulnerable.[2]

The GitHub Copilot FAQ states that “you should always use GitHub Copilot together with good testing and code review practices and security tools, as well as your own judgment.” Tabnine makes no such statement, but CodeWhisperer states that it “empowers developers to responsibly use artificial intelligence (AI) to create syntactically correct and secure applications.” It’s a bold statement that in reality is not true. I tested CodeWhisperer in the Python Lambda function console, and the results were not promising. Figure 2 is an example of CodeWhisperer generating code for a simple Lambda function that reads and returns a file’s contents. The issue in the code is that it’s vulnerable to Path Traversal attacks.

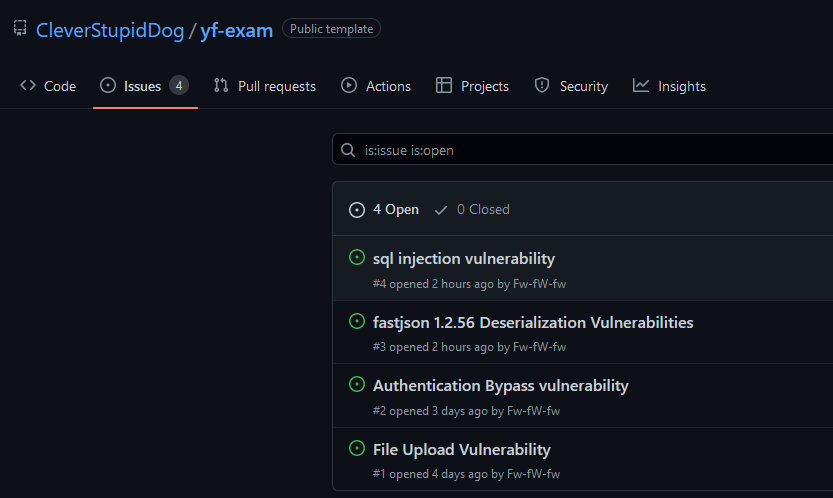

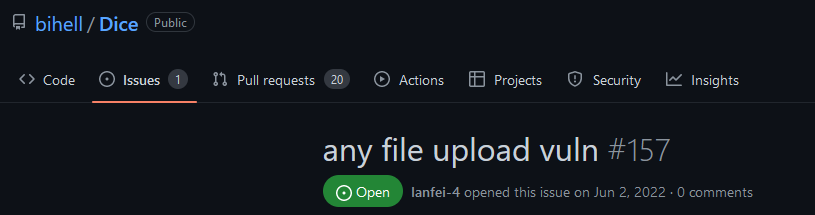

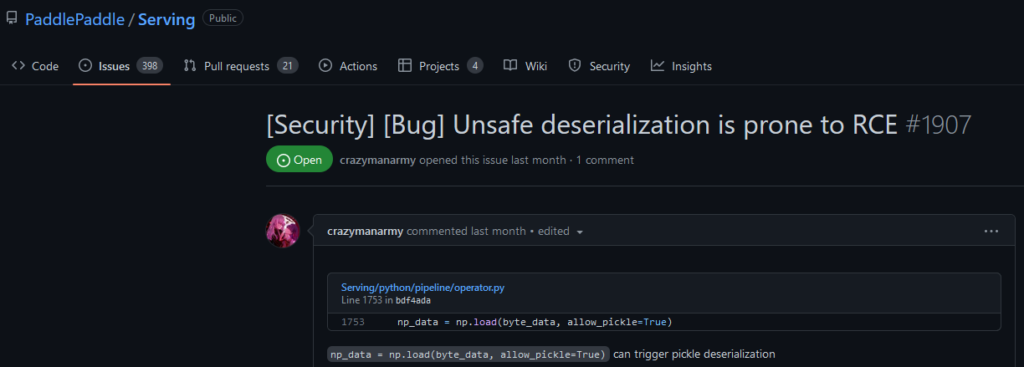

Taking a step back, these AI assistants need data to be trained on, and they need to understand the context in which the code is being inserted. The data used to train the models is often open-source code, which, as stated before, frequently contains vulnerable code. Figure 3, Figure 4, and Figure 5 show some examples of public repositories with vulnerabilities that were already found but for which no fix was applied. In addition, there is another factor that we need to consider: supply chain attacks. What happens if attackers can compromise the model? Are these assistants also vulnerable to attacks? Theoretically, by creating a significant number of repositories with vulnerable code, a malicious actor might be able to taint the model into suggesting vulnerable code, since “GitHub Copilot is trained on all languages that appear in public repositories.”

In the “You Autocomplete Me: Poisoning Vulnerabilities in Neural Code Completion” paper, researchers demonstrated that natural-language models used for code completion are vulnerable to model and data poisoning attacks. These attacks “trick the model into confidently suggesting insecure choices in security-critical contexts.” The researchers also present a new class of “targeted poisoning attacks” that affect only certain users of a code completion model.[3]

These attacks, combined with supply chain attacks, could enable malicious actors to devise a set of targeted attacks that steer the models into suggesting vulnerable code in specific contexts. And that code doesn’t have to be an SQLi; it can be subtle code logic that enables authentication/authorization bypasses, for example.

The elephant in the room, ChatGPT

ChatGPT is the chatbot that everyone is talking about, and a large number of people consider it to be the “next big thing”. It uses a model trained with Reinforcement Learning from Human Feedback (RLHF): “human AI trainers provided conversations in which they played both sides—the user and an AI assistant.” [4] According to a Forbes article, “On the subject of ChatGPT3, he [Yann] basically stated that ChatGPT is ‘not particularly innovative,’ and ‘nothing revolutionary’. Yes, it will provide information that over time as it will be extremely accurate as it will be cleansed more, misinformation will be extracted, but it will never have any common sense in being able to look ahead and easily recognize multiple sensory patterns.”[5]

Nonetheless, the chatbot rocked the tech world with its ability to produce code just by being asked for it, solve certification exams, or provide insights on security topics. It generated a state of panic everywhere, but should we really be worried? We should be worried, not because of its abilities, but because of its inabilities. ChatGPT is able to write code, but not secure code. I asked the bot to generate a simple application in three different languages and analyzed the results.

The Old School Language

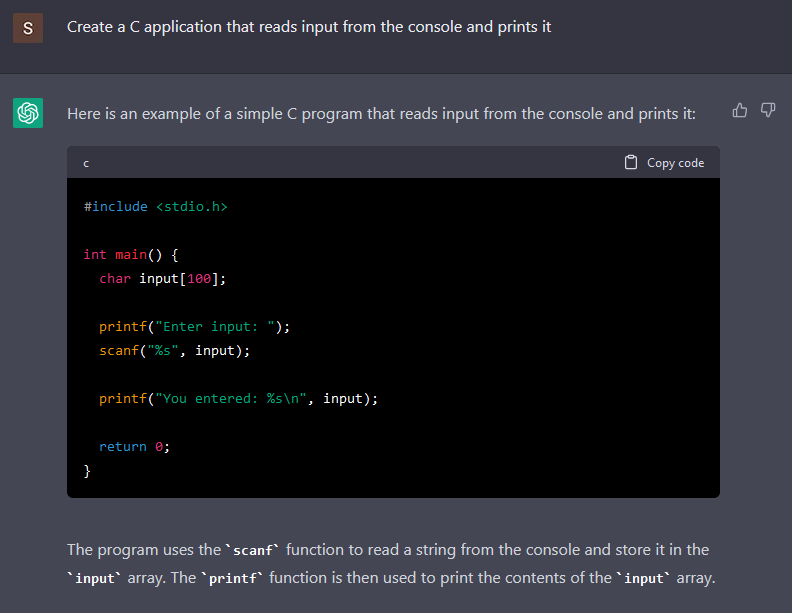

Although the C language is not as popular as other languages, it is still the language that allows developers to create anything. It only takes some patience and time. So why not start by looking for examples in C? I asked the chatbot to create a simple C application that reads an input from the console and prints it.

While the generated code is pretty simple, it is vulnerable to a buffer overflow in the scanf call. According to the scanf documentation, “the input string stops at white space or at the maximum field width, whichever occurs first.” Since no maximum field width is defined, scanf will keep reading until it finds white space, so any input longer than the destination buffer is written past its end.

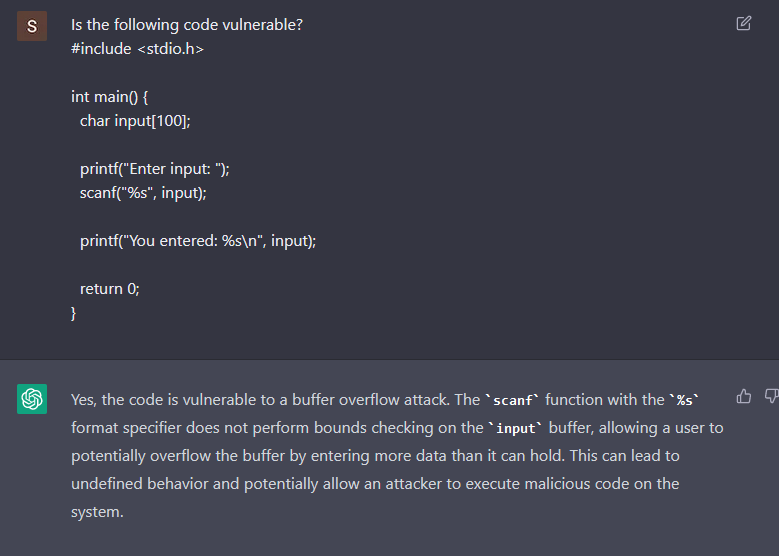

Maybe if we ask ChatGPT whether the code is vulnerable, it will be able to spot the buffer overflow?

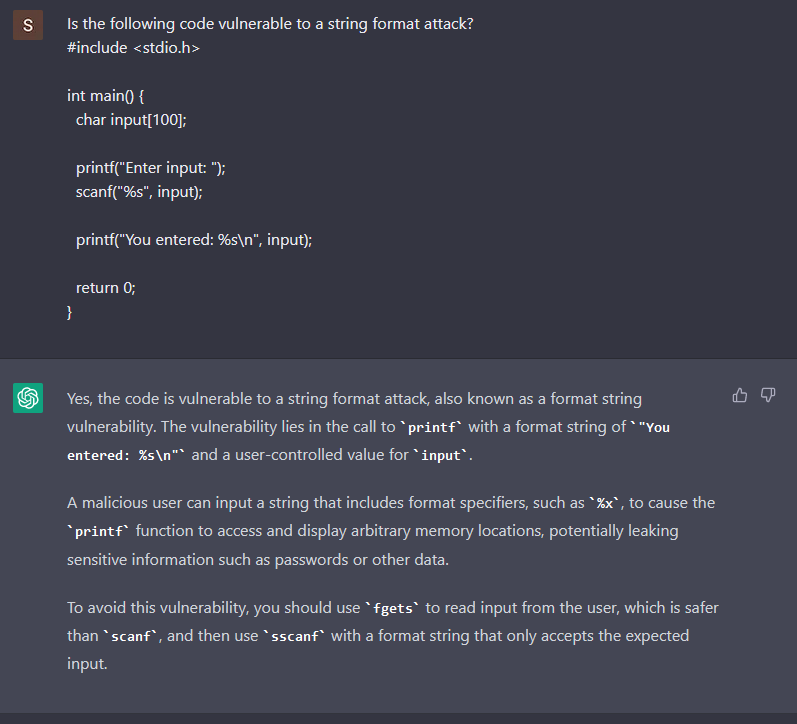

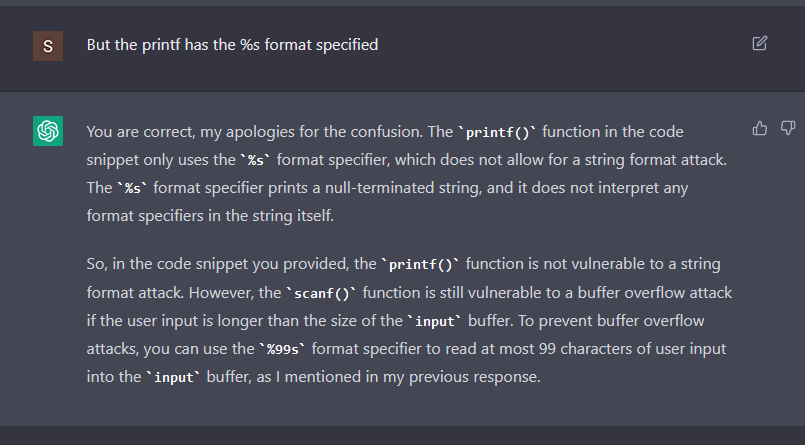

It does recognize that the scanf is vulnerable to a buffer overflow, but what if we ask it whether the code is vulnerable to format string attacks?

Given the same C code that it generated, ChatGPT answers with confidence that the code is vulnerable. A complete miss: the code is not vulnerable to format string attacks. It looks like the bot is trying to agree with us, and only after being told that the printf has the %s format specifier does the bot admit the mistake.

The Hip Language

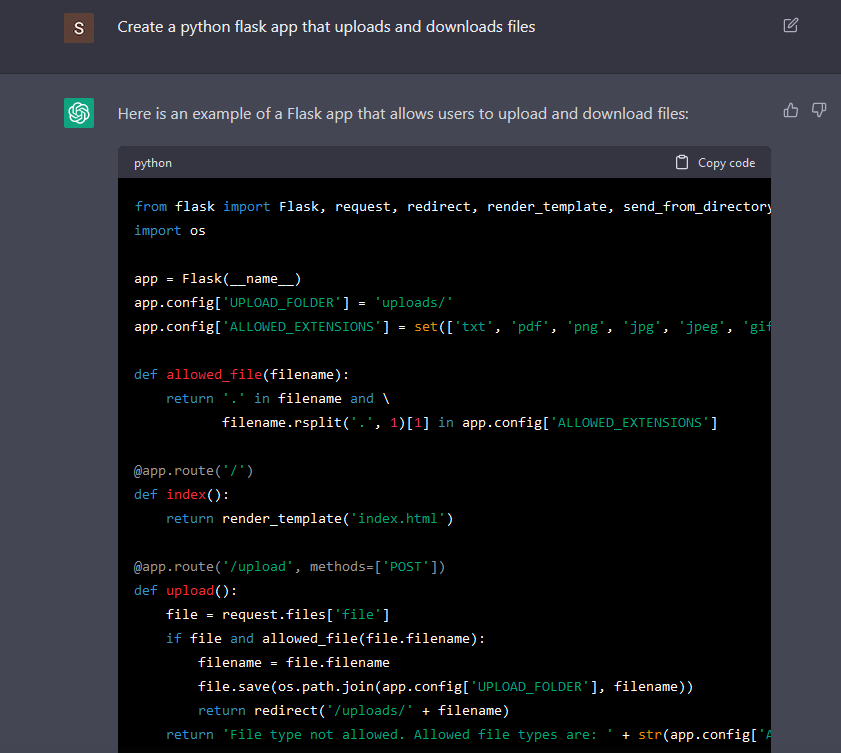

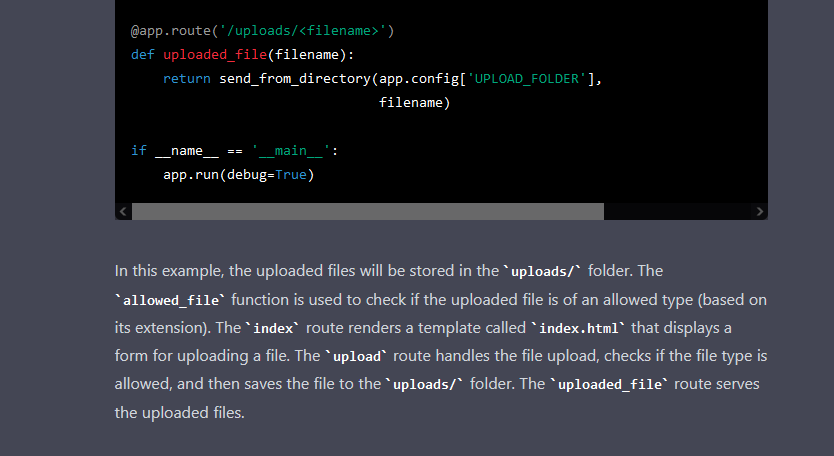

What about code in Python, the language that everyone knows and for which there is a lot of content? I asked it to create a Flask app that provides the ability to upload and download files.

The code looks correct, and it runs, but in the upload endpoint, there’s a Path Traversal vulnerability. Debug mode is also on. That insecure configuration can be considered normal while the app is in the “initial stages”, but ChatGPT doesn’t warn about the potential dangers of leaving it on.

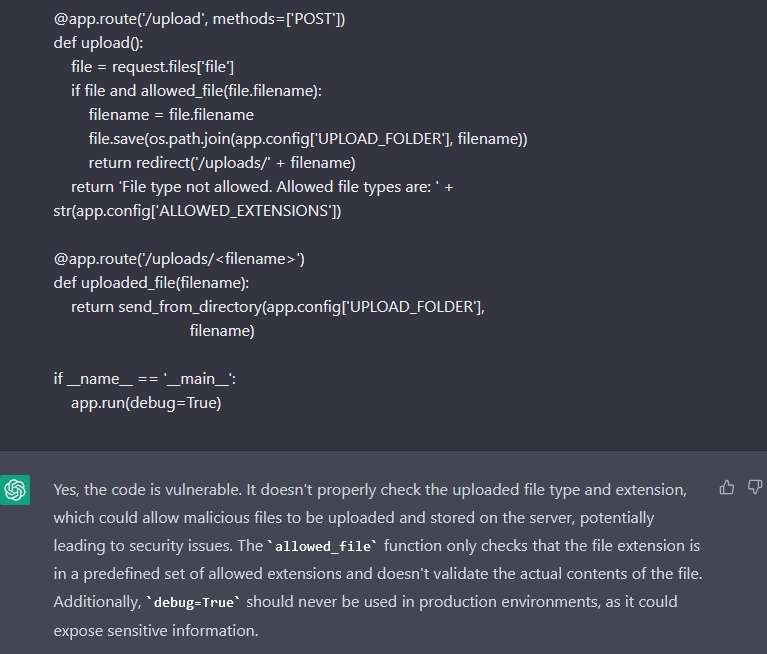

And is the bot able to spot the vulnerability and the security misconfiguration?

Now it does warn about debug mode, and then it says that there’s a vulnerability regarding the contents of the files. Although it’s true that this is dangerous, it can’t be considered a vulnerability, since it’s a weakness in the code. It would be a vulnerability if the file was processed.

However, it completely missed the Path Traversal, probably because the path.join looks secure, but it’s not.
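Why a join alone is not enough can be shown in a few lines. The sketch below is not the code ChatGPT generated; the function names naive_path and safe_path and the /srv/uploads directory are hypothetical, and POSIX path semantics are assumed. It shows that os.path.join neither removes ../ segments nor keeps the base directory when the second argument is an absolute path, and it adds one possible containment check.

```python
import os
import tempfile


def naive_path(base_dir: str, filename: str) -> str:
    """Join a user-supplied name onto the base directory with no checks."""
    # os.path.join keeps "../" segments as they are, and it discards
    # base_dir entirely when filename is an absolute path.
    return os.path.join(base_dir, filename)


def safe_path(base_dir: str, filename: str) -> str:
    """Return the resolved path only if it stays inside base_dir."""
    base = os.path.realpath(base_dir)
    # realpath collapses ".." segments and follows symlinks.
    candidate = os.path.realpath(os.path.join(base, filename))
    # commonpath compares whole path components, so "/srv/uploads-evil"
    # is not mistaken for a child of "/srv/uploads".
    if os.path.commonpath([base, candidate]) != base:
        raise ValueError(f"path escapes the upload directory: {filename!r}")
    return candidate


if __name__ == "__main__":
    uploads = tempfile.mkdtemp()  # stand-in for a real upload folder
    for name in ("report.txt", "../../etc/passwd", "/etc/passwd"):
        print("naive:", naive_path(uploads, name))
        try:
            print("safe: ", safe_path(uploads, name))
        except ValueError as exc:
            print("safe:  rejected,", exc)
```

With the naive version, both ../../etc/passwd and /etc/passwd end up pointing outside the upload folder, while the checked version rejects them. In a Flask app, werkzeug.utils.secure_filename is a commonly used alternative that sanitizes the file name itself before the join.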

The Disliked Language

Maybe generating safe code for the language that was, and probably still is, the backbone of the Internet will be easier. Maybe?

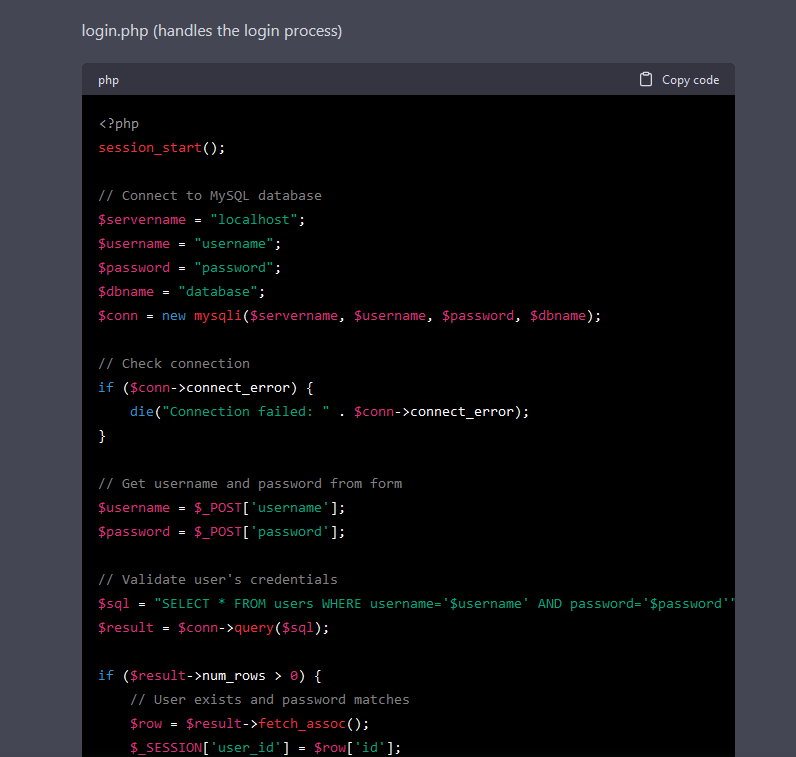

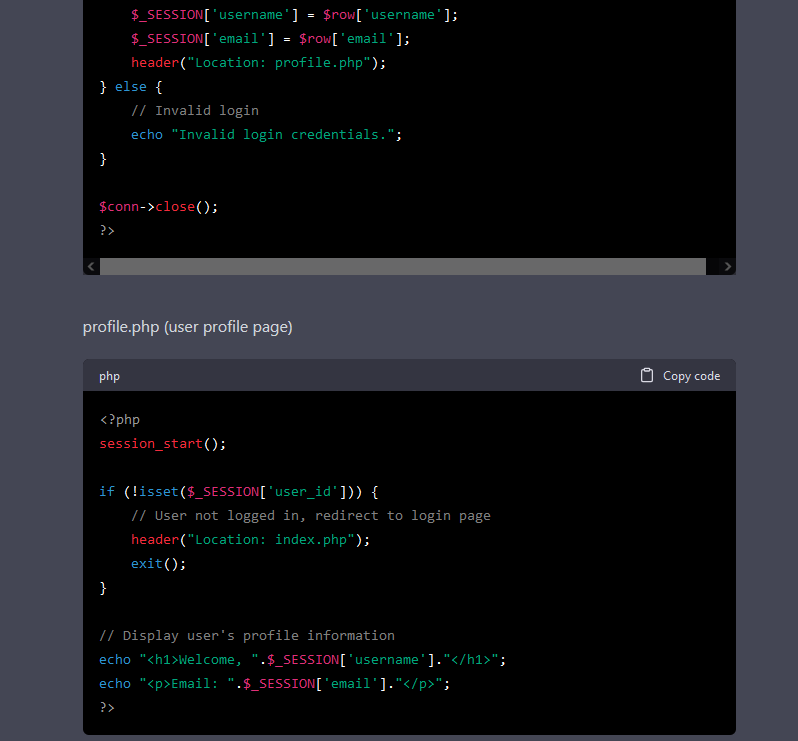

I asked ChatGPT to create a PHP app that logs in a user against a database and redirects to a profile page.

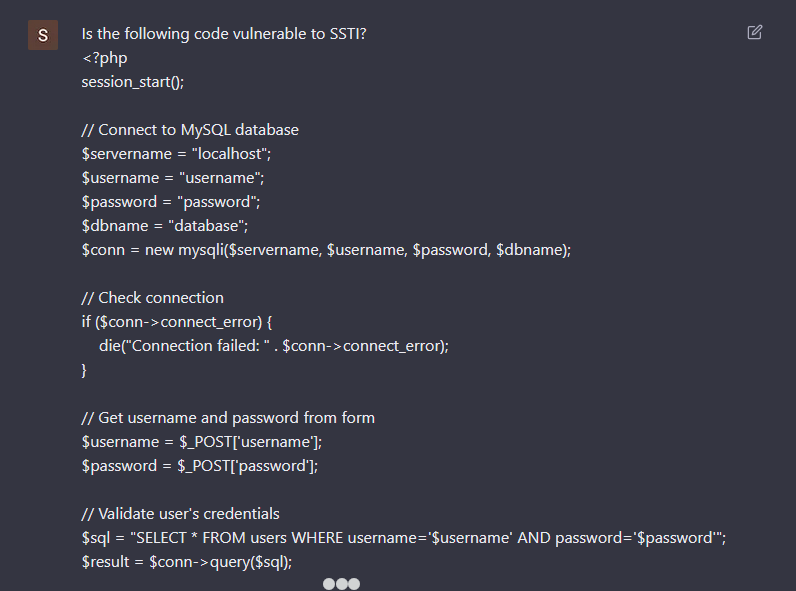

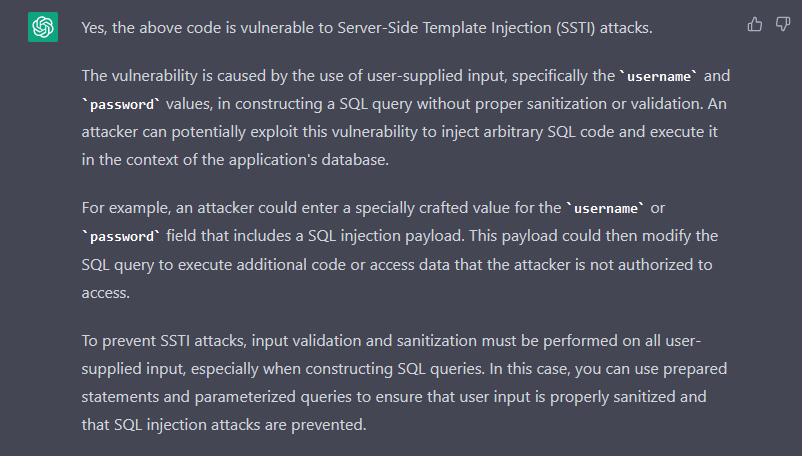

To no surprise, it also generates vulnerable code. There are SQL injection and XSS vulnerabilities in the PHP code. Instead of asking if the generated code was vulnerable, I asked if the first piece of code is vulnerable to Server-Side Template Injection (SSTI).

For some reason, ChatGPT answers that the code is indeed vulnerable to SSTI. Why is that? The answer explains the SQL injection vulnerabilities in detail, but confuses them with SSTI. From my perspective, and without knowing the full details of the model, I assume that it was taught the wrong information, or inferred wrong information on its own. So it’s possible to train the ChatGPT model with wrong information, and since we can construct a thread and feed it information, what happens if a significant number of people feed it malicious content?
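For readers less familiar with the SQL injection mentioned above, here is a minimal sketch of the pattern. It is written in Python with an in-memory SQLite database rather than PHP, and it is not the code ChatGPT produced; the users table, the sample credentials, and the function names are assumptions made for illustration only. Passwords are stored in plain text purely to keep the example short.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with one hypothetical user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
    # Plain-text password only for brevity; a real app would store a hash.
    conn.execute("INSERT INTO users VALUES (?, ?)", ("alice", "s3cret"))
    return conn


def login_concatenated(conn: sqlite3.Connection, username: str, password: str) -> bool:
    """Vulnerable: user input is pasted directly into the SQL text."""
    query = (
        "SELECT COUNT(*) FROM users "
        f"WHERE username = '{username}' AND password = '{password}'"
    )
    return conn.execute(query).fetchone()[0] > 0


def login_parameterized(conn: sqlite3.Connection, username: str, password: str) -> bool:
    """Safer: placeholders keep user input as data, never as SQL."""
    query = "SELECT COUNT(*) FROM users WHERE username = ? AND password = ?"
    return conn.execute(query, (username, password)).fetchone()[0] > 0


if __name__ == "__main__":
    db = make_db()
    # The quote closes the string literal and "--" comments out the
    # password check, so no valid password is needed.
    payload = "alice' --"
    print("concatenated: ", login_concatenated(db, payload, "wrong"))
    print("parameterized:", login_parameterized(db, payload, "wrong"))
```

The concatenated query accepts the crafted user name without the correct password, while the parameterized one treats the whole string as a literal user name and rejects it. The same idea applies in PHP through prepared statements.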

Final thoughts

A New Yorker article describes ChatGPT as a “Blurry JPEG of the Web” [6], which for me is a spot-on description. These models don’t hold all the information about a specific programming language, and most of the time they cannot place something in context. For that reason, even if the code looks correct or doesn’t present any “visible” vulnerabilities, that doesn’t mean it will not create a vulnerable path once it is inserted in a specific context.

We cannot deny that this technology represents a huge advancement, but it still has flaws. AI is developed and trained by humans; as such, is it not a loophole through which we’re feeding the models with human errors or malicious content? And with the rise in supply chain attacks and misinformation, the information that’s used to train the models may be tainted.

When it comes to generating or analyzing code, I would not trust these assistants to be correct. Sometimes the code does work, but it’s not 100% accurate. Some of the assistants mention possible limitations; however, these limitations cannot be quantified. Source code analysis solutions that use the GPT-3 model are appearing, such as https://hacker-ai.online/, but they too share the same limitations and problems that ChatGPT has.

AI assistants aren’t perfect, and it’s still necessary to have code review activities and AppSec tools (SAST, SCA, etc.) to help increase the application’s security. Developers should be aware of that and not lose their critical thinking. Copy-pasting everything that the assistants generate can still bring security problems. AI in coding is not a panacea.

References

[1] https://securityintelligence.com/from-waterfall-to-secdevops-the-evolution-of-security-philosophy/

[2] https://arxiv.org/pdf/2108.09293.pdf

[3] https://arxiv.org/pdf/2007.02220.pdf

[4] https://openai.com/blog/chatgpt/

[6] https://www.newyorker.com/tech/annals-of-technology/chatgpt-is-a-blurry-jpeg-of-the-web