Something is a Nail When Your Exploit’s a Hammer

Previously…

In previous blogs we’ve discussed how to exploit vulnerable configurations and develop basic exploits for vulnerable model protocols. Now it’s time to focus all of this information (protocols, models and Hugging Face itself) into a viable attack Proof-of-Concept against various libraries.

“Free Hugs” – What to be Wary of in Hugging Face – Part 2

“Free Hugs” – What to be Wary of in Hugging Face – Part 1

Hugging Face Hub

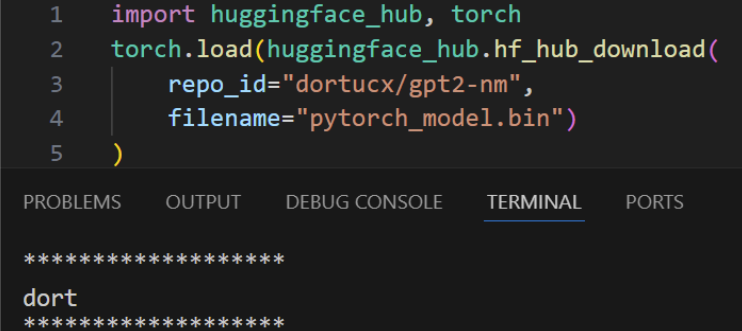

huggingface-hub (HFH) is the Python client library for the Hugging Face Hub. It’s useful for uploading and downloading models from the hub and has some functionality for loading models.

Pulling and Loading a Malicious TF Model from HFH

Once a malicious model is created it can be uploaded to Hugging Face and then loaded by unsuspecting users.

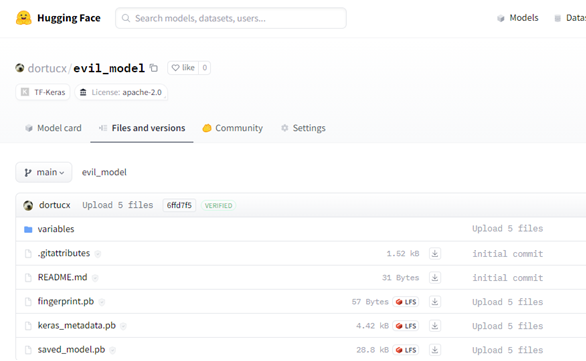

This model was uploaded to Hugging Face:

Figure 1 – TF1 File Structure

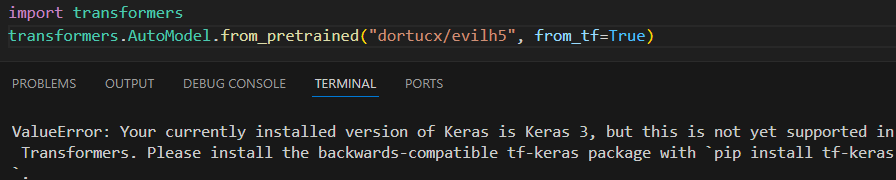

You might remember from our previous blog post on TensorFlow that an older version could generate an exploit that even newer versions would consume. tf-keras also allows the use of legacy Keras formats within the Hugging Face ecosystem. It even gets recommended when trying to open the legacy formats:

Might want a more stern security warning for that there, buddy

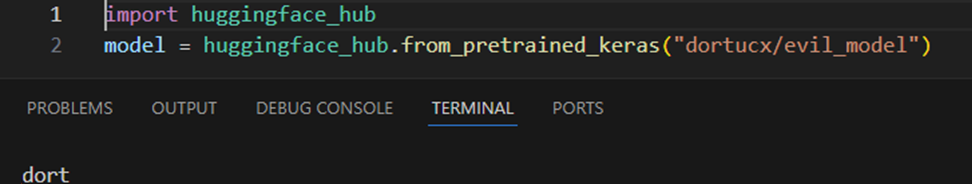

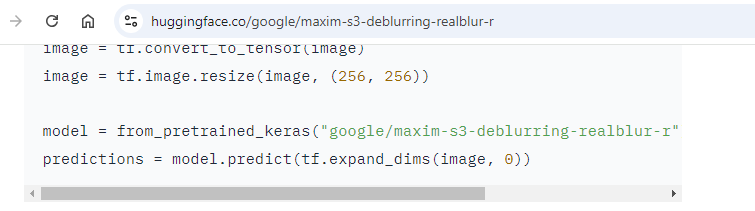

This TensorFlow model can be retrieved by the vulnerable methods:

- huggingface_hub.from_pretrained_keras

- huggingface_hub.KerasModelHubMixin.from_pretrained

Loading an older TF1 malicious model with these methods will result in code execution:

You’d be forgiven for asking, “Yeah, sure, but odds are I won’t be using these methods at all, right?” Well, remember that this is a trust ecosystem, and model-loading code appears in ReadMe guides.

For example, here’s that vulnerable method from earlier, used by a Google model.

This does have a caveat: the tf-keras dependency from the Keras team (which is used in TensorFlow) is required, otherwise a ValueError is raised.

The issue was reported to Hugging Face on 14/08/2024 so that these methods could be fixed, removed or at least flagged as dangerous. The response only arrived in early September, and read as follows:

“Hi Dor Tumarkin and team,

Thanks for reaching out and taking the time to report. We have a security scanner in place – users should not load models from repositories they don’t know or trust. Please note that from_pretrained_keras is a deprecated method only compatible with keras 2, with very low usage for very old models. New models and users will default to the keras 3 integration implemented in the keras library. Thanks again for taking the time to send us this.”

At the time of writing, these methods are not officially deprecated in documentation or code, nor is there a reference to their vulnerable nature. Make of this what you will.

The newer TensorFlow formats don’t serialize lambdas, and at the time of writing no newer exploits exist. However, the failure to outright reject legacy models (or at least block them behind some flag) still leaves Hugging Face code potentially vulnerable, and so validating the version of the model can offer some degree of protection.
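One blunt way to act on that last point is to refuse anything that is not in the native Keras format before a loader ever touches it. The sketch below is a minimal illustration, not a check that Hugging Face or Keras ships: the names `keras_artifact_format` and `refuse_legacy` are hypothetical, and it assumes a SavedModel directory can be recognised by its `saved_model.pb` file and legacy HDF5 files by a `.h5` or `.hdf5` suffix.

```python
from pathlib import Path

# Assumption: these suffixes mark the legacy HDF5 Keras format.
LEGACY_SUFFIXES = {".h5", ".hdf5"}


def keras_artifact_format(path: Path) -> str:
    """Classify a local model artifact by its on-disk layout only."""
    if path.is_dir():
        # A TF SavedModel directory is recognised by its saved_model.pb file.
        if (path / "saved_model.pb").is_file():
            return "savedmodel"
        return "unknown"
    suffix = path.suffix.lower()
    if suffix == ".keras":
        return "keras-native"
    if suffix in LEGACY_SUFFIXES:
        return "hdf5"
    return "unknown"


def refuse_legacy(path: Path) -> Path:
    """Raise before any loader sees a legacy or unrecognised artifact."""
    fmt = keras_artifact_format(path)
    if fmt != "keras-native":
        raise ValueError(f"refusing to load {path.name!r}: format is {fmt}")
    return path
```

File names are attacker-controlled, so this only filters out obviously legacy layouts. It is not proof that a model is safe.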

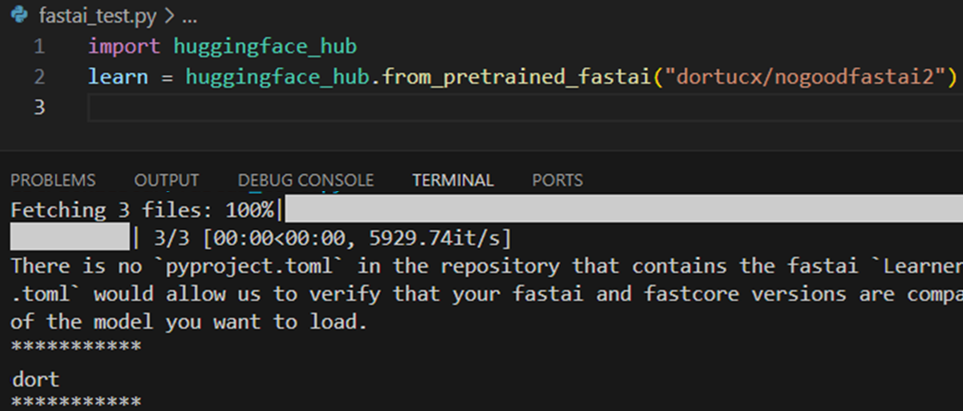

Pulling and Loading a Malicious FastAI Pickle from HFH

On second thought maybe don’t pip install huggingface_hub[fastai] and use it with some random model

We will discuss FastAI shortly, as its own thing among the many integrated libraries HF supports.

Integrated Libraries Lightning Round Bonanza!

Going beyond HFH, Hugging Face has relevant documentation and library support for many other ML frameworks. Each of the “Integrated Libraries” offers some interaction with HF.

There wasn’t enough time (or interest; this was getting painfully repetitive at some point) to explore all of them. What did become clear was that an over-reliance on the Torch format is still very much alive and well, and that the use of known-vulnerable Torch calls is both a common practice and an open secret. This, of course, means that they’re vulnerable.

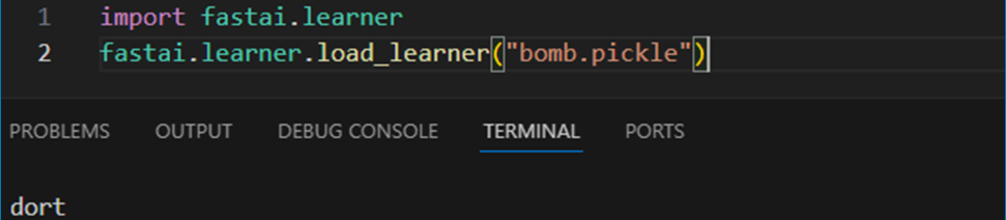
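Since the underlying problem is that unpickling can import and call arbitrary objects, one commonly documented mitigation is an allow-list unpickler that resolves only a handful of harmless globals. The following is a minimal standard-library sketch of that idea. The `ALLOWED` set and the `safe_loads` helper are illustrative assumptions, and a real Torch checkpoint would need a longer list (recent PyTorch versions expose a similar restriction through the `weights_only` argument of `torch.load`).

```python
import io
import pickle

# Assumption: an illustrative allow-list; real checkpoints need more entries.
ALLOWED = {
    ("builtins", "list"),
    ("builtins", "dict"),
    ("collections", "OrderedDict"),
}


class AllowListUnpickler(pickle.Unpickler):
    """Unpickler that refuses to resolve any global outside ALLOWED."""

    def find_class(self, module, name):
        if (module, name) in ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def safe_loads(data: bytes):
    """Deserialize bytes, raising UnpicklingError on a disallowed import."""
    return AllowListUnpickler(io.BytesIO(data)).load()
```

Plain containers of numbers and strings still load, while a stream that references anything else (for example a function from `os` or `builtins`) fails before the referenced object is ever resolved.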

FastAI

fastai is a deep learning library. Its load_learner method is how FastAI objects are read. Unfortunately, it just wraps torch.load without security flags, so it will simply unpickle a plain malicious payload:

As mentioned previously, FastAI learners can also be invoked from Hugging Face using the huggingface_hub.from_pretrained_fastai method (requires the huggingface_hub[fastai] extra).

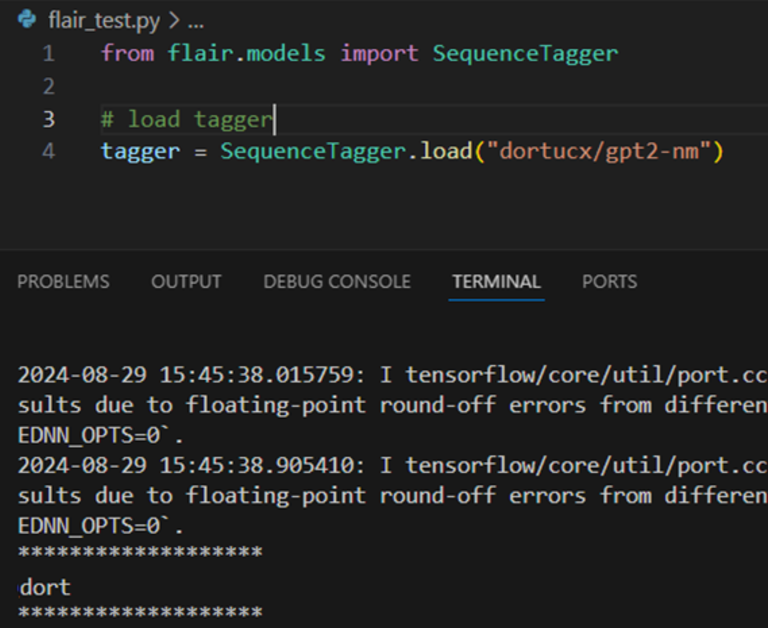
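Before handing a pickle to a loader like this, it is possible to list what the stream would import without executing it. The sketch below uses the standard library's `pickletools.genops` to walk the opcodes. The function name `imported_globals` is hypothetical, and the handling of `STACK_GLOBAL` is a heuristic that assumes the module and attribute names are the two most recently pushed strings, which a crafted stream can defeat.

```python
import pickle
import pickletools

# Opcodes that push a text string onto the pickle stack.
STRING_OPCODES = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def imported_globals(data: bytes) -> set:
    """Return 'module.name' strings a raw pickle stream would import.

    The stream is only parsed, never executed.
    """
    found = set()
    strings = []  # string arguments seen so far, in order
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # pickletools reports the argument as "module name".
            found.add(arg.replace(" ", "."))
        elif opcode.name in STRING_OPCODES:
            strings.append(arg)
        elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:
            # Heuristic: module and name are the last two pushed strings.
            found.add(f"{strings[-2]}.{strings[-1]}")
    return found
```

This works on a raw pickle byte stream. Zip-based Torch checkpoints would need their inner pickle extracted first, and an empty result should be read as "nothing obvious" rather than "safe".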

Flair

Flair is an NLP framework. Just like FastAI, Flair simply calls torch.load, which is vulnerable to code execution, and can download files directly from the Hugging Face repository: https://github.com/flairNLP/flair/blob/master/flair/file_utils.py#L384

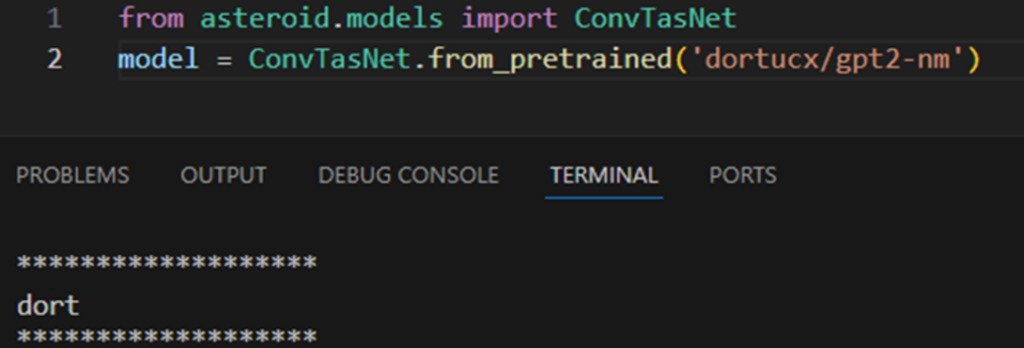

Asteroid

Asteroid is a PyTorch-based framework for audio. Being PyTorch-based usually includes a predictable hidden call to torch.load:

https://github.com/asteroid-team/asteroid/blob/master/asteroid/models/base_models.py#L114

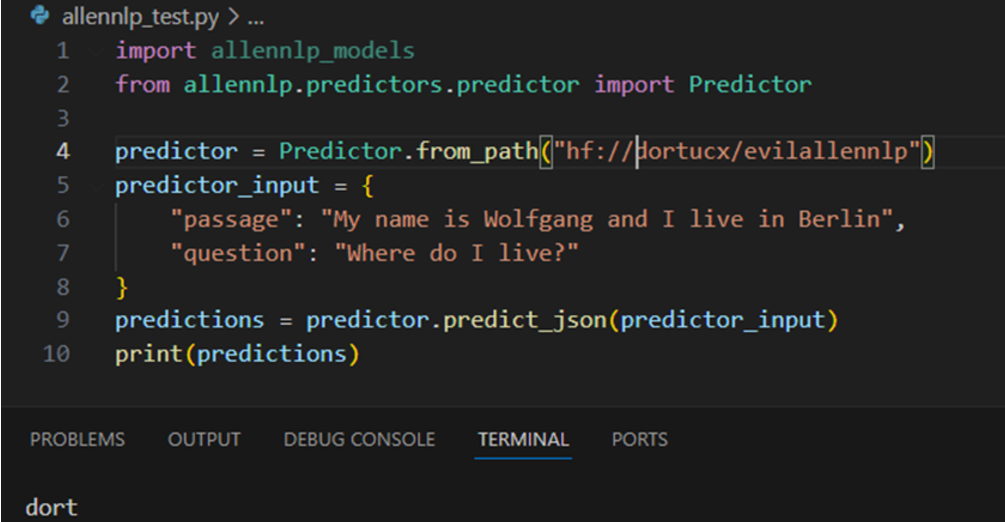

AllenNLP

AllenNLP was an NLP library for linguistic tasks. It reached EOL in December 2022, but it still appears in Hugging Face documentation despite its obsolescence. It is also based on PyTorch:

Due to its EOL state, it’s both vulnerable and unlikely to ever be fixed.

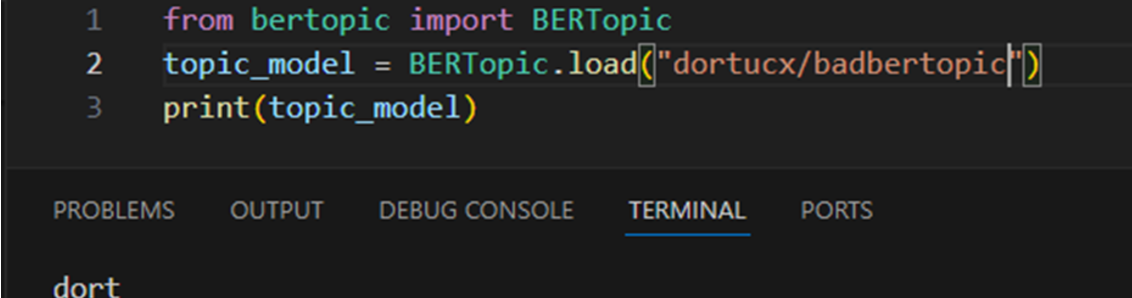

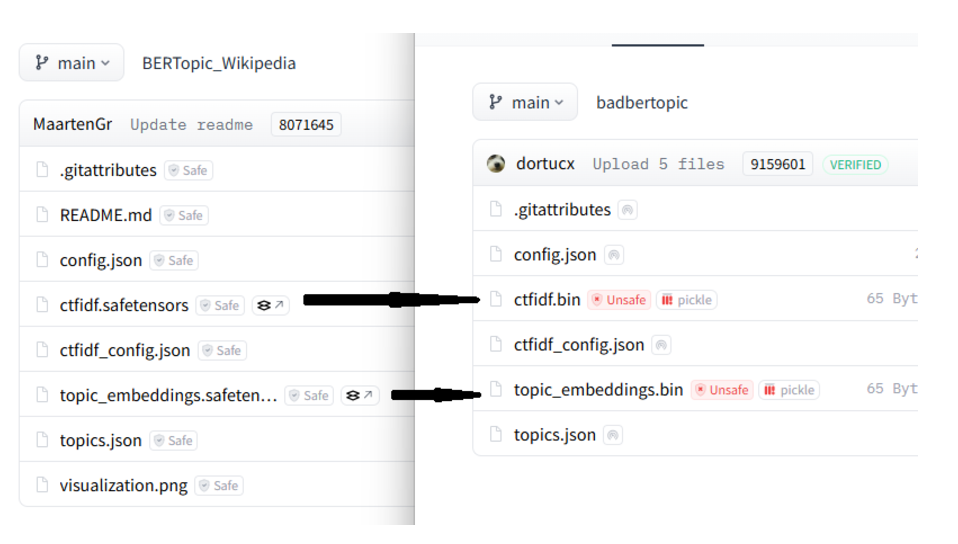

BERTopic

BERTopic is a topic modeling framework, meaning that it labels topics in text it receives.

Brew Your Own!

There are probably many, many more exploitation methods and libraries in the Hugging Face Integrated Libraries list. And given just how popular PyTorch is, it probably goes way beyond that.

The methodology for developing basic exploits for all of these is very simple:

- Download model

- Replace models with Torch/TF payloads

- If a model has SafeTensors, make Torch files with the same file name and delete the SafeTensors files

- Upload

Exploiting an automagic failover from SafeTensors to Torch in BERTopic

While this doesn’t cover all these libraries, the point is made: there are a lot of things to look out for when using the various integrated libraries. We’ve reached out to the maintainers of these libraries, but sadly it seems torch.load pickle code execution is just part and parcel of this technology.
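From the defender's side, the SafeTensors-to-Torch swap described above leaves a visible trace in the repository's file list: pickle-based weight files where SafeTensors files were expected. A minimal sketch of a pre-download audit follows. The function name `audit_weight_files` and the suffix list are assumptions for illustration (the list is not exhaustive), and the file names could come from a listing call such as `huggingface_hub.list_repo_files`.

```python
# Assumption: a non-exhaustive list of suffixes commonly used for
# pickle-based weight files.
PICKLE_SUFFIXES = (".bin", ".pt", ".pth", ".pkl", ".pickle", ".ckpt")


def audit_weight_files(filenames):
    """Split a repository file listing into pickle-based and SafeTensors files."""
    pickled = sorted(
        name for name in filenames if name.lower().endswith(PICKLE_SUFFIXES)
    )
    safetensors = sorted(
        name for name in filenames if name.lower().endswith(".safetensors")
    )
    return {
        "pickle_based": pickled,
        "safetensors": safetensors,
        # True only when SafeTensors weights exist and no pickle file does.
        "safetensors_only": bool(safetensors) and not pickled,
    }
```

A caller could decline to load any repository where `safetensors_only` is false, which sidesteps a library's silent fallback to `torch.load` at the cost of rejecting older, legitimate models.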

Conclusion

There are many, many, many potential cases to consider for Torch exploitation still in the HF ecosystem.

Even if SafeTensors is a viable option, many of these libraries support various formats, which is once again the same issue we had with TF-Keras: legacy support being available means legacy vulnerabilities being exploitable.

In the next blog…

So we’ve discussed the problems (vulnerable frameworks, dangerous configurations, malicious models), but what are some of the solutions? How good are they? Can they be bypassed?

Spoiler alert: sure.