Anthropic researchers efficiently recognized tens of millions of ideas inside Claude Sonnet, one among their superior LLMs.

Their examine peels again the layers of a industrial AI mannequin, on this case, Anthropic‘s personal Claude 3 Sonnet, providing intriguing insights into what lies inside its “black box.”

AI fashions are sometimes thought-about black packing containers, which means you may’t ‘see’ inside them to grasp precisely how they work.

If you present an enter, the mannequin generates a response, however the reasoning behind its selections isn’t clear. Your enter goes in, and the output comes out – and never even AI corporations actually perceive what occurs inside.

Neural networks create their very own inner representations of data after they map inputs to outputs throughout knowledge coaching. The constructing blocks of this course of, known as “neuron activations,” are represented by numerical values.

Every idea is distributed throughout a number of neurons, and every neuron contributes to representing a number of ideas, making it tough to map ideas on to particular person neurons.

That is broadly analogous to our human brains. Simply as our brains course of sensory inputs and generate ideas, behaviors, and recollections, the billions, even trillions, of processes behind these features stay primarily unknown to science.

Anthropic’s examine makes an attempt to see inside AI’s black field with a way known as “dictionary learning.”

This includes decomposing complicated patterns in an AI mannequin into linear constructing blocks or “atoms” that make intuitive sense to people.

Mapping LLMs with Dictionary Studying

In October 2023, Anthropic utilized this technique to a tiny “toy” language mannequin and located coherent options equivalent to ideas like uppercase textual content, DNA sequences, surnames in citations, mathematical nouns, or perform arguments in Python code.

This newest examine scales up the method to work for right this moment’s bigger AI language fashions, on this case, Anthropic‘s Claude 3 Sonnet.

Right here’s a step-by-step of how the examine labored:

Figuring out patterns with dictionary studying

Anthropic used dictionary studying to investigate neuron activations throughout varied contexts and establish frequent patterns.

Dictionary studying teams these activations right into a smaller set of significant “features,” representing higher-level ideas realized by the mannequin.

By figuring out these options, researchers can higher perceive how the mannequin processes and represents info.

Extracting options from the center layer

The researchers targeted on the center layer of Claude 3.0 Sonnet, which serves as a vital level within the mannequin’s processing pipeline.

Making use of dictionary studying to this layer extracts tens of millions of options that seize the mannequin’s inner representations and realized ideas at this stage.

Extracting options from the center layer permits researchers to look at the mannequin’s understanding of data after it has processed the enter earlier than producing the ultimate output.

Discovering numerous and summary ideas

The extracted options revealed an expansive vary of ideas realized by Claude, from concrete entities like cities and other people to summary notions associated to scientific fields and programming syntax.

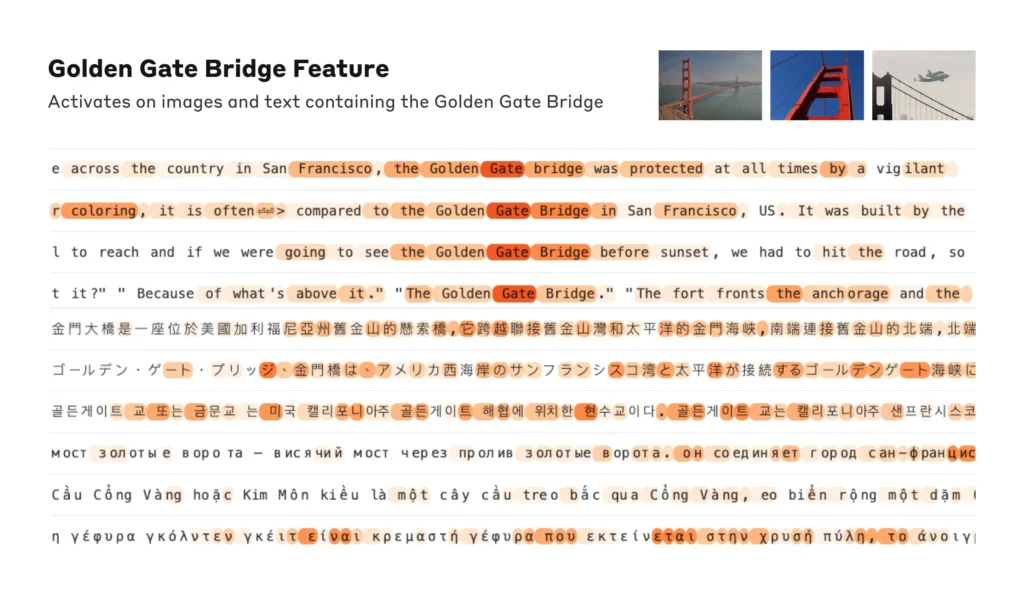

Curiously, the options have been discovered to be multimodal, responding to each textual and visible inputs, indicating that the mannequin can study and symbolize ideas throughout totally different modalities.

Moreover, the multilingual options counsel that the mannequin can grasp ideas expressed in varied languages.

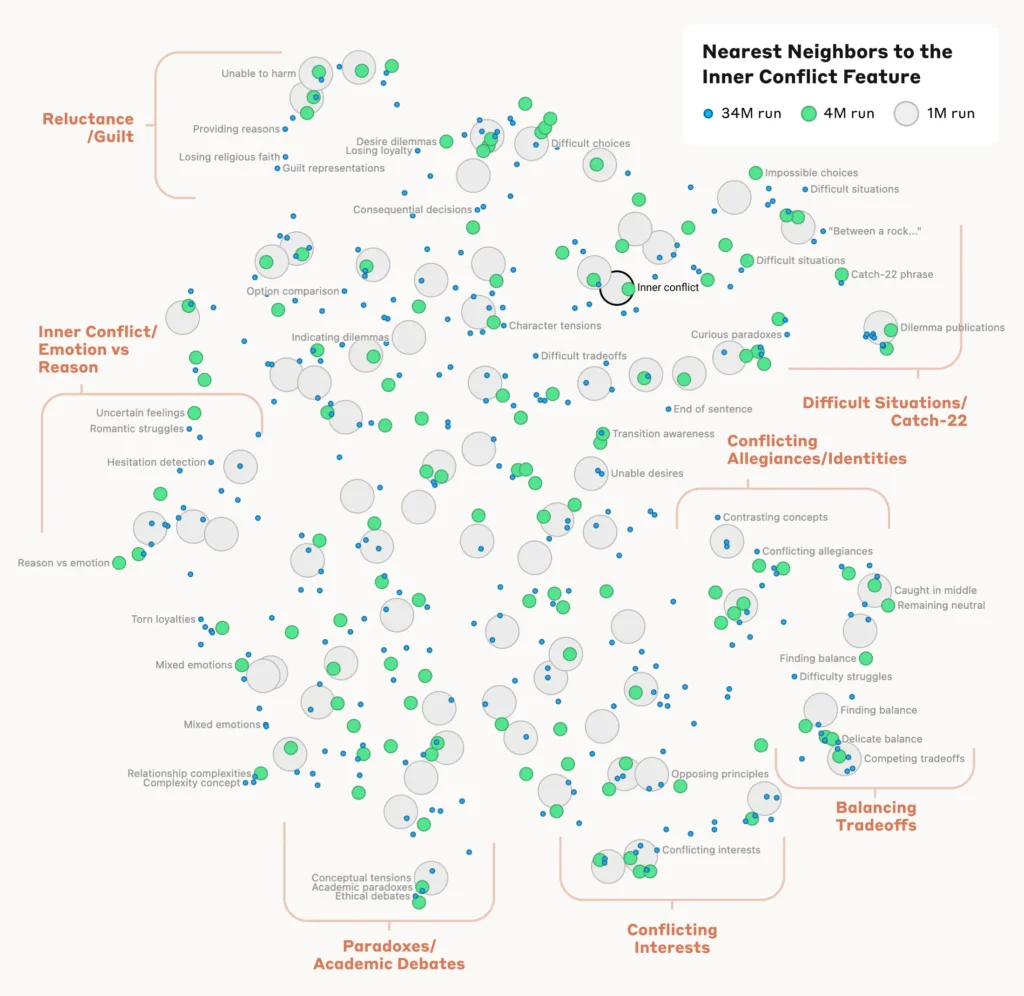

Analyzing the group of ideas

To grasp how the mannequin organizes and relates totally different ideas, the researchers analyzed the similarity between options based mostly on their activation patterns.

They found that options representing associated ideas tended to cluster collectively. For instance, options related to cities or scientific disciplines exhibited increased similarity to one another than to options representing unrelated ideas.

This means that the mannequin’s inner group of ideas aligns, to some extent, with human intuitions about conceptual relationships.

Verifying the options

To substantiate that the recognized options straight affect the mannequin’s habits and outputs, the researchers performed “feature steering” experiments.

This concerned selectively amplifying or suppressing the activation of particular options in the course of the mannequin’s processing and observing the influence on its responses.

By manipulating particular person options, researchers may set up a direct hyperlink between particular person options and the mannequin’s habits. As an illustration, amplifying a function associated to a selected metropolis triggered the mannequin to generate city-biased outputs, even in irrelevant contexts.

Why interpretability is vital for AI security

Anthropic’s analysis is essentially related to AI interpretability and, by extension, security.

Understanding how LLMs course of and symbolize info helps researchers perceive and mitigate dangers. It lays the inspiration for growing extra clear and explainable AI methods.

As Anthropic explains, “We hope that we and others can use these discoveries to make models safer. For example, it might be possible to use the techniques described here to monitor AI systems for certain dangerous behaviors (such as deceiving the user), to steer them towards desirable outcomes (debiasing), or to remove certain dangerous subject matter entirely.”

Unlocking a larger understanding of AI habits turns into paramount as they turn out to be ubiquitous for vital decision-making processes in fields akin to healthcare, finance, and felony justice. It additionally helps uncover the basis reason for bias, hallucinations, and different undesirable or unpredictable behaviors.

For instance, a current examine from the College of Bonn uncovered how graph neural networks (GNNs) used for drug discovery rely closely on recalling similarities from coaching knowledge relatively than actually studying complicated new chemical interactions. This makes it robust to grasp how precisely these fashions decide new drug compounds of curiosity.

Final 12 months, the UK authorities negotiated with main tech giants like OpenAI and DeepMind, in search of deeper entry and understanding of their AI methods’ inner decision-making processes.

Regulation just like the EU’s AI Act will strain AI corporations to be extra clear, although industrial secrets and techniques appear positive to stay underneath lock and key.

Anthropic’s analysis affords a glimpse of what’s contained in the field by ‘mapping’ info throughout the mannequin.

Nevertheless, the reality is that these fashions are so huge that, by Anthropic’s personal admission, “We think it’s quite likely that we’re orders of magnitude short, and that if we wanted to get all the features – in all layers! – we would need to use much more compute than the total compute needed to train the underlying models.”

That’s an fascinating level – reverse engineering a mannequin is extra computationally complicated than engineering the mannequin within the first place.

It’s harking back to vastly costly neuroscience initiatives just like the Human Mind Mission (HBP), which poured billions into mapping our personal human brains solely to finally fail.

By no means underestimate how a lot lies contained in the black field.