The requirements to test AI models, keep humans in the loop, and give people the right to challenge automated decisions made by AI are just some of the 10 mandatory guardrails proposed by the Australian government as ways to minimise AI risk and build public trust in the technology.

Launched for public consultation by Industry and Science Minister Ed Husic in September 2024, the guardrails could soon apply to AI used in high-risk settings. They’re complemented by a new Voluntary AI Safety Standard designed to encourage businesses to adopt best practice AI immediately.

What are the mandatory AI guardrails being proposed?

Australia’s 10 proposed mandatory guardrails are designed to set clear expectations on how to use AI safely and responsibly when developing and deploying it in high-risk settings. They seek to address risks and harms from AI, build public trust, and provide businesses with greater regulatory certainty.

Guardrail 1: Accountability

Similar to requirements in both Canadian and EU AI legislation, organisations will need to establish, implement, and publish an accountability process for regulatory compliance. This would include aspects like policies for data and risk management and clear internal roles and responsibilities.

Guardrail 2: Risk management

A risk management process to identify and mitigate the risks of AI will need to be established and implemented. This must go beyond a technical risk assessment to consider potential impacts on people, community groups, and society before a high-risk AI system can be put into use.

Guardrail 3: Data protection

Organisations will need to protect AI systems to safeguard privacy with cybersecurity measures, as well as build robust data governance measures to manage the quality of data and where it comes from. The government observed that data quality directly impacts the performance and reliability of an AI model.

Guardrail 4: Testing

High-risk AI systems will need to be tested and evaluated before being placed on the market. They will also need to be continuously monitored once deployed to ensure they operate as expected. This is to ensure they meet specific, objective, and measurable performance metrics and that risk is minimised.

Guardrail 5: Human control

Meaningful human oversight will be required for high-risk AI systems. This will mean organisations must ensure humans can effectively understand the AI system, oversee its operation, and intervene where necessary across the AI supply chain and throughout the AI lifecycle.

Guardrail 6: User information

Organisations will need to inform end-users if they are the subject of any AI-enabled decisions, are interacting with AI, or are consuming any AI-generated content, so they know how AI is being used and where it affects them. This will need to be communicated in a clear, accessible, and relevant manner.

Guardrail 7: Challenging AI

People negatively impacted by AI systems will be entitled to challenge their use or outcomes. Organisations will need to establish processes for people impacted by high-risk AI systems to contest AI-enabled decisions or to make complaints about their experience or treatment.

Guardrail 8: Transparency

Organisations must be transparent with the AI supply chain about data, models, and systems to help them effectively address risk. This is because some actors may lack critical information about how a system works, leading to limited explainability, similar to problems with today’s advanced AI models.

Guardrail 9: AI records

Keeping and maintaining a range of records on AI systems, including technical documentation, will be required throughout their lifecycle. Organisations must be ready to give these records to relevant authorities on request and for the purpose of assessing their compliance with the guardrails.

Guardrail 10: AI assessments

Organisations will be subject to conformity assessments, described as an accountability and quality-assurance mechanism, to show they have adhered to the guardrails for high-risk AI systems. These will be carried out by the AI system developers, third parties, or government entities or regulators.

When and how will the 10 new mandatory guardrails come into force?

The mandatory guardrails are subject to a public consultation process until Oct. 4, 2024.

After this, the government will seek to finalise the guardrails and bring them into force, according to Husic, who added that this could include the possible creation of a new Australian AI Act.

Other options include:

- Adapting existing regulatory frameworks to include the new guardrails.

- Introducing framework legislation with associated amendments to existing legislation.

Husic has said the government will do this “as soon as we can.” The guardrails were born out of a longer consultation process on AI regulation that has been ongoing since June 2023.

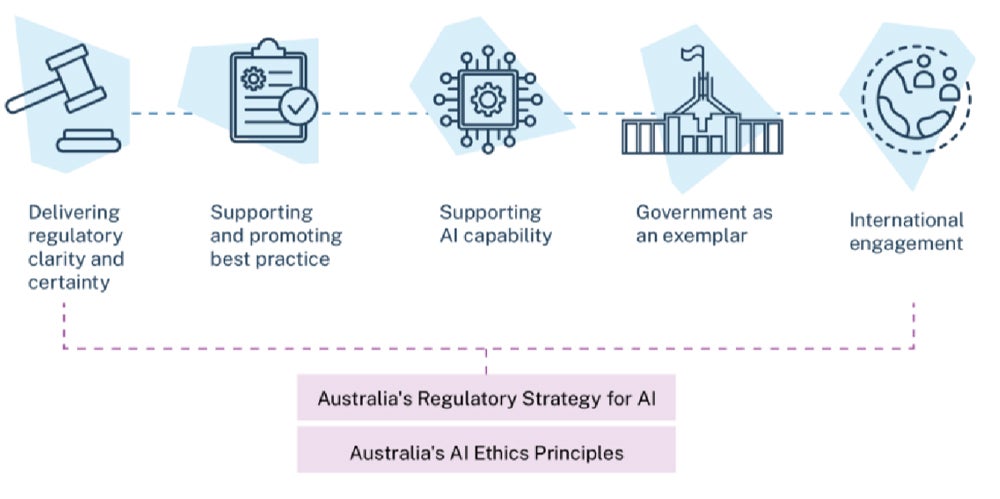

Why is the government taking this approach to regulation?

The Australian government is following the EU in taking a risk-based approach to regulating AI. This approach seeks to balance the benefits that AI promises to bring against the risks of deploying it in high-risk settings.

Focusing on high-risk settings

The preventative measures proposed in the guardrails seek “to avoid catastrophic harm before it occurs,” the government explained in its Safe and responsible AI in Australia proposals paper.

The government will define high-risk AI as part of the consultation. However, it suggests that it will consider scenarios like adverse impacts on an individual’s human rights, adverse impacts on physical or mental health or safety, and legal effects such as defamatory material, among other potential risks.

Businesses need guidance on AI

The government claims businesses need clear guardrails to implement AI safely and responsibly.

The newly released Responsible AI Index 2024, commissioned by the National AI Centre, shows that Australian businesses consistently overestimate their capability to employ responsible AI practices.

The index found:

- 78% of Australian businesses believed they were implementing AI safely and responsibly, but this was correct in only 29% of cases.

- Australian organisations are adopting only 12 out of 38 responsible AI practices on average.

What should businesses and IT teams do now?

The mandatory guardrails will create new obligations for organisations using AI in high-risk settings.

IT and security teams are likely to be engaged in meeting some of these requirements, including data quality and security obligations, and ensuring model transparency through the supply chain.

The Voluntary AI Safety Standard

The government has released a Voluntary AI Safety Standard that is available for businesses now.

IT teams that want to be prepared can use the AI Safety Standard to help bring their businesses up to speed with obligations under any future legislation, which may include the new mandatory guardrails.

The AI Safety Standard includes advice on how businesses can apply and adopt the standard through specific case-study examples, including the common use case of a general purpose AI chatbot.