Apple unveils ‘Apple Intelligence’ for iPhone, iPad, and Mac devices while offering a $1 million bug bounty to cybersecurity experts who find flaws in its Private Cloud Compute servers, strengthening privacy and AI security on iOS and macOS.

Apple today unveiled its highly anticipated AI-powered service, Apple Intelligence, for iPhone, iPad, and Mac. The service, set to arrive with iOS 18.1, iPadOS 18.1, and macOS Sequoia 15.1, combines on-device processing with powerful cloud-based computing to handle complex AI tasks.

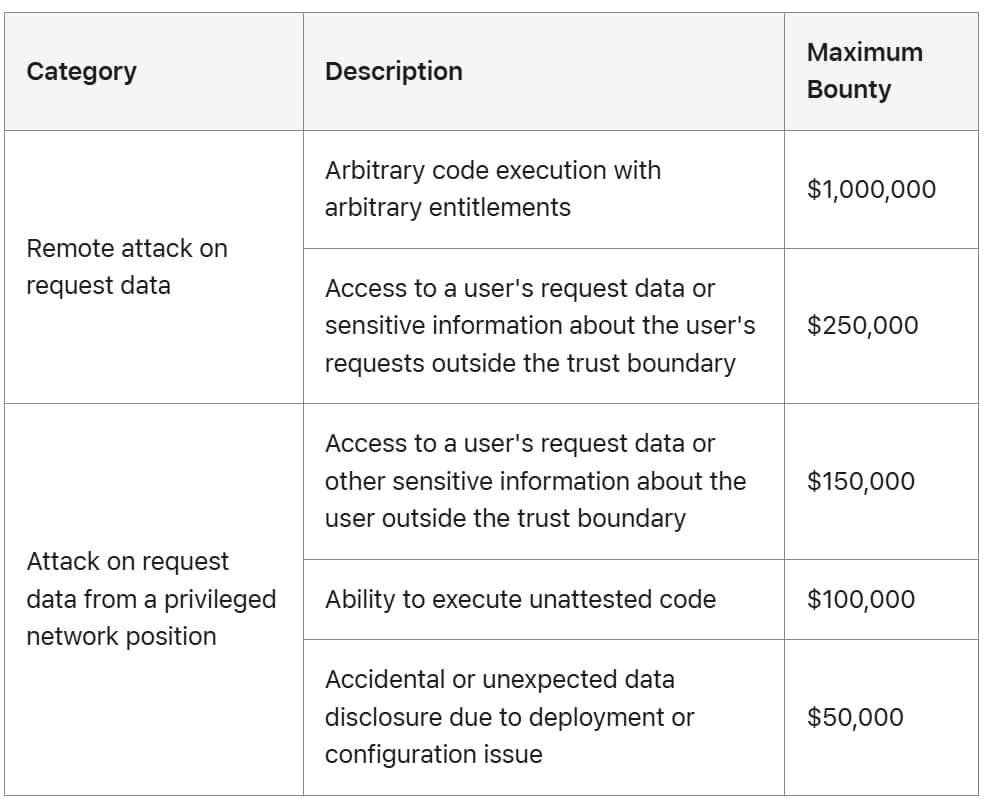

To ensure the security of these cloud-based systems, Apple has launched a comprehensive bug bounty program. The tech giant is offering rewards of up to $1 million to security researchers who can successfully identify and exploit vulnerabilities in its Private Cloud Compute servers.

These servers, designed specifically for Apple Intelligence, process intensive AI tasks that individual devices cannot handle on their own, which makes Private Cloud Compute (PCC) a critical component of the service. The bounty program is intended to strengthen the security of the PCC servers that will underpin it.

“We believe Private Cloud Compute is the most advanced security architecture ever deployed for cloud AI compute at scale, and we look forward to working with the research community to build trust in the system and make it even more secure and private over time,” Apple said in its official statement.

Apple has taken significant steps to prioritize user privacy and security. To further build trust, Apple has also opened a Virtual Research Environment (VRE) to researchers and security enthusiasts.

The VRE provides access to the PCC software, allowing researchers to examine its code and security measures and identify potential weaknesses.

The iPhone maker has also published a comprehensive security guide detailing PCC's security architecture and how it protects user data. Together with the VRE, the guide gives security researchers a solid foundation for digging deep into the system and uncovering potential weaknesses.

The program offers a range of rewards, including:

- $50,000 for accidental or unexpected data disclosure
- $100,000 for executing unattested code
- $150,000 for access to user request data or sensitive information outside the trust boundary from a privileged network position
- $250,000 for the same kind of access achieved through a remote attack
- $1 million for arbitrary code execution without the user's permission or knowledge

Apple also promises to consider awarding money for any security issue that significantly impacts PCC, even if it doesn't fit a published category.

By offering substantial rewards for critical vulnerabilities, Apple is encouraging the global cybersecurity community to help secure its AI infrastructure. The company is particularly interested in vulnerabilities that could compromise user data, execute code without permission, or exploit flaws in the system's design.

By inviting the security community to scrutinize its infrastructure, Apple is also demonstrating a commitment to transparency and security. To learn more about the Apple Bug Bounty Program, visit this link.
