Because large language models operate using neuron-like structures that may link many different concepts and modalities together, it can be difficult for AI developers to adjust their models to change the models’ behavior. If you don’t know what neurons connect what concepts, you won’t know which neurons to change.

On May 21, Anthropic created a remarkably detailed map of the inner workings of the fine-tuned version of its Claude 3 Sonnet 3.0 model. With this map, the researchers can explore how neuron-like data points, called features, affect a generative AI’s output. Without it, people are only able to see the output itself.

Some of these features are “safety relevant,” meaning that if people can reliably identify these features, it could help tune generative AI to avoid potentially dangerous topics or actions. The features are useful for adjusting classification, and classification could impact bias.

What did Anthropic discover?

Anthropic’s researchers extracted interpretable features from Claude 3, a current-generation large language model. Interpretable features can be translated from the numbers readable by the model into human-understandable concepts.

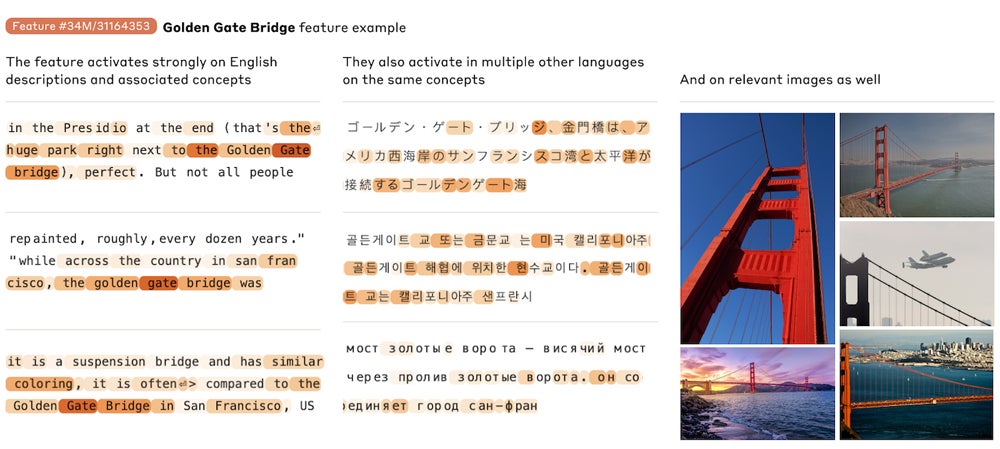

Interpretable features may apply to the same concept in different languages and to both images and text.

“Our high-level goal in this work is to decompose the activations of a model (Claude 3 Sonnet) into more interpretable pieces,” the researchers wrote.

“One hope for interpretability is that it can be a kind of ‘test set for safety,’ which allows us to tell whether models that appear safe during training will actually be safe in deployment,” they said.

Features are produced by sparse autoencoders, a type of neural network trained to reconstruct its input while keeping only a small number of internal units active at a time. During training, sparse autoencoders are guided by, among other things, scaling laws. So, identifying features can give the researchers a look into the rules governing what topics the AI associates together. To put it very simply, Anthropic used sparse autoencoders to reveal and analyze features.
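As a rough illustration of the idea above, the following Python sketch shows the forward pass and training objective of a toy sparse autoencoder. The dimensions, random weights, `l1_coeff` value and function names are illustrative assumptions, not details from Anthropic’s paper, and bias terms and the training loop are omitted for brevity.

```python
import random

random.seed(0)

D_MODEL = 4      # width of the toy activation vector (assumed)
N_FEATURES = 8   # number of features, larger than D_MODEL (assumed)


def random_matrix(rows, cols):
    """Build a rows x cols matrix of small random weights."""
    return [[random.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


# Random weights stand in for trained parameters.
W_enc = random_matrix(N_FEATURES, D_MODEL)   # one encoder row per feature
W_dec = random_matrix(N_FEATURES, D_MODEL)   # one decoder direction per feature


def encode(x):
    """Feature activations: ReLU of each encoder row dotted with x."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W_enc]


def decode(features):
    """Reconstruction: feature-weighted sum of decoder directions."""
    return [
        sum(f * W_dec[i][j] for i, f in enumerate(features))
        for j in range(D_MODEL)
    ]


def sae_loss(x, l1_coeff=0.01):
    """Squared reconstruction error plus an L1 penalty that favors few active features."""
    features = encode(x)
    x_hat = decode(features)
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    sparsity = sum(features)  # activations are non-negative, so this is the L1 norm
    return reconstruction + l1_coeff * sparsity


x = [0.3, -1.2, 0.8, 0.1]   # a made-up activation vector
features = encode(x)
loss = sae_loss(x)
```

Training would adjust `W_enc` and `W_dec` to lower this loss over many activation vectors. The sparsity penalty is what encourages each feature to fire only for a narrow set of inputs, which is part of what makes the features easier to interpret.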

“We find a diversity of highly abstract features,” the researchers wrote. “They (the features) both respond to and behaviorally cause abstract behaviors.”

The details of the hypotheses used to try to figure out what is going on under the hood of LLMs can be found in Anthropic’s research paper.

How manipulating features affects bias and cybersecurity

Anthropic found three distinct features that might be relevant to cybersecurity: unsafe code, code errors and backdoors. These features might activate in conversations that do not involve unsafe code; for example, the backdoor feature activates for conversations or images about “hidden cameras” and “jewelry with a hidden USB drive.” But Anthropic was able to experiment with “clamping” these specific features (put simply, increasing or decreasing their intensity), which could help tune models to avoid or tactfully handle sensitive security topics.

Claude’s bias or hateful speech can be tuned using feature clamping, but Claude will resist some of its own statements. Anthropic’s researchers “found this response unnerving,” anthropomorphizing the model when Claude expressed “self-hatred.” For example, Claude might output “That’s just racist hate speech from a deplorable bot…” when the researchers clamped a feature related to hatred and slurs to 20 times its maximum activation value.
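The clamping described above can be pictured as pinning one feature’s activation to a chosen value and shifting the model’s internal activation vector by the resulting change along that feature’s direction. The Python sketch below is a simplified illustration under that assumption; the function names, toy numbers and the `max_seen` value are hypothetical and do not reproduce Anthropic’s implementation.

```python
def clamp_feature(features, index, value):
    """Return a copy of the feature activations with one feature pinned to `value`."""
    clamped = list(features)
    clamped[index] = value
    return clamped


def steer(activation, features, decoder, index, value):
    """Shift an activation vector by the change in one feature's contribution."""
    delta = value - features[index]
    return [a + delta * d for a, d in zip(activation, decoder[index])]


# Toy values: 3 features, 2-dimensional activations (all made up).
decoder = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]   # one direction per feature
features = [0.2, 0.0, 0.4]
activation = [0.5, 0.3]

max_seen = 0.4  # hypothetical maximum activation observed for feature 2
clamped = clamp_feature(features, index=2, value=20 * max_seen)
steered = steer(activation, features, decoder, index=2, value=20 * max_seen)
```

Clamping to a large multiple of the maximum pushes the activation strongly along that feature’s direction, while clamping to zero would remove the feature’s contribution instead.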

Another feature the researchers examined is sycophancy; they could adjust the model so that it gave over-the-top praise to the person conversing with it.

What does Anthropic’s research mean for business?

Identifying some of the features used by an LLM to connect concepts could help tune an AI to prevent biased speech or to prevent or troubleshoot instances in which the AI could be made to lie to the user. Anthropic’s greater understanding of why the LLM behaves the way it does could allow for greater tuning options for Anthropic’s business clients.

Anthropic plans to use some of this research to further pursue topics related to the safety of generative AI and LLMs overall, such as exploring what features activate or remain inactive if Claude is prompted to give advice on producing weapons.

Another topic Anthropic plans to pursue in the future is the question: “Can we use the feature basis to detect when fine-tuning a model increases the likelihood of undesirable behaviors?”

TechRepublic has reached out to Anthropic for more information.