Image by Author

One of the fields that underpins data science is machine learning. So, if you want to get into data science, understanding machine learning is one of the first steps you need to take.

But where do you start? You start by understanding the difference between the two main types of machine learning algorithms. Only after that can we talk about the individual algorithms that should be on your priority list to learn as a beginner.

The main difference between the algorithms is based on how they learn.

Image by Author

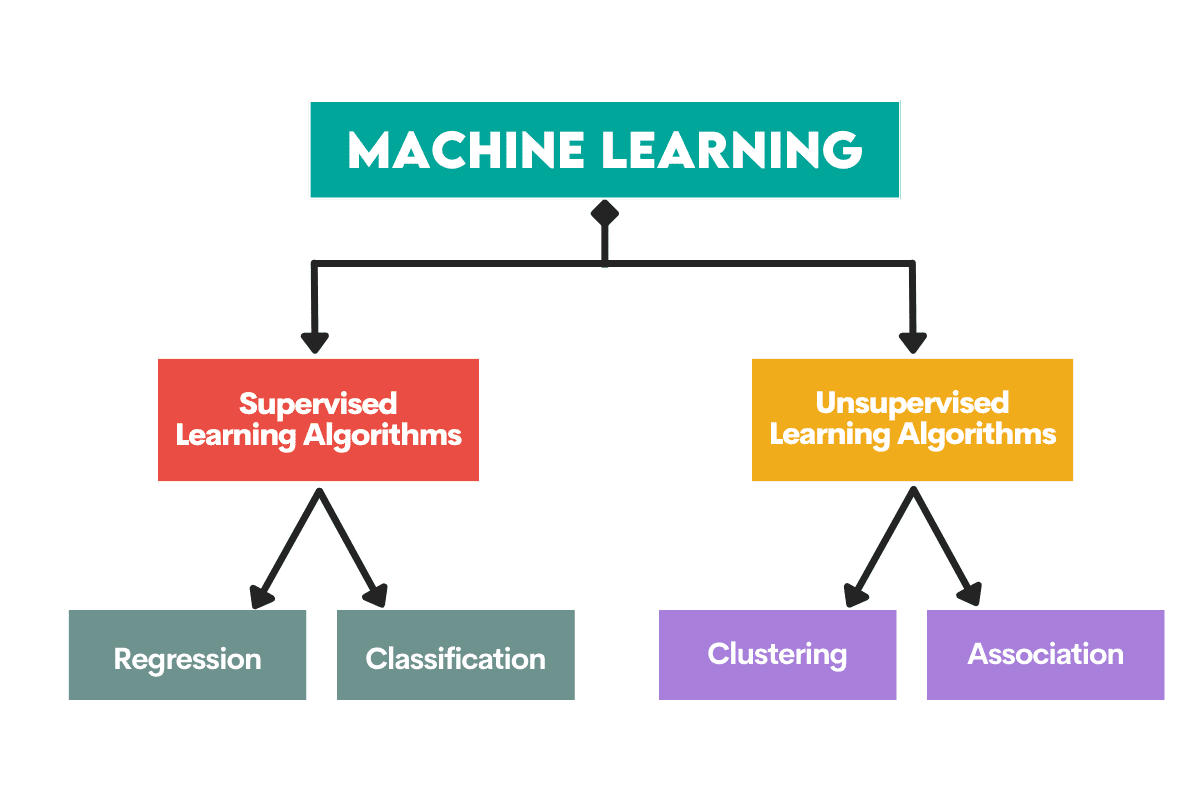

Supervised learning algorithms are trained on a labeled dataset. This dataset serves as supervision (hence the name) for learning, because some of the data it contains is already labeled with the correct answer. Based on this input, the algorithm can learn and apply that learning to the rest of the data.

On the other hand, unsupervised learning algorithms learn from an unlabeled dataset, meaning they find patterns in data without humans giving directions.
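To make the difference concrete, here is a minimal sketch in scikit-learn. The toy data and the choice of `LogisticRegression` and `KMeans` are illustrative assumptions. The supervised model receives both the features `X` and the labels `y`. The unsupervised model receives only `X` and has to find the groups on its own.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.linear_model import LogisticRegression

# Toy feature matrix (made-up values): 6 samples, 2 features, two obvious groups
X = np.array([[1.0, 2.0], [1.5, 1.8], [1.2, 2.2],
              [8.0, 8.0], [8.5, 7.5], [7.8, 8.3]])
# Labels ("correct answers") are only available in the supervised case
y = np.array([0, 0, 0, 1, 1, 1])

# Supervised: learns from features AND labels, then predicts labels for new data
clf = LogisticRegression().fit(X, y)
print(clf.predict([[1.1, 2.1], [8.2, 7.9]]))

# Unsupervised: sees only the features and assigns cluster ids by itself
km = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)
print(km.labels_)
```

Note that the cluster ids returned by `KMeans` are arbitrary numbers, not the "correct answers" from `y`.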

You can read in more detail about machine learning algorithms and types of learning.

There are also some other types of machine learning, but they are not for beginners.

Within each type of machine learning, algorithms are employed to solve two main, distinct problems.

Again, there are some other tasks, but they are not for beginners.

Image by Author

Supervised Learning Tasks

Regression is the task of predicting a numerical value, called a continuous outcome variable or dependent variable. The prediction is based on the predictor variable(s), or independent variable(s).

Think about predicting oil prices or air temperature.

Classification is used to predict the category (class) of the input data. The outcome variable here is categorical or discrete.

Think about predicting whether an email is spam or not spam, or whether a patient will get a certain disease or not.

Unsupervised Learning Tasks

Clustering means dividing data into subsets, or clusters. The goal is to group data as naturally as possible. This means that data points within the same cluster are more similar to each other than to data points from other clusters.

Dimensionality reduction refers to reducing the number of input variables in a dataset. It basically means reducing the dataset to just a few variables while still capturing its essence.

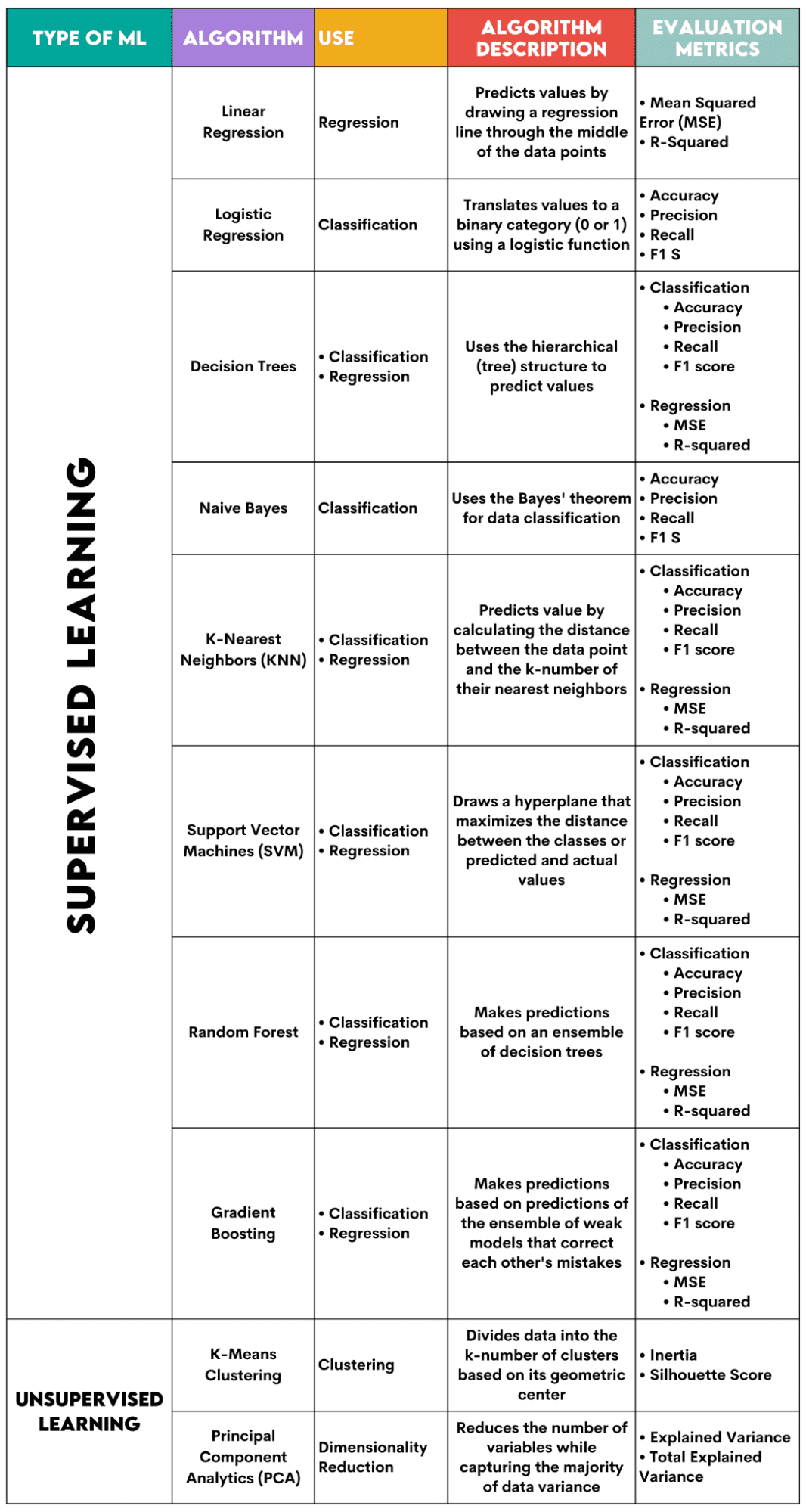

Here’s an overview of the algorithms I’ll cover.

Image by Author

Supervised Learning Algorithms

When choosing the algorithm for your problem, it’s important to know what task the algorithm is used for.

As a data scientist, you’ll probably apply these algorithms in Python using the scikit-learn library. Although it does (almost) everything for you, it’s advisable that you know at least the general principles of each algorithm’s inner workings.

Finally, after the algorithm is trained, you should evaluate how well it performs. For that, each algorithm has some standard metrics.

1. Linear Regression

Used For: Regression

Description: Linear regression draws a straight line, called a regression line, between the variables. The line is fitted to pass as close as possible to the data points, minimizing the estimation error (in ordinary least squares, the sum of squared differences between actual and predicted values). It shows the predicted value of the dependent variable based on the value of the independent variables.

Evaluation Metrics:

- Mean Squared Error (MSE): Represents the average of the squared errors, the error being the difference between actual and predicted values. The lower the value, the better the algorithm performs.

- R-Squared: Represents the proportion of the variance in the dependent variable that can be predicted from the independent variable(s). For this measure, you should try to get as close to 1 as possible.
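Here’s a minimal sketch of fitting and evaluating a linear regression with scikit-learn. It assumes synthetic data from `make_regression`; the sample size, noise level, and train/test split are arbitrary choices. The MSE is also computed by hand to mirror the definition above.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split

# Synthetic data (an assumption for illustration): 200 samples, 1 feature, Gaussian noise
X, y = make_regression(n_samples=200, n_features=1, noise=10.0, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)

# Fit the regression line y = slope * x + intercept by least squares
model = LinearRegression().fit(X_train, y_train)
print("slope:", model.coef_[0], "intercept:", model.intercept_)

y_pred = model.predict(X_test)
mse = mean_squared_error(y_test, y_pred)
r2 = r2_score(y_test, y_pred)

# The same MSE by hand: mean of squared differences between actual and predicted values
mse_manual = np.mean((y_test - y_pred) ** 2)
print(f"MSE: {mse:.2f} (by hand: {mse_manual:.2f}), R^2: {r2:.3f}")
```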

2. Logistic Regression

Used For: Classification

Description: It uses a logistic function to translate the data values into a probability between 0 and 1, which is then turned into a binary class, i.e., 0 or 1. This is done using a threshold, usually set at 0.5. The binary output makes this algorithm ideal for predicting binary outcomes, such as YES/NO, TRUE/FALSE, or 0/1.

Evaluation Metrics:

- Accuracy: The ratio between correct and total predictions. The closer to 1, the better.

- Precision: The measure of model accuracy in positive predictions, shown as the ratio between correct positive predictions and total predicted positive outcomes. The closer to 1, the better.

- Recall: It, too, measures the model’s accuracy in positive predictions. It’s expressed as the ratio between correct positive predictions and the total number of actual observations in the positive class. Read more about these metrics here.

- F1 Score: The harmonic mean of the model’s recall and precision. The closer to 1, the better.
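A minimal scikit-learn sketch of this, on assumed synthetic data from `make_classification` (all sizes and seeds are arbitrary). The logistic model outputs a probability for class 1. An explicit 0.5 threshold turns it into a 0/1 label, and the four metrics above are then computed on the result.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic binary classification data (an assumption for illustration)
X, y = make_classification(n_samples=500, n_features=5, n_informative=3,
                           n_redundant=0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

model = LogisticRegression().fit(X_train, y_train)

# The logistic function gives a probability of class 1 ...
proba = model.predict_proba(X_test)[:, 1]
# ... which a 0.5 threshold turns into a 0/1 label
y_pred = (proba > 0.5).astype(int)

acc = accuracy_score(y_test, y_pred)
prec = precision_score(y_test, y_pred)   # correct positives / predicted positives
rec = recall_score(y_test, y_pred)       # correct positives / actual positives
f1 = f1_score(y_test, y_pred)            # harmonic mean of precision and recall
print(f"accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f} f1={f1:.3f}")
```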

3. Decision Trees

Used For: Regression & Classification

Description: Decision trees are algorithms that use a hierarchical, tree-like structure to predict a value or a class. The root node represents the whole dataset, which then branches into decision nodes, branches, and leaves based on the variable values.

Evaluation Metrics:

- Accuracy, precision, recall, and F1 score -> for classification

- MSE, R-squared -> for regression
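Because decision trees handle both tasks, this sketch fits one of each with scikit-learn. The datasets (the built-in iris data and a synthetic `make_regression` set), the tree depths, and the seeds are assumptions for illustration. `export_text` prints the learned tree, from the root split down to the leaves. Since iris has three classes, the F1 score is averaged across classes with `average="macro"`.

```python
from sklearn.datasets import load_iris, make_regression
from sklearn.metrics import accuracy_score, f1_score, mean_squared_error, r2_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor, export_text

# Classification: predict the iris species (a class)
iris = load_iris()
Xc_train, Xc_test, yc_train, yc_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=0)
clf = DecisionTreeClassifier(max_depth=3, random_state=0).fit(Xc_train, yc_train)
# Root node at the top, then decision nodes, then leaves with the predicted class
print(export_text(clf, feature_names=list(iris.feature_names)))
yc_pred = clf.predict(Xc_test)
print("accuracy:", accuracy_score(yc_test, yc_pred))
print("macro F1:", f1_score(yc_test, yc_pred, average="macro"))

# Regression: predict a numeric value (synthetic data, an assumption)
Xr, yr = make_regression(n_samples=300, n_features=3, noise=5.0, random_state=0)
Xr_train, Xr_test, yr_train, yr_test = train_test_split(Xr, yr, test_size=0.3, random_state=0)
reg = DecisionTreeRegressor(max_depth=5, random_state=0).fit(Xr_train, yr_train)
yr_pred = reg.predict(Xr_test)
print("MSE:", mean_squared_error(yr_test, yr_pred), "R^2:", r2_score(yr_test, yr_pred))
```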

4. Naive Bayes

Used For: Classification

Description: This is a family of classification algorithms based on Bayes’ theorem. They are called “naive” because they assume that the features are independent of each other within a class.

Evaluation Metrics:

- Accuracy

- Precision

- Recall

- F1 score

5. K-Nearest Neighbors (KNN)

Used For: Regression & Classification

Description: It calculates the distance between the test data point and the training data points, and selects the k nearest ones. The test data point is assigned to the class that has the most ‘neighbors’ among them. For regression, the predicted value is the average of the values of the k chosen training points.

Evaluation Metrics:

- Accuracy, precision, recall, and F1 score -> for classification

- MSE, R-squared -> for regression
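In practice, you’d use scikit-learn’s `KNeighborsClassifier` or `KNeighborsRegressor`. This hand-written sketch just makes the steps above explicit: compute distances, pick the k closest points, then vote (classification) or average (regression). The function name `knn_predict`, the use of Euclidean distance, and the toy data are assumptions for illustration.

```python
from collections import Counter

import numpy as np

def knn_predict(X_train, y_train, x, k=3, task="classification"):
    """Hypothetical helper: predict a class (majority vote) or a value (mean) for point x."""
    # Euclidean distance from x to every training point
    distances = np.linalg.norm(X_train - x, axis=1)
    # Indices of the k closest training points
    nearest = np.argsort(distances)[:k]
    if task == "classification":
        # The class with the most neighbors wins
        return Counter(y_train[nearest]).most_common(1)[0][0]
    # Regression: average of the neighbors' target values
    return y_train[nearest].mean()

# Toy data (made up): two well-separated groups
X_train = np.array([[1, 1], [1, 2], [2, 1], [6, 6], [6, 7], [7, 6]], dtype=float)
labels = np.array(["A", "A", "A", "B", "B", "B"])
values = np.array([1.0, 2.0, 3.0, 10.0, 11.0, 12.0])

print(knn_predict(X_train, labels, np.array([1.5, 1.5]), k=3))
print(knn_predict(X_train, values, np.array([6.2, 6.2]), k=3, task="regression"))
```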

6. Support Vector Machines (SVM)

Used For: Regression & Classification

Description: This algorithm draws a hyperplane to separate different classes of data. It is positioned at the largest distance from the nearest points of each class (the support vectors). The greater the distance of a data point from the hyperplane, the more confidently it is assigned to its class. For regression, the principle is similar: the algorithm fits a hyperplane with a margin (a tube of width ε) around it, trying to keep as many data points as possible inside that margin and penalizing only the points that fall outside it.

Evaluation Metrics:

- Accuracy, precision, recall, and F1 score -> for classification

- MSE, R-squared -> for regression
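A minimal scikit-learn sketch of both uses, assuming synthetic data and a linear kernel so that the hyperplane is easy to interpret. The values of `C`, `epsilon`, and the seeds are arbitrary. For a linear `SVC`, `decision_function` is proportional to the signed distance from the hyperplane, and its sign gives the predicted class. For `SVR`, `epsilon` sets the width of the margin within which errors are not penalized.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.svm import SVC, SVR

# Classification: two groups of points (synthetic, an assumption)
X, y = make_blobs(n_samples=100, centers=2, random_state=6)
svc = SVC(kernel="linear", C=1.0).fit(X, y)

# The nearest points of each class that define the margin
print("number of support vectors:", len(svc.support_vectors_))
# Larger magnitude = further from the hyperplane; the sign picks the class
print(svc.decision_function(X[:3]), svc.predict(X[:3]))

# Regression: noisy line y = 2x + 1 (synthetic, an assumption)
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0, 10, 80)).reshape(-1, 1)
y_reg = 2.0 * x.ravel() + 1.0 + rng.normal(0, 0.5, 80)
# Points within +/- epsilon of the fitted line are not penalized
svr = SVR(kernel="linear", C=10.0, epsilon=0.5).fit(x, y_reg)
print("slope:", svr.coef_[0, 0], "intercept:", svr.intercept_[0])
```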

7. Random Forest

Used For: Regression & Classification

Description: The random forest algorithm uses an ensemble of decision trees, which together make up a forest. The algorithm’s prediction is based on the predictions of many decision trees. Data will be assigned to the class that receives the most votes. For regression, the predicted value is the average of all the trees’ predicted values.

Evaluation Metrics:

- Accuracy, precision, recall, and F1 score -> for classification

- MSE, R-squared -> for regression
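This sketch opens up a scikit-learn forest to show the ensemble at work. It uses assumed synthetic data, and the number of trees and the seeds are arbitrary. The individual fitted trees are available in `estimators_`. For regression, the forest’s prediction is exactly the mean of its trees’ predictions. For classification, note that scikit-learn averages the trees’ predicted class probabilities (soft voting) rather than counting hard votes, so the vote count printed here can occasionally disagree with the forest’s output.

```python
import numpy as np
from sklearn.datasets import make_classification, make_regression
from sklearn.ensemble import RandomForestClassifier, RandomForestRegressor

# Classification (synthetic data, an assumption)
Xc, yc = make_classification(n_samples=300, n_features=6, random_state=0)
forest_c = RandomForestClassifier(n_estimators=50, random_state=0).fit(Xc, yc)
# Each individual tree "votes" for the first sample
votes = [int(tree.predict(Xc[:1])[0]) for tree in forest_c.estimators_]
print("trees voting for class 1:", sum(votes), "of", len(votes))
print("forest prediction:", forest_c.predict(Xc[:1])[0])

# Regression: the forest's output is the average of the trees' outputs
Xr, yr = make_regression(n_samples=300, n_features=4, noise=5.0, random_state=0)
forest_r = RandomForestRegressor(n_estimators=50, random_state=0).fit(Xr, yr)
tree_preds = np.array([tree.predict(Xr[:5]) for tree in forest_r.estimators_])
print("mean of trees == forest:", np.allclose(tree_preds.mean(axis=0), forest_r.predict(Xr[:5])))
```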

8. Gradient Boosting

Used For: Regression & Classification

Description: These algorithms use an ensemble of weak models, with each subsequent model recognizing and correcting the previous models’ errors. This process is repeated until the error (loss function) is minimized or a set number of models has been added.

Evaluation Metrics:

- Accuracy, precision, recall, and F1 score -> for classification

- MSE, R-squared -> for regression
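scikit-learn provides `GradientBoostingRegressor` and `GradientBoostingClassifier` for real use. This hand-rolled loop is a simplified sketch of the core idea for regression with squared-error loss only. Each new shallow tree is trained on the errors (residuals) the ensemble still makes, and a damped version of its output is added to the ensemble. The noisy sine data, `learning_rate`, tree depth, and number of rounds are all assumptions for illustration.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Synthetic 1-D data (an assumption for illustration): a noisy sine wave
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X).ravel() + rng.normal(0, 0.1, 200)

learning_rate = 0.1   # how strongly each weak model corrects the ensemble
n_rounds = 100        # fixed number of weak models to add

# Start from a constant model: the mean of the targets
base = y.mean()
prediction = np.full_like(y, base)
models, mse_history = [], []

for _ in range(n_rounds):
    # For squared-error loss, the residuals are the negative gradient,
    # i.e. the errors the current ensemble still makes
    residuals = y - prediction
    # A weak model (a depth-2 tree) learns to predict those errors ...
    weak = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, residuals)
    # ... and a damped version of its output is added to the ensemble
    prediction += learning_rate * weak.predict(X)
    models.append(weak)
    mse_history.append(np.mean((y - prediction) ** 2))

print(f"training MSE after 1 round: {mse_history[0]:.4f}, after {n_rounds}: {mse_history[-1]:.4f}")

def predict(X_new):
    """Constant start value plus the damped sum of all weak models."""
    return base + learning_rate * sum(m.predict(X_new) for m in models)
```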

Unsupervised Learning Algorithms

9. K-Means Clustering

Used For: Clustering

Description: The algorithm divides the dataset into k clusters, each represented by its centroid, or geometric center. Through an iterative process of dividing the data into k clusters, the goal is to minimize the distance between the data points and their cluster’s centroid. At the same time, it also tries to maximize the distance of these data points from the other clusters’ centroids. Simply put, data belonging to the same cluster should be as similar as possible, and as different as possible from data in other clusters.

Evaluation Metrics:

- Inertia: The sum of the squared distances of each data point from its nearest cluster centroid. The lower the inertia value, the more compact the clusters.

- Silhouette Score: It measures the cohesion (data’s similarity within its own cluster) and separation (data’s difference from other clusters) of the clusters. The value of this score ranges from -1 to +1. The higher the value, the better the data is matched to its own cluster and the worse it is matched to other clusters.
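Below is a plain sketch of the iterative process (Lloyd’s algorithm) written with NumPy. It alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its points. It reports inertia as defined above and uses scikit-learn’s `silhouette_score` for the second metric. The helper names, random initialization, and synthetic blob data are assumptions. scikit-learn’s `KMeans` does the same job with smarter k-means++ initialization and several restarts (`n_init`), and exposes the result as `inertia_`. Inertia tends to fall as k grows, which is why it is usually read together with the silhouette score.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

def assign(X, centroids):
    """Index of the nearest centroid for every point."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)

def kmeans(X, k, n_iter=100, seed=0):
    """Hypothetical helper: plain Lloyd's algorithm with random initialization."""
    rng = np.random.default_rng(seed)
    # Start from k randomly chosen data points as centroids
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        labels = assign(X, centroids)
        # Move each centroid to the mean of its points (keep it if the cluster is empty)
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    labels = assign(X, centroids)
    # Inertia: sum of squared distances from each point to its cluster centroid
    inertia = float(((X - centroids[labels]) ** 2).sum())
    return labels, centroids, inertia

# Synthetic data with three groups (an assumption for illustration)
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.8, random_state=42)
results = {}
for k in (2, 3, 4):
    labels, centroids, inertia = kmeans(X, k)
    results[k] = (inertia, silhouette_score(X, labels))
    print(f"k={k}: inertia={inertia:.1f}, silhouette={results[k][1]:.3f}")
```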

10. Principal Component Analysis (PCA)

Used For: Dimensionality Reduction

Description: The algorithm reduces the number of variables used by constructing new variables (principal components) while still trying to maximize the captured variance of the data. In other words, it limits the data to the components that carry the most variance while not losing the essence of the data.

Evaluation Metrics:

- Explained Variance: The percentage of the variance covered by each principal component.

- Total Explained Variance: The percentage of the variance covered by all the retained principal components combined.
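A minimal scikit-learn sketch that reduces the four iris measurements (an assumed example dataset) to two principal components. It reads off the explained variance per component and in total. Standardizing first is a common choice made here as an assumption, because PCA is sensitive to the scale of the variables. As a cross-check, the same ratios are recomputed from the eigenvalues of the covariance matrix.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

X = load_iris().data                         # 150 samples, 4 variables
X_scaled = StandardScaler().fit_transform(X)

pca = PCA(n_components=2).fit(X_scaled)
X_reduced = pca.transform(X_scaled)          # 150 samples, 2 principal components

print("explained variance per component:", pca.explained_variance_ratio_)
print("total explained variance:", pca.explained_variance_ratio_.sum())

# Cross-check: eigenvalues of the covariance matrix, largest first,
# divided by their sum give the same ratios
eigvals = np.sort(np.linalg.eigvalsh(np.cov(X_scaled, rowvar=False)))[::-1]
print("by hand:", eigvals[:2] / eigvals.sum())
```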

Machine learning is an essential part of data science. With these ten algorithms, you’ll cover the most common tasks in machine learning. Of course, this overview gives you only a general idea of how each algorithm works. So, this is just a start.

Now, you need to learn how to implement these algorithms in Python and solve real problems. For that, I recommend using scikit-learn, not only because it’s a relatively easy-to-use ML library, but also because of its extensive materials on ML algorithms.

Nate Rosidi is a data scientist and works in product strategy. He's also an adjunct professor teaching analytics, and is the founder of StrataScratch, a platform helping data scientists prepare for their interviews with real interview questions from top companies. Nate writes on the latest trends in the career market, gives interview advice, shares data science projects, and covers everything SQL.