Researchers published a study evaluating the accuracy and quality of summaries that LLMs produce. Claude 3 Opus performed particularly well, but humans still have the edge.

AI models are extremely useful for summarizing long documents when you don’t have the time or inclination to read them.

The luxury of growing context windows means we can prompt models with longer documents, which challenges their ability to always get the facts straight in the summary.

Researchers from the University of Massachusetts Amherst, Adobe, the Allen Institute for AI, and Princeton University published a study that sought to find out how good AI models are at summarizing book-length content (>100k tokens).

FABLES

They selected 26 books published in 2023 and 2024 and had various LLMs summarize the texts. The recent publication dates were chosen to avoid potential data contamination in the models’ original training data.

Once the models had produced the summaries, the researchers used GPT-4 to extract decontextualized claims from them. They then hired human annotators who had read the books and asked them to fact-check the claims.

The resulting data was compiled into a dataset called “Faithfulness Annotations for Book-Length Summarization” (FABLES). FABLES contains 3,158 claim-level annotations of faithfulness across 26 narrative texts.

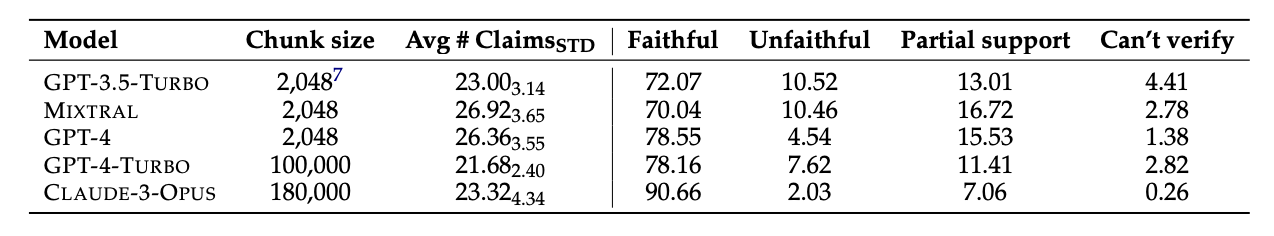

The test results showed that Claude 3 Opus was “the most faithful book-length summarizer by a significant margin,” with over 90% of its claims verified as faithful, or accurate.

GPT-4 came a distant second, with only 78% of its claims verified as faithful by the human annotators.
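To make the scoring concrete, here is a minimal Python sketch of how a claim-level faithfulness percentage can be computed once each extracted claim has a human verdict. It assumes a simple binary faithful/unfaithful label per claim; the study’s actual annotation scheme may be more fine-grained. The model names, claims, and labels below are hypothetical toy values, not FABLES data.

```python
from collections import defaultdict

# Hypothetical toy annotations: (model_name, claim_text, is_faithful).
# These are NOT data from FABLES; they only illustrate the arithmetic.
annotations = [
    ("model_a", "Claim 1", True),
    ("model_a", "Claim 2", True),
    ("model_a", "Claim 3", False),
    ("model_b", "Claim 4", True),
    ("model_b", "Claim 5", False),
]

def faithfulness_by_model(rows):
    """Return the percentage of claims labeled faithful, per model."""
    totals = defaultdict(int)    # claims seen per model
    faithful = defaultdict(int)  # claims a human marked faithful per model
    for model, _claim, is_faithful in rows:
        totals[model] += 1
        if is_faithful:
            faithful[model] += 1
    return {m: 100.0 * faithful[m] / totals[m] for m in totals}

scores = faithfulness_by_model(annotations)
for model, pct in sorted(scores.items()):
    print(f"{model}: {pct:.1f}% faithful")
```

A score like “over 90%” is this ratio taken over all of a model’s extracted claims, so it says nothing about important content the summary left out entirely.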

The hard part

The models under test all seemed to struggle with the same issues. Most of the facts the models got wrong related to events or to the states of characters and their relationships.

The paper noted that “most of these claims can only be invalidated via multi-hop reasoning over the evidence, highlighting the task’s complexity and its difference from existing fact-verification settings.”

The LLMs also frequently omitted critical information from their summaries. They overemphasized content toward the end of books as well, missing important content near the beginning.

Will AI replace human annotators?

Human annotators, or fact-checkers, are expensive. The researchers spent $5,200 to have the human annotators verify the claims in the AI summaries.

Could an AI model have done the job for less? Simple fact retrieval is something Claude 3 is good at, but its performance is less consistent when verifying claims that require a deeper understanding of the content.

When presented with the extracted claims and prompted to verify them, all of the AI models fell short of the human annotators. They performed particularly badly at identifying unfaithful claims.
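One way to quantify how well an auto-rater identifies unfaithful claims is per-class recall. For each label, it measures how often the auto-rater agrees with the human verdict on the claims that humans gave that label. The sketch below is an illustrative assumption, not the study’s evaluation code. The labels are hypothetical toy values.

```python
# Hypothetical toy labels for six claims: True = faithful, False = unfaithful.
# Not data from the study; chosen only to illustrate the metric.
human_labels = [True, True, False, True, False, False]
auto_labels = [True, True, True, True, False, True]

def recall_for(label, gold, pred):
    """Share of claims humans gave `label` that the auto-rater also gave `label`."""
    hits = sum(1 for g, p in zip(gold, pred) if g == label and p == label)
    total = sum(1 for g in gold if g == label)
    return hits / total if total else float("nan")

faithful_recall = recall_for(True, human_labels, auto_labels)
unfaithful_recall = recall_for(False, human_labels, auto_labels)
print(f"faithful recall:   {faithful_recall:.2f}")
print(f"unfaithful recall: {unfaithful_recall:.2f}")
```

On this toy input, the rater recovers all three faithful claims but flags only one of the three unfaithful ones. An overall agreement figure would hide that asymmetry.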

Even though Claude 3 Opus was by far the best claim verifier, the researchers concluded it “ultimately performs too poorly to be a reliable auto-rater.”

When it comes to understanding the nuances, complex human relationships, plot points, and character motivations in a long narrative, it seems humans still have the edge for now.