Google’s latest flagship I/O conference saw the company double down on its Search Generative Experience (SGE), which will embed generative AI into Google Search.

SGE, which aims to bring AI-generated answers to over a billion users by the end of 2024, relies on Gemini, Google’s family of large language models (LLMs), to generate human-like responses to search queries.

Instead of a traditional Google search, which primarily displays links, you’ll be presented with an AI-generated summary of results, essentially condensing the answer to your query.

This “AI Overview” has been criticized for providing nonsense information, and Google is working quickly on fixes before it begins mass rollout.

But aside from recommending adding glue to pizza and saying pythons are mammals, there’s another bugbear with Google’s new AI-driven search strategy: its environmental footprint.

While traditional search engines simply retrieve existing information from the internet, generative AI systems like SGE must create entirely new content for each query. This process requires vastly more computational power and energy than conventional search methods.

Billions of Google searches are conducted every day, between 3 and 10 billion according to most estimates. The impact of applying AI to even a small share of them could be enormous.

Sasha Luccioni, a researcher at the AI company Hugging Face who studies the environmental impact of these technologies, recently discussed the sharp increase in energy consumption SGE could trigger.

Luccioni and her team estimate that generating search information with AI could require 30 times as much energy as a conventional search.

“It just makes sense, right? While a mundane search query finds existing data from the Internet, applications like AI Overviews must create entirely new information,” she told Scientific American.

In 2023, Luccioni and her colleagues found that training the LLM BLOOM emitted greenhouse gases equivalent to 19 kilograms of CO2 per day of use, or the amount generated by driving 49 miles in an average gas-powered car. They also found that generating just two images using AI can consume as much energy as fully charging an average smartphone.

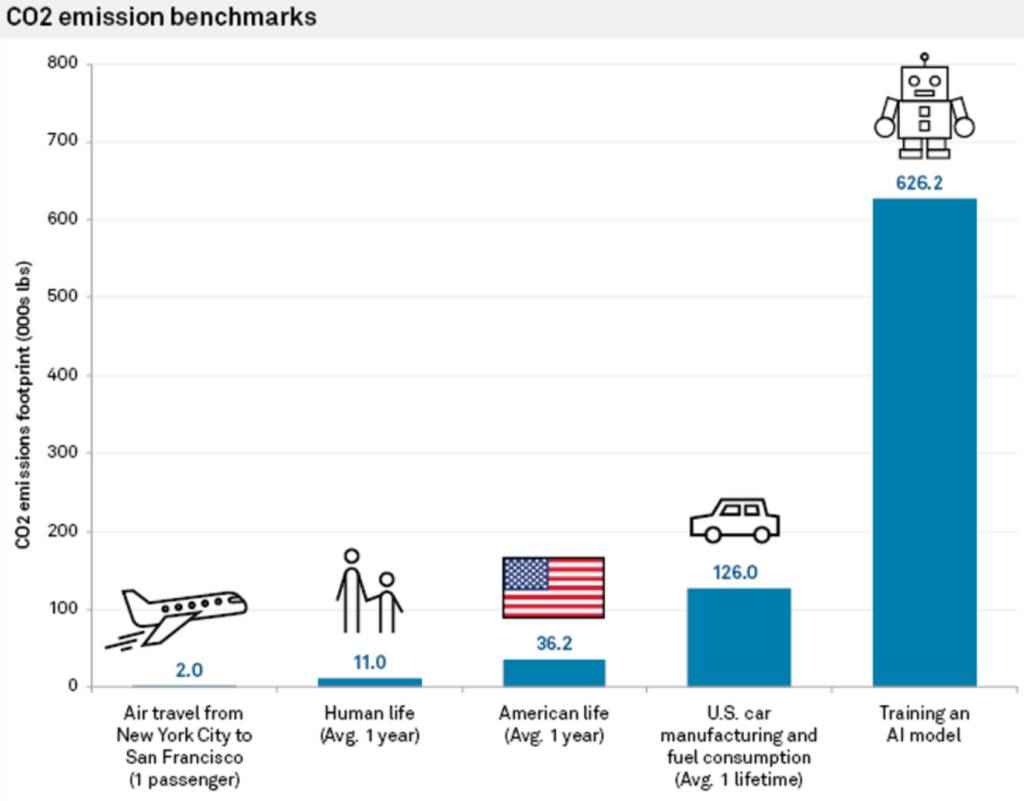

Earlier studies have also assessed the CO2 emissions associated with AI model training, which might exceed the emissions of hundreds of commercial flights or of the average car across its lifetime.

In an interview with Reuters last year, John Hennessy, chair of Google’s parent company, Alphabet, himself admitted to the increased costs associated with AI-powered search.

“An exchange with a large language model could cost ten times more than a traditional search,” he stated, though he predicted costs would decrease as the models are fine-tuned.

AI search’s strain on infrastructure and resources

Data centers housing AI servers are projected to double their energy consumption by 2026, potentially using as much power as a small country.

With chip manufacturers like NVIDIA rolling out bigger, more powerful chips, it could soon take the equivalent of multiple nuclear power stations to run large-scale AI workloads.

When AI companies respond to questions about how this can be sustained, they typically cite renewables’ increased efficiency and capacity and the improved power efficiency of AI hardware.

However, the transition to renewable energy sources for data centers is proving to be slow and complex.

As Shaolei Ren, a computer engineer at the University of California, Riverside, who studies sustainable AI, explained, “There’s a supply and demand mismatch for renewable energy. The intermittent nature of renewable energy production often fails to match the constant, stable power required by data centers.”

Because of this mismatch, fossil fuel plants are being kept online longer than planned in areas with high concentrations of tech infrastructure.

Innovations in energy-efficient AI hardware are helping, with companies like NVIDIA and Delta making big strides in reducing their hardware’s energy footprint.

Rama Ramakrishnan, an MIT Sloan School of Management professor, explained that while the number of searches going through LLMs is likely to increase, the cost per query appears to be falling as companies work to make hardware and software more efficient.

But will that be enough to offset rising energy demands? “It’s difficult to predict,” Ramakrishnan says. “My guess is that it’s probably going to go up, but it’s probably not going to go up dramatically.”

As the AI race heats up, mitigating environmental impacts has become critical. Necessity is the mother of invention; the pressure is on tech companies to create solutions that keep AI’s momentum rolling.

SGE could strain water supplies, too

We can also speculate about the water demands created by SGE, which will likely mirror the increases in data center water consumption attributed to the generative AI industry.

According to recent Microsoft environmental reports, water consumption has rocketed by up to 50% in some regions, with water consumption at the Las Vegas data center doubling. Google’s reports also registered a 20% increase in data center water expenditure in 2023 compared to 2022.

Shaolei Ren, a researcher at the University of California, Riverside, attributes the majority of this growth to AI, stating, “It’s fair to say the majority of the growth is due to AI, including Microsoft’s heavy investment in generative AI and partnership with OpenAI.”

Ren estimated that each interaction with ChatGPT, consisting of 5 to 50 prompts, consumes a staggering 500ml of water.

In a paper published in 2023, Ren’s team wrote, “The global AI demand may be accountable for 4.2 – 6.6 billion cubic meters of water withdrawal in 2027, which is more than the total annual water withdrawal of 4 – 6 Denmark or half of the United Kingdom.”

Using Ren’s research, we can do some napkin calculations for how Google’s SGE might factor into these predictions.

Let’s say Google processes an average of 8.5 billion daily searches worldwide. Assuming that even a fraction of these searches, say 10%, use SGE and generate AI-powered responses with an average of 50 words per response, the water consumption could be phenomenal.

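Using Ren’s estimate of 500 milliliters of water per 5 to 50 prompts, we can roughly calculate that 850 million SGE-powered searches (10% of Google’s daily searches) would consume roughly 85 billion milliliters, or 85 million liters, of water daily. This figure treats each search as a single prompt and takes the heavier end of Ren’s range, 500 milliliters per 5 prompts, or about 100 milliliters per search; at 50 prompts per 500 milliliters, the total drops to about 8.5 million liters.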

The 85 million liter figure is equivalent to the daily water consumption of a city with a population of over 500,000 people.
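The napkin calculation can be reproduced with a short Python sketch. It uses only the article’s rough inputs; treating one search as one prompt is an assumption made here for illustration, and the constant and function names are hypothetical.

```python
# Napkin calculation: daily water use if a share of Google searches ran through SGE.
# All inputs are the article's rough figures. Treating one search as one prompt
# is an illustrative assumption, not a measured value.

DAILY_SEARCHES = 8_500_000_000      # assumed average daily Google searches
SGE_SHARE_PERCENT = 10              # assumed share of searches answered by SGE
ML_PER_INTERACTION = 500            # Ren's estimate for one ChatGPT interaction
PROMPTS_PER_INTERACTION = (5, 50)   # Ren's range of prompts per interaction


def daily_water_liters(prompts_per_interaction: int) -> float:
    """Liters of water per day, treating each SGE search as one prompt."""
    sge_searches = DAILY_SEARCHES * SGE_SHARE_PERCENT // 100
    ml_per_search = ML_PER_INTERACTION / prompts_per_interaction
    return sge_searches * ml_per_search / 1000  # milliliters -> liters


high = daily_water_liters(min(PROMPTS_PER_INTERACTION))  # 500 ml per 5 prompts
low = daily_water_liters(max(PROMPTS_PER_INTERACTION))   # 500 ml per 50 prompts

print(f"Upper estimate: {high:,.0f} liters/day")
print(f"Lower estimate: {low:,.0f} liters/day")
print(f"Per person in a city of 500,000: {high / 500_000:.0f} liters/day")
```

Changing `SGE_SHARE_PERCENT` or the prompts-per-interaction value shows how sensitive the estimate is to each assumption.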

In reality, actual water consumption may vary depending on factors such as the efficiency of Google’s data centers and the specific implementation and scale of SGE.

Nonetheless, it’s very reasonable to speculate that SGE and other forms of AI search will further ramp up AI’s resource usage.

How the industry reacts will determine whether global AI experiences like SGE can be sustainable at a massive scale.