With few technologies and laws available to blunt the impact of fake audio, images, and video created by deep-learning neural networks and generative AI systems, deepfakes could deliver some costly shocks to businesses in the coming year, experts say.

Currently, deepfakes top the list of concerning cyber threats, with a third of companies considering deepfakes to be a critical or major threat. Some 61% of companies have seen an increase in attacks using deepfakes in the past year, according to a report released this week by Deep Instinct, a threat-prevention firm. Attackers will likely keep innovating, adapting deepfakes to improve on existing fraud techniques: using generative AI to attack financial institutions’ know-your-customer (KYC) measures, manipulating stock markets with reputational attacks against specific publicly traded companies, and blackmailing executives and board members with fake, but still embarrassing, content.

In the short term, the impact of a deepfake campaign aiming to undermine a company’s reputation could be so great that it affects the firm’s overall creditworthiness, says Abhi Srivastava, associate vice president of digital economy at Moody’s Ratings, a financial information firm.

“Deepfakes have potential for substantial and broad-based harm to corporations,” he says. “Financial frauds are one of the most immediate threats. Such deepfake-based frauds are credit negative for firms because they expose them to possible business disruptions and reputational damage and can weaken profitability if the losses are high.”

Deepfakes have already become a tool for attackers behind business-leader impersonation fraud (formerly known as business email compromise, or BEC), in which AI-generated audio and video of a corporate executive are used to fool lower-level employees into transferring money or taking other sensitive actions. In an incident disclosed in February, for example, a Hong Kong-based employee of a multinational corporation transferred about $25.5 million after attackers used deepfakes during a conference call to instruct the worker to make the transfers.

You Ain’t Seen Nothing Yet

With financial institutions rarely meeting their own customers, company employees increasingly working remotely, and deepfake technology becoming easier to use, attacks will only grow in number and become more effective, says Carl Froggett, CIO at Deep Instinct and the former head of global infrastructure defense at financial firm Citi.

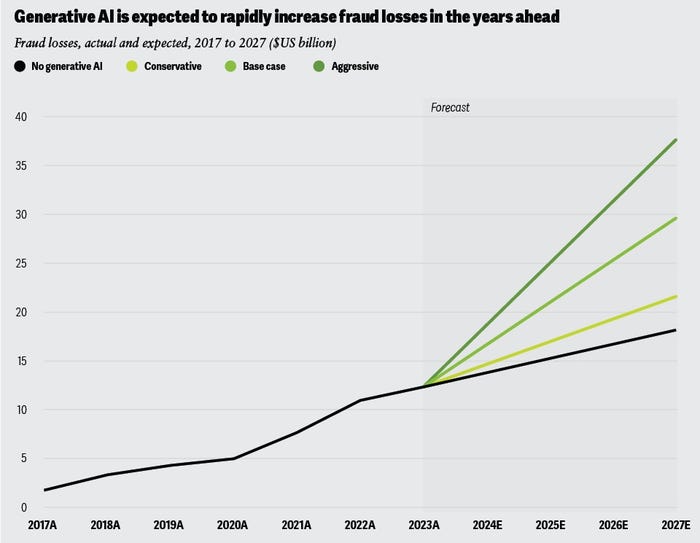

Generative AI has supercharged fraud losses, with predicted losses set to double by 2027. Source: Deloitte Insights

Overall, three-quarters of companies saw an increase in deepfakes impersonating a C-level executive, according to the “Voice of SecOps” report published by Deep Instinct.

“Key individuals, CEOs, board members, they’re going to be the targets especially for individual and deepfake kind of reputational damage, so we can no longer cut them some slack … and let them bypass a security measure,” Froggett says. “We don’t really have a good technical answer for them at this point, and they’re just going to get worse, so identity and phishing-resistant technology are going to be really important.”

Financial institutions will feel the most pain. Financial fraud losses are set to accelerate because of generative AI, with consultancy Deloitte forecasting that losses for the banking industry could reach $40 billion by 2027, double the losses expected before the arrival of generative AI.

Fake CEOs, Real Losses

Deepfakes have also arguably had an impact on stock prices. A year ago, an image of an explosion at the Pentagon, shared through a verified Twitter account and propagated by several news outlets, caused the S&P 500 to shed 1% of its value in minutes before traders discovered it was likely AI-generated. In April, India’s National Stock Exchange (NSE) had to issue a warning to investors after a deepfake video appeared of the NSE’s CEO recommending specific stocks.

However, a deepfake of a CEO recommending a stock or releasing misinformation is unlikely to trigger the US Securities and Exchange Commission’s material disclosure rule, says James Turgal, vice president of global cyber-risk and board relations at Optiv, a cyber advisory firm.

“The threshold for an SEC cyber disclosure would be difficult based upon a deepfake video or voice impersonation, as there would have to be proof, from the shareholders’ point of view, that the deepfake attack caused a material impact on a corporation’s information technology system,” he says.

While companies that fail to stem the impact of deepfake fraud on their own operations could face a credit penalty, the regulatory picture is still blurry, says Moody’s Srivastava.

“If deepfakes grow in scale and frequency and turbocharge cyberattacks, the same extant regulatory implications that apply to cyberattacks can also apply to it,” he says. “However, when it comes to deepfakes as a standalone threat, it appears that most jurisdictions are still deliberating whether to enact new legislation or if existing laws are sufficient, with most of the focus being election-related and adult deepfakes.”

Is Technology a Solution or the Problem?

Unfortunately, the technological picture around deepfakes still favors the attacker, says Optiv’s Turgal.

So, while searching for technical solutions, many companies are reinforcing processes that add extra checks capable of stopping deepfake scams, such as requiring verbal authentication by senior leaders for financial transactions over a certain amount and authentication codes sent to a trusted digital device, he says. Some companies are even moving away from technology and embracing person-to-person interaction as the final check.

“As the deepfake technology threat grows, I see a move back by some companies to good old-fashioned person-to-person interaction to create a low-tech two-factor authentication solution to mitigate the high-tech threat,” Turgal says.
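The callback-plus-code controls Turgal describes can be pictured as a small approval policy. The sketch below is illustrative only and assumes details the article does not give: the threshold value and the names `TransferRequest`, `TrustedDeviceCodes`, and `missing_checks` are hypothetical, and a real system would push the one-time code to the registered device instead of returning it to the caller.

```python
import hmac
import secrets
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

# Hypothetical threshold; the article says only "a certain amount".
CALLBACK_THRESHOLD = 10_000


@dataclass
class TransferRequest:
    request_id: str
    amount: int  # whole currency units, avoiding float rounding
    requested_by: str
    # Senior leader who confirmed by voice on a callback to a number from the
    # company directory, never a number supplied in the request itself.
    callback_confirmed_by: Optional[str] = None


@dataclass
class TrustedDeviceCodes:
    """Hypothetical store of one-time codes sent to pre-registered devices."""
    _pending: Dict[str, str] = field(default_factory=dict)

    def issue(self, request_id: str) -> str:
        code = f"{secrets.randbelow(10**6):06d}"  # random six-digit code
        self._pending[request_id] = code
        # Assumption: a real system delivers this only to the trusted device.
        return code

    def redeem(self, request_id: str, submitted: str) -> bool:
        # pop() makes each code single-use, whether or not it matches.
        expected = self._pending.pop(request_id, None)
        # Constant-time comparison avoids leaking digits through timing.
        return expected is not None and hmac.compare_digest(expected, submitted)


def missing_checks(
    req: TransferRequest,
    codes: TrustedDeviceCodes,
    submitted_code: Optional[str],
    senior_leaders: Set[str],
) -> List[str]:
    """Return the out-of-band checks a transfer still lacks (empty = approve)."""
    if req.amount < CALLBACK_THRESHOLD:
        return []  # below threshold: normal process, no extra checks here
    missing = []
    if req.callback_confirmed_by not in senior_leaders:
        missing.append("verbal callback by a senior leader")
    if submitted_code is None or not codes.redeem(req.request_id, submitted_code):
        missing.append("one-time code from a trusted device")
    return missing


codes = TrustedDeviceCodes()
req = TransferRequest("tx-1", 50_000, "ceo")
code = codes.issue("tx-1")
print(missing_checks(req, codes, code, {"cfo"}))
# -> ['verbal callback by a senior leader']
```

Returning the list of missing steps, rather than a bare yes or no, shows an operator exactly which out-of-band check a suspicious request skipped. In the usage example, a valid device code alone is not enough: without the callback, a convincing deepfake on a video call still cannot push the transfer through.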

Creating trusted channels of communication should be a priority for all companies, not only for sensitive processes (such as initiating a payment or transfer) but also for communications to the public, says Deep Instinct’s Froggett.

“The best companies are already preparing, trying to think of the eventualities. … You need legal, regulatory, and compliance groups — obviously, marketing and communication — to be able to mobilize to combat any misinformation,” he says. “You’re already seeing that more mature financials have that in place and are practicing it as part of their DNA.”

Other industries, Froggett adds, must develop similar capabilities as well.