NVIDIA CEO Jensen Huang used the opening keynote address at Taiwan’s Computex 2024 event to unveil next-gen tech and the roadmap the company will pursue to become the leading AI hardware platform.

The theme of his address was “Accelerate everything,” and Huang unveiled new chips, faster AI networking, and an ambitious development roadmap. From AI PCs to massive AI factories, NVIDIA wants to be the enabler of what comes next with AI.

“The future of computing is accelerated,” Huang stated. “With our innovations in AI and accelerated computing, we’re pushing the boundaries of what’s possible and driving the next wave of technological advancement.”

Here’s a quick look at some of the announcements:

New chips

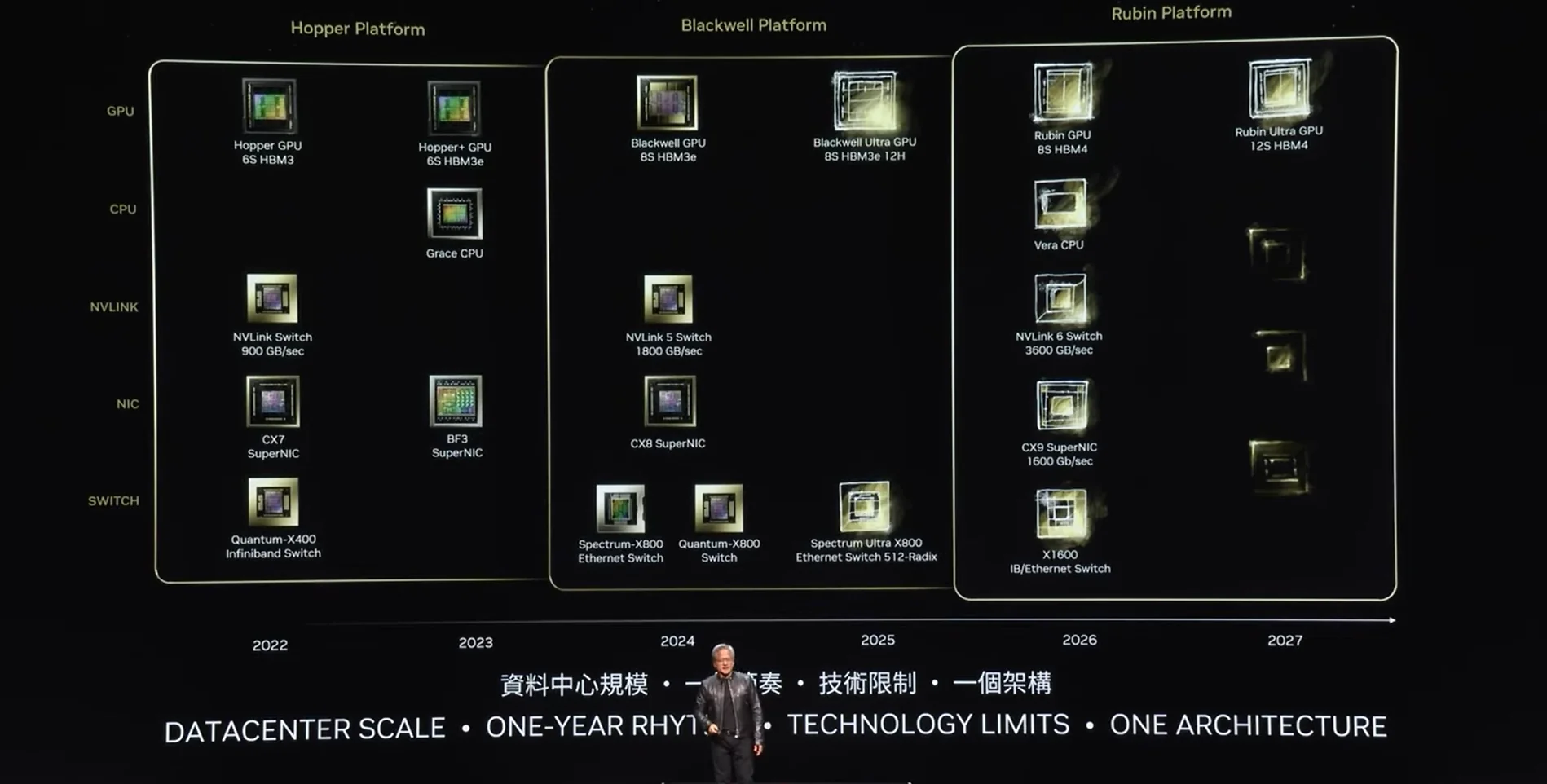

The Blackwell GPUs are hot off the production line, but Huang says we can already expect an upgraded Blackwell Ultra GPU later this year. He said that NVIDIA’s development roadmap will see a new family of chips released every year.

“Our company has a one-year rhythm. Our basic philosophy is very simple: build the entire data center scale, disaggregate and sell to you parts on a one-year rhythm, and push everything to technology limits,” Huang explained.

Huang announced that 2026 will see NVIDIA’s Rubin platform succeed the Blackwell family of GPUs.

AI factories

Huang named several computing companies that will build “AI factories” that will deliver cloud, on-premises, embedded, and edge AI systems using NVIDIA GPUs and networking.

Huang stated, “The next industrial revolution has begun. Companies and countries are partnering with NVIDIA to shift the trillion-dollar traditional data centers to accelerated computing and build a new type of data center — AI factories — to produce a new commodity: artificial intelligence.”

NVIDIA MGX (Modular Server Reference Architecture) is a blueprint for building data center servers that focuses on accelerated computing. It uses a standardized approach for building servers using NVIDIA products, which makes it a lot easier for these AI factories to be built.

NVIDIA Spectrum-X

NVIDIA uses NVLink to move data between GPUs, but moving data between GPU pods within or between data centers presents an additional challenge.

NVIDIA Spectrum-X is the world’s first Ethernet networking platform specifically designed to enhance the performance of AI workloads. Naturally, NVIDIA Spectrum-X is optimized for NVIDIA’s hardware.

NVIDIA says Spectrum-X accelerates generative AI network performance by 1.6x over traditional Ethernet fabrics.

Last week we reported that Big Tech computing companies are working to develop an open accelerated AI networking standard without NVIDIA’s input.

Even so, Huang said Spectrum-X is already being adopted by companies like Dell Technologies, Hewlett Packard Enterprise, and Lenovo.

NIM

NVIDIA NIM, which stands for NVIDIA Inference Microservices, is a set of tools designed to simplify and accelerate the deployment of generative AI models.

Before an AI model can be used, developers need to deploy it. Instead of having to tinker with the nuts and bolts of that process, NVIDIA NIMs simplify deployment by automatically containerizing AI models and optimizing them for NVIDIA hardware.

It’s like the difference between buying, chopping, and assembling all the ingredients for a meal versus popping a ready-made meal in the oven.
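To give a feel for what the “ready-made meal” looks like from a developer’s side, here is a minimal Python sketch of sending a prompt to a model that has already been deployed as a container. It assumes the container exposes an OpenAI-style chat completions route on a local port. The URL, the model name, and the helper names `build_chat_request` and `ask` are illustrative assumptions, not details from the keynote.

```python
import json
import urllib.request

# Assumptions for illustration only: a model container is already running
# locally and serves an OpenAI-style chat completions route at this address.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL_NAME = "meta/llama3-8b-instruct"  # hypothetical model identifier


def build_chat_request(prompt, url=NIM_URL, model=MODEL_NAME, max_tokens=128):
    """Build (but do not send) a POST request carrying a single user message."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt and return the reply text. Needs a live endpoint."""
    with urllib.request.urlopen(build_chat_request(prompt)) as response:
        body = json.load(response)
    # Assumes the response follows the OpenAI-style "choices" layout.
    return body["choices"][0]["message"]["content"]
```

With a container running, `ask("Summarize the Computex keynote in one sentence.")` would return the model’s reply as a string. The developer only writes the request, because the packaging and hardware tuning have already happened inside the container.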

Huang announced that the Llama 3 NIM is now available as a free download for developers to try out. Huang also unveiled NVIDIA ACE NIMs, which include a Digital Human model capable of generating AI human models with lifelike skin.

You can watch the full keynote address to see more details on RTX-powered AI PCs and laptops, NVIDIA’s Isaac ROS 3.0 robotics platform, and how factories are using NVIDIA’s tech to create digital twins of their factories to train robots.