AI Overviews, the next evolution of Search Generative Experience, will roll out in the U.S. this week and in more countries soon, Google announced at the Shoreline Amphitheatre in Mountain View, CA. Google showed several other changes coming to Google Cloud, Gemini, Workspace and more, including AI actions and summarization that can work across apps, opening up some interesting options for small businesses.

Search will include AI Overviews

AI Overviews is the expansion of Google’s Search Generative Experience (SGE), the AI-generated answers that appear at the top of Google searches. You may have seen SGE in action already, as select U.S. users have been able to try it since last October. SGE can generate images or text. AI Overviews adds AI-generated information to the top of any Google Search results page.

With AI Overviews, “Google does the work for you. Instead of piecing together all the information yourself, you can ask your questions” and “get an answer instantly,” said Liz Reid, Google’s vice president of Search.

By the end of the year, AI Overviews will reach over a billion people, Reid said. Google wants to be able to answer “ten questions in one,” linking tasks together so that the AI can make accurate connections between pieces of information. This is possible through multi-step reasoning. For example, someone could ask not only for the best yoga studios in the area, but also for the distance between the studios and their home and for the studios’ introductory offers. All of this information will be listed in convenient columns at the top of the Search results.

Soon, AI Overviews will also be able to answer questions about videos provided to it.

These multi-step reasoning and video capabilities are rolling out in “the coming weeks” in the U.S. and will be available in Search Labs first.

Does AI Overviews actually make Google Search more useful? Google says it will carefully label which images are AI generated and which come from the web, but AI Overviews may dilute Search’s usefulness if the AI answers prove incorrect, irrelevant or misleading.

Gemini 1.5 Pro gets some upgrades, including a 2 million token context window for select users

Google’s large language model Gemini 1.5 Pro is getting quality improvements and a new sibling, Gemini 1.5 Flash. New features for developers in the Gemini API include video frame extraction, parallel function calling and context caching. Native video frame extraction and parallel function calling are available now. Context caching is expected to arrive in June.
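Parallel function calling means a model can request several tool calls in a single turn, which the developer’s code then runs and returns together. The following is a minimal Python sketch of that client-side step, assuming a simplified, hypothetical response format (a list of dicts with `name` and `args`) and hypothetical tools `get_weather` and `get_local_time`. It does not use the actual Gemini API SDK.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical local tools the model may ask for; names are illustrative only.
def get_weather(city: str) -> dict:
    return {"city": city, "forecast": "sunny"}

def get_local_time(city: str) -> dict:
    return {"city": city, "time": "09:00"}

TOOLS = {"get_weather": get_weather, "get_local_time": get_local_time}

def run_parallel_calls(calls: list) -> list:
    """Run every function call from one model turn concurrently.

    `calls` is a simplified, hypothetical stand-in for the function-call
    parts a model returns: each item has a "name" and an "args" dict.
    Results are returned in the same order as the calls.
    """
    def run(call: dict) -> dict:
        fn = TOOLS[call["name"]]
        return {"name": call["name"], "response": fn(**call["args"])}

    # pool.map preserves input order, so results line up with the calls.
    with ThreadPoolExecutor() as pool:
        return list(pool.map(run, calls))

# One model turn requesting two independent tools at once.
model_calls = [
    {"name": "get_weather", "args": {"city": "Mountain View"}},
    {"name": "get_local_time", "args": {"city": "Mountain View"}},
]
results = run_parallel_calls(model_calls)
print(results)
```

Because the two lookups do not depend on each other, running them concurrently and sending all results back in one reply saves a round trip compared with answering one call per turn.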

Available today globally, Gemini 1.5 Flash is a smaller model focused on responding quickly. Users of Gemini 1.5 Pro and Gemini 1.5 Flash will be able to give the AI up to 1 million tokens of information to analyze in its context window.

On top of that, Google is expanding Gemini 1.5 Pro’s context window to 2 million tokens for select Google Cloud customers. To get the larger context window, join the waitlist in Google AI Studio or Vertex AI.
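To put these window sizes in perspective, here is a rough Python estimate of whether a given amount of text fits in a 1 million or 2 million token context window. It assumes about four characters per token for English text, a common rule of thumb rather than an exact figure; real counts depend on the model’s tokenizer, and the window labels are illustrative.

```python
# Rule-of-thumb assumption: roughly 4 characters per token for English text.
CHARS_PER_TOKEN = 4

# Context window sizes mentioned in the announcement, in tokens.
CONTEXT_WINDOWS = {
    "1M window": 1_000_000,
    "2M window": 2_000_000,
}

def estimate_tokens(num_chars: int) -> int:
    # Ceiling division so a partial token still counts as one token.
    return -(-num_chars // CHARS_PER_TOKEN)

def fits(num_chars: int, window: int) -> bool:
    return estimate_tokens(num_chars) <= window

# A 6-million-character corpus is about 1.5 million tokens by this estimate.
corpus_chars = 6_000_000
print(estimate_tokens(corpus_chars))  # 1500000
for label, window in CONTEXT_WINDOWS.items():
    print(label, fits(corpus_chars, window))
```

Under this estimate, such a corpus would overflow the 1 million token window but fit within the 2 million token one.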

The ultimate goal is “infinite context,” Google CEO Sundar Pichai said.

Gemma 2 comes in a 27B parameter size

Google’s small language model, Gemma, will get a major overhaul when Gemma 2 arrives in June. Gemma 2 will include a 27B parameter model, in response to developers requesting a bigger Gemma model that is still small enough to fit inside compact projects. Gemma 2 can run efficiently on a single TPU host in Vertex AI, Google said.

Plus, Google rolled out PaliGemma, a vision-language model for tasks like image captioning and answering questions about images. PaliGemma is available now in Vertex AI.

Gemini summarization and other features are coming to Google Workspace

Google Workspace is getting several AI enhancements, which are enabled by Gemini 1.5’s long context window and multimodality. For example, users can ask Gemini to summarize long email threads or Google Meet calls. Gemini will be available in the Workspace side panel on desktop next month for businesses and consumers who use the Gemini for Workspace add-ons or the Google One AI Premium plan. The Gemini side panel is already available in Workspace Labs and for Gemini for Workspace Alpha users.

Gemini for Workspace customers and Google One AI Premium subscribers will be able to use some new Gemini features going forward, starting with Labs users this month and becoming generally available in July:

- Summarize email threads.

- Run a Q&A on your email inbox.

- Use longer suggested replies in Smart Reply that draw contextual information from email threads.

Gemini 1.5 can make connections between apps in Workspace, such as Gmail and Docs. Aparna Pappu, Google’s vice president and general manager for Workspace, demonstrated this by showing how small business owners could use Gemini 1.5 to organize and track their travel receipts in a spreadsheet based on an email. This feature, Data Q&A, is rolling out to Labs users in July.

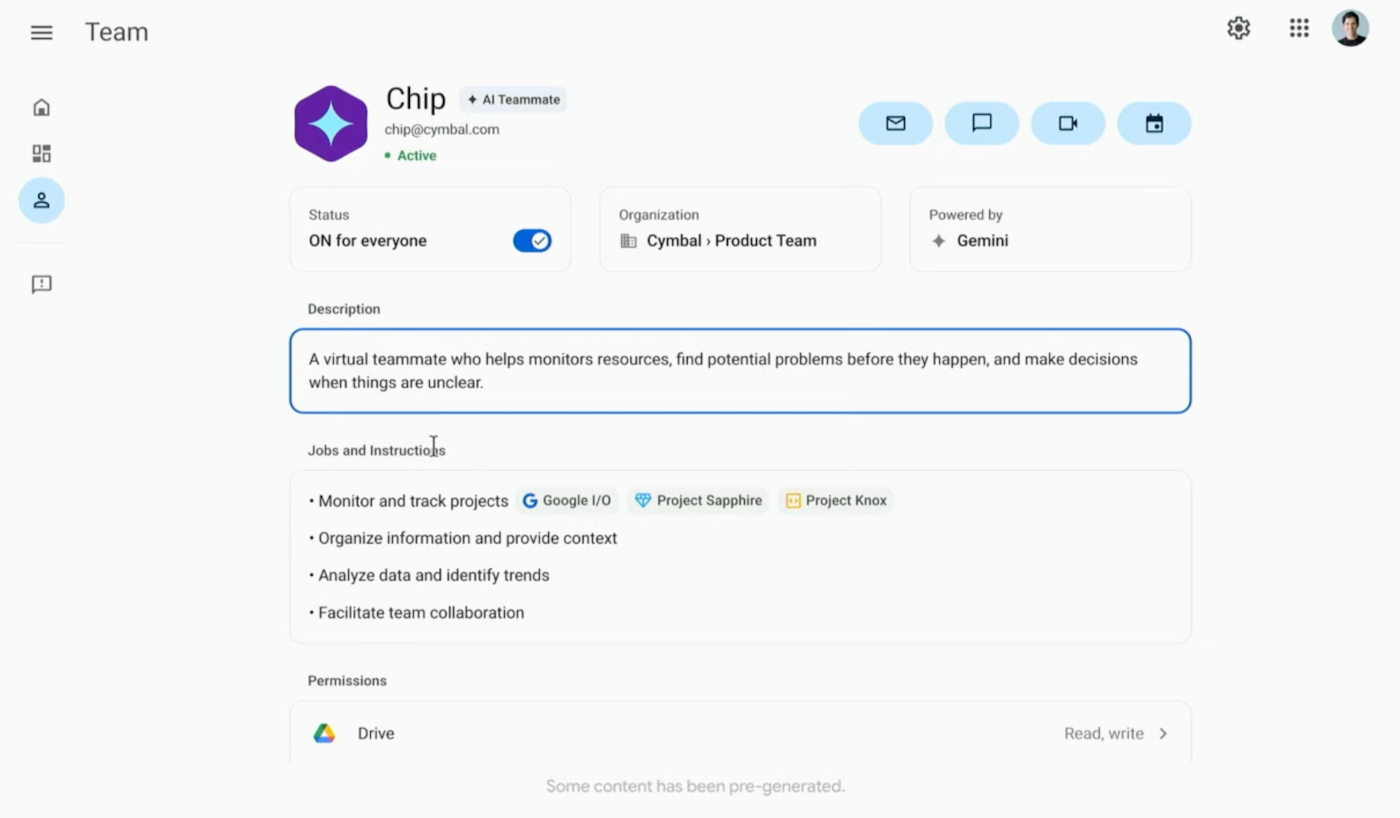

Next, Google wants to add an AI Teammate to Workspace. The AI Teammate will act like an AI coworker, with an identity, a Workspace account and an objective. (But without the need for PTO.) Employees can ask the assistant questions about work, and the assistant will hold the “collective memory” of the team it works with.

Google hasn’t announced a release date for AI Teammate yet. The company plans to add third-party capabilities to it going forward. This is just speculation, but AI Teammate might be especially useful for businesses if it connects to CRM applications.

Voice and video capabilities are coming to the Gemini app

Speaking and video capabilities are coming to the Gemini app later this year. Gemini will be able to “see” through your camera and respond in real time.

Users will be able to create “Gems,” customized agents that do things like act as personal writing coaches. The idea is to make Gemini “a true assistant,” which can, for example, plan a trip. Gems are coming to Gemini Advanced this summer.

The addition of multimodality to Gemini comes at an interesting time, just after OpenAI demonstrated ChatGPT with GPT-4o earlier this week. Both demonstrations showed very natural-sounding conversation. OpenAI’s AI voice responded to interruptions, but misread or misinterpreted some situations.

SEE: OpenAI showed off how the latest iteration of the GPT-4 model can respond to live video.

Imagen 3 gets better at rendering text

Google announced Imagen 3, the next evolution of its image generation AI. Imagen 3 is intended to be better at rendering text, which has been a major weakness for AI image generators in the past. Select creators can try Imagen 3 in ImageFX at Google Labs today, and Imagen 3 is coming soon for developers in Vertex AI.

Google and DeepMind reveal other creative AI tools

Another creative AI product Google announced was Veo, its next-generation generative video model from DeepMind. Veo created an impressive video of a car driving through a tunnel and onto a city street. Select creators can use Veo in VideoFX, an experimental tool found at labs.google.

Other creative types might want to use the Music AI Sandbox, a set of generative AI tools for making music. Neither public nor private release dates for Music AI Sandbox have been announced.

Sixth-generation Trillium TPUs boost the power of Google Cloud data centers

Pichai introduced Google’s sixth-generation Google Cloud TPUs, called Trillium. Google claims the TPUs deliver a 4.7X improvement in peak compute performance per chip over the previous generation. Trillium TPUs are meant to boost performance in Google Cloud data centers and compete with NVIDIA’s AI accelerators. Time on Trillium will be available to Google Cloud customers in late 2024. Plus, NVIDIA’s Blackwell GPUs will be available in Google Cloud starting in 2025.

TechRepublic lined Google I/O remotely.