A threat actor is using a PowerShell script that was likely created with the help of an artificial intelligence system such as OpenAI’s ChatGPT, Google’s Gemini, or Microsoft’s Copilot.

The adversary used the script in an email campaign in March that targeted dozens of organizations in Germany to deliver the Rhadamanthys information stealer.

AI-based PowerShell deploys infostealer

Researchers at cybersecurity company Proofpoint attributed the attack to a threat actor tracked as TA547, believed to be an initial access broker (IAB).

TA547, also known as Scully Spider, has been active since at least 2017, delivering a variety of malware for Windows (ZLoader/Terdot, Gootkit, Ursnif, Corebot, Panda Banker, Atmos) and Android (Mazar Bot, Red Alert) systems.

Recently, the threat actor started using the Rhadamanthys modular stealer, which constantly expands its data collection capabilities (clipboard, browser, cookies).

The info stealer has been distributed since September 2022 to multiple cybercrime groups under the malware-as-a-service (MaaS) model.

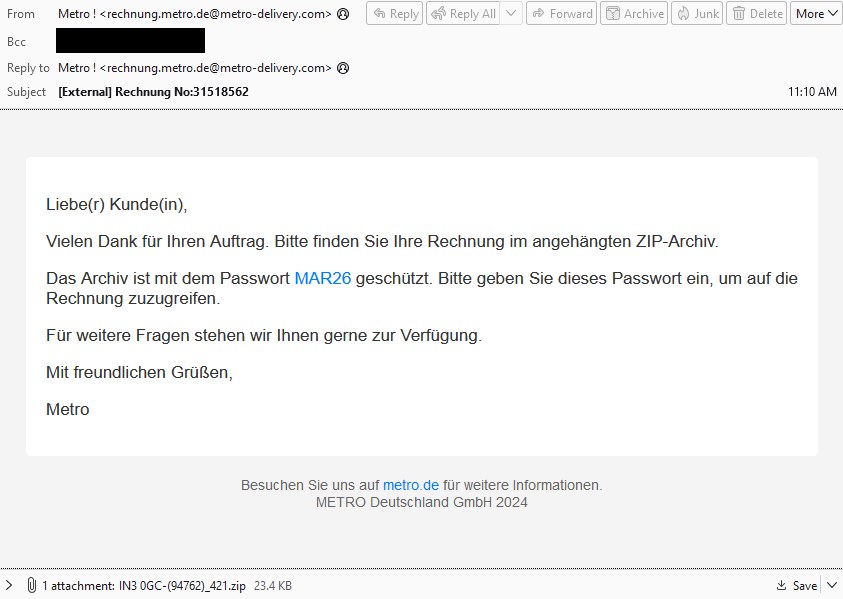

According to Proofpoint researchers, TA547 impersonated the German cash-and-carry brand Metro in a recent email campaign, using invoices as a lure for “dozens of organizations across various industries in Germany.”

source: Proofpoint

The messages included a ZIP archive protected with the password ‘MAR26’, which contained a malicious shortcut file (.LNK). Opening the shortcut file triggered PowerShell to run a remote script.

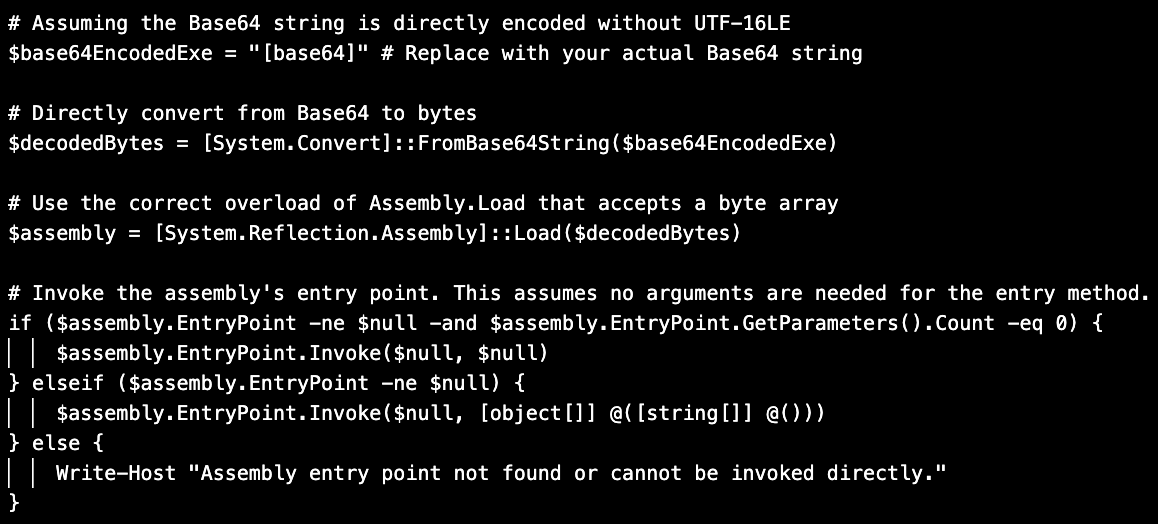

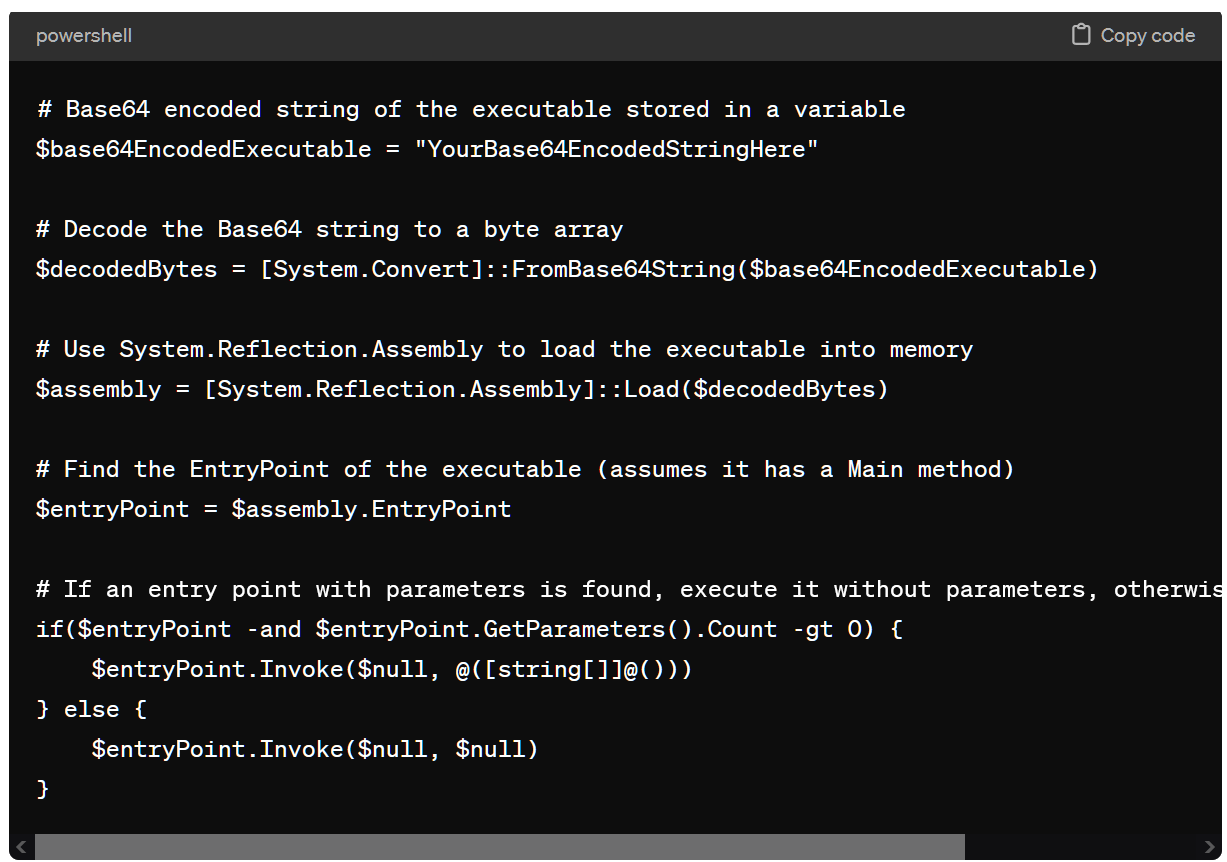

“This PowerShell script decoded the Base64-encoded Rhadamanthys executable file stored in a variable and loaded it as an assembly into memory and then executed the entry point of the assembly” – Proofpoint

The researchers explain that this method allowed the malicious code to be executed in memory without touching the disk.
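For defenders, the behavior Proofpoint describes corresponds to a handful of well-known .NET calls that can be searched for in captured scripts. The following is a minimal Python sketch, not Proofpoint's detection logic. The article does not reproduce the actual script, so the patterns below (`FromBase64String`, `Reflection.Assembly]::Load`, `EntryPoint.Invoke`) and the function name `find_loader_indicators` are illustrative assumptions about how such a loader is commonly written.

```python
import re

# Assumed indicator patterns; the real TA547 script is not shown in the article.
INDICATORS = {
    "base64_decode": re.compile(r"FromBase64String", re.IGNORECASE),
    "assembly_load": re.compile(r"Reflection\.Assembly\]?::Load", re.IGNORECASE),
    "entry_point_invoke": re.compile(r"EntryPoint\.Invoke", re.IGNORECASE),
}


def find_loader_indicators(script_text: str) -> list[str]:
    """Return the names of the indicator patterns found in a PowerShell script."""
    return [name for name, pattern in INDICATORS.items() if pattern.search(script_text)]


if __name__ == "__main__":
    # Harmless stand-in text that only mimics the shape of a reflective loader.
    sample = (
        "$bytes = [System.Convert]::FromBase64String($payload)\n"
        "$asm = [System.Reflection.Assembly]::Load($bytes)\n"
        "$asm.EntryPoint.Invoke($null, $null)\n"
    )
    print(find_loader_indicators(sample))
```

A script matching all three patterns is only a candidate for closer review. Legitimate administration scripts also decode Base64 and load assemblies, and obfuscated loaders can evade simple string matching.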

Analyzing the PowerShell script that loaded Rhadamanthys, the researchers noticed that it included a pound/hash sign (#) followed by specific comments for each component, which are uncommon in human-created code.

source: Proofpoint

The researchers say that these characteristics are typical of code originating from generative AI solutions like ChatGPT, Gemini, or Copilot.
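The commenting pattern the researchers describe can be turned into a rough triage signal by measuring how many code lines are directly preceded by a `#` comment. This is a minimal sketch under stated assumptions: the function name `comment_coverage` is hypothetical, PowerShell block comments (`<# ... #>`) are not handled, and no particular threshold is implied by the source.

```python
def comment_coverage(script_text: str) -> float:
    """Fraction of code lines that are directly preceded by a '#' comment line."""
    # Ignore blank lines so a comment still counts when a gap separates it from code.
    lines = [line.strip() for line in script_text.splitlines() if line.strip()]
    code_lines = 0
    commented = 0
    previous_was_comment = False
    for line in lines:
        if line.startswith("#"):
            previous_was_comment = True
            continue
        code_lines += 1
        if previous_was_comment:
            commented += 1
        previous_was_comment = False
    return commented / code_lines if code_lines else 0.0


if __name__ == "__main__":
    sample = "# Decode the data\n$a = 1\n# Load it\n$b = 2\n$c = 3\n"
    print(round(comment_coverage(sample), 2))
```

A high ratio is at best a weak hint. Careful human authors also comment every step, so the value should be weighed alongside other evidence rather than treated as proof of AI authorship.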

While they cannot be absolutely certain that the PowerShell code came from a large language model (LLM) solution, the researchers say that the script content suggests the possibility of TA547 using generative AI for writing or rewriting the PowerShell script.

BleepingComputer used ChatGPT-4 to create a similar PowerShell script, and the output code looked like the one seen by Proofpoint, including variable names and comments, further indicating that AI was likely used to generate the script.

source: BleepingComputer

Another theory is that they copied it from a source that relied on generative AI for coding.

AI for malicious actions

Since OpenAI released ChatGPT in late 2022, financially motivated threat actors have been leveraging AI to create customized or localized phishing emails, run network scans to identify vulnerabilities on hosts or networks, or build highly credible phishing pages.

Some nation-state actors associated with China, Iran, and Russia have also turned to generative AI to improve productivity when researching targets, cybersecurity tools, and methods to establish persistence and evade detection, as well as for scripting support.

In mid-February, OpenAI announced that it blocked accounts associated with state-sponsored hacker groups Charcoal Typhoon, Salmon Typhoon (China), Crimson Sandstorm (Iran), Emerald Sleet (North Korea), and Forest Blizzard (Russia) abusing ChatGPT for malicious purposes.

As most large language models attempt to restrict output if it could be used for malware or malicious behavior, threat actors have launched their own AI chat platforms for cybercriminals.